Problem Capturing right Host Primary Disk with INTEL VROC RAID1

-

-

I apologise for not getting in touch for so long.

But I was able to find startup logs with Ubuntu live and my raid is recognised directly.

Hope the logs help.

-

Hi everyone, reading the investigations already done gives me the feeling you got close to a fix for this.

I got the experimental vroc file from the download link earlier in this topic.

I have exactly the same issue: Intel VROC / Optane with 2 NVMe drives in raid1.

I can see the individual NVMe drives but not the raid array/volume.

Is a fix for this to be expected any time soon?

-

@rdfeij For the record, what computer hardware do you have?

-

@george1421

SuperMicro X13SAE-F server board with Intel Optane / VROC in raid1 mode.

2x NVME in raid1.

-

With me, yes: in the BIOS a raid1 exists over the 2 NVMe drives.

mdraid=true is enabled.

md0 is indeed empty.

lsblk only shows content on the 2 NVMe drives but not on md0.

I hope this will be fixed soon, otherwise we are forced to move to another (WindowsPE based?) imaging platform, since we get more and more VROC/Optane servers/workstations with raid enabled (industrial/security usage).

I’m willing to help out to get this solved.

-


@rdfeij said in Problem Capturing right Host Primary Disk with INTEL VROC RAID1:

@george1421

SuperMicro X13SAE-F server board with Intel Optane / VROC in raid1 mode.

2x NVME in raid1.

In addition:

the NVMe raid controller id is 8086:a77f ( https://linux-hardware.org/?id=pci:8086-a77f-8086-0000 )

0000:00:0e.0 RAID bus controller [0104]: Intel Corporation Volume Management Device NVMe RAID Controller Intel Corporation [8086:a77f]

RST controller, I think it is involved since all other SATA controllers are disabled in the BIOS:

0000:00:1a.0 System peripheral [0880]: Intel Corporation RST VMD Managed Controller [8086:09ab]

And the NVMe drives (but not involved, I think):

10000:e1:00.0 Non-Volatile memory controller [0108]: Sandisk Corp WD Black SN770 NVMe SSD [15b7:5017] (rev 01)

10000:e2:00.0 Non-Volatile memory controller [0108]: Sandisk Corp WD Black SN770 NVMe SSD [15b7:5017] (rev 01)

-

@rdfeij Well, the issue we have is that none of the developers have access to one of these new computers, so it's hard to solve.

Also, I have a project for a customer where we were loading debian on a Dell rack mounted precision workstation. We created raid 1 with the firmware, but debian 12 would not see the mirrored device, only the individual disks. So this may be a limitation of the linux kernel itself. If that is the case there is nothing FOG can do. I say that because the image that clones the hard drives is a custom version of linux. So if linux doesn't support these raid drives then we are kind of stuck.

I’m searching to see if I can find a laptop that has 2 internal nvme drives for testing, but no luck as of now.

-

@rdfeij said in Problem Capturing right Host Primary Disk with INTEL VROC RAID1:

Intel Corporation Volume Management Device NVMe RAID Controller Intel Corporation [8086:a77f]

FWIW the 8086:a77f is supported by the linux kernel, so if we assemble the md device it might work, but that is only a guess. It used to be that if the computer was in uefi mode, plus linux, plus raid-on mode, the drives couldn't be seen at all. At least we can see the drives now.

-

@george1421 Still tinkering.

As described here: https://www.intel.com/content/dam/support/us/en/documents/memory-and-storage/linux-intel-vroc-userguide-333915.pdf (from chapter 4), we need a raid container (in my case raid1 with 2 NVMe drives) and, within the container, a volume.

But how can I test this? Debug mode doesn't let me boot to FOG after tinkering.

-

@george1421 said in Problem Capturing right Host Primary Disk with INTEL VROC RAID1:

@rdfeij Well, the issue we have is that none of the developers have access to one of these new computers, so it's hard to solve.

Also, I have a project for a customer where we were loading debian on a Dell rack mounted precision workstation. We created raid 1 with the firmware, but debian 12 would not see the mirrored device, only the individual disks. So this may be a limitation of the linux kernel itself. If that is the case there is nothing FOG can do. I say that because the image that clones the hard drives is a custom version of linux. So if linux doesn't support these raid drives then we are kind of stuck.

I’m searching to see if I can find a laptop that has 2 internal nvme drives for testing, but no luck as of now.

I can give you ssh access if you want, my test box is online. But I have also made more progress:

I can't post it since I get a "spam detected" notice when submitting…

-

@george1421

I have it working. I created a postinit script with:

IMSM_NO_PLATFORM=1 mdadm --verbose --assemble --scan

rm /dev/md0

ln -s /dev/md126 /dev/md0

Although it recognizes md126, it still tries to do everything to md0; that's why the symlink is in.

Thanks to @Ceregon https://forums.fogproject.org/post/154181

Tested and working with SSD raid1, NVMe raid1, and resizable and non-resizable imaging.
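The symlink above hardcodes md126. A minimal sketch of picking the volume name from /proc/mdstat instead is shown here. `find_active_md` and `link_md0` are hypothetical helper names, not FOG functions, and the sketch assumes the IMSM container is listed as "inactive" while the raid1 volume is listed as "active", which is the usual mdstat layout but has not been checked on this hardware.

```shell
#!/bin/bash
# Sketch only: find_active_md is a hypothetical helper, not part of FOG.
# It prints the name of the first array marked "active" in an mdstat-style
# file. An IMSM container normally shows up as "inactive", so it is skipped.
find_active_md() {
    awk '$2 == ":" && $3 == "active" { print $1; exit }' "${1:-/proc/mdstat}"
}

# Point /dev/md0 at whichever volume was assembled (md126 in the post above).
link_md0() {
    local vol
    vol="$(find_active_md)"
    [ -n "$vol" ] || return 1
    rm -f /dev/md0
    ln -s "/dev/$vol" /dev/md0
}
```

In a postinit script, `link_md0` would be called right after the `mdadm --assemble --scan` line. If more than one active array exists, only the first one listed is used.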

It would be nice if there is a possibility to select postinit scripts per host(group).

This way there is no need for difficult extra scripting to define if correct hardware is in the system. -

@rdfeij OK good, you found a solution. I did find a Dell Precision 3560 laptop that has dual nvme drives. I was just about to begin testing when I saw your post.

Here are a few comments based on your previous post.

-

When in debug mode, for either a capture or a deploy, you can single-step through the imaging process by calling the master imaging script, called fog. At the debug cmd prompt just key in fog and the capture/deploy process will run in single-step mode. You will need to press enter at each breakpoint, but you can complete the entire imaging process this way.

The postinit script is the proper location to add the raid assembly. You have full access to the fog variables in the postinit script, so it's possible to use one of the other tag fields to signal when it should assemble the array. It may also be possible to use some other key hardware characteristic to identify this system, such as whether specific hardware exists or a specific smbios value exists.

I wrote a tutorial a long time ago that talked about imaging using the intel rst adapter: https://forums.fogproject.org/topic/7882/capture-deploy-to-target-computers-using-intel-rapid-storage-onboard-raid
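As a rough illustration of the hardware-based check, a postinit script could look for the VMD RAID controller ID reported earlier in this thread (8086:a77f) before assembling the array. This is a sketch under assumptions: `has_pci_device` is a hypothetical helper, `SYSFS_PCI` is an invented override used only for testing, and it assumes sysfs is mounted at the usual location inside the init.

```shell
#!/bin/bash
# Sketch only: has_pci_device is a hypothetical helper, not a FOG function.
# SYSFS_PCI can be overridden for testing; it defaults to the usual sysfs path.
has_pci_device() {
    local vendor="$1" device="$2" dir
    for dir in "${SYSFS_PCI:-/sys/bus/pci/devices}"/*; do
        [ -r "$dir/vendor" ] && [ -r "$dir/device" ] || continue
        if [ "$(cat "$dir/vendor")" = "0x$vendor" ] &&
           [ "$(cat "$dir/device")" = "0x$device" ]; then
            return 0
        fi
    done
    return 1
}

# 8086:a77f is the VMD NVMe RAID controller ID reported earlier in this thread.
# Other VROC platforms may use a different device ID.
if has_pci_device 8086 a77f; then
    IMSM_NO_PLATFORM=1 mdadm --verbose --assemble --scan
fi
```

On machines without that controller the script does nothing, so the same postinit script could stay in place for all hosts.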

-

-

@rdfeij Well I have a whole evening into trying to rebuild the fog inits (virtual hard drive)…

On my test system I cannot get fos to see the raid array completely. When I tried to manually create the array it said the disks are already part of an array. Then I went down the rabbit hole, so to speak. The version of mdadm in FOS linux is 4.2. The version that intel deploys with their already built kernel drivers for redhat is 4.3. mdadm 4.2 is from 2021, 4.3 is from 2024. My thinking is that there must be updated code in mdadm to see the new vroc kit.

Technical stuff you don't care about, but documenting it here:

buildroot 2024.02.1 has mdadm 4.2 package

buildroot 2024.05.1 has mdadm 4.3 package (I copied this package to 2024.02.1 and it built ok)

But now I have an issue with partclone: it's failing to compile on an unsafe path in an include for ncurses. I see what the developer of partclone did, but buildroot 2024.02.1 is not building the needed files…

I’m not even sure if this is the right path. I’ll try to patch the current init if I can’t create the inits with buildroot 2024.05.1

-

@george1421 Let me know how that goes. I remember that I had to patch something on buildroot for partclone and ncurses. A new patch may be needed if they made changes for 2024.05.1.

I can give it a try as well.

Are you trying to build the newest version of partclone?

-

@rodluz I got everything to compile, but it was a pita.

I did compile the 0.3.32 version of partclone and 4.3 of mdadm on buildroot 2024.05.1. The new compiler complains when a package developer references files outside of the buildroot tree. Partclone referenced /lib/include/ncursesw (the multibyte version of ncurses). Buildroot did not build the needed files in the target directory. So, to keep compiling, I copied the files it was looking for from my linux mint host system into the output target file path, then manually updated the references in the partclone package to point to the output target. Not a solution at all, but it got past the error. Partimage did the same thing, but it references an include slang directory. That directory did exist in the output target directory, so I just updated the package references to that location and it compiled. In the end the updated mdadm file did not solve the vroc issue. Next I'm going to boot a linux live distro and see if it can see the vroc drive; if yes, then I want to see what kernel drivers are there vs fos.
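One way to start the live distro vs fos comparison is to check a few storage-related kernel options on each system. This is a sketch only: `show_md_config` is a hypothetical helper, the option list (CONFIG_VMD, CONFIG_BLK_DEV_MD, CONFIG_MD_RAID1) is a guess at what plausibly matters here, and /proc/config.gz is only present when the kernel was built with CONFIG_IKCONFIG_PROC.

```shell
#!/bin/bash
# Sketch only: show_md_config is a hypothetical helper. It prints the lines
# for a few storage-related options from a plain-text kernel config file,
# including options that are explicitly "not set".
show_md_config() {
    grep -E '^(# )?CONFIG_(VMD|BLK_DEV_MD|MD_RAID1)[ =]' "$1"
}

# Assumes the running kernel exposes /proc/config.gz (CONFIG_IKCONFIG_PROC),
# which is not guaranteed on every distro or on FOS.
if [ -r /proc/config.gz ]; then
    zcat /proc/config.gz > /tmp/running-kernel.config
    show_md_config /tmp/running-kernel.config || echo "no matching options found"
fi
```

Running the same function on both systems and comparing the output shows whether an option is built in (`=y`), a module (`=m`), or not set. On distros without /proc/config.gz, the config is often available as a file under /boot instead and can be passed to `show_md_config` directly.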

-

@george1421

In addition to this topic

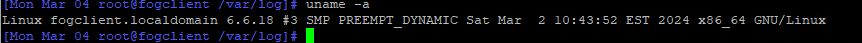

I have it working with FOG version 1.5.10.41 (dev version)

Kernel 6.6.34

Init 2024.02.3

Stable (also with latest kernel/init) is not working.

-


@george1421

to be adding some to this topic since our system was broken again with the same problems.I updated recently to newer fog version (1.5.10.1751 and today to 1.5.10.1754) and that seems to break it again.

I noticed that updating also starts using latest Initrd. (2025.02.4)Things are broken with latest Initrd. I set it back to 2024.02.9 AMD/Intel 64 bit and it is working again.

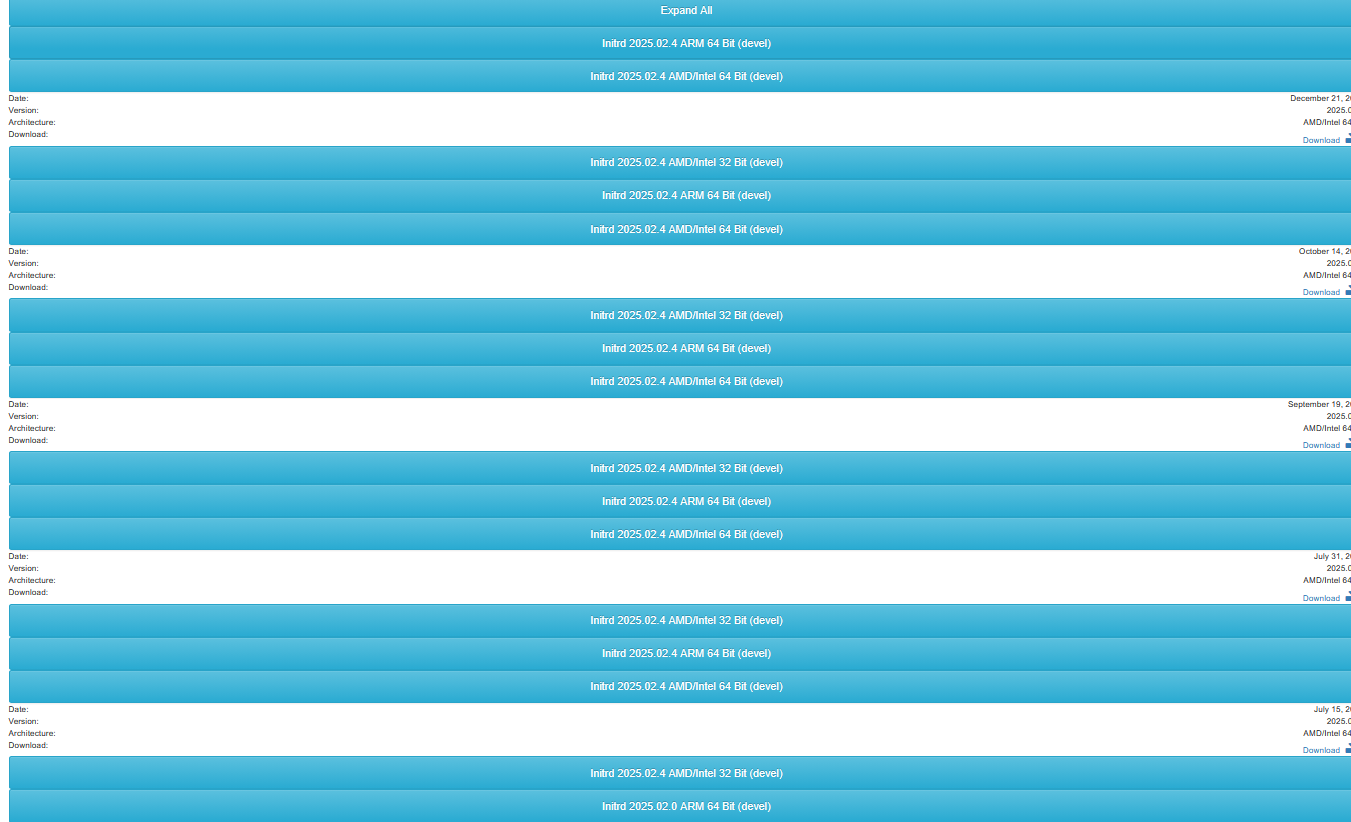

Then i updated Initrd to 202502.0 AMD/Intel 64 bit and it is still working.Strange thing is that in webinterface the latest version is shown multiple times.

When I open and download the upper one during pxe boot I see version 20251221 loaded. That one is not working.Is there anywhere to analyse what the differences are?

I also want to add that we were focussing on nvme. But VROC doesn’t seem to work with nvme in raid.

There are sata ssd/nvme in those slots.