@george1421

I want to add something to this topic, since our system broke again with the same problems.

I recently updated to a newer FOG version (1.5.10.1751, and today to 1.5.10.1754), and that seems to have broken it again.

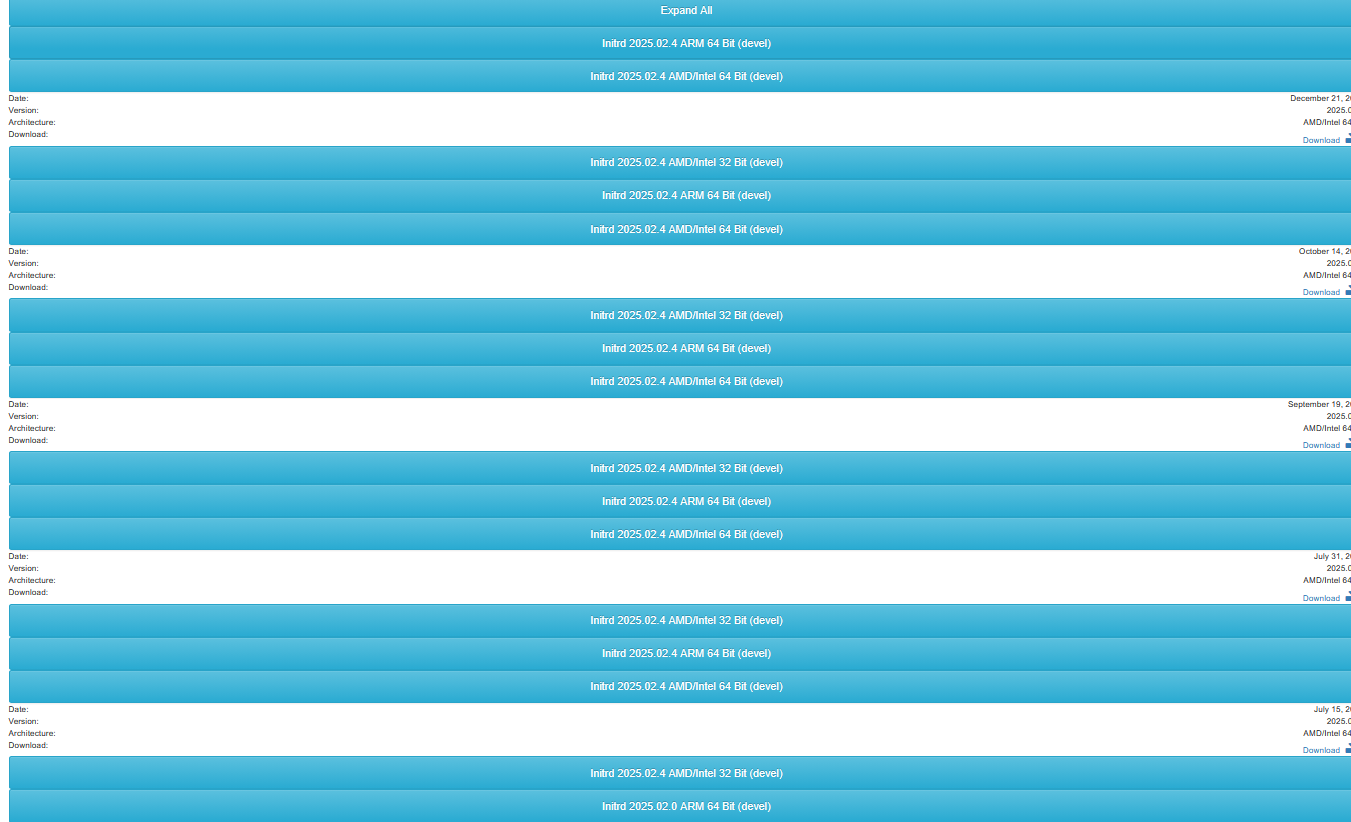

I noticed that updating also switches to the latest initrd (2025.02.4).

Things are broken with the latest initrd. I set it back to 2024.02.9 AMD/Intel 64 bit and it is working again.

Then I updated the initrd to 202502.0 AMD/Intel 64 bit and it is still working.

The strange thing is that the web interface shows the latest version multiple times.

When I open and download the topmost one, I see version 20251221 loaded during PXE boot. That one is not working.

Is there any way to analyse what the differences are?

I also want to add that we were focussing on NVMe, but VROC doesn’t seem to work with NVMe in RAID.

There are SATA SSD/NVMe drives in those slots.