Problem Capturing right Host Primary Disk with INTEL VROC RAID1

-

@Ceregon said in Problem Capturing right Host Primary Disk with INTEL VROC RAID1:

Also, it seemed that one partition of my disk (40 GB / NTFS / 64K cluster size) gets borked at capture. Partclone recognized it as raw. After the deployment I had to format that partition again.

After a few tests I can say that a freshly formatted partition with those parameters is borked on the source host directly after capturing.

The deployed image also has the borked partition. Maybe the cluster size is the problem here? Sadly, we need it.

So I guess we will have to manually format the partition and set the correct NTFS permissions.
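If you want to confirm which cluster size is actually recorded on that partition, here is a minimal read-only sketch. It assumes a POSIX shell with `dd` and `od` on the FOS debug console, and it only reads the NTFS boot sector fields (OEM ID at offset 3, bytes per sector at offset 11, sectors per cluster at offset 13). The function name `ntfs_cluster_size` and the device in the usage line are hypothetical.

```shell
#!/bin/sh
# Print the cluster size (in bytes) recorded in an NTFS boot sector.
# Read-only: it only reads the first 14 bytes of the device or image file.
ntfs_cluster_size() {
    dev=$1
    # Bytes 3..6 of an NTFS boot sector hold the OEM ID "NTFS" (followed by spaces).
    oem=$(dd if="$dev" bs=1 skip=3 count=4 2>/dev/null)
    if [ "$oem" != "NTFS" ]; then
        echo "$dev: no NTFS boot sector signature" >&2
        return 1
    fi
    # Offsets 11-12: bytes per sector (little-endian); offset 13: sectors per cluster.
    set -- $(od -An -tu1 -j11 -N3 "$dev")
    if [ "$#" -ne 3 ]; then
        echo "$dev: boot sector too short" >&2
        return 1
    fi
    bytes_per_sector=$(( $1 + 256 * $2 ))
    sectors_per_cluster=$3
    # Values above 128 use a different (exponent) encoding for very large clusters.
    if [ "$sectors_per_cluster" -gt 128 ]; then
        echo "$dev: encoded sectors-per-cluster value $sectors_per_cluster not decoded here" >&2
        return 1
    fi
    echo $(( bytes_per_sector * sectors_per_cluster ))
}
```

Usage: `ntfs_cluster_size /dev/sda3` (partition number hypothetical). A 64K-cluster volume should print 65536, which lets you compare the source partition before and after capture.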

-

I tried the same.

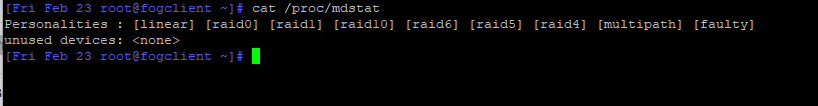

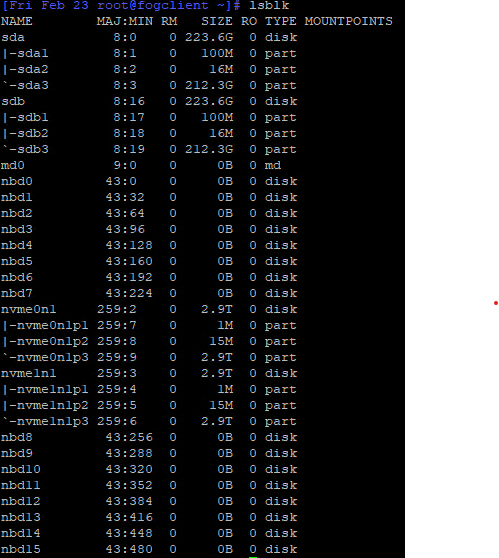

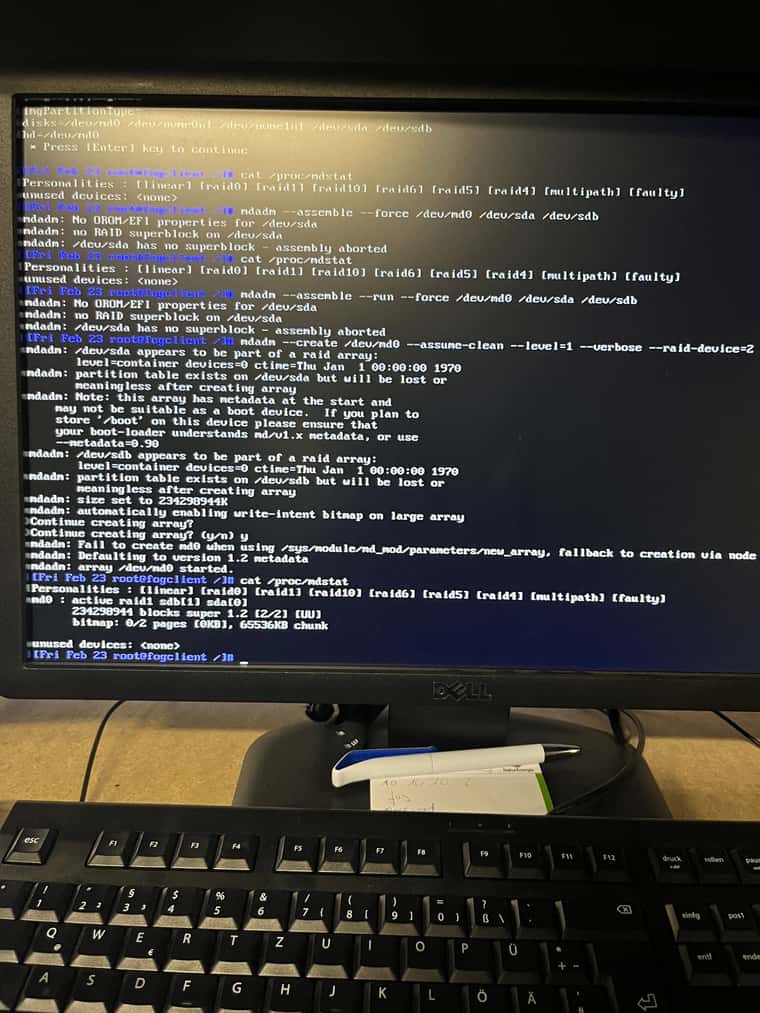

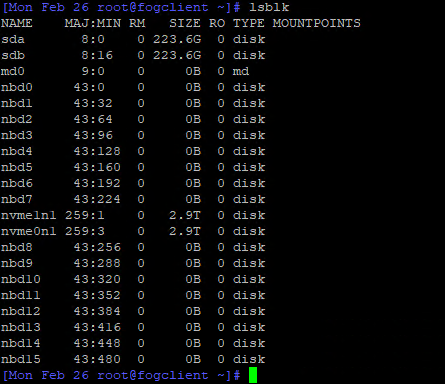

We have one global Win10 Image “Single Disk Resizable” and I want to deploy the Image to the Intel VROC Raid1.In the Debug mode Cat /proc/mdstat ist empty.

But with lsblk I find also the md0

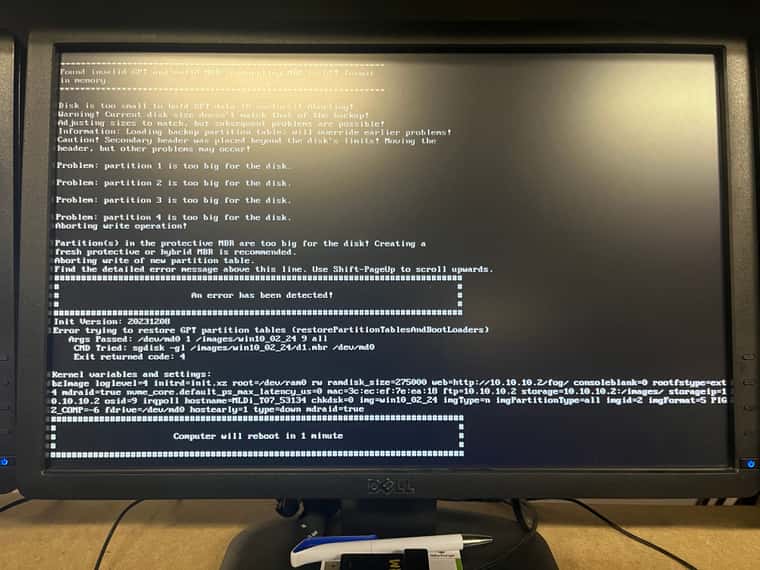

when I upload the image I get the following error.

I don’t think Fos recognises the raid correctly.

Any ideas here? -

@nils98 From the `cat` command it doesn’t look like your array was assembled. From a previous post, it looks like you found a command sequence with mdadm to assemble that array.

Schedule a debug deploy (tick the debug checkbox before hitting the submit button), then PXE boot the target computer; that will put you into debug mode. Now type in the command sequence to assemble the array. Once you verify the array is assembled, key in

`fog` to begin the image deployment sequence. You will have to press Enter at each breakpoint. This gives you a chance to see and possibly trap any errors during deployment. If you find an error, press Ctrl-C to exit the deployment sequence and fix the error, then restart the deployment sequence by entering `fog` once again. Once you get all the way through the deployment sequence and the target system reboots into Windows, you have established a valid deployment path.

Now that you have established the commands needed to build the array before deployment, you need to place those commands into a pre-deployment script so that the FOS engine executes them every time a deployment happens. We can work on the script to have it execute only under certain conditions, but first let's see if you can get one good manual deployment, then one good automatic deployment, and then the final solution.
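Once the manual commands work, the pre-deployment step could look roughly like this. It is a minimal sketch, assuming FOG's postinit hook (on FOG 1.5.x installs typically /images/dev/postinitscripts/fog.postinit; check the path on your server), that mdadm is available in FOS, and that `mdadm --assemble --scan` is enough to bring up the array already configured in the BIOS. If your working command sequence differs, substitute it. The helper name `md_has_active_array` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical pre-deployment snippet for a FOS postinit script: bring up an
# existing Intel VROC (IMSM) RAID1 before imaging starts. Assumes mdadm is
# present in FOS and the array was already created in the BIOS/UEFI.
# The postinit script is sourced, so this snippet deliberately avoids `exit`.

# Return 0 if the mdstat-format file (default /proc/mdstat) lists an active md array.
md_has_active_array() {
    grep -Eq '^md[^ ]* : active' "${1:-/proc/mdstat}"
}

# Only act when the md driver and mdadm are available.
if [ -r /proc/mdstat ] && command -v mdadm >/dev/null 2>&1; then
    if ! md_has_active_array /proc/mdstat; then
        echo "No active md array found, trying: mdadm --assemble --scan"
        mdadm --assemble --scan
    fi
    # Show the result so it is visible on the console during a debug deploy.
    cat /proc/mdstat
fi
```

The check on /proc/mdstat keeps the script from re-assembling an array that the kernel or an earlier step already brought up; an IMSM container on its own shows as `inactive` and is deliberately not counted.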

-

@george1421 I have now tried to create a new RAID via the Fog. But unfortunately it doesn’t work properly. It creates something under /dev/md0 but Fog cannot find it, even with lsblk /dev/md0 no longer appears.

In addition, I can no longer see the RAID in the BIOS. I can then create a new one here, which Fog sees under “lsblk”, but again it can’t do anything with it.I found a few more commands in the Intel document, but all of them only produce errors.

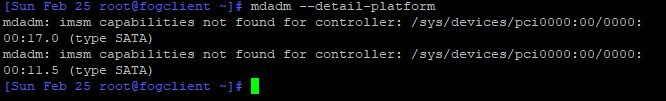

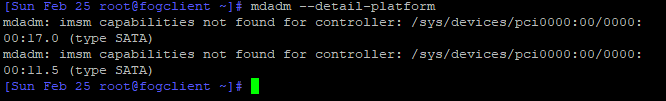

“mdadm --detail-platform” for example.

Is there a way I can load the drivers from the Vroc into the Fog?

I’m not that experienced with this, but I think it would solve some of my problems. -

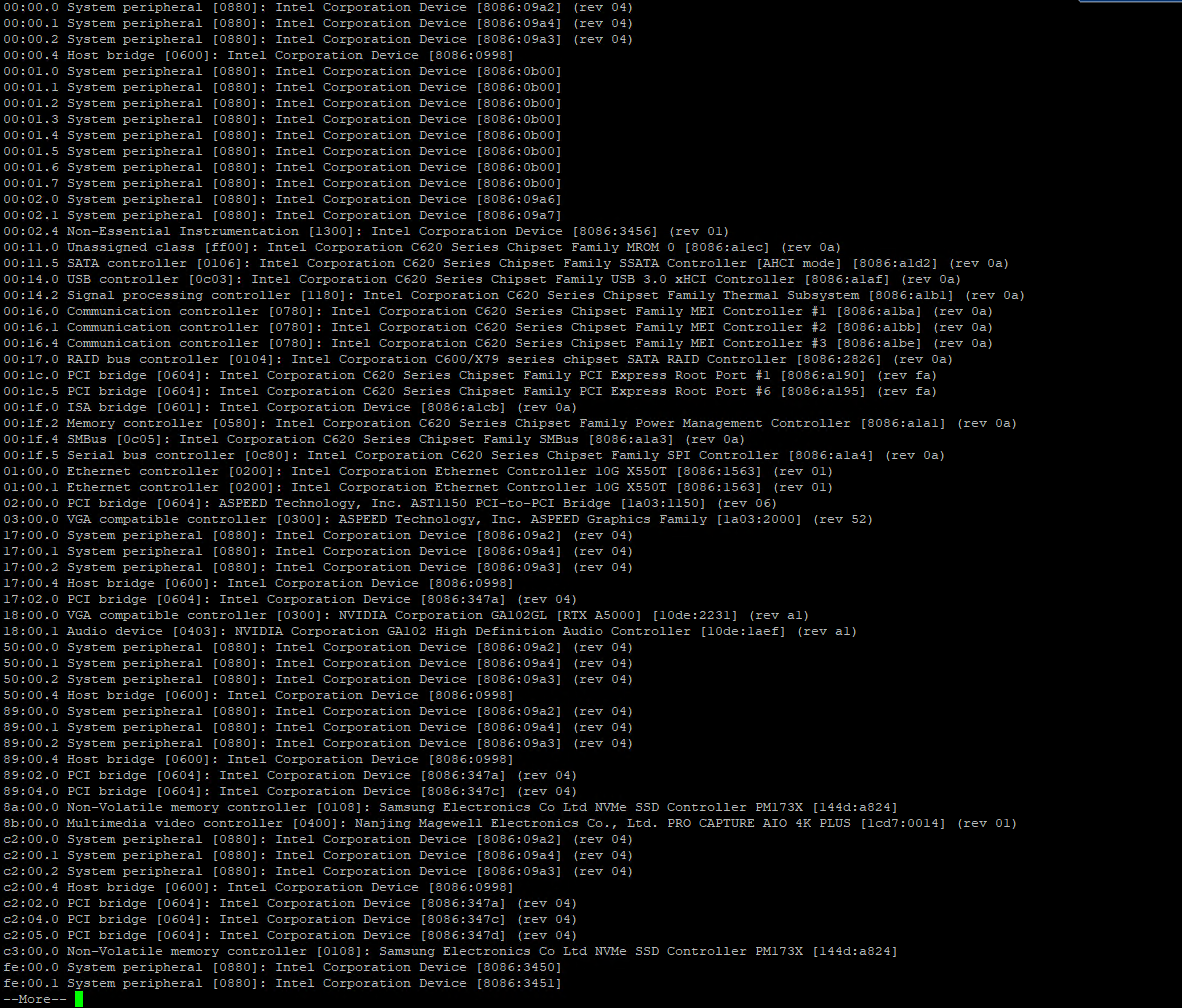

@nils98 I guess lets run these commands to see where they go.

lspci -nn| moreWe want to look through the output. We are specifically looking for hardware related to the disk controller. Normally I would have you look for raid or sata but I think this hardware is somewhere in between. I specifically need the hex code that identifies the hardware. It will be in the form of [XXXX:XXXX] where the X’s will be a hex value.The output of

lsblkThen this one is going to be a bit harder but lets run this command, but if it doesn’t output anything then you will have to manually look through the log file to see if there are any messages about missing drivers.

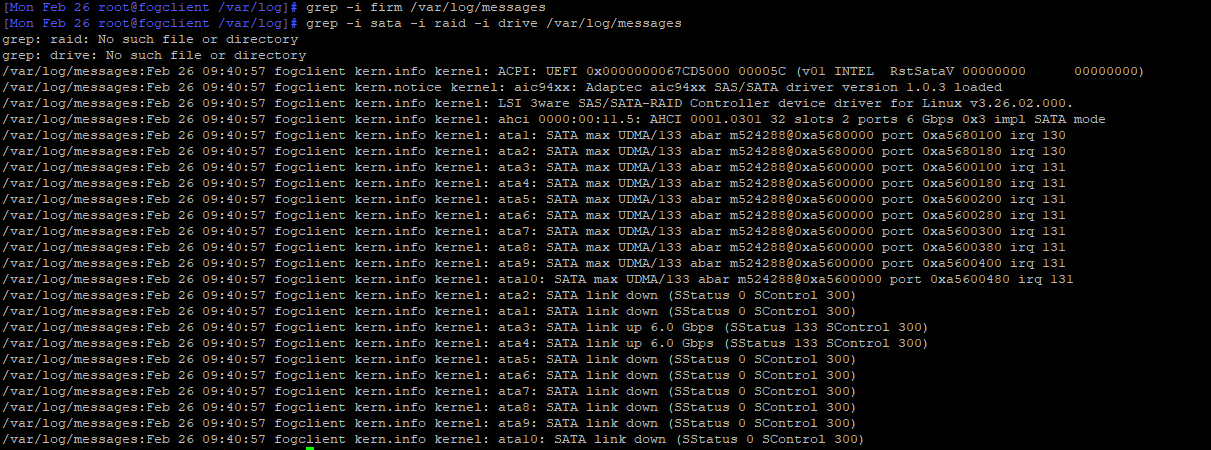

grep -i firm /var/log/syslogThe first one will show us if we are missing any supplemental firmware needed to configure the hardware.grep -i sata -i raid -i drive /var/log/syslogThis one will look for those keywords in the syslog.If that fails you may have to manually look through this log.
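The hardware-ID and log checks above can be wrapped into two small helpers so the output is easier to paste into the thread. This is a sketch, assuming a POSIX shell; the function names are hypothetical, the keyword list is only a guess at what is relevant, and the log search falls back from /var/log/syslog to /var/log/messages because a given system may only have one of them.

```shell
#!/bin/sh
# Print the [vendor:device] hex IDs of storage-related devices from `lspci -nn` output on stdin.
storage_pci_ids() {
    grep -iE 'raid|sata|vmd|nvme|storage' | grep -oE '\[[0-9a-f]{4}:[0-9a-f]{4}]'
}

# Search the first readable log file for a case-insensitive extended regex.
# Usage: search_boot_log PATTERN [LOGFILE...]; defaults to syslog, then messages.
search_boot_log() {
    pattern=$1
    shift
    [ "$#" -gt 0 ] || set -- /var/log/syslog /var/log/messages
    for log in "$@"; do
        if [ -r "$log" ]; then
            # Exit status is grep's: 0 if anything matched.
            grep -iE "$pattern" "$log"
            return
        fi
    done
    echo "no readable log file found" >&2
    return 1
}
```

Usage: `lspci -nn | storage_pci_ids`, then `search_boot_log 'firm'` and `search_boot_log 'sata|raid|drive'`.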

-

@george1421 Here is the output.

`lsblk`

Unfortunately there is no syslog, but I found something under /var/log/messages.

I hope it is OK that I just sent the screenshots.

If you want me to look for more in the messages, please let me know.

@nils98 There are a few interesting things in here, but nothing remarkable. I see this is a server chassis of some kind. I also see there are SATA and NVMe disks in this server. From a quick look at VROC, it is designed for NVMe drives rather than SATA, and it is a CPU-based RAID.

Is your array built from the SATA drives /dev/sda and /dev/sdb, or from the NVMe drives?

I remember seeing something in the forums regarding the Intel xscale processor and VMD. I need to see if I can find those posts.

For completeness, what is the manufacturer and model of this server? What is the target OS for this server? Did you set up the RAID configuration in the BMC or firmware, so the drive array is already configured?

And finally, if you boot a Linux live CD, does it properly see the RAID array?

Lastly, for debugging with FOS Linux, if you do the following you can remote into the FOS Linux system:

- PXE boot into debug mode (capture or deploy).
- Get the IP address of the target computer with `ip a s`.
- Give root a password with `passwd`. Just make it something simple like hello; it will be reset at the next reboot.
- Now with PuTTY or ssh you can connect to the FOS Linux engine to run commands remotely. This makes it easier to copy and paste into the FOS Linux engine.
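To pick the address to ssh to when the target has several interfaces, a small filter over the one-line form of the `ip` output can help. This is a sketch, assuming the `ip` in FOS supports the `-o` (one-line) mode as iproute2 does; `fos_ipv4_addrs` is a hypothetical name.

```shell
#!/bin/sh
# Print the IPv4 addresses (without prefix length) from `ip -o -4 addr show`
# output on stdin, skipping the loopback interface.
fos_ipv4_addrs() {
    awk '$3 == "inet" && $2 != "lo" { split($4, parts, "/"); print parts[1] }'
}
```

Usage: `ip -o -4 addr show | fos_ipv4_addrs`, then connect from your workstation with `ssh root@<address>` using the password you just set.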

-

@nils98 I don’t know your prerequisites.

Our machines get delivered with a preinstalled Windows.

The RAID1 is also already assembled.

We do not create a RAID1 via mdadm in FOG. Also, I did not inject any drivers for VROC.

I think /dev/md0 gets created because of the kernel parameter “mdraid=true”, but it’s empty.

Have you checked in the BIOS/UEFI? Is there a RAID1 shown? If not, can you create one? I never had problems seeing my preassembled VROC RAID1 with `lsblk` in debug mode.
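To confirm that FOS actually booted with `mdraid=true`, you can check /proc/cmdline on the debug console. A minimal sketch; `has_kernel_arg` is a hypothetical helper, and its optional second argument exists only so it can be checked against a sample command line.

```shell
#!/bin/sh
# Return 0 if the exact word ARG appears on the kernel command line.
# Usage: has_kernel_arg ARG [CMDLINE]; CMDLINE defaults to /proc/cmdline.
has_kernel_arg() {
    arg=$1
    if [ "$#" -ge 2 ]; then
        cmdline=$2
    else
        cmdline=$(cat /proc/cmdline)
    fi
    # Pad with spaces so only whole words match (mdraid=true, not mdraid=true2).
    case " $cmdline " in
        *" $arg "*) return 0 ;;
        *) return 1 ;;
    esac
}
```

Usage: `has_kernel_arg mdraid=true && echo "mdraid=true is set"`.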

-

@george1421 The VROC RAID is created from the SATA drives /dev/sda and /dev/sdb.

The NVMe drives are only data disks that are later attached in Windows. The board is a Supermicro X12SPI-TF running Windows 10.

Last time we created the RAID during the Windows install, but I had already created it via the BIOS. It is already created and I don’t want to touch it with FOG.

I will test a Linux live system later.

I had already read about connecting via PuTTY here, thanks.

@Ceregon That was exactly the same for us.

The BIOS shows me a RAID1 with both SSDs, and I can also create a new one there if necessary.

As you can see above, no RAID is listed by `lsblk`.

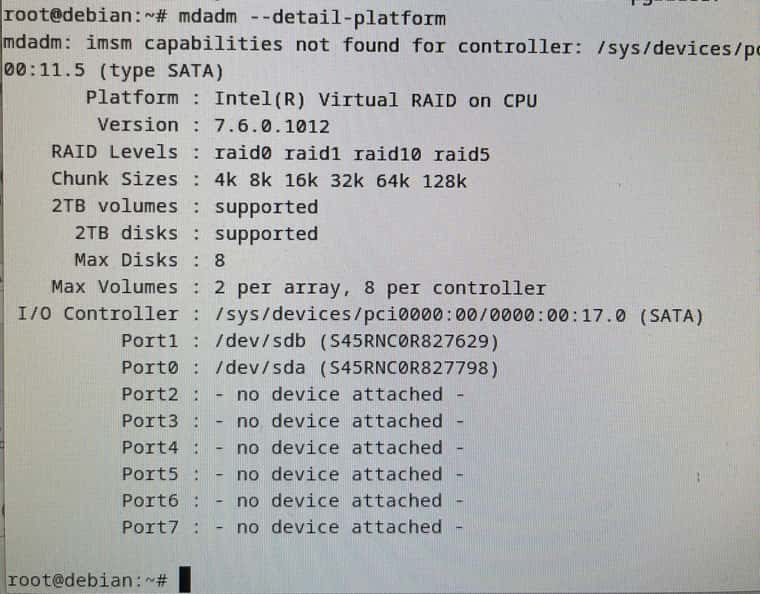

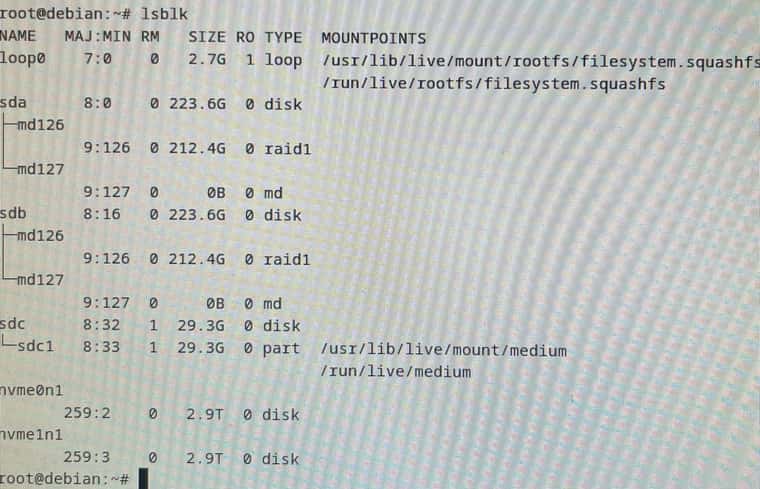

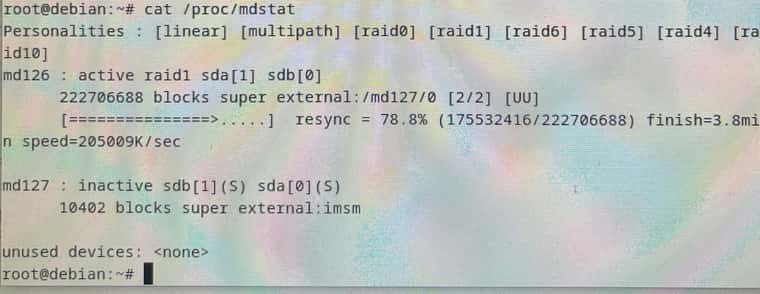

@george1421 With Debian 12 live, the RAID and VROC are recognized.

Any ideas what I can change in FOS to make it look exactly like this?

-

@nils98 Nice, this means it's possible with the FOG FOS kernel. If the Linux live CD did not work, then you would be SOL.

OK, so let's start by running these commands under the live image.

`lsmod > /tmp/modules.txt`

`lspci -nnk > /tmp/pcidev.txt`

Use scp (or WinSCP on Windows) to copy these tmp files out and post them here. Also grab /var/log/messages or /var/log/syslog and post them here. Let me take a look at them to see what dynamic modules are loaded and which kernel modules are linked to the PCIe devices.
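For the side-by-side comparison, one quick way to spot modules that the live system loads but FOS does not is to diff the two `lsmod` dumps. A sketch, assuming you saved one `lsmod` output from the live system and one from FOS; `missing_modules` and the file names in the usage line are hypothetical. Keep in mind that lsmod only lists loadable modules, so a driver missing from the FOS list may simply be built into the FOS kernel.

```shell
#!/bin/sh
# Print module names that appear in the first `lsmod` dump but not in the second.
# Usage: missing_modules LIVE_LSMOD_FILE FOS_LSMOD_FILE
missing_modules() {
    live_sorted=$(mktemp)
    fos_sorted=$(mktemp)
    # Skip the "Module Size Used by" header and keep only the module name column.
    awk 'NR > 1 { print $1 }' "$1" | sort -u > "$live_sorted"
    awk 'NR > 1 { print $1 }' "$2" | sort -u > "$fos_sorted"
    # comm -23 keeps lines unique to the first (live) list.
    comm -23 "$live_sorted" "$fos_sorted"
    rm -f "$live_sorted" "$fos_sorted"
}
```

Usage: `missing_modules live-modules.txt fos-modules.txt`.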

-

@george1421 Here are the files.

Unfortunately I have not found a messages or syslog file; I have only found a boot log file in the folder.

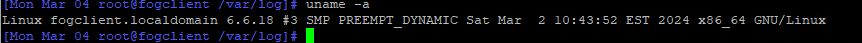

@nils98 Nothing is jumping out at me as the required module. The VMD module is required for VROC, and that is part of the FOG FOS build. Something I hadn’t asked you before: what version of FOG are you using, and what version of the FOS Linux kernel are you using? If you PXE boot into the FOS Linux console, then run

`uname -a`

it will print the kernel version.
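Drivers that are built into the kernel rather than loaded as modules do not show up in `lsmod`, so a different check is needed to see whether a driver such as `vmd` or `ahci` actually claimed a PCI device. A sketch, assuming the standard sysfs layout where each bound device appears as an address-named entry under /sys/bus/pci/drivers/<driver>/; `pci_devices_for_driver` is hypothetical and `SYSFS_ROOT` is only an override for testing.

```shell
#!/bin/sh
# List PCI addresses currently bound to a kernel driver, whether the driver is
# built in or loaded as a module. SYSFS_ROOT exists only to allow testing.
pci_devices_for_driver() {
    dir="${SYSFS_ROOT:-/sys}/bus/pci/drivers/$1"
    if [ ! -d "$dir" ]; then
        echo "driver '$1' is not registered on the PCI bus" >&2
        return 1
    fi
    # Bound devices appear as entries named like 0000:00:17.0; control files
    # such as bind/unbind/new_id contain no colon and are skipped by the glob.
    for entry in "$dir"/*:*; do
        [ -e "$entry" ] || [ -L "$entry" ] || continue
        basename "$entry"
    done
}
```

Usage: `pci_devices_for_driver vmd` and `pci_devices_for_driver ahci`, then match the addresses against the `lspci -nn` output.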

@george1421

FOG currently has version 1.5.10.16.

FOS 6.1.63

I set up the whole system a month ago. I only took over the clients from another system, which had FOG version 1.5.9.122. The RAID PC has now been added.

-

@nils98 said in Problem Capturing right Host Primary Disk with INTEL VROC RAID1:

FOS 6.1.63

OK, good deal. I wanted to make sure you were on the latest kernel to ensure we weren’t dealing with something old.

I rebuilt the kernel last night with what I thought might be missing, then I saw that mdadm was updated, so I rebuilt the entire FOS Linux system, but the build failed on the updated mdadm program. It was getting late last night so I stopped.

With Linux kernel 6.1.63, could you PXE boot into debug mode and then give root a password with

`passwd`

and collect the IP address of the target computer with `ip a s`, then connect to the target computer using root and the password you defined. Download /var/log/messages and/or syslog if they exist. I want to see if the 6.1.63 kernel is calling out for some firmware drivers that are not in the kernel by default. If I can do a side-by-side comparison with what you posted from the live Linux kernel, I might be able to find what’s missing.

@george1421 Here is the messages file.

-

@nils98 OK, there are a few things I gleaned by looking over everything in detail.

The stock FOS Linux kernel looks like it's working, because I see the following in the messages file during boot. I do see all of the drives being detected.

`Mar 1 15:46:40 fogclient kern.info kernel: md: Waiting for all devices to be available before autodetect`

`Mar 1 15:46:40 fogclient kern.info kernel: md: If you don't use raid, use raid=noautodetect`

`Mar 1 15:46:40 fogclient kern.info kernel: md: Autodetecting RAID arrays.`

`Mar 1 15:46:40 fogclient kern.info kernel: md: autorun ...`

`Mar 1 15:46:40 fogclient kern.info kernel: md: ... autorun DONE.`

This tells me it's scanning but not finding an existing array. It would be handy to have the live CD startup log to verify that is the case.
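One way to check whether the member disks carry the Intel (IMSM) metadata that VROC/RSTe arrays use is `mdadm --examine`. A sketch, assuming mdadm is available in FOS; the helper names are hypothetical, and the match strings are what mdadm typically prints for IMSM (the "Intel Raid ISM Cfg Sig." magic from `--examine`, or `metadata=imsm` from `--examine --scan`). For context, the in-kernel md autodetect shown in that log only recognizes the old 0.90 superblock format, so it is not expected to pick up an IMSM array either way; that assembly is normally done from userspace by mdadm.

```shell
#!/bin/sh
# Return 0 if mdadm --examine output on stdin shows Intel IMSM (VROC/RSTe) metadata.
has_imsm_metadata() {
    grep -qiE 'Intel Raid ISM|metadata=imsm'
}

# Report, for each disk given, whether mdadm finds IMSM metadata on it.
check_disks_for_imsm() {
    for disk in "$@"; do
        if mdadm --examine "$disk" 2>/dev/null | has_imsm_metadata; then
            echo "$disk: IMSM metadata found"
        else
            echo "$disk: no IMSM metadata found"
        fi
    done
}
```

Usage: `check_disks_for_imsm /dev/sda /dev/sdb` for the two SATA members named in this thread, or `mdadm --examine --scan | has_imsm_metadata && echo "IMSM container present"`.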

Intel VROC is the rebranded Intel Rapid Storage Technology enterprise [RSTe].

There is no setting for `CONFIG_INTEL_RST` in the current kernel configuration file: https://github.com/FOGProject/fos/blob/master/configs/kernelx64.config

It's not clear if this is a problem or not, but just connecting the dots between VROC and RSTe: https://cateee.net/lkddb/web-lkddb/INTEL_RST.html

I did enable it in the test kernel below. Test kernel based on Linux kernel 6.6.18 (hint: a newer kernel that is available via the FOG repo).
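To see how an option is set in a kernel config file such as a local copy of the kernelx64.config linked above, a small check like this can help. A sketch for bool/tristate options only; `kconfig_state` is a hypothetical helper, while the option names in the usage line are standard kernel config symbols.

```shell
#!/bin/sh
# Report how a bool/tristate option is set in a kernel .config file:
# "built-in" (=y), "module" (=m), "not set" (explicitly disabled) or "absent".
kconfig_state() {
    opt=$1
    file=$2
    # -x matches whole lines, -F treats the option name literally.
    if grep -qxF "${opt}=y" "$file"; then
        echo "built-in"
    elif grep -qxF "${opt}=m" "$file"; then
        echo "module"
    elif grep -qxF "# ${opt} is not set" "$file"; then
        echo "not set"
    else
        echo "absent"
    fi
}
```

Usage: `for opt in CONFIG_INTEL_RST CONFIG_VMD CONFIG_MD_RAID1 CONFIG_SATA_AHCI; do echo "$opt: $(kconfig_state "$opt" kernelx64.config)"; done`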

https://drive.google.com/file/d/12IOjoKmEwpCxumk9zF1vtQJt523t8Sps/view?usp=drive_link

To use this kernel, copy it to the /var/www/html/fog/service/ipxe directory and keep its existing name. This will not overwrite the FOG-delivered kernel. Now go to the FOG Web UI, go to FOG Configuration -> FOG Settings and hit the Expand All button. Search for bzImage, replace the bzImage name with bzImage-6.6.18-vroc2, then save the settings. Note that this will make all of your computers that boot into FOG load this new kernel. Understand that this is untested; you can always put things back by replacing bzImage-6.6.18-vroc2 with bzImage in the FOG configuration.
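The copy step on the FOG server can be scripted along these lines. A sketch, assuming the default web root path given above and that the file keeps its downloaded name bzImage-6.6.18-vroc2; `install_test_kernel` is hypothetical, and making the file world-readable (644) is an assumption so the web server can serve it. The FOG Settings change itself still has to be done in the Web UI.

```shell
#!/bin/sh
# Copy a test kernel next to the stock bzImage without replacing it.
# Usage: install_test_kernel KERNEL_FILE [DEST_DIR]
install_test_kernel() {
    src=$1
    dest_dir=${2:-/var/www/html/fog/service/ipxe}
    name=$(basename "$src")
    if [ ! -s "$src" ]; then
        echo "kernel file '$src' is missing or empty" >&2
        return 1
    fi
    # Guard against clobbering the FOG-delivered kernel.
    if [ "$name" = "bzImage" ]; then
        echo "refusing to overwrite the stock bzImage; keep the new file's own name" >&2
        return 1
    fi
    cp "$src" "$dest_dir/$name" || return 1
    # Assumption: world-readable so the web server can hand it to iPXE.
    chmod 644 "$dest_dir/$name"
}
```

Usage (as root on the FOG server): `install_test_kernel ./bzImage-6.6.18-vroc2`.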

Now pxe boot into a debug console on the target computer.

Do the normal routine to see if `lsblk`, `cat /proc/mdstat` and `mdm --detailed-platform` return anything positive. If the kernel doesn't assemble the array correctly, then we will have to see if we can manually assemble the array using the mdadm tool.

I should say that we need to ensure the array already exists before we perform these tests, because if the array is defunct or not created we will not see it with the above tests.

-

@george1421 Unfortunately, nothing has changed.

`mdm --detailed-platform` fails because there is no `mdm` command, and `mdadm --detail-platform` still shows the same error.

I have also searched the log files under the live system again but unfortunately found nothing.

-

@nils98 Well, that’s not great news. I really thought that I had it by including the Intel RST driver. Would you mind sending me the messages log from booting this new kernel? Also, when you are in debug mode, make sure that you run

`uname -a`

and check that the kernel version is right.
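To make that check explicit, a small helper can compare the running kernel against the version you expect. A sketch; `check_kernel_version` is hypothetical, it assumes the test kernel reports a release string beginning with 6.6.18 (custom builds may append a suffix), and the optional second argument is only there for testing.

```shell
#!/bin/sh
# Return 0 if the kernel release equals the expected version or continues
# with a non-digit suffix (so 6.6.1 does not accidentally match 6.6.18).
# Usage: check_kernel_version EXPECTED [RELEASE]; RELEASE defaults to `uname -r`.
check_kernel_version() {
    expected=$1
    release=${2:-$(uname -r)}
    case "$release" in
        "$expected" | "$expected"[!0-9]*)
            echo "OK: running $release"
            return 0
            ;;
        *)
            echo "unexpected kernel: $release (wanted $expected)" >&2
            return 1
            ;;
    esac
}
```

Usage: `check_kernel_version 6.6.18` on the FOS debug console.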