@george1421 It seems this post describes a similar interest: putting FOS on a bootable partition of the hard drive.

https://forums.fogproject.org/topic/7727/building-usb-booting-fos-image/4

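@george1421 That sounds like a good starting point. Has anyone tried using Shim along with GRUB to get around the Secure Boot issue? I was reading up on this, and it can bridge the gap with UEFI Secure Boot in situations like this: Shim is signed through Microsoft's third-party UEFI CA, so firmware with the usual default Secure Boot keys will run it, and Shim then verifies GRUB against its own embedded vendor certificate or an enrolled Machine Owner Key (MOK).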

@george1421 For example, you could schedule a deployment task, and FOG would then run a script that creates a small FAT32 EFI partition on the host's local drive, assigns it a drive letter, sets the temporary (one-time) boot order to that partition, and reboots the PC, which would then launch into that environment and get imaged. That would automate the entire process. As of now, FOG can schedule the task and reboot the host, but if the boot order isn't set properly, the host will not boot into the imaging environment, and it isn't practical to keep network boot as the default first boot option. So if you're not at the host with a USB drive, either to point it to the server over the network or to boot from USB directly, you're out of luck: some level of manual intervention is required. That is my situation, and I'm sure many others are in a similar one, rather than being able to image a client truly 100% remotely. So the idea is to add this capability to the FOG Client and FOS code.
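As a rough sketch of the disk-preparation step, the Python below only builds a diskpart script; it does not run anything. It assumes a Windows host, that volume C has enough shrinkable free space, and that the chosen drive letter is unused. The function name, the label FOSBOOT, and the parameters are hypothetical; the commands themselves (`select volume`, `shrink desired=`, `create partition primary size=`, `format quick fs=fat32`, `assign letter=`) are standard diskpart commands.

```python
def build_diskpart_script(size_mb, letter, label="FOSBOOT", source_volume="C"):
    """Return diskpart commands that carve a small FAT32 partition out of a volume.

    Hypothetical helper: nothing is executed here, it only returns text.
    """
    if size_mb <= 0:
        raise ValueError("size_mb must be a positive number of megabytes")
    if len(letter) != 1 or not letter.isalpha():
        raise ValueError("letter must be a single drive letter, e.g. 'R'")
    commands = [
        f"select volume {source_volume}",
        # Free size_mb MB at the end of the selected volume.
        f"shrink desired={size_mb}",
        # Create the new partition in the freed space on the same disk.
        f"create partition primary size={size_mb}",
        # FAT32, because UEFI firmware is required to be able to read it.
        f'format quick fs=fat32 label="{label}"',
        f"assign letter={letter.upper()}",
    ]
    return "\n".join(commands) + "\n"
```

The returned text could be saved to a file and run from an elevated prompt with `diskpart /s <file>`; for example, `build_diskpart_script(1024, "R")` yields a five-line script ending in `assign letter=R`.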

@george1421 No, that's not what I'm talking about. I already use a USB drive to point the PCs to the server in our data center over PXE. You can shrink the local C: drive of a computer, copy a preinstallation environment into the newly created partition, make it a boot entry with bcdedit, and then boot into it without needing a USB or external drive of any kind. So it's something you could do 100% remotely. The objective is to go USB-free and PXE-boot-free: you boot into the environment locally and pull the image from a shared folder.
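The bcdedit part could look roughly like the sketch below, which follows the common ramdisk method for booting a WinPE boot.wim from a local partition. It only builds command strings and rests on several assumptions: boot.sdi sits at \boot\boot.sdi and boot.wim at \sources\boot.wim on the new partition, and the loader path defaults to \windows\system32\boot\winload.efi for a UEFI boot (check which loader your boot.wim actually contains). The new entry's GUID is only known after `bcdedit /create ... /application osloader` runs, so a small parser extracts it from that command's output. `bcdedit /bootsequence` makes the entry a one-time boot, so the restart after that returns to the normal boot order. All helper names are hypothetical.

```python
import re

# Matches a BCD identifier such as {0cb3b571-2f2e-4343-a879-d86a476d7215}.
GUID_RE = re.compile(r"\{[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\}")

# Creates the WinPE loader entry; bcdedit prints the new entry's GUID.
CREATE_ENTRY = 'bcdedit /create /d "WinPE" /application osloader'


def parse_created_guid(output):
    """Pull the new entry's GUID out of `bcdedit /create` output."""
    match = GUID_RE.search(output)
    if match is None:
        raise ValueError("no GUID found in bcdedit output")
    return match.group(0)


def ramdisk_options_commands(letter):
    """Commands telling the boot manager where boot.sdi lives."""
    drive = f"{letter.upper()}:"
    return [
        # Likely fails if {ramdiskoptions} already exists in the store.
        'bcdedit /create {ramdiskoptions} /d "Ramdisk options"',
        f"bcdedit /set {{ramdiskoptions}} ramdisksdidevice partition={drive}",
        r"bcdedit /set {ramdiskoptions} ramdisksdipath \boot\boot.sdi",
    ]


def winpe_entry_commands(guid, letter, loader=r"\windows\system32\boot\winload.efi"):
    """Commands that point the entry at boot.wim and make it a one-time boot."""
    drive = f"{letter.upper()}:"
    wim = f"ramdisk=[{drive}]\\sources\\boot.wim,{{ramdiskoptions}}"
    return [
        f"bcdedit /set {guid} device {wim}",
        f"bcdedit /set {guid} osdevice {wim}",
        f"bcdedit /set {guid} path {loader}",
        f"bcdedit /set {guid} systemroot \\windows",
        f"bcdedit /set {guid} winpe yes",
        f"bcdedit /set {guid} detecthal yes",
        # One-time boot: only the next restart uses this entry.
        f"bcdedit /bootsequence {guid}",
    ]
```

The intended order, from an elevated prompt, is: run the ramdisk-options commands, run CREATE_ENTRY, pass its output to parse_created_guid, then run winpe_entry_commands with that GUID and reboot.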

@cjiwonder What type of NIC is in the PC you're trying to boot into FOG with? I always use either ipxe.efi or intel.efi, with ipxe.efi as my first choice. My organization uses all HP PCs, and on some of the newer models, such as the G10 laptops, I have to use intel.efi. Try ipxe.efi first, though, and see if that works for you; it should.

Fishing for ideas on the best place to start a project like this. I think it would be very valuable functionality if it can be achieved.

Has anyone ever thought about being able to truly remotely image a PC with FOG without needing PXE boot? For example, you could take the local hard drive of a PC, make a bootable partition, place an environment like WinPE on it, and set the temporary boot order to boot into it; that environment could then pull the image from a network share. There is already a paid, client-based imaging solution that does this; PDQ owns it now. This would be an awesome feature for people like me who have to use an iPXE USB drive to point to our FOG server in the data center because of the way our network is set up. Setting this up isn't hard, but integrating it into my FOG installation is the challenging part. Where would be a good starting point so that, when you start a deploy task, the FOG Client could run the script that sets up the environment and then boots the PC into the preinstallation environment? Any ideas?

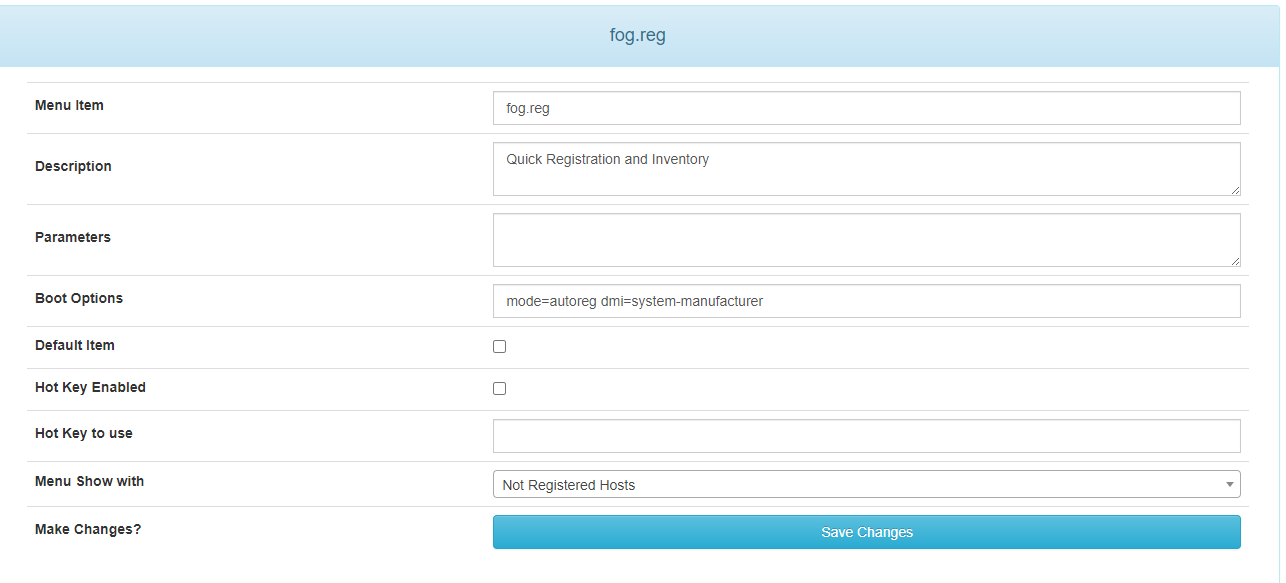

I use Capone because all of our PCs are HPs, so I already have Capone configured to serve the golden image when the system-manufacturer matches HP.
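For anyone unfamiliar with this approach, the lookup amounts to reading one DMI string from the machine and matching it against a table of images. The Python sketch below only illustrates that idea; it is not Capone's code, and the case-insensitive exact match, the rule table, and the image names are assumptions. Listing both "HP" and "Hewlett-Packard" reflects that older HP models report the longer manufacturer string.

```python
import subprocess

# Hypothetical rule table: (manufacturer string, image name).
# Older HP models report "Hewlett-Packard" rather than "HP".
RULES = [
    ("HP", "hp-golden"),
    ("Hewlett-Packard", "hp-golden"),
]


def read_dmi_field(field="system-manufacturer"):
    """Return what `dmidecode -s <field>` prints (dmidecode usually needs root)."""
    result = subprocess.run(
        ["dmidecode", "-s", field], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def match_image(dmi_value, rules=RULES, default=None):
    """Return the image of the first rule whose key equals dmi_value.

    Assumption: case-insensitive exact match after trimming whitespace;
    Capone's own matching may work differently.
    """
    value = dmi_value.strip().casefold()
    for key, image in rules:
        if key.strip().casefold() == value:
            return image
    return default
```

On a Linux test machine, `match_image(read_dmi_field())` would return "hp-golden" for an HP and None (or the given default) for anything else.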

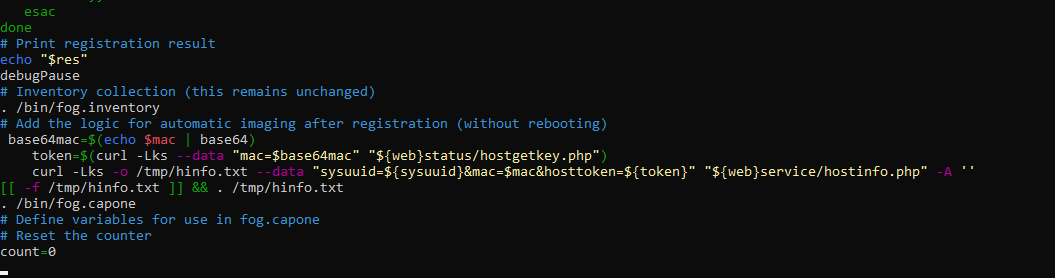

@zaboxmaster @george1421 @Tom-Elliott I have a possible solution for this issue that I just figured out today. I've been working on a similar situation, but instead of fog.man.reg I'm using Quick Registration. To achieve this, I used the postinit script to inject a modified version of fog.auto.reg into FOS that includes a section to call Capone after registration. I used information from this post and a past post to accomplish that part: https://forums.fogproject.org/topic/14278/creating-custom-hostname-default-for-fog-man-reg

When I first added this, I got an invalid-parameter error for the dmi value, similar to the osid error you're getting. But when I checked the Capone PXE menu entry, I saw that it lists `mode=capone shutdown=0 dmi=system-manufacturer`, so for giggles I added `dmi=system-manufacturer` after `mode=autoreg`, and it went through like a charm.

So in the web interface, go to the PXE menu settings and, for fog.reginput, try adding `osid=9` to its arguments if your image is a Windows image, or the appropriate ID for the OS you're using. Hopefully it works for you the way it did for me.
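When a parameter like dmi or osid seems to be ignored, it can help to check what actually reached FOS. FOG generally appends a menu item's boot options (such as mode=autoreg) to the FOS kernel command line, which can be read from /proc/cmdline over an SSH session into FOS. The Python below is a sketch of that check; FOS itself may not ship Python, so treat it as logic to port or to run against a copied command line. The helper names are hypothetical.

```python
import shlex


def parse_cmdline(cmdline):
    """Split a kernel command line into {name: value}; bare flags map to ""."""
    args = {}
    for token in shlex.split(cmdline):
        name, sep, value = token.partition("=")
        args[name] = value if sep else ""
    return args


def missing_args(cmdline, required):
    """Return the required argument names that are absent from cmdline."""
    args = parse_cmdline(cmdline)
    return [name for name in required if name not in args]
```

For example, `missing_args("mode=capone shutdown=0 dmi=system-manufacturer", ["mode", "dmi", "osid"])` returns `["osid"]`, showing that osid never made it onto the command line.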

Sorry, everyone, I haven't updated this post. My organization wanted our FOG server migrated onto a VM in our data center, so my time went to that and to building an additional server at one of the new hospitals we recently finished constructing. I plan to get back to working on this on my test server this week. Once it works there, I'll implement it on our production servers. I think I'm very close to implementing this concept, and once I've cracked it I'll make sure to share it with everyone, because I think being able to do this will be valuable to many others. @george1421 @Wayne-Workman

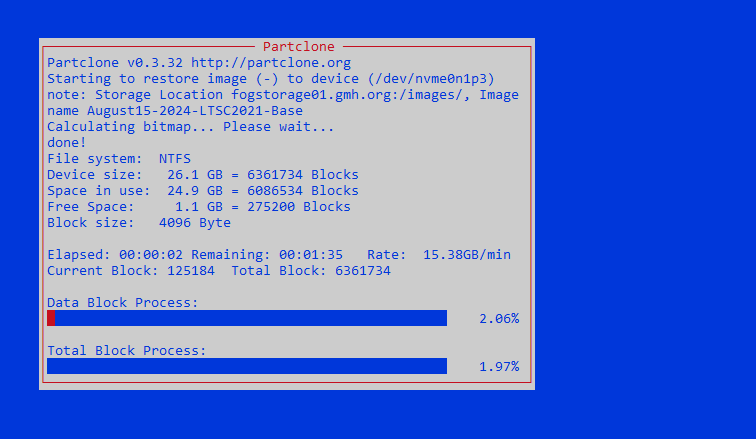

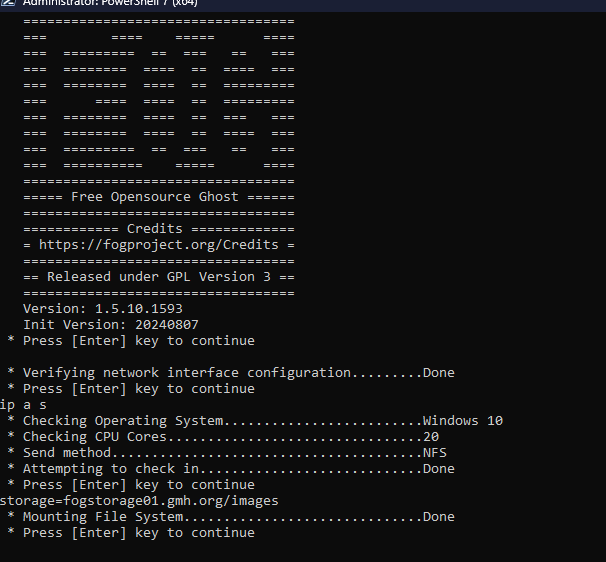

@george1421 Passing storage=fogstorage01.gmh.org/images is confirmed successful, as the screenshot shows.

So, knowing this, I think passing it in a postinit script will actually work. I'm going to try it on the next boot, and if it succeeds I'll post the script I created.

@george1421 I followed your method of using SSH to get into FOS on the target computer during the imaging process, and I found a command that worked: I typed storage=fogstorage01.gmh.org/images and got a successful completion, which you'll see in the screenshot.

Seeing this, I think I can set this parameter in a postinit script, either by manually mapping subnets or by using a helper function in the script to get the host's IP address and match it to the proper storage node. For example: if the host IP is in 10.130.208.0/24, set storage=fogstorage02.gmh.org/images; if it's in 10.130.192.0/24, set storage=fogstorage02.gmh.org/images. Then I'll see whether it completes successfully. I'm going to try this and report back the results.
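The subnet-to-node matching could be sketched as below. It is written in Python for readability; a FOS postinit script is a shell script, so the logic would need porting. Which node serves which subnet is an assumption here, and the output uses the host:/path/ form FOG typically places in the storage kernel argument rather than the exact string typed in the SSH test, so adjust it to whatever your FOS accepts. When subnets overlap, the most specific (longest-prefix) match wins.

```python
import ipaddress

# Illustrative mapping only: which node serves which subnet is an assumption.
SUBNET_TO_NODE = {
    "10.130.192.0/24": "fogstorage01.gmh.org",
    "10.130.208.0/24": "fogstorage02.gmh.org",
}


def pick_storage(host_ip, mapping=SUBNET_TO_NODE, default=None, path="/images/"):
    """Return a storage value (host:path) for host_ip, or None if nothing matches."""
    address = ipaddress.ip_address(host_ip)
    best_net, best_node = None, None
    for cidr, node in mapping.items():
        network = ipaddress.ip_network(cidr)
        # Prefer the most specific subnet when several contain the address.
        if address in network and (best_net is None or network.prefixlen > best_net.prefixlen):
            best_net, best_node = network, node
    node = best_node if best_node is not None else default
    return f"{node}:{path}" if node is not None else None
```

A default node (for example the main FOG server) can be passed so that hosts outside every mapped subnet still get a storage value.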

@george1421 @Wayne-Workman Thank you both; I think what you've provided so far will put me on the right track to my solution.

@george1421 @Wayne-Workman I had been leaning toward the postinit script too, but I had the same concern @Wayne-Workman mentioned about whether the changes persist after the script runs. I'm about to continue working on this now. I was experimenting with the iPXE menu items and was able to have the server pass the image I want automatically, using a new menu item I created as shown here. My thinking is that if this logic can set the image, the same approach should be able to set the storage node once I find the right parameters, since registered hosts already get that logic through the location or subnet plugin:

```
login
set imageID 1
params
param mac0 ${net0/mac}
param arch ${arch}
param imageID ${imageID}
param qihost 1
param username ${username}
param password ${password}
param sysuuid ${uuid}
isset ${net1/mac} && param mac1 ${net1/mac} || goto bootme
isset ${net2/mac} && param mac2 ${net2/mac} || goto bootme
```
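iPXE's `params`/`param` commands collect these values, and FOG normally chains back to boot.php with `##params`, which makes iPXE send them as an HTTP form POST. To experiment with parameters without rebooting a test PC each time, the same body can be rebuilt and posted from a workstation. This Python sketch does that; the field list mirrors the menu item above, while the helper names and the URL you pass in (for example http://fogserver/fog/service/ipxe/boot.php) are assumptions, and how boot.php treats each field is not shown here.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def boot_params(mac0, arch, image_id, sysuuid, username="", password="", extra_macs=()):
    """Rebuild the form fields set by the menu item above, in the same order."""
    fields = [
        ("mac0", mac0),
        ("arch", arch),
        ("imageID", str(image_id)),
        ("qihost", "1"),
        ("username", username),
        ("password", password),
        ("sysuuid", sysuuid),
    ]
    # Mirrors the optional "isset ${net1/mac} && param mac1 ..." lines.
    for index, mac in enumerate(extra_macs, start=1):
        fields.append((f"mac{index}", mac))
    return urlencode(fields)


def post_boot_params(url, body, timeout=10):
    """POST the form body and return whatever text the server sends back."""
    request = Request(
        url,
        data=body.encode("ascii"),
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Comparing the responses for different imageID (or other) values shows what boot.php sends back without touching a real client.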

So, once I saw that passing this worked, I experimented with other parameters to set the storage node as well, based on parameters I saw being passed in some of the PHP scripts already on the FOG server. I set the storage location to 4, which corresponds to one of my storage nodes' IDs in the SQL database, but got a kernel panic, so I'm not sure whether that parameter actually took effect. I was trying to pass the parameter manually first so I could understand how to automate it afterward; then I could add subnet mappings so the server checks the host's IP address and maps it to the proper node. If that fails, I was going to look for a way to add logic to the main FOG server's decision-making so it measures latency and always chooses the closest node. Either approach will work if I can make it happen.

I was also looking at the location/subnet plugins I have installed to see whether they can be modified to work with unregistered hosts, or at least whether their functions can be mimicked for unregistered hosts. The solution I'm looking for is definitely possible; I just have to put the right pieces together. Once I figure this out I'll post my solution, since I think many others would like to use the same function. If anyone comes up with more ideas, I'm open to hearing them all.
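A latency-based choice could be sketched like this: time a few TCP connections to each node's NFS port (2049; an assumption about what your nodes expose) and pick the node with the lowest median time. For the result to reflect which node is closest to the client, the measurement would have to run on the client side (for example, during FOS startup) rather than on the main server. This Python version only shows the selection logic, and the function names are hypothetical.

```python
import socket
import statistics
import time


def tcp_connect_ms(host, port=2049, timeout=2.0):
    """Time a single TCP connect to host:port in milliseconds; None if it fails."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass
    except OSError:
        return None
    return (time.perf_counter() - start) * 1000.0


def pick_closest(nodes, probe=tcp_connect_ms, samples=3):
    """Return the node with the lowest median probe time, or None if none answer."""
    best_node, best_ms = None, None
    for node in nodes:
        times = [t for t in (probe(node) for _ in range(samples)) if t is not None]
        if not times:
            continue  # unreachable node: skip it
        median_ms = statistics.median(times)
        if best_ms is None or median_ms < best_ms:
            best_node, best_ms = node, median_ms
    return best_node
```

Taking the median of a few samples keeps a single slow handshake from deciding the result; the probe is a parameter so it can be swapped for a different measurement.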

I've been researching and asking online about this subject, and I can't find any useful information about it. The location and subnet plugins in FOG only work with registered hosts, directing them to the storage node to use for the imaging task; I know this and use it with all of my registered hosts. But I want to know if there is a way to do this with unregistered hosts: when I choose the Deploy Image option in the PXE menu, the main FOG server would determine which node to use in the same location/subnet as the client PC booting into PXE, based on information gathered from the PC such as its IP address/subnet. I haven't cracked this yet, so I'm wondering if anyone else has attempted it or succeeded, or could give me ideas on where to tie all this together to make it possible. I want the node assignment during the imaging task to be automated without always having to register the host PC.

I have a main FOG server and 2 storage nodes on 2 different subnets within our facility. Right now I run dnsmasq on the storage nodes to respond to PXE requests within the different subnets and serve the files. It works great, but after the Deploy Image option is selected, it will still choose a random node based on the main server's current logic. I want to implement this to cut latency by always having the closest node (the node in the client PC's subnet) handle imaging.

Not sure if this is relevant, but I modified the embedded iPXE script from the original to automate chainloading from the TFTP server I want to use, so I don't have to enter it manually each time. Here is a copy of my script. So this process is already automated, but I want to automate the choice of storage node for the imaging task as well; I experimented with setting parameters within the iPXE script, and that did not work:

```
#!ipxe

# Obtain DHCP lease
dhcp || goto dhcperror
echo Received DHCP lease, proceeding...

# Test the hostname resolution by pinging it
ping -c 1 fogserver || goto pingerror
echo Hostname resolved, proceeding...

# Proceed to netboot using the hostname directly
chain tftp://fogserver/default.ipxe || goto chainloadfailed

:chainloadfailed
prompt --key s --timeout 10000 Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

:dhcperror
prompt --key s --timeout 10000 DHCP failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

:pingerror
prompt --key s --timeout 10000 Hostname resolution failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot
```

And below is the script I tried to use to set the location of the host booting over PXE, so that the matching storage node would get the imaging task. It did not work, because the main server makes its decision after choices are made in the PXE menu, so I don't think adding params to this script is the way to go, but I may be wrong:

```
#!ipxe

# NOTE: iPXE has no "if" command, "=~" matching, pipes, or "parse" command,
# its "route" command only displays the routing table, and "params" takes
# no "set=..." argument, so the lines using those constructs fail as written.

# Obtain DHCP lease
dhcp || goto dhcperror
echo Received DHCP lease, proceeding...

# Determine the location based on subnet
set clientip ${net0/ip}
# Default location ID (if needed)
set locationid 0
if (${clientip} =~ 10.130.192.) set locationid 1
if (${clientip} =~ 10.130.208.) set locationid 2

# Perform the API call to set the location for this session
# Replace <api-user> and <api-pass> with your FOG API credentials
set api_user <api-user>
set api_pass <api-pass>
set api_token ""
set user_token ""

# Fetch API token
set api_url http://<fog-server>/fog/management/index.php?sub=auth&action=login
params set=api_user=${api_user}&api_pass=${api_pass}
route http post ${api_url} params | parse api_token=${api_token},user_token=${user_token}

# Set location via API call
set location_url http://<fog-server>/fog/management/index.php?sub=location&action=setLocation&mac=${net0/mac}&locationid=${locationid}
route http post ${location_url} Authorization: "Bearer ${api_token}"

# Test the hostname resolution by pinging it
ping -c 1 fogserver || goto pingerror
echo Hostname resolved, proceeding...

# Chain to default.ipxe (which in turn chains to boot.php) and pass the location ID
chain tftp://fogserver/default.ipxe?locationid=${locationid} || goto chainloadfailed

:chainloadfailed
prompt --key s --timeout 10000 Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

:dhcperror
prompt --key s --timeout 10000 DHCP failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

:pingerror
prompt --key s --timeout 10000 Hostname resolution failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot
```