I tested 10GbE FOG imaging on my Hyper-V server (from FOG Server VM to Client PC VM, both connected at 10GbE via an internal switch) and imaging speed was exactly the same as at 1GbE. The Hyper-V host has all-SSD storage too. After some research, it seems that partclone (also used by Clonezilla) does not scale well with faster network connections. What I haven’t tested yet is imaging several 1GbE workstations on a 10GbE backbone to a 10GbE FOG server. I just don’t have the infrastructure for it.

Posts

-

RE: Odd performance issue posted in FOG Problems

-

RE: issues with deploying on UEFI computers posted in FOG Problems

@anwoke8204 Well your first screenshot indicates that you already successfully booted UEFI PXE, but it stops at chaining boot.php via HTTPS.

First thing to try is to browse to https://10.4.47.15/fog/service/ipxe/boot.php from a desktop. If it loads and you can see the script, then you know the web service is working correctly. Since you are using HTTPS with a self-signed cert that has a validity period, I suggest you also check the system date and time in your BIOS.

-

RE: issues with deploying on UEFI computers posted in FOG Problems

Another thing to check is the system time in the BIOS. I’ve seen instances where the BIOS date/time was reset, putting the date before the start of the FOG certificate’s validity period and causing the permission denied error.
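
To illustrate the point about the validity period, here is a minimal Python sketch that checks whether a given clock value falls inside a certificate's notBefore/notAfter window. The two dates and the function name `clock_within_validity` are made up for illustration; they are not taken from an actual FOG certificate. `ssl.cert_time_to_seconds` is the standard-library helper for parsing the date format certificates use.

```python
import ssl
from datetime import datetime, timezone


def clock_within_validity(not_before: str, not_after: str, clock: datetime) -> bool:
    """Return True if `clock` falls inside the certificate validity window.

    `not_before` and `not_after` use the textual form found in certificates,
    e.g. "Jan 15 09:34:43 2018 GMT".
    """
    start = ssl.cert_time_to_seconds(not_before)
    end = ssl.cert_time_to_seconds(not_after)
    return start <= clock.timestamp() <= end


# Hypothetical validity window, for illustration only.
NOT_BEFORE = "Mar 10 00:00:00 2023 GMT"
NOT_AFTER = "Mar 10 00:00:00 2033 GMT"

# A BIOS clock that was reset to an old default date vs. a sane clock.
reset_clock = datetime(2001, 1, 1, tzinfo=timezone.utc)
sane_clock = datetime(2024, 6, 1, tzinfo=timezone.utc)

print(clock_within_validity(NOT_BEFORE, NOT_AFTER, reset_clock))
print(clock_within_validity(NOT_BEFORE, NOT_AFTER, sane_clock))
```

A client whose clock sits before the notBefore date sees the certificate as not yet valid, which would fit the symptom described above.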

-

Schedule consecutive tasks posted in FOG Problems

Is it possible to schedule a snapin task followed by a capture task? I tried to add a snapin task and then a capture task, but the capture task overrode the snapin task. The snapin is a PowerShell script that clears the Event Viewer logs, and the time it takes can vary. The way I normally do it, the PC shuts down after the logs are cleared so it is ready for a capture. The goal is to clear the logs, reboot, then begin the capture, automatically.

-

Snap-in: Run a powershell script prior to capture posted in General

I have no experience with snapins and was wondering how I can use them to run a PowerShell script before rebooting to capture. I have a script that clears the Windows logs and (right now) shuts the computer down at the very end, preparing it for manual capture. I have the FOG client installed on my test system.

:EDIT: Sorry I think I figured it out.

Might as well think out loud in case anyone else can use this info. I got the snapin to run my clearalllogs.ps1 script, now the next challenge is to have the script run then immediately after it reboots, start the capture.

-

RE: Configure FOG Server with two NICs posted in General

@george1421 I see the set fog-ip variable and the set storage-ip variable. Could I set those two variables to the IPs of different interfaces? So for example, the IP of the interface serving iPXE will be the “set fog-ip” var and the IP of the interface serving NFS will be the “set storage-ip” var. Would that work?

-

RE: Configure FOG Server with two NICs posted in General

@george1421 Even if I configure the storage group with one subnet and tftp with another?

-

Configure FOG Server with two NICs posted in General

Here’s the scenario I’d like to hash out. I want to virtualize FOG with the following config:

The VM server will have two NICs. Is it possible to serve TFTP on two interfaces, one of which will also host DHCP on the internal VM network? The other interface will function normally, as if configured to work with an existing DHCP server on the prod net. I’m trying to find ways to speed up imaging and reduce prod network load when imaging a set of VMs on the same Hyper-V server.

-

RE: HyperV Gen1 Hangs on iPXE Initializing Devices posted in Tutorials

I know this is an old post, but I too have issues booting Gen 1 Hyper-V with iPXE. Turns out the message I get (error 1c25e002) is related to a bug in iPXE when used with Gen 1 Hyper-V. I tried recompiling as you suggested but it didn’t make a difference. The error appears right after default.ipxe is downloaded, during the boot.php download, and says “Invalid Argument”. Works fine on VBox via legacy boot though.

-

Limit disk space that FOG can use posted in General

Is it possible to limit the amount of disk space that FOG can use without partitioning? I built a new Hyper-V server with about 13TB that will host VMs, FOG included, and I will be mounting the images from the host to the VM with an internal switch via SMB. I understand that the FOG UI will show total size available wherever the /images is located, even if you have a network share mounted on /images. So without partitioning the volume, is there a way to tell FOG to limit the available space to use for images?

-

RE: Very slow imaging performance on XCP-NG guest vm posted in General Problems

@Milheiro Zstd level is the compression level, right? That I’m aware of. It makes sense that increasing compression would make capture/deploy take longer. What I was experiencing was Linux kernel-level errors, only when deploying to a virtual disk that resides on the XenServer’s local storage array. I wonder if it is something like TrueNAS, where it performs worse when you let the storage controller handle the array vs. a soft RAID controlled by the OS.

-

RE: Very slow imaging performance on XCP-NG guest vm posted in General Problems

@Sebastian-Roth The devel kernel version 6.1.22 didn’t help, BUT, I was using the server’s local storage to store the virtual disk. I mounted an SMB share to the xen server, stored the virtual disk on that, voila, no more errors.

Conclusion: Nothing to do with FOG.

-

RE: Very slow imaging performance on XCP-NG guest vm posted in General Problems

@Sebastian-Roth FOG version 1.5.10. Not sure how to find the FOS kernel version. Are you referring to the bzImage kernel version? If so the kernel version I’m on is 5.15.93.

-

Suggestion for install.sh script posted in FOG Problems

I see that the install script still tries to set up tftpd-hpa. I have my FOG set up as a next-server, and my DHCP server forwards packets to it. I use dnsmasq instead of tftpd-hpa. The installer errors out at the very end with

    Mar 29 20:08:29 fogserver systemd[1]: Starting LSB: HPA's tftp server...
    Mar 29 20:08:29 fogserver tftpd-hpa[3413975]: * Starting HPA's tftpd in.tftpd
    Mar 29 20:08:29 fogserver systemd[1]: tftpd-hpa.service: Control process exited, code=exited, status=71/OSERR
    Mar 29 20:08:29 fogserver systemd[1]: tftpd-hpa.service: Failed with result 'exit-code'.
    Mar 29 20:08:29 fogserver systemd[1]: Failed to start LSB: HPA's tftp server.

TFTP still serves files via dnsmasq though. To be honest I forgot why I moved to dnsmasq, it’s been so long. Is it possible to mod/update the install script to ask if you want to use tftpd or dnsmasq?

-

Very slow imaging performance on XCP-NG guest vm posted in General Problems

Not a critical issue as I use XCP-NG to image vms for testing the images. Was just wondering if anyone else has this issue.

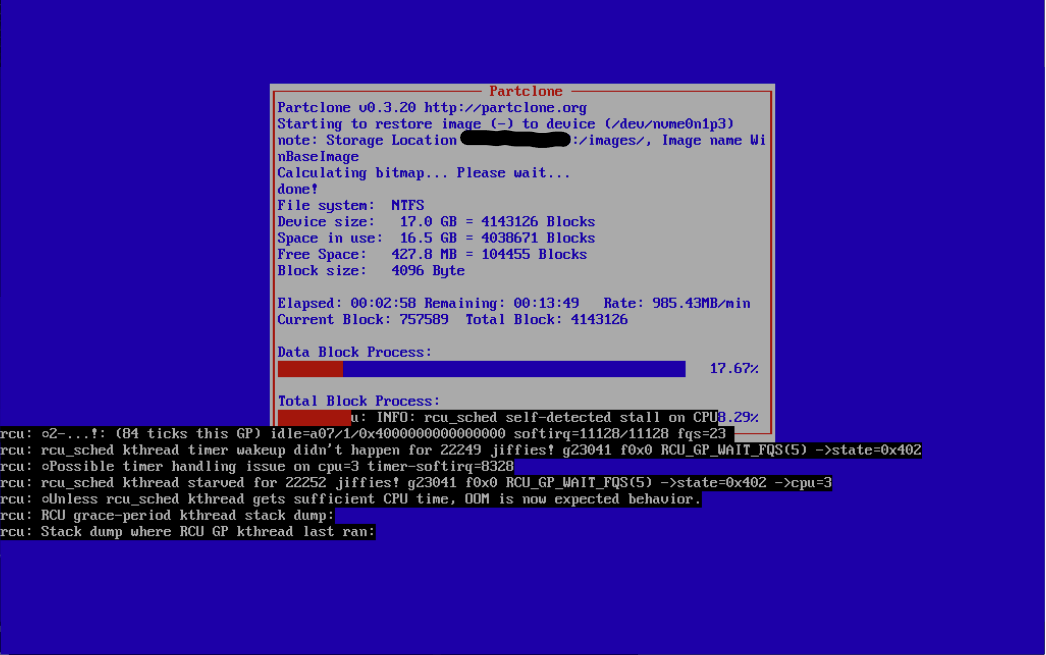

Whenever I deploy an image to a VM, it crawls down to less than 1GB/min with several delays between screen updates. Eventually I see these messages appear:

The host is a Dell PowerEdge R710. I give the guest 4 cores and 8GB RAM. I tried 1 socket with 4 cores and also 4 sockets with 1 core each. The network connection is 1Gbps. I tried imaging the same VM using Acronis True Image, which took about 8min. If I deploy an image with FOG on the same VM, the process can take more than 20min.
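
For a sense of scale, here is a rough back-of-the-envelope calculation in Python using the numbers above. It assumes decimal gigabytes and ignores protocol overhead and image compression, so treat the result as a ballpark only; the function name `link_ceiling_gb_per_min` is just for illustration.

```python
def link_ceiling_gb_per_min(link_gbps: float) -> float:
    """Theoretical maximum transfer rate of a link in GB per minute.

    Uses decimal units (1 GB = 1e9 bytes) and ignores protocol overhead.
    """
    bytes_per_second = link_gbps * 1e9 / 8
    return bytes_per_second * 60 / 1e9


ceiling = link_ceiling_gb_per_min(1.0)  # 1Gbps link from the post
observed = 1.0                          # "less than 1GB/min", so an upper bound
slowdown = 20 / 8                       # FOG (20+ min) vs. Acronis (about 8 min)

print(f"1Gbps ceiling: {ceiling:.1f} GB/min")
print(f"Observed rate is at most {observed / ceiling:.0%} of the ceiling")
print(f"FOG deploy took at least {slowdown:.1f}x as long as Acronis")
```

So the link itself should allow roughly 7.5GB/min, which suggests the network is not the limiting factor here.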

-

RE: ipxe boot slow after changing to HTTPS posted in FOG Problems

@Sebastian-Roth said in ipxe boot slow after changing to HTTPS:

never got UEFI PXE booting to work in vbox on Linux

Even when using the Paravirtualized Network Adapter in VBox?

-

RE: ipxe boot slow after changing to HTTPS posted in FOG Problems

@Sebastian-Roth said in ipxe boot slow after changing to HTTPS:

The default on Linux virtualbox: Intel PRO/1000 MT Desktop (82540EM)

Hmm. I’m assuming you’re booting legacy PXE instead of UEFI, since UEFI PXE boot on VBox requires the Paravirtualized Network Adapter (virtio). Not that it made a difference for me, at least once iPXE is loaded.

-

RE: ipxe boot slow after changing to HTTPS posted in FOG Problems

@Sebastian-Roth Cool thanks, much appreciated. By the way, this isn’t an operation-breaking critical issue, so take your time.