Thanks so much for the info, guys!

Posts

-

Install/Update Database Schema? (posted in FOG Problems)

FOG Version: 1.5.9-RC2

OS: Debian 10 Buster

Hi all,

I am attempting to update my FOG server cluster to 1.5.10, and after doing a `git pull` and reinstalling FOG, the installer says the following:

* You still need to install/update your database schema.
* This can be done by opening a web browser and going to: http://10.15.0.2/fog/management
* Press [Enter] key when database is updated/installed.

After navigating to the FOG Dashboard, I see nothing that suggests that there is an update to the database schema. Am I missing something?

I DO however notice that my nodes under the “Node Disk usage” section now say “(Unauthorized)” next to them, so that’s new and maybe suggests that something is off.

I would love some expertise here if anyone is willing to help.

Thanks very much in advance!

-

RE: Cannot delete image off of all nodes. Type 2 FTP Error... (posted in FOG Problems)

@Sebastian-Roth Thanks for the reply, Sebastian!

Yeah, it appears that only one of the nodes is exhibiting this problem.

Another interesting thing I found out is that when I ask the FOG dashboard to delete an image off the Master and all Nodes, it throws the error that I showed, but it actually does delete the image off of the Master and the other two Nodes. What’s weird is that even though that image now exists on only one storage node, it still shows on the FOG dashboard and when you boot a host into FOG.

So I think manually deleting it using `rm` would not trigger the master to recopy it in this scenario. If I wanted to do this, would I just delete the entire directory? Or would I just delete the `d1p1.img` file? I feel like the entire directory, right?

Hoping imaging slows down soon so I can do this update.

Speaking of, is doing an update just running the following commands?:

cd /fogproject

git checkout dev-branch

git pull

sudo ./installfog.sh

Is there anything else to it? Aside from backing up the FOG database?
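For what it’s worth, the update steps listed above could be wrapped in a small dry-run script like the one below. This is only a sketch under some assumptions: the database is assumed to be named `fog`, `BACKUP_DIR` is a made-up location, and the installer is assumed to sit in the `bin` subdirectory of the checkout, which is where the upstream repository keeps it as far as I know. With `DRY_RUN=1` it only prints what it would do.

```shell
#!/bin/sh
# Dry-run sketch of a FOG update. Paths and the database name are assumptions.
FOG_SRC="${FOG_SRC:-/fogproject}"              # git checkout, as in the post
BACKUP_DIR="${BACKUP_DIR:-/root/fog-backups}"  # hypothetical backup location
DRY_RUN="${DRY_RUN:-1}"                        # 1 = print only, 0 = execute

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

fog_update() {
    stamp="$(date +%Y%m%d)"
    run mkdir -p "$BACKUP_DIR"
    # Assumes the DB is called "fog" and credentials come from ~/.my.cnf or socket auth.
    run mysqldump --result-file="$BACKUP_DIR/fog-$stamp.sql" fog
    run git -C "$FOG_SRC" checkout dev-branch
    run git -C "$FOG_SRC" pull
    # Assumes the installer lives in bin/ inside the checkout.
    run sh -c "cd '$FOG_SRC/bin' && sudo ./installfog.sh"
}
```

Calling `fog_update` as-is prints the five commands for review; setting `DRY_RUN=0` would actually run them.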

-

RE: Cannot delete image off of all nodes. Type 2 FTP Error... (posted in FOG Problems)

Thanks very much for the replies, y’all! It’s definitely a peculiar issue and one that I have not run into with FOG.

I think it’s worth saying that this particular node is a fairly new addition to the cluster (maybe a week old?) and I haven’t had any issues with either of the other two storage nodes while being on this version of FOG. Do you think it’s worth maybe just trying a FOG OS reinstall on the storage node?

I’m honestly hesitant to update to the latest dev-branch on the Master since we are right smack in the middle of a heavy imaging production plan for the Spring at my job, but once things slow down I will back up the FOG server and attempt an update. If I can capture/deploy images but not delete them, it’s not a huge problem to deal with until things get a little less chaotic.

If I absolutely have to delete an image directory off of the nodes, would manually doing it have the same effect? Or does it have to run through the Master and FTP to do it correctly?
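If a manual delete does turn out to be necessary, a guarded `rm` along these lines would at least avoid removing the wrong path. This is a rough sketch: `remove_image_dir` is a made-up helper name, and `/images` as the image store is taken from the replication log in another thread. It only removes files on the node it runs on and does not touch the image definition in the FOG database.

```shell
#!/bin/sh
# Remove one image directory below an image store, with basic sanity checks.
# Usage: remove_image_dir /images WidexUSA-840G3
remove_image_dir() {
    root="$1"
    name="$2"
    # Refuse empty names, "." / ".." and anything containing a slash.
    case "$name" in
        ""|.|..|*/*)
            echo "refusing suspicious image name: '$name'" >&2
            return 1
            ;;
    esac
    if [ ! -d "$root/$name" ]; then
        echo "no such image directory: $root/$name" >&2
        return 1
    fi
    rm -rf -- "$root/$name"
}
```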

-

RE: Cannot delete image off of all nodes. Type 2 FTP Error... (posted in FOG Problems)

Just issuing an update here:

I was able to connect to my FOG node using FileZilla and typing in the IP address and FTP user/password as listed on the Storage Management section of the FOG Dashboard so I feel like it isn’t a password issue.

So, that tells me that it may be something related to permissions? There’s no firewall rules set on any of these subnets so that shouldn’t be the issue either.

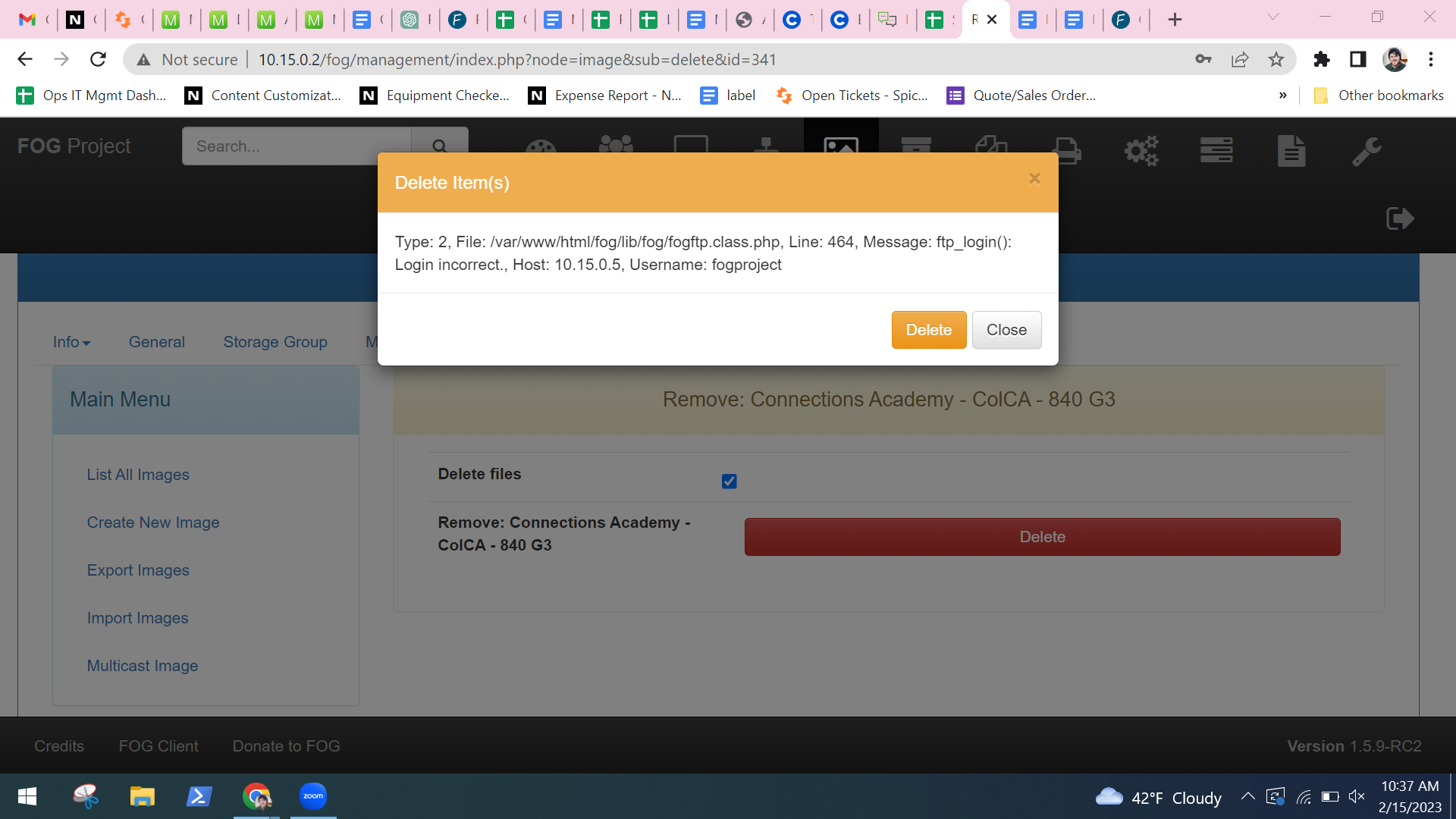

Here’s a screenshot of the error I’m getting:

Any ideas anyone?

Thanks in advance! -

Cannot delete image off of all nodes. Type 2 FTP Error... (posted in FOG Problems)

Hello all,

OS: Debian

Fog Version: 1.5.9-RC2

I am getting the following error when I try to delete an image off of every node using the FOG Dashboard:

Type: 2, File: /var/www/html/fog/lib/fog/fogftp.class.php, Line: 464, Message: ftp_login(): Login incorrect., Host: 10.15.0.5, Username: fogproject

I’m not quite sure which login they’re referring to, because the only login they’re asking for is the one that I use to log into the FOG dashboard and that one is correct. Could they maybe be referring to the SQL database password?
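Since the message comes from `ftp_login()` and names a host and a username, one way to narrow it down might be to pull those two fields out and try the same FTP login by hand. The sketch below assumes `curl` is installed; both function names are made up for illustration, and the password is read from an `FTP_PASS` variable so it does not end up in the command history.

```shell
#!/bin/sh
# Extract "host username" from a FOG FTP error line.
fog_ftp_error_fields() {
    printf '%s\n' "$1" |
        sed -n 's/.*Host: \([^,]*\), Username: \([^, ]*\).*/\1 \2/p'
}

# Try an FTP login against host $1 as user $2 (password taken from $FTP_PASS).
# Exits non-zero if the server rejects the login or cannot be reached.
check_ftp_login() {
    curl --silent --show-error --list-only \
        --user "$2:$FTP_PASS" "ftp://$1/" >/dev/null
}
```

For the error above, `fog_ftp_error_fields` gives `10.15.0.5 fogproject`, so the manual check would be `FTP_PASS='...' check_ftp_login 10.15.0.5 fogproject`.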

I can capture images and they propagate and deploy across all of the nodes just fine, it’s just when I try to delete them.

Any help would be greatly appreciated, thank you!

-

RE: FOG Node Propagation issues....FTP? Faulty Install? (posted in FOG Problems)

Hey everyone,

Just wanted to issue an update on this as it’s still marked as “unsolved” and I’ve done a bit of tinkering and learning here.

I ended up just cutting my losses and wiping the external storage node, reinstalling Debian, and cloning the FOG repo again to do a fresh install. In the process of doing this I noticed that on my storage node page on the dashboard, I already had a storage node set under the same IP address of the node that I was adding, but it was just disabled on the FOG dashboard, so it appears that there was an IP conflict that may have been causing some of the issues I was seeing. Maybe not though(?).

At any rate, I removed the old node and replaced it with the new one via the normal installation methods and everything has been going smoothly for the past few days.

Feel free to mark this one as “Solved”

Thanks,

-

FOG Node Propagation issues....FTP? Faulty Install? (posted in FOG Problems)

Hello,

OS: Debian 10

FOG Version: 1.5.9-RC2

I installed an additional node to my FOG Cluster today and it doesn’t appear to be propagating images correctly. I currently only have the Master Node, Node 1, and now the (new) Node 2.

Here is a snippet of my log file:

[02-06-23 8:46:44 pm] # VACA Pearson - 840 G3: No need to sync d1.original.fstypes (Node 1)
[02-06-23 8:46:44 pm] # VACA Pearson - 840 G3: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 8:46:44 pm] # VACA Pearson - 840 G3: No need to sync d1.partitions (Node 1)
[02-06-23 8:46:45 pm] # VACA Pearson - 840 G3: No need to sync d1p1.img (Node 1)
[02-06-23 8:46:47 pm] # VACA Pearson - 840 G3: No need to sync d1p2.img (Node 1)
[02-06-23 8:46:49 pm] # VACA Pearson - 840 G3: No need to sync d1p3.img (Node 1)
[02-06-23 8:46:49 pm] * All files synced for this item.
[02-06-23 8:46:50 pm] # VACA Pearson - 840 G3: No need to sync d1.fixed_size_partitions (Node 2)
[02-06-23 8:46:50 pm] # VACA Pearson - 840 G3: No need to sync d1.mbr (Node 2)
[02-06-23 8:46:50 pm] # VACA Pearson - 840 G3: No need to sync d1.minimum.partitions (Node 2)
[02-06-23 8:46:50 pm] # VACA Pearson - 840 G3: No need to sync d1.original.fstypes (Node 2)
[02-06-23 8:46:50 pm] # VACA Pearson - 840 G3: No need to sync d1.original.swapuuids (Node 2)
[02-06-23 8:46:50 pm] # VACA Pearson - 840 G3: No need to sync d1.partitions (Node 2)
[02-06-23 8:46:51 pm] # VACA Pearson - 840 G3: No need to sync d1p1.img (Node 2)
[02-06-23 8:46:51 pm] # VACA Pearson - 840 G3: File size mismatch - d1p2.img: 11539496891 != 2360515784
[02-06-23 8:46:51 pm] # VACA Pearson - 840 G3: Deleting remote file d1p2.img
[02-06-23 8:46:52 pm] # VACA Pearson - 840 G3: No need to sync d1p3.img (Node 2)
[02-06-23 8:46:52 pm] | CMD: lftp -e 'set xfer:log 1; set xfer:log-file /opt/fog/log/fogreplicator.VACA Pearson - 840 G3.transfer.Node 2.log;set ftp:list-options -a;set net:max-retries 10;set net:timeout 30; mirror -c --parallel=20 -R --ignore-time -vvv --exclude ".srvprivate" "/images/VACAPearson-840G3" "/images/VACAPearson-840G3"; exit' -u fogproject,[Protected] 10.15.0.4
[02-06-23 8:46:52 pm] | Started sync for Image VACA Pearson - 840 G3 - Resource id #4469
[02-06-23 8:46:52 pm] * Found Image to transfer to 2 nodes
[02-06-23 8:46:52 pm] | Image Name: Visit by GES v4.20.81 - 840 G3
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.grub.mbr (Node 1)
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.mbr (Node 1)
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.original.fstypes (Node 1)
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 8:46:53 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.partitions (Node 1)
[02-06-23 8:46:54 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1p1.img (Node 1)
[02-06-23 8:46:56 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1p2.img (Node 1)
[02-06-23 8:46:58 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1p3.img (Node 1)
[02-06-23 8:46:58 pm] * All files synced for this item.
[02-06-23 8:46:58 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.fixed_size_partitions (Node 2)
[02-06-23 8:46:58 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.grub.mbr (Node 2)
[02-06-23 8:46:58 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.mbr (Node 2)
[02-06-23 8:46:58 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.minimum.partitions (Node 2)
[02-06-23 8:46:58 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.original.fstypes (Node 2)
[02-06-23 8:46:59 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.original.swapuuids (Node 2)
[02-06-23 8:46:59 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1.partitions (Node 2)
[02-06-23 8:46:59 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1p1.img (Node 2)
[02-06-23 8:46:59 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1p2.img (Node 2)
[02-06-23 8:47:00 pm] # Visit by GES v4.20.81 - 840 G3: No need to sync d1p3.img (Node 2)
[02-06-23 8:47:00 pm] * All files synced for this item.
[02-06-23 8:47:00 pm] * Found Image to transfer to 2 nodes
[02-06-23 8:47:00 pm] | Image Name: Visit GES Check-In v5.1.82 - 840 G3
[02-06-23 8:47:00 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 8:47:00 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.grub.mbr (Node 1)
[02-06-23 8:47:00 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.mbr (Node 1)
[02-06-23 8:47:00 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 8:47:00 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.original.fstypes (Node 1)
[02-06-23 8:47:00 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 8:47:01 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.partitions (Node 1)
[02-06-23 8:47:01 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1p1.img (Node 1)
[02-06-23 8:47:04 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1p2.img (Node 1)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1p3.img (Node 1)
[02-06-23 8:47:06 pm] * All files synced for this item.
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.fixed_size_partitions (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.grub.mbr (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.mbr (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.minimum.partitions (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.original.fstypes (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.original.swapuuids (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1.partitions (Node 2)
[02-06-23 8:47:06 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1p1.img (Node 2)
[02-06-23 8:47:07 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1p2.img (Node 2)
[02-06-23 8:47:07 pm] # Visit GES Check-In v5.1.82 - 840 G3: No need to sync d1p3.img (Node 2)
[02-06-23 8:47:07 pm] * All files synced for this item.
[02-06-23 8:47:07 pm] * Found Image to transfer to 2 nodes
[02-06-23 8:47:07 pm] | Image Name: Widex USA - 840 G3
[02-06-23 8:47:09 pm] # Widex USA - 840 G3: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 8:47:09 pm] # Widex USA - 840 G3: No need to sync d1.mbr (Node 1)
[02-06-23 8:47:09 pm] # Widex USA - 840 G3: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 8:47:09 pm] # Widex USA - 840 G3: No need to sync d1.original.fstypes (Node 1)
[02-06-23 8:47:09 pm] # Widex USA - 840 G3: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 8:47:09 pm] # Widex USA - 840 G3: No need to sync d1.partitions (Node 1)
[02-06-23 8:47:11 pm] # Widex USA - 840 G3: No need to sync d1p1.img (Node 1)
[02-06-23 8:47:21 pm] # Widex USA - 840 G3: No need to sync d1p2.img (Node 1)
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1p3.img (Node 1)
[02-06-23 8:47:33 pm] * All files synced for this item.
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1.fixed_size_partitions (Node 2)
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1.mbr (Node 2)
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1.minimum.partitions (Node 2)
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1.original.fstypes (Node 2)
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1.original.swapuuids (Node 2)
[02-06-23 8:47:33 pm] # Widex USA - 840 G3: No need to sync d1.partitions (Node 2)
[02-06-23 8:47:34 pm] # Widex USA - 840 G3: No need to sync d1p1.img (Node 2)
[02-06-23 8:47:34 pm] # Widex USA - 840 G3: File size mismatch - d1p2.img: 22242172849 != 5294692168
[02-06-23 8:47:34 pm] # Widex USA - 840 G3: Deleting remote file d1p2.img
[02-06-23 8:47:36 pm] # Widex USA - 840 G3: No need to sync d1p3.img (Node 2)
[02-06-23 8:47:36 pm] | CMD: lftp -e 'set xfer:log 1; set xfer:log-file /opt/fog/log/fogreplicator.Widex USA - 840 G3.transfer.Node 2.log;set ftp:list-options -a;set net:max-retries 10;set net:timeout 30; mirror -c --parallel=20 -R --ignore-time -vvv --exclude ".srvprivate" "/images/WidexUSA-840G3" "/images/WidexUSA-840G3"; exit' -u fogproject,[Protected] 10.15.0.4

Here it appears to be syncing the images between the two nodes, but it has the following text after the last line it tries to sync on Node 2:

CMD: lftp -e 'set xfer:log 1; set xfer:log-file /opt/fog/log/fogreplicator.<image name>.transfer.Node 2.log;set ftp:list-options -a;set net:max-retries 10;set net:timeout 30; mirror -c --parallel=20 -R --ignore-time -vvv --exclude ".srvprivate" "/images/<image name>" "/images/<image name>"; exit' -u fogproject,[Protected] 10.15.0.4

Here is another snippet of the same log file later in the log (check the timestamp):

[02-06-23 9:33:30 pm] | Image Name: ! Dell 7480
[02-06-23 9:33:30 pm] # ! Dell 7480: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 9:33:30 pm] # ! Dell 7480: No need to sync d1.mbr (Node 1)
[02-06-23 9:33:30 pm] # ! Dell 7480: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 9:33:30 pm] # ! Dell 7480: No need to sync d1.original.fstypes (Node 1)
[02-06-23 9:33:30 pm] # ! Dell 7480: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 9:33:30 pm] # ! Dell 7480: No need to sync d1.partitions (Node 1)
[02-06-23 9:33:31 pm] # ! Dell 7480: No need to sync d1p1.img (Node 1)
[02-06-23 9:33:44 pm] # ! Dell 7480: No need to sync d1p2.img (Node 1)
[02-06-23 9:33:44 pm] * All files synced for this item.
[02-06-23 9:33:44 pm] | Replication already running with PID: 2531
[02-06-23 9:33:44 pm] * Found Image to transfer to 2 nodes
[02-06-23 9:33:44 pm] | Image Name: ! Dell E5450
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1.mbr (Node 1)
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1.original.fstypes (Node 1)
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1.partitions (Node 1)
[02-06-23 9:33:44 pm] # ! Dell E5450: No need to sync d1p1.img (Node 1)
[02-06-23 9:33:55 pm] # ! Dell E5450: No need to sync d1p2.img (Node 1)
[02-06-23 9:33:55 pm] * All files synced for this item.
[02-06-23 9:33:55 pm] | Replication already running with PID: 2548
[02-06-23 9:33:55 pm] * Found Image to transfer to 2 nodes
[02-06-23 9:33:55 pm] | Image Name: ! Dell E5480
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1.mbr (Node 1)
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1.original.fstypes (Node 1)
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1.partitions (Node 1)
[02-06-23 9:33:56 pm] # ! Dell E5480: No need to sync d1p1.img (Node 1)
[02-06-23 9:34:16 pm] # ! Dell E5480: No need to sync d1p2.img (Node 1)
[02-06-23 9:34:16 pm] * All files synced for this item.
[02-06-23 9:34:16 pm] | Replication already running with PID: 2585
[02-06-23 9:34:16 pm] * Found Image to transfer to 2 nodes
[02-06-23 9:34:16 pm] | Image Name: ! Dell E7470
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1.mbr (Node 1)
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1.minimum.partitions (Node 1)
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1.original.fstypes (Node 1)
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1.original.swapuuids (Node 1)
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1.partitions (Node 1)
[02-06-23 9:34:17 pm] # ! Dell E7470: No need to sync d1p1.img (Node 1)
[02-06-23 9:34:32 pm] # ! Dell E7470: No need to sync d1p2.img (Node 1)
[02-06-23 9:34:32 pm] * All files synced for this item.
[02-06-23 9:34:32 pm] | Replication already running with PID: 2603
[02-06-23 9:34:32 pm] * Found Image to transfer to 2 nodes
[02-06-23 9:34:32 pm] | Image Name: ! HP Elitebook 840 G3
[02-06-23 9:34:33 pm] # ! HP Elitebook 840 G3: No need to sync d1.fixed_size_partitions (Node 1)
[02-06-23 9:34:33 pm] # ! HP Elitebook 840 G3: No need to sync d1.mbr (Node 1)

Here it’s only syncing to Node 1. It just stops syncing to Node 2. Weird, right?
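To make a pattern like this easier to spot without reading the whole log, something along these lines might help. It is only a sketch: it assumes the log file has one entry per line (as the replicator writes it) and that the node name is the last thing in parentheses on each "No need to sync" line. Both function names are made up.

```shell
#!/bin/sh
# Lines where the replicator found a size difference on the remote side.
size_mismatches() {
    grep -F 'File size mismatch' "$1"
}

# Count "No need to sync" checks per node, to see whether a node drops out.
checks_per_node() {
    awk -F'[()]' '
        /No need to sync/ { n[$(NF-1)]++ }
        END { for (k in n) print k ": " n[k] }
    ' "$1" | sort
}
```

Pointed at `/opt/fog/log/fogreplicator.log`, `checks_per_node` prints one line per node with the number of files checked, and `size_mismatches` lists the `d1p2.img` entries seen in the first snippet.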

I did see in a previous log that there was a `Type: 1024, File: /var/www/fog/lib/fog/fogftp.class.php, Line: 219, Message: FTP connection failed` error, which has not popped up again… but that doesn’t sound good!

I will say that I had issues during the installation of this node, if you reference this post, but I just reinstalled and it worked, so I didn’t think anything of it.

Also, the FOG dashboard is running REALLY slow when I try to load it. If I disable the new node that I just installed it seems to run quicker. Does that make sense to anybody? Does it need to have a solid FTP connection or be able to smoothly communicate to all nodes in order to load the FOG Dashboard? I’ve seen that before where the FOG dash is slow and just shutting off one of the problematic nodes seems to make it run a lot faster. Just curious.

Any help or insight would be supremely appreciated. I feel like I may just need to cut my losses, wipe the machine, and reinstall it as a new node again, but I just wanted to run it by some of the folks here to see if there are any ideas…

Thanks in advance!

-

RE: Fog Storage Node Installation failed: cp cannot stat '/tftpboot/*' : No such file or directory (posted in FOG Problems)

Update:

Trying the installation again worked.

¯_(ツ)_/¯

-

Fog Storage Node Installation failed: cp cannot stat '/tftpboot/*' : No such file or directory (posted in FOG Problems)

Hello,

OS: Debian 10

FOG Master Version: 1.5.9-RC2

I am attempting to add a storage node to my existing FOG network and after following all the prompts, during the installation I was hit with this error:

It seems to be failing on the step

* Compiling iPXE binaries trusting your SSL certificate......Failed!

And then I get a notification that something caused the FOG installation to fail and I get the following error:

cp: cannot stat '/tftpboot/*': No such file or directory

Any ideas anyone? Should I just try a fresh installation again?

-

RE: Images suddently not replicating to storage nodes from Master Node (posted in FOG Problems)

@sebastian-roth Thank you very much for the response. Sorry for the delay, I was on a time crunch for delivering this image and I did not have enough time to continue troubleshooting. I ended up just deleting the image and recapturing, and it replicated afterwards. Hopefully it was just a fluke. But I know how to check the replication log file now!

Thanks again for taking the time to respond.

-

RE: Images suddently not replicating to storage nodes from Master Node (posted in FOG Problems)

The images are Q1 2021 - Connect Academy TX - HP and Q1 2021 - Connect Academy TX - Len

[03-15-21 1:10:18 pm] * All files synced for this item.
[03-15-21 1:10:19 pm] * Found Image to transfer to 12 nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] | Replication already running with PID: 22051
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - HP
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Found Image to transfer to 12 nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - Len
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - Len
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - Len
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] | Replication already running with PID: 28711
[03-15-21 1:10:19 pm] | Replication already running with PID: 22078
[03-15-21 1:10:19 pm] | Replication already running with PID: 28714
[03-15-21 1:10:19 pm] | Replication already running with PID: 28718
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - Len
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] | Replication already running with PID: 28748
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - Len
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] * Not syncing Image between nodes
[03-15-21 1:10:19 pm] | Image Name: Q1 2021 - Connect Academy TX - Len
[03-15-21 1:10:19 pm] | File or path cannot be reached.
[03-15-21 1:10:19 pm] | Replication already running with PID: 28758
[03-15-21 1:10:19 pm] | Replication already running with PID: 28769
[03-15-21 1:10:19 pm] * Found Image to transfer to 12 nodes

It seems to be saying the file or path cannot be reached. I can ping the nodes and the Master. I don’t understand what could possibly be going on. It was just with these images and it just happened this morning for the first time.
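A quick way to see which images are affected, and how often, could be to count the "File or path cannot be reached" entries per image name. The sketch below assumes the log has one entry per line and that each failure line is preceded by the matching `| Image Name:` line, as in the snippet above; the function name is made up.

```shell
#!/bin/sh
# Count "File or path cannot be reached" entries per image in a replicator log.
unreachable_per_image() {
    awk '
        /[|] Image Name: / {
            sub(/.*[|] Image Name: /, "")   # keep only the image name
            image = $0
        }
        /File or path cannot be reached/ { count[image]++ }
        END { for (i in count) print i ": " count[i] }
    ' "$1" | sort
}
```

Usage would be `unreachable_per_image /opt/fog/log/fogreplicator.log`, which prints one line per image with its failure count.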

-

RE: Images suddently not replicating to storage nodes from Master Node (posted in FOG Problems)

@sebastian-roth Thank you for your reply!

Please excuse my ignorance on this one. Would it be `tail -f /opt/fog/log/fogreplicator.log` to get the full log? It seems like I can only get the head or the tail. Is there a command that would help me do this?

And yes, each node has about 70%-80% free space!
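For reference, `tail -f` only shows the last few lines and then follows new ones, while `less` or `cat` show the file from the beginning. A small sketch, assuming the log lives at `/opt/fog/log/fogreplicator.log`; the `entries_for` helper is made up and just filters the whole file for one image name.

```shell
#!/bin/sh
LOG="${LOG:-/opt/fog/log/fogreplicator.log}"

# Page through the whole file:      less "$LOG"
# Dump the whole file to a copy:    cat "$LOG" > fogreplicator-copy.log

# Print every entry that mentions the given text, e.g. an image name.
# Usage: entries_for "Widex USA - 840 G3" [logfile]
entries_for() {
    grep -F -- "$1" "${2:-$LOG}"
}
```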

-

Images suddently not replicating to storage nodes from Master Node (posted in FOG Problems)

FOS: 1.5.8

OS: Debian 10

I captured three new images on Friday evening and none of them replicated to any of my 10 storage nodes. I have had issues in the past where I’ve had to compare checksums and delete the `d1p1.img` files to re-propagate them, but in this case, the entire image directory did not transfer to any of the nodes.

The images were all set to “replicate” and there is only one storage group. I have rebooted all the machines and Master can ping all of the nodes and vice versa.
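For the checksum comparison mentioned above, one option is to produce a sorted checksum list per image directory on the master and on a node, and then diff the two outputs. This is a sketch that assumes `sha256sum` from coreutils is available; `image_checksums` is a made-up name, and hashing large `.img` files will take a while.

```shell
#!/bin/sh
# Print "checksum  ./file" for every file in an image directory, sorted by name,
# so the output from two machines can be compared with diff.
image_checksums() {
    ( cd "$1" && find . -type f -exec sha256sum {} + | sort -k2 )
}
```

Running it against the same image directory on both machines and saving each output to a file gives two lists that `diff` can compare line by line.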

Is there any other info I can post to this topic to help everyone understand this problem?

Has anyone run into this issue before?

Thanks very much, y’all are great!

-

RE: Automate Snapins to run after deployment? (posted in General)

@george1421 said in Automate Snapins to run after deployment?:

@danieln FOG has a plug-in module called persistent groups. This plugin allows you to create one or more template hosts. You can assign settings, snapins, printers, etc. to this template host. Then when you assign a target computer to the template group, the settings assigned to the template host are copied to the target computer.

That sounds like it’s exactly what I’m looking for. Are these persistent groups something you’d configure in the Groups tab on the FOG dashboard? If so, I can’t seem to find it.

Right now there is a bug with the persistent group where it makes the connection to the snapin and printer, but the tasks are not created to actually deploy the snapin and printer. I have a workaround for that until we can find the timing issue in the code.

I’d be curious what the workaround is, if you don’t mind sharing. This feature is not something of extreme importance, but it is something that would make my workflow much, much easier.

-

Automate Snapins to run after deployment? (posted in General)

If I have a group of hosts that I want to always deploy a specific set of drivers and software to, regardless of what they are imaged with, is there a way to have those hosts always start a Snapin pack after they’re imaged, or do I have to task them every time?

-

RE: Clients imaging despite recieving "Read ERROR: No such file or directory" and "ata1.00: failed command" errors (posted in FOG Problems)

@george1421 said

FOG (FOS) kernels can be downloaded from here https://fogproject.org/kernels/ download both the x64 and x32 bit kernels. Save the x64 as `bzImage` and the x32 as `bzImage32` (case is important). Then you can just move the files to the `/var/www/html/fog/service/ipxe` directory on the FOG server. It probably wouldn’t hurt to rename the existing ones before you move the new kernels in. You can confirm the version of the bzImage files with `file /var/www/html/fog/service/ipxe/bzImage`. It should print out the version of the kernel.

Thank you for this info! I downloaded those files, renamed them, and moved them to `/var/www/html/fog/service/ipxe`. I ended up keeping the old files and renaming them `bzImageOLD` and `bzImage32OLD` respectively. The new output of `file /var/www/html/fog/service/ipxe/bzImage` is this:

/var/www/html/fog/service/ipxe/bzImage: Linux kernel x86 boot executable bzImage, version 4.19.123 (jenkins-agent@Tollana) #1 SMP Sun May 17 01:04:09 CDT 2020, R0-rootFS, swap_dev 0x8, Normal VGA
/var/www/html/fog/service/ipxe/bzImage32: Linux kernel x86 boot executable bzImage, version 4.19.123 (jenkins-agent@Tollana) #1 SMP Sat May 16 23:59:01 CDT 2020, R0-rootFS, swap_dev 0x7, Normal VGA
/var/www/html/fog/service/ipxe/bzImage32OLD: Linux kernel x86 boot executable bzImage, version 4.19.145 (sebastian@Tollana) #1 SMP Sun Sep 13 05:43:10 CDT 2020, R0-rootFS, swap_dev 0x7, Normal VGA
/var/www/html/fog/service/ipxe/bzImageOLD: Linux kernel x86 boot executable bzImage, version 4.19.145 (sebastian@Tollana) #1 SMP Sun Sep 13 05:35:01 CDT 2020, R0-rootFS, swap_dev 0x8, Normal VGA

Version 4.19.123 is what is on the master as well as all the other nodes. I trust that FOG will use these since they’re named properly, even though the renamed old files are still in the same directory. I will run a recapture/deploy test and report back with findings.
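The swap described above (keep the old kernel under an `OLD` suffix, then drop the new one in under the expected name) could be sketched like this. `install_kernel` is a made-up helper, the `OLD` suffix simply follows the naming used in this post, and the download location in the usage line is an assumption.

```shell
#!/bin/sh
# Put a downloaded kernel in place, keeping the current one as <name>OLD.
# Usage: install_kernel /tmp/bzImage /var/www/html/fog/service/ipxe bzImage
install_kernel() {
    new="$1"        # downloaded kernel file
    dest_dir="$2"   # directory the FOG web server serves kernels from
    name="$3"       # bzImage or bzImage32 (case matters)
    if [ -e "$dest_dir/$name" ]; then
        mv -- "$dest_dir/$name" "$dest_dir/${name}OLD"
    fi
    cp -- "$new" "$dest_dir/$name"
}
```

Afterwards, running `file` on the new `bzImage` should report the kernel version, as in the output above.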

Thank you both again!

-

RE: Clients imaging despite recieving "Read ERROR: No such file or directory" and "ata1.00: failed command" errors (posted in FOG Problems)

@sebastian-roth said

Sure, taking a quick look doesn’t hurt. The 1.5.9-RC2 was a release candidate not long before 1.5.9 was released. Possibly that uses a different kernel - depends on when it was installed.

You should be able to get the kernel version by running the following command on all your nodes: `file /var/www/html/fog/service/ipxe/bzImage*` (the `*` will include the 64 bit and 32 bit kernel - the output should show kernel version 4.19.x or possibly 5.6.x.)

Well I’ll be damned. The kernel on the master and all of the working nodes is version 4.19.123 and the problematic node’s kernel is version 4.19.145. You think that may be what’s causing the issue? But why would some of the other images be working fine then?
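To compare kernel versions across several nodes without reading the full `file` output each time, the version number can be cut out with `sed`. This is a sketch that assumes the output has the `bzImage, version X.Y.Z` form; `kernel_version` is a made-up name and reads the `file` output on standard input.

```shell
#!/bin/sh
# Extract the kernel version from the output of `file` on a bzImage.
kernel_version() {
    sed -n 's/.*bzImage, version \([0-9][0-9.]*\).*/\1/p'
}
```

On a node this would be `file /var/www/html/fog/service/ipxe/bzImage | kernel_version`. Running the same pipeline on each node makes a mismatch like 4.19.123 versus 4.19.145 easy to see.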

At any rate, is there an easy way to update this kernel without having to do a full reinstall?

-

RE: Clients imaging despite recieving "Read ERROR: No such file or directory" and "ata1.00: failed command" errors (posted in FOG Problems)

@sebastian-roth said

you’re thinking it’s more along the lines of hardware issues with the laptop and not with FOS or the Node itself? I feel like it only throws those ATA errors when connecting to that one node, but I could be wrong. Maybe that’s the next thing I’ll test.

Yes I would say it’s very unlikely to be caused by FOS or the node unless you have different FOG/kernel versions installed.

I’d like to circle back around to this to get some more clarification. My master node has version 1.5.9-RC2 and this particular node (as well as all the other nodes) has version 1.5.9. I’m unsure what the differences between RC2 and the 1.5.9 release are, but do you think this is something worth investigating?