@Sebastian-Roth Thanks for the update! Again, this feature for us is more of a “nice to have” than an essential, so no immediate rush. I know all of y’all are busy!

Best posts made by danieln

-

RE: [FOG 1.5.10] - Log Viewer displaying blank drop down menu (posted in Bug Reports)

-

RE: Assigning Snapins to Hosts via FOG API – Proper JSON Structure and Method? (posted in General Problems)

I saw this a while ago! Pretty impressive. Right now, we’re working to integrate the FOG API into Oracle NetSuite, but I do use PowerShell quite a lot as well and could definitely see a benefit here. I will check it out.

Thanks,

-

RE: Images suddenly not replicating to storage nodes from Master Node (posted in FOG Problems)

@sebastian-roth Thank you very much for the response. Sorry for the delay; I was on a time crunch to deliver this image and didn’t have enough time to continue troubleshooting. I ended up just deleting the image and recapturing it, and it replicated afterwards. Hopefully it was just a fluke. But I know how to check the replication log file now!

Thanks again for taking the time to respond.

Latest posts made by danieln

-

RE: "Windows Other" vs "Windows 10" when creating new Windows 11 images? (posted in General)

@george1421 Great, thanks for the info!

-

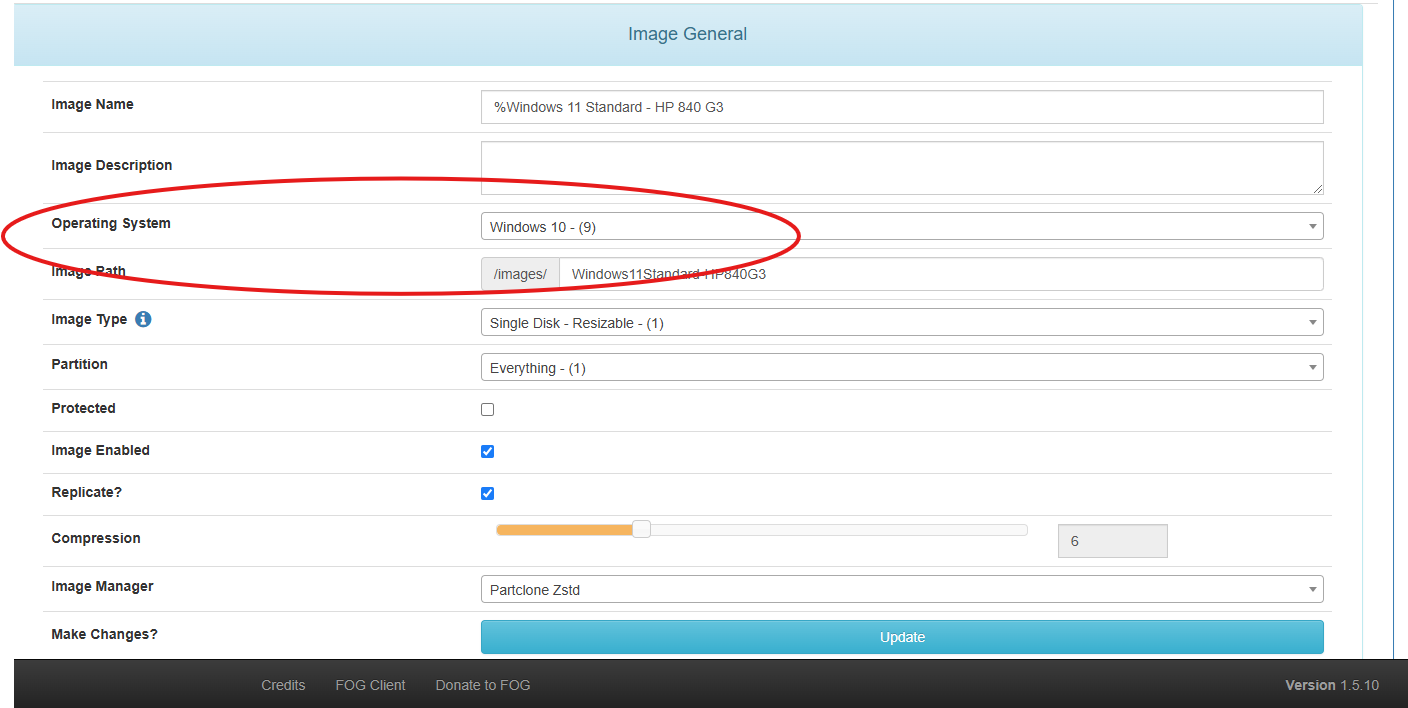

"Windows Other" vs "Windows 10" when creating new Windows 11 images?posted in General

Server OS: Debian 10 Buster

FOG OS: v1.5.10

Hey all,

I have started successfully capturing and deploying Windows 11 images with my FOG server, and I noticed that when you create a new image, “Windows 10 - (9)” is the default for the Operating System selector:

Would there be any benefit to changing this to “Windows Other - (4)”? It appears to be working without any issues, so I’m not going to second-guess it too much; I was just curious.

Thanks in advance!

-

RE: SNAP INS. Let's talk about them... (posted in FOG Problems)

Yeah, this was my suspicion, and I see both sides of it. Sounds like I’ll have to either drastically simplify some of my install scripts and make some sacrifices, or figure out another solution.

I appreciate the response and confirmation!

-

RE: SNAP INS. Let's talk about them... (posted in FOG Problems)

Hey Tom,

You’re right, this is usually a manual task. I use a freeware Windows “pin to taskbar” utility that I have built into my Office install script; when the script is run from an elevated command prompt, it automatically pins Word, Excel, and PowerPoint to the taskbar. It’s also Office 2019 SPLA and not 365 (although we’re likely switching to that in the future).

As for the Snap Ins themselves, I could be wrong, but it really seems like the client runs each Snap In as the SYSTEM user. That’s great in theory, because SYSTEM should have the highest privileges, but it also means the Snap In runs completely in the background.

However, I have been able to get a test Snap In batch script to write a text file to the user’s desktop upon execution, but only if I include

set indicator_path=C:\Users\Admin\Desktop\

to specify where I’d like the file written. Just using %USERPROFILE%\Desktop\ doesn’t work. I can’t imagine I’m the only one on this forum using Snap Ins to execute PowerShell or batch scripts, and I just wanted to make sure I’m not missing anything.
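One likely reason the %USERPROFILE% version fails: when a process runs as the SYSTEM account, %USERPROFILE% normally resolves to the SYSTEM profile (typically C:\Windows\System32\config\systemprofile), not to a logged-in user's folder, so %USERPROFILE%\Desktop\ points at a desktop nobody sees. The Python sketch below only illustrates that fallback logic; desktop_for and its fallback_user parameter are hypothetical names, and defaulting to "Admin" simply mirrors the hard-coded path above.

```python
import ntpath

# Profile folder Windows normally assigns to the SYSTEM (LocalSystem) account.
SYSTEM_PROFILE = r"C:\Windows\System32\config\systemprofile"


def desktop_for(env: dict, fallback_user: str = "Admin") -> str:
    """Return a desktop path, avoiding the SYSTEM profile's invisible desktop.

    `env` is an environment mapping such as os.environ. `fallback_user` is a
    hypothetical parameter: the account whose desktop should be used when the
    script runs as SYSTEM ("Admin" mirrors the hard-coded path above).
    """
    profile = env.get("USERPROFILE", "")
    # ntpath.normcase lowercases and normalizes slashes, so this comparison
    # is case-insensitive, the same way Windows treats paths.
    if ntpath.normcase(profile) == ntpath.normcase(SYSTEM_PROFILE):
        profile = ntpath.join(r"C:\Users", fallback_user)
    return ntpath.join(profile, "Desktop")
```

Called as desktop_for(os.environ), this returns the real user's desktop when run interactively and the fallback account's desktop when run as SYSTEM. The fallback account still has to exist on the imaged machine.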

To be fair, though, the Office install script that we use is a little more complex than a simple MSI: we use Microsoft’s Office Deployment Tool to run an additional XML file off of a network share drive that has our product key baked into it. There is something about the way Snap Ins run that differs from running the script as an Administrator, and I haven’t figured out what it is yet.

-

SNAP INS. Let's talk about them... (posted in FOG Problems)

OS: Debian 10 Buster

FOG OS: 1.5.10

Hi All,

We are trying to integrate Snap Ins into our workflow for running scripts and installing applications, to make deployment more streamlined. However, I’m really struggling to get any Snapins to work properly, and I’m at a loss for what else to try. I’m hoping someone can point out whether I’m missing anything, or whether Snapins just aren’t reliable in general.

Through this process, I have also learned that Snapins run as the SYSTEM user, which makes it difficult to create scripts that require user interaction. I’ve been trying to build a script that runs a quick QC diagnostic test and lets the person imaging the computer interact with it, or at least see the test results in a PowerShell window, but no luck so far. It sounds like anything run as the SYSTEM user can’t interact with the desktop and runs completely in the background. Not the end of the world, I suppose. I may be able to figure out a workaround on that front (e.g. a snapin task that schedules a .ps1 file using the Task Scheduler on the imaged computer).

However, more recently, I’ve tried using snap ins to run a simple batch script that installs Office 2019 and pins it to the taskbar. The script downloads to the C:\Program Files (x86)\FOG\tmp directory but just sits there, and the task in the FOG Dashboard doesn’t clear. This script has always been run manually off of a share drive by the person doing the deployment, but we’re trying to automate this. I can even run the script as an Admin from C:\Program Files (x86)\FOG\tmp and it works. But from what I understand, the client is supposed to download the script from the FOG Server, run it, and then delete it. Right?

Here’s what I’ve tried so far:

- Reinstalled FOG from scratch, both on the Master Server and on storage nodes.

- Increased the memory limit (to 2048M in the www.conf file), which fixed some loading issues but didn’t solve the Snapin problems.

- Made sure Snapins were correctly defined and verified paths, passwords, and permissions.

- Snapins do get tasked, but they just don’t seem to execute on the target machines.

- Used the FOG API to automate tasks post-imaging, but Snapin execution still fails.

We’re using only Windows clients, with PowerShell scripts that ideally run from a network share. Even though Snapins get queued, they never seem to actually run. Just trying to check my own knowledge here.

I have also reviewed the log files that FOG creates, and they usually either hang at “Starting snap in” or, more rarely, report that the snapin is done while nothing actually happens on the computer.

Has anyone else experienced similar issues with Snapins? Am I missing something really obvious, or are Snapins known to be unreliable in certain setups? Any insights would be incredibly appreciated.

Thanks in advance for your help!

-

RE: Assigning Snapins to Hosts via FOG API – Proper JSON Structure and Method? (posted in General Problems)

@danieln Figured it out. Posting the answer here for posterity. This is the request that worked:

Request:

PUT http://10.15.0.2/fog/host/<FOG host ID>

JSON body:

{ "snapins": 1 }

It was "snapins" that I had to pass in the JSON body. I found this by running a general GET request for snapins, GET http://10.15.0.2/fog/snapin (another total guess that ended up being correct); the JSON response contained "snapins" with an array of objects, so I passed that key into the PUT request body and it worked. The Snapins are numbered in the order in which you create them, and you can reference them by that number to assign them.

Hope this is helpful to anyone who tries to do this in the future!
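A minimal standard-library Python sketch of the two calls described above. The endpoints and the { "snapins": 1 } body come from this post; the fog-api-token / fog-user-token header names, the "id"/"name" fields on each snapin object, and all helper names are assumptions for illustration, not confirmed FOG behaviour. The helpers only build requests; sending is a separate step, so each request can be inspected before anything hits the server.

```python
import json
import urllib.request
from typing import Optional

# Server address taken from the posts above; substitute your own FOG server.
FOG_BASE_URL = "http://10.15.0.2/fog"


def fog_request(path: str, api_token: str, user_token: str,
                method: str = "GET",
                payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a FOG API request.

    ASSUMPTION: the server authenticates via 'fog-api-token' and
    'fog-user-token' headers; check the API settings on your own install.
    """
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{FOG_BASE_URL}/{path.lstrip('/')}",
        data=data,
        method=method,
        headers={
            "Content-Type": "application/json",
            "fog-api-token": api_token,
            "fog-user-token": user_token,
        },
    )


def snapin_ids_by_name(listing: dict) -> dict:
    """Map snapin name -> numeric ID from a GET /fog/snapin response.

    ASSUMPTION: each object in the "snapins" array has "id" and "name" keys.
    """
    return {s["name"]: int(s["id"]) for s in listing.get("snapins", [])}


def assign_snapin(host_id: int, snapin_id: int,
                  api_token: str, user_token: str) -> urllib.request.Request:
    """PUT /fog/host/<id> with {"snapins": <id>}, the body reported to work above."""
    return fog_request(f"host/{host_id}", api_token, user_token,
                       method="PUT", payload={"snapins": snapin_id})


def send(request: urllib.request.Request) -> dict:
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Typical use would be to look up the ID with snapin_ids_by_name(send(fog_request("snapin", api_token, user_token))) and then call send(assign_snapin(host_id, snapin_id, api_token, user_token)). The PUT response should echo the full host object, like the one shown in the original question.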

-

Assigning Snapins to Hosts via FOG API – Proper JSON Structure and Method? (posted in General Problems)

Server OS: Debian 10 Buster

FOG OS: 1.5.10

Hey all,

I’ve been integrating the FOG API with our existing management software and have successfully used it to assign images to hosts and then queue up deployment tasks, but I’m hitting a snag trying to assign a snapin to a host. I haven’t been able to determine the right JSON structure and request type for this action, and it doesn’t appear to be in the API documentation, so I’m really just guessing at this point. Here’s what I have tried so far:

Request:

PUT http://10.15.0.2/fog/host/7700/

JSON Body:

{ "taskTypeID": 11, "snapinID": 1 }

Response:

{ "id": "7700", "name": "f43909d8047b", "description": "Created by FOG Reg on May 26, 2022, 12:02 pm", "ip": "", "imageID": "529", "building": "0", "createdTime": "2022-05-26 12:02:49", "deployed": "2024-11-19 09:25:39", "createdBy": "fog", "useAD": "", "ADDomain": "", "ADOU": "", "ADUser": "", "ADPass": "", "ADPassLegacy": "", "productKey": "", "printerLevel": "", "kernelArgs": "", "kernel": "", "kernelDevice": "", "init": "", "pending": "", "pub_key": "", "sec_tok": "", "sec_time": "2024-09-26 11:11:04", "pingstatus": "<i class=\"icon-ping-down fa fa-exclamation-circle red\" data-toggle=\"tooltip\" data-placement=\"right\" title=\"Unknown\"></i>", "biosexit": "", "efiexit": "", "enforce": "", "primac": "f4:39:09:d8:04:7b", "imagename": "! HP Elitebook 840 G3", "hostscreen": { "id": null, "hostID": null, "width": null, "height": null, "refresh": null, "orientation": null, "other1": null, "other2": null }, "hostalo": { "id": null, "hostID": null, "time": null }, "inventory": { "id": "7700", "hostID": "7700", "primaryUser": "", "other1": "", "other2": "", "createdTime": "2022-05-26 12:03:01", "deleteDate": "0000-00-00 00:00:00", "sysman": "HP", "sysproduct": "HP EliteBook 840 G3", "sysversion": "", "sysserial": "5CG83651CN", "sysuuid": "caf1b9df-b277-11e8-97a3-6c1072018059", "systype": "Type: Notebook", "biosversion": "N75 Ver. 01.57", "biosvendor": "HP", "biosdate": "07/28/2022", "mbman": "HP", "mbproductname": "8079", "mbversion": "KBC Version 85.79", "mbserial": "PFKZU00WBBA9CO", "mbasset": "", "cpuman": "Intel(R) Corporation", "cpuversion": "Intel(R) Core(TM) i5-6300U CPU @ 2.40GHz", "cpucurrent": "Current Speed: 2900 MHz", "cpumax": "Max Speed: 8300 MHz", "mem": "MemTotal: 7924528 kB", "hdmodel": "INTEL SSDSCKKF256G8H", "hdserial": "BTLA820625EQ256J", "hdfirmware": "LHFH03N", "caseman": "HP", "casever": "", "caseserial": "5CG83651CN", "caseasset": "", "memory": "7.56 GiB" }, "image": { "imageTypeID": "1", "imagePartitionTypeID": "1", "id": "529", "name": "! HP Elitebook 840 G3", "description": "", "path": "HPElitebook840G3", "createdTime": "2024-08-16 14:34:05", "createdBy": "fog", "building": "0", "size": "607121408.000000:12935051.000000:52981968896.000000:10500096.000000:5376049152.000000:176639.000000:723513344.000000:", "osID": "9", "deployed": "2024-11-18 16:16:26", "format": "5", "magnet": "", "protected": "0", "compress": "6", "isEnabled": "1", "toReplicate": "1", "srvsize": "27312695983", "os": {}, "imagepartitiontype": {}, "imagetype": {} }, "pingstatuscode": 6, "pingstatustext": "No such device or address", "macs": [ "f4:39:09:d8:04:7b", "38:ba:f8:c7:32:a1", "38:ba:f8:c7:32:a2", "3a:ba:f8:c7:32:a1", "38:ba:f8:c7:32:a5" ] }

It’s returning all the info about this host, including the Image ID, which I’ve been able to change dynamically with the API, but there doesn’t appear to be any field in the response that indicates a snapin association. It seems like my current JSON body might be incorrect, or perhaps I’m using the wrong request type or endpoint.

So, my questions to the developers are:

-

What is the correct JSON body to use in order to assign specific snapins to a host before deploying?

-

Should I be using a PUT or a POST request for this action?

Assigning an image to a host is typically done using a PUT request, so I assumed snapin assignments would follow the same convention, but this may not be the case.

-

Is there a specific endpoint that I should be using for assigning snapins to a host?

If possible, could you provide an example of the correct JSON body, endpoint, and HTTP method for assigning a snapin to a host?

According to the API documentation, this is apparently something that can be done. I have tried both "10" and "11" as the taskTypeID and had no luck.

Thank you so much for your time and assistance!

-

Question about Multicast Image Tasking Behavior with FOG API (posted in General)

Server OS: Debian 10 Buster

FOG OS: 1.5.10

Hey everyone,

I am currently using the FOG API to create multicast image deployment tasks for groups of 24 laptops at a time. I have a question about the behavior of these multicast tasks in case of failure:

If one of the laptops in the group fails during tasking or deployment, would the other 23 laptops wait indefinitely until the task is manually canceled? Or is there a mechanism that allows the rest of the group to continue or time out appropriately?

If they would wait indefinitely, I think unicast may be the way to go.

Thanks in advance!

-

RE: Using Snap Ins to run scripts post imaging...? (posted in General Problems)

@JJ-Fullmer Thanks for the reply! This is helpful.

I feel like the best way to go about it may be to assign the Snap In to each host. Just to confirm: this will deploy the Snap In every time that host is imaged, regardless of which image it receives?

I’m also thinking of separating my inventory into FOG Groups by computer model and then assigning the associated image and Snap Ins at the group level.