UEFI boot on vmware

-

I am trying to create an image on my VMware server.

However, when I try to boot from the network so that I can capture an image to my FOG server, I run into an issue.

With BIOS I could capture images, but newer laptops don’t have legacy options and I am starting to have issues.

I have a VM server and I store my images on it, so that when I need an updated image I just open the VM, run the updates or make any modifications needed, then run sysprep and back it up using FOG.

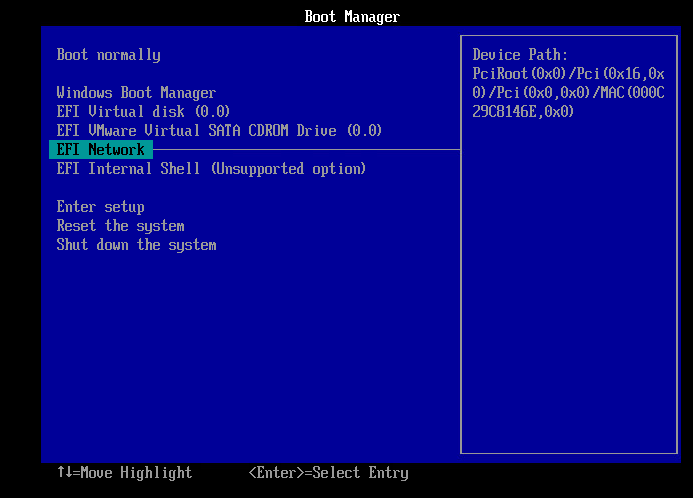

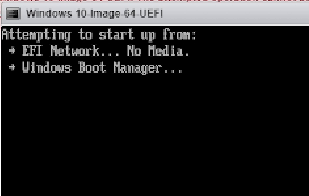

I am now trying to capture an image with UEFI. When I select EFI Network to boot from the network, it displays "Start PXE over IPv4" and then goes back to the boot menu.

Any ideas what I need to do to fix my issue?

-

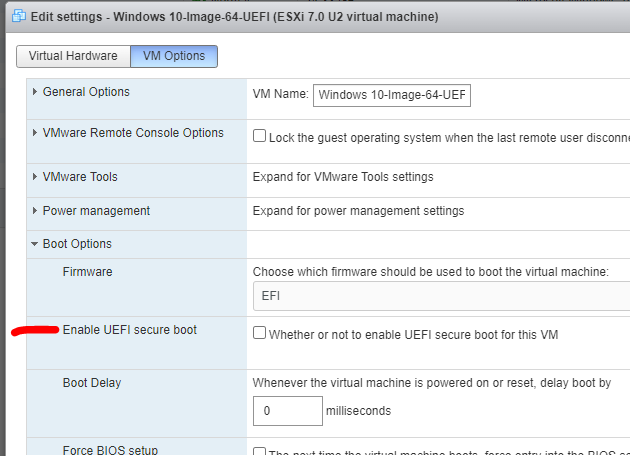

@dejv make sure that secure boot is disabled.

I do my image development work this way. So I can say it works.

Also I have been known to create an image in uefi mode, but then switch the VM to bios mode and capture the image in bios mode just to be sure I don’t miss the reboot and it starts booting the sysprep’d image instead of capturing it. M$ has a way of changing the uefi boot order to boot windows first.

-

@george1421 It’s disabled :s

-

@dejv OK so have you ever pxe booted a uefi based computer before?

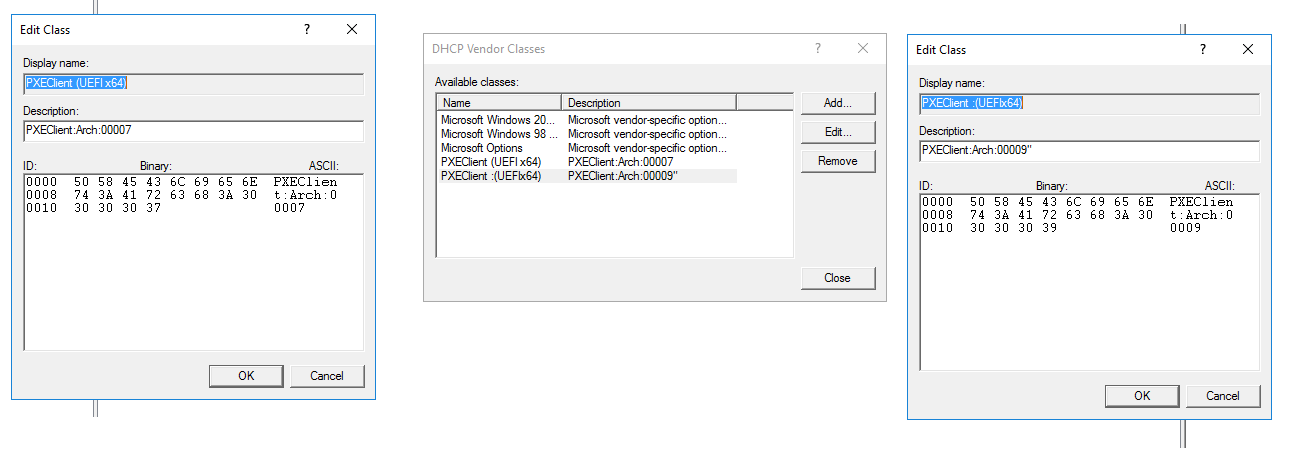

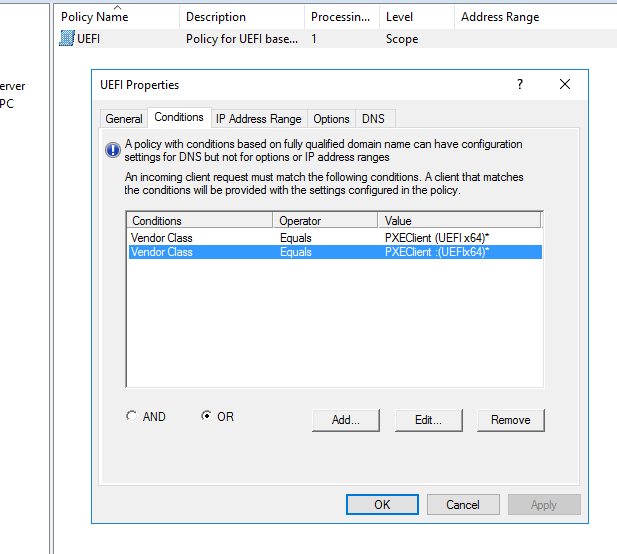

For your UEFI policy, do you have both arch type 7 and arch type 9 covered? In my setup I have an entry in DHCP for both the arch 7 and arch 9 policies.
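To illustrate why both arch types matter, here is a minimal Python sketch of the decision a DHCP policy makes for a PXE client. It is not how the Windows DHCP server is configured (that is done through policies in the DHCP console); it only models the logic. The function names and the `BOOT_FILES` table are hypothetical, the vendor class strings are assumed to follow the usual `PXEClient:Arch:NNNNN` form, and the file names are the ones mentioned in this thread.

```python
from typing import Optional

# Assumed mapping from the PXE client architecture number to a boot file.
# 0 = legacy BIOS, 7 = EFI BC, 9 = EFI x86-64 (both are seen on 64-bit UEFI).
BOOT_FILES = {
    0: "undionly.kpxe",
    7: "ipxe.efi",
    9: "ipxe.efi",
}


def arch_from_vendor_class(vendor_class: str) -> Optional[int]:
    """Extract the arch number from e.g. 'PXEClient:Arch:00007:UNDI:003016'."""
    parts = vendor_class.split(":")
    if len(parts) >= 3 and parts[0] == "PXEClient" and parts[1] == "Arch":
        try:
            return int(parts[2])
        except ValueError:
            return None
    return None


def boot_file_for(vendor_class: str, default: str = "undionly.kpxe") -> str:
    """Return the boot file a matching policy would hand out.

    If no policy matches, the scope default is used. For a UEFI client that
    default is the wrong file, which is the failure mode discussed here.
    """
    arch = arch_from_vendor_class(vendor_class)
    return BOOT_FILES.get(arch, default)
```

With only an arch 7 entry in the table, a client reporting arch 9 would fall through to the default BIOS file, which a UEFI firmware cannot run.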

Are you seeing any error after it should be loading ipxe.efi?

Are you getting this error only on a warm start? If you start the vm from a powered off state does it pxe boot? (has happened to me before, I can’t get it to pxe boot for nothing, until I power off the VM and let it sit for a few minutes).

As you can tell from the questions, the problem may be anywhere at the moment (with the exception of FOG).

-

@george1421 First time. I had arch 7 configured (probably from a few years ago when I set up FOG, but I don’t recall having used UEFI until now). I have now added arch 9.

I am getting the error on first start booting from network and also when selecting to boot from

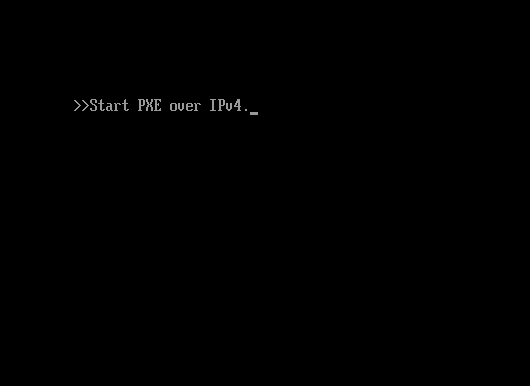

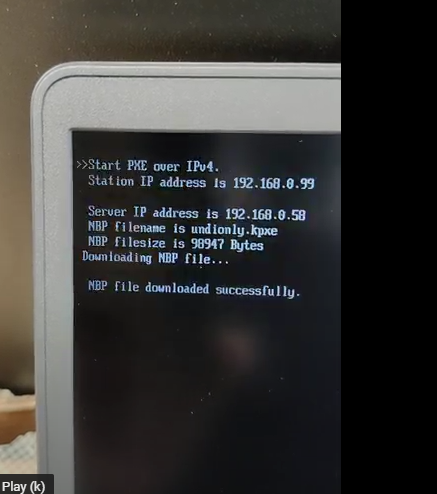

When it starts on boot from network I am getting

If I select boot from EFI Network, it says "Start PXE over IPv4" and then goes into the boot menu again.

-

@dejv Ok so I take it this is the first time for uefi on your campus. That is OK, it tells us where not to look.

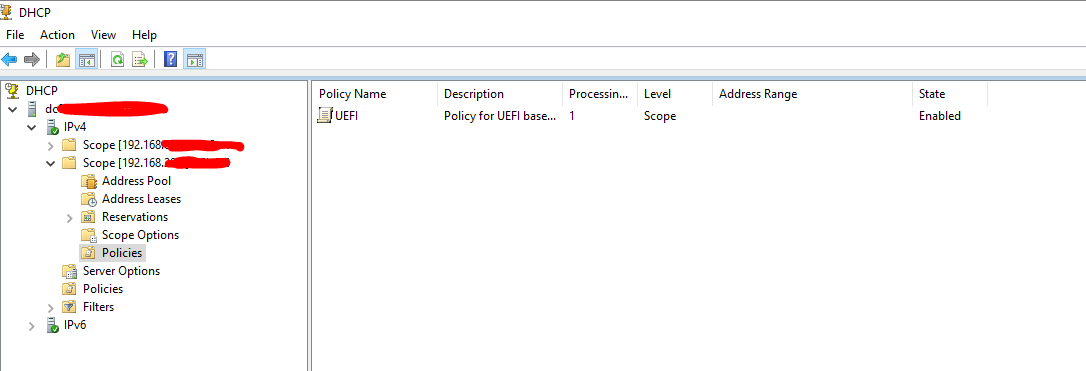

The first thing is that you need to make sure your policy in the DHCP server is activated. It’s been so long since I set it up that I don’t remember the details, but when others have issues with the policies in Windows DHCP server, it’s because they forgot to activate the policy.

Now the second bit is to try to UEFI PXE boot a real computer. This will help us eliminate VMware from the truth table.

Lastly if the fog server is on the same subnet as the pxe booting computer and the dhcp server we can use tcpdump to capture what the dhcp server is telling the pxe booting computer. That will help us spot the root of the problem. https://forums.fogproject.org/topic/9673/when-dhcp-pxe-booting-process-goes-bad-and-you-have-no-clue Upload the pcap to a file share site, share it as public and then either DM me on FOG forum or post the link here and I’ll take a look at it. Once the pcap has been reviewed you can remove it from the file share site.

I’ve ordered these from the easy to less easy so start at the top first.

-

@george1421 first of all thanks for your help :):):)

- The policy is enabled on the DHCP for the PCs.

- Booting UEFI with a real computer:

I have a Dell laptop, and after changing from RAID On to AHCI I managed to boot into the FOG menu and registered the machine. The FOG server and the laptops are on different subnets. However, the FOG server and the VMware VM that has issues booting from the network are on the same subnet.

-

@dejv said in UEFI boot on vmware:

The FOG server and the laptops are on different subnets. However, the FOG server and the VMware VM that has issues booting from the network are on the same subnet.

OK, PXE booting a physical machine works, but it is on a different subnet. So the problem could still be with DHCP and the local subnet. Let’s have you use the FOG server and tcpdump to capture a pcap (packet capture) of the PXE booting process on the VM’s subnet. You can either use tcpdump as in the article or use Wireshark on a witness computer connected to the same subnet as the FOG server and VM. If you use Wireshark, use this capture filter:

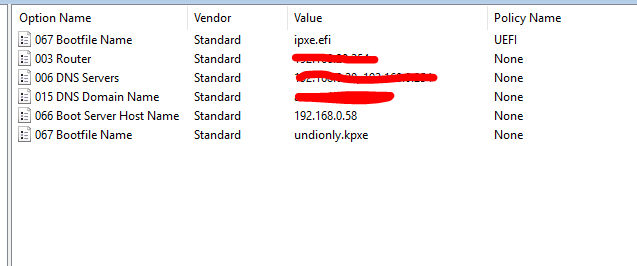

port 67 or port 68

You can either upload the pcap or review it yourself with Wireshark. If you want to self review, look at the OFFER packets coming back from the DHCP server. There is typically only one OFFER packet; if you see multiple OFFERs, you might have multiple DHCP servers on this subnet. In any case, in the DHCP OFFER, look at the BOOTP header. There should be a {boot-file} field. That needs to be ipxe.efi. Also look down in the DHCP options; there should be a DHCP option 67 value. That also needs to be set to ipxe.efi.
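For anyone who prefers to check the OFFER in a script rather than by eye, the following is a rough Python sketch, using only the standard library, that pulls the boot file field and options 53, 66 and 67 out of the raw UDP payload of a DHCP packet. The function name `parse_dhcp` and the result keys are made up for this example. Getting the payload bytes out of a pcap is left to whatever capture tool you use, and the option overload mechanism (option 52) is not handled.

```python
from typing import Dict, Optional

MAGIC_COOKIE = b"\x63\x82\x53\x63"  # marks the start of the DHCP options
FILE_OFFSET, FILE_LEN = 108, 128     # fixed "file" field of the BOOTP header
OPTIONS_OFFSET = 240                 # 236-byte header + 4-byte magic cookie


def _cstr(raw: bytes) -> str:
    """Decode a NUL-padded or NUL-terminated ASCII field."""
    return raw.split(b"\x00", 1)[0].decode("ascii", errors="replace")


def parse_dhcp(payload: bytes) -> Dict[str, Optional[object]]:
    """Extract the PXE-relevant values from a DHCP packet's UDP payload."""
    if len(payload) < OPTIONS_OFFSET or payload[236:240] != MAGIC_COOKIE:
        raise ValueError("payload does not look like a DHCP packet")

    info: Dict[str, Optional[object]] = {
        "message_type": None,  # option 53; the value 2 means OFFER
        "boot_file_field": _cstr(payload[FILE_OFFSET:FILE_OFFSET + FILE_LEN]),
        "option_66": None,     # TFTP server name
        "option_67": None,     # boot file name
    }

    i = OPTIONS_OFFSET
    while i < len(payload):
        code = payload[i]
        if code == 255:        # end option
            break
        if code == 0:          # pad option has no length byte
            i += 1
            continue
        if i + 1 >= len(payload):
            break              # truncated option, stop parsing
        length = payload[i + 1]
        value = payload[i + 2:i + 2 + length]
        if code == 53 and length == 1:
            info["message_type"] = value[0]
        elif code == 66:
            info["option_66"] = _cstr(value)
        elif code == 67:
            info["option_67"] = _cstr(value)
        i += 2 + length
    return info
```

For a UEFI client, an OFFER (`message_type` of 2) whose `boot_file_field` or `option_67` shows undionly.kpxe rather than ipxe.efi points at the DHCP policy for that subnet rather than at the FOG server.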

-

@george1421 Basically I went into the server room and connected the laptop to the server switch (which hands out an IP on the same network as the FOG server and the VM).

However it is not booting into the fog menu, it is booting into the dell diagnostics

I think the issue is that it is opening the file undionly.kpxe instead of ipxe.efi?

-

@dejv said in UEFI boot on vmware:

However it is not booting into the fog menu, it is booting into the dell diagnostics

I think the issue is that it is opening the file undionly.kpxe instead of ipxe.efi?

Good debugging step. This is kind of where the truth table was taking me. Just because it works on subnet X doesn’t mean it will work on subnet Y. It does work on subnet X, so we can rule out the FOG server as the culprit.

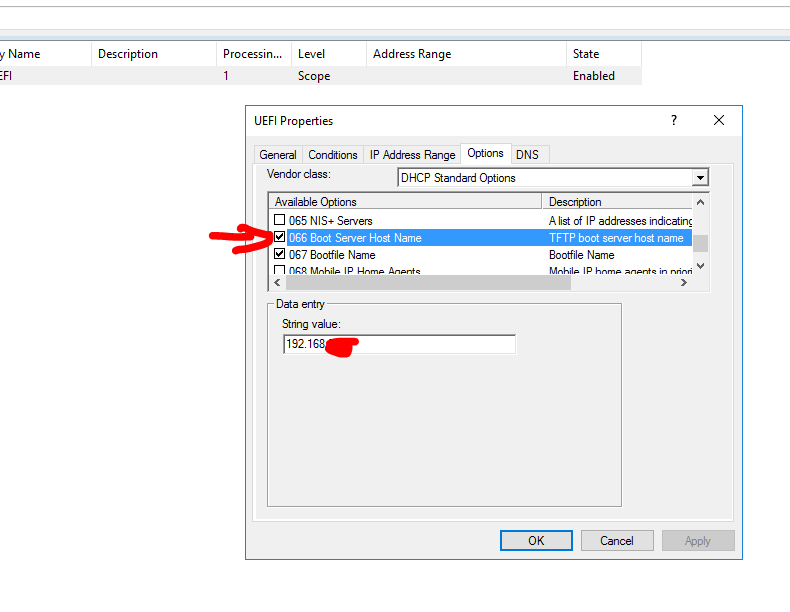

You need to ensure your policies are being applied to the subnet where the VM is running. It sounds like there is no policy, so it’s applying the default values for DHCP option 67.

-

@george1421 I am on leave and will be back on wed to try it out.

-

@george1421 Managed to get it fixed.

I followed these instructions and found out that option 066 was not selected.

https://wiki.fogproject.org/wiki/index.php?title=BIOS_and_UEFI_Co-Existence

Thanks a lot for the help and keep up the good work.

Wishing a great Christmas and New Year to all the team, and to your families and loved ones!

-

@george1421 I changed the option back from AHCI to RAID On right after the image was deployed.

However, the laptop is not booting. Is there an easy way to do this without taking too much time, or maybe some way to automate it?

-

@dejv said in UEFI boot on vmware:

I changed the option back from AHCI to RAID On right after the image was deployed.

I guess the simple question is why would you do this? Do you need raid capabilities? There is no performance gain/loss by leaving it in ahci mode.

In theory this should work (switching between AHCI mode and RAID On) before the first boot of Windows, as long as you have the needed Intel drivers onboard the target image before you capture it. Switching between the two modes actually turns different hardware on and off. That hardware presents itself as a physically different disk controller depending on the mode. AHCI mode drivers are built directly into Windows; depending on the model of hardware, the RAID drivers may not be.

-

@george1421 Mainly I wanted to select RAID On so that if a laptop has issues and loses its BIOS settings, it will still work.

For example, I have a couple of HP laptops that for some reason lose their BIOS settings if left unused for a couple of days, even if they have battery life :S