FOG Server Deployment Architecture & Stress Test Tools


Hi,

I’m using FOG version 1.5.9. Image capture, unicast and multicast are all working fine. The image is Linux-based.

Goal: Perform a stress test to find how many clients the FOG server can support simultaneously within a tolerable duration (less than 10 min).

Challenge: We only have a limited number of clients (3), but would like to stress test the server's capacity for 300 clients.

Experiment & Benchmark:

Actual clients (we can only benchmark 1-3 clients)

- Using a Gigabit CT Desktop Adapter PCIe NIC
- 1 client, 6.14 GB image, unicast: about 19 sec from fogdb.tasks.taskTimeElapsed (block size 4096)
  - Speed = 6140 MB / 19 sec = 323.16 MBps * 8 = 2585.28 Mbps
- 3 clients, 6.14 GB image, unicast: about 39 sec from fogdb.tasks.taskTimeElapsed (block size 4096)
  - Speed = 6140 MB / 39 sec = 157.44 MBps * 8 = 1259.52 Mbps
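The per-client speeds in this list can be recomputed with a small helper. This is a minimal sketch: the function name `throughput` is ours, not part of FOG, and like the figures above it treats 1 GB as 1000 MB and divides the full uncompressed image size by taskTimeElapsed.

```python
def throughput(size_mb: float, seconds: float) -> tuple[float, float]:
    """Return (megabytes per second, megabits per second) for size_mb moved in `seconds`."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    mb_per_s = size_mb / seconds
    return mb_per_s, mb_per_s * 8  # 8 bits per byte


# (label, image size in MB, taskTimeElapsed in seconds) from the benchmark above
runs = [("1 client", 6140, 19), ("3 clients", 6140, 39)]

for label, size_mb, secs in runs:
    mb_s, mbit_s = throughput(size_mb, secs)
    print(f"{label}: {mb_s:.2f} MBps = {mbit_s:.2f} Mbps per client")
```

Multiplying the unrounded MB/s value gives 2585.26 and 1259.49 Mbps; the small differences from the list come only from rounding MB/s before multiplying by 8. Note that the 3-client figure is per client: if the three deployments ran side by side, the server sent three copies of the image in that window.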

VirtualBox

Benchmark using 3 VMs on a single machine:

- 1 client, 6.14 GB image, unicast: about 34 sec from fogdb.tasks.taskTimeElapsed (block size 4096)
  - Speed = 6140 MB / 34 sec = 180.59 MBps * 8 = 1444.72 Mbps
- 3 clients, 6.14 GB image, unicast: about 57 sec from fogdb.tasks.taskTimeElapsed (block size 4096)
  - Speed = 6140 MB / 57 sec = 107.72 MBps * 8 = 861.76 Mbps

Compared to the actual clients:

- 1 client: the actual client is 1.79 times faster than the VM client
- 3 clients: the actual client is 1.46 times faster than the VM client

Therefore we cannot use VirtualBox for the stress test.

TFTP Client

- We used the client from “https://www.tftp-server.com/tftp-client.html” to download images from the TFTP server.
- We used a .bat file to trigger the downloads in parallel.

- 1 client, 4.73 GB image, block size 65465: 101 sec
  - Speed = 4730 MB / 101 sec = 46.83 MBps * 8 = 374.64 Mbps
- 3 clients, 4.73 GB image, block size 65465: 273 sec
  - Speed = 4730 MB / 273 sec = 17.33 MBps * 8 = 138.64 Mbps
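A scripted equivalent of the parallel .bat launch might look like the sketch below. It is an illustration under assumptions: `run_parallel` and `timed_run` are hypothetical helpers, and the command passed in is a placeholder for whatever TFTP or HTTP client invocation you actually use (the command-line syntax of the TFTP client above is not shown in this thread). Every copy runs from the same machine, so all copies share that machine's NIC and disk, which is one reason a single-host test cannot stand in for many real clients.

```python
import subprocess
import sys
import time
from concurrent.futures import ThreadPoolExecutor


def timed_run(cmd: list[str]) -> float:
    """Run one transfer command to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)  # raises CalledProcessError if the command fails
    return time.perf_counter() - start


def run_parallel(cmd: list[str], clients: int) -> list[float]:
    """Launch `clients` copies of cmd at once (like several `start` lines in a .bat file)."""
    with ThreadPoolExecutor(max_workers=clients) as pool:
        futures = [pool.submit(timed_run, cmd) for _ in range(clients)]
        return [f.result() for f in futures]


if __name__ == "__main__":
    # Placeholder workload: a short sleep stands in for a real download command.
    placeholder = [sys.executable, "-c", "import time; time.sleep(0.2)"]
    for seconds in run_parallel(placeholder, clients=3):
        print(f"{seconds:.2f} s")
```

Each copy is a separate process, so the per-copy durations can be fed straight into a throughput calculation.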

Compared to the actual clients:

- 1 client: the actual client is 6.90 times faster than the TFTP client
- 3 clients: the actual client is 9.09 times faster than the TFTP client

Therefore we cannot use the TFTP client for the stress test either.
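Because the TFTP test moved a 4.73 GB file while FOG deployed a 6.14 GB image, elapsed times cannot be compared directly; the "times faster" figures are ratios of throughput. A minimal sketch (the `speedup` name is ours) that reproduces both comparisons:

```python
def speedup(size_a_mb: float, secs_a: float, size_b_mb: float, secs_b: float) -> float:
    """How many times faster run A is than run B, compared by MB/s rather than by time."""
    return (size_a_mb / secs_a) / (size_b_mb / secs_b)


# Actual client (A) vs VM or TFTP client (B), using the timings reported above
comparisons = {
    "VM, 1 client": speedup(6140, 19, 6140, 34),
    "VM, 3 clients": speedup(6140, 39, 6140, 57),
    "TFTP, 1 client": speedup(6140, 19, 4730, 101),
    "TFTP, 3 clients": speedup(6140, 39, 4730, 273),
}

for name, ratio in comparisons.items():
    print(f"actual client vs {name}: {ratio:.2f}x")
```

This prints 1.79, 1.46, 6.90 and 9.09, matching the figures above.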

Question

- We are using the “Gigabit CT Desktop Adapter PCIe NIC” on the actual clients, and the port's data rate is 1000 Mbps. Based on our benchmark, unicast to 1 client and to 3 clients ran at 2585.28 Mbps and 1259.52 Mbps respectively, which is much faster than the NIC is capable of. How and why is FOG able to achieve this?

- Comparing the TFTP client with the actual clients, FOG is much faster even though its block size is much smaller than the TFTP client's. What is the architecture behind FOG that lets it download so much faster? (We also used a single Linux client with a similar NIC and network to fetch the 4.73 GB file over TFTP with the default block size. It took 1469 sec [3.22 MBps / 25.76 Mbps], which is far slower.)

- What tools or methods can we use to stress test unicast and multicast, to find the best number of clients the FOG server can support simultaneously?

Thank You

WT

@wt_101 First let me say I applaud your efforts. No one has taken the time to quantify how well FOG images or to do load/benchmark testing with FOG.

I can tell you the following from practical experience.

FOG will transfer at or around 6.1 GB/min (as shown by the speed counter on the Partclone screen; more on this below) on a well-designed 1 GbE network. I have seen it reach 13.6 GB/min where the FOG server runs on a 28-core VM host server with a 20 Gb uplink to the network core, with the distribution and access layers all at 1 GbE.
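To compare these GB/min figures with link speeds, a quick conversion helps. This sketch assumes decimal units (1 GB = 1000 MB) and 8 bits per byte; whether Partclone's counter uses decimal or binary units is not stated here, and binary units would shift the numbers by several percent.

```python
def gb_per_min_to_mbps(gb_per_min: float) -> float:
    """Convert a GB/min rate to megabits per second (decimal units)."""
    return gb_per_min * 1000 / 60 * 8


ONE_GBE_MBPS = 1000  # nominal 1 GbE line rate; real payload throughput is a bit lower

for rate in (6.1, 13.6):
    mbps = gb_per_min_to_mbps(rate)
    print(f"{rate} GB/min = {mbps:.0f} Mbps ({mbps / ONE_GBE_MBPS:.0%} of 1 GbE)")
```

A 1 GbE link tops out at 7.5 GB/min of raw data under these units, so 13.6 GB/min (about 1813 Mbps) on 1 GbE access ports only makes sense if the figure counts more than what crossed the wire.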

All of the heavy lifting is done by the target computer, not the FOG server. I can run the FOG server on a Raspberry Pi 4 and still get 6 GB/min transfer rates (single unicast image). During imaging, the FOG server only moves images from disk to the network and collects status information on the capture/deployment process.

You can saturate a 1 GbE link on the FOG server with 2-3 simultaneous unicast deployments. Because I didn't have the hardware at the time, I did not test saturating a 10 GbE link. If I had to guess, it would be around 10 simultaneous deployments; I think PCIe performance would saturate before the 10 GbE NIC would.
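The saturation point can be framed as: simultaneous deployments ≈ capacity of the first server resource to fill up ÷ wire rate per deployment. The sketch below is a back-of-the-envelope model of ours, not something FOG computes, and every number in it (including the 400 Mb/s per stream) is a hypothetical placeholder to replace with your own measurements. It reflects the point above that on a 10 GbE server something other than the NIC may saturate first.

```python
def concurrency_limit(
    per_deploy_mbps: float, capacities_mbps: dict[str, float]
) -> tuple[str, float]:
    """Return the first server resource to saturate and how many deployments it can feed."""
    limits = {name: cap / per_deploy_mbps for name, cap in capacities_mbps.items()}
    bottleneck = min(limits, key=limits.get)
    return bottleneck, limits[bottleneck]


# Hypothetical numbers for illustration only.
print(concurrency_limit(400, {"1 GbE NIC": 1000}))
print(concurrency_limit(400, {"10 GbE NIC": 10000, "image storage (400 MB/s)": 3200}))
```

With these placeholder inputs the first call reports the NIC at 2.5 streams and the second reports storage as the limit at 8 streams, even though the 10 GbE NIC alone could carry 25.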

Partclone speed. The speed on the Partclone screen is a composite speed. It includes the intake speed of the image stream to the target computer (including everything it took to get the image there, such as FOG server storage disk performance, OS overhead, FOG server NIC throughput, and any network bandwidth overhead), the decompression of the FOG image on the target computer, and finally the speed at which the target computer can write the image to disk. So the Partclone speed (GB/min) is the throughput of the entire process, not just network throughput. That is why it's possible to see speeds that are impossible to achieve on a 1 GbE network if your target computer has many cores, lots of RAM and fast NVMe disks.
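One way to picture why the composite figure can exceed the network speed is a simple pipeline-bottleneck model: each compressed MB off the wire expands to several MB on disk, and the slowest stage sets the overall rate. This is our simplification (it ignores OS overhead and assumes the stages overlap), and the stage speeds in the example are hypothetical.

```python
def composite_rate(wire_mb_s: float, compression_ratio: float,
                   decompress_mb_s: float, disk_write_mb_s: float) -> float:
    """Uncompressed MB/s written to the target disk under a pipeline-bottleneck model.

    The network stage can feed wire_mb_s * compression_ratio MB/s of expanded data;
    decompression and disk-write speeds are given in uncompressed MB/s.
    """
    return min(wire_mb_s * compression_ratio, decompress_mb_s, disk_write_mb_s)


# Hypothetical targets on the same ~100 MB/s (about 800 Mb/s) link with a 3:1 image.
fast_target = composite_rate(100, 3, decompress_mb_s=1000, disk_write_mb_s=2000)
slow_target = composite_rate(100, 3, decompress_mb_s=250, disk_write_mb_s=2000)
print(fast_target, slow_target)  # network-bound vs CPU-bound
```

In the fast case the target writes 300 MB/s (18 GB/min) even though only 100 MB/s crosses the network; in the slow case decompression caps it at 250 MB/s.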

Image compression. Historically FOG used gzip compression for its stored images. This cut down on network bandwidth and FOG server disk requirements. Within the last 3 years FOG has switched to zstd as the default image compression instead of gzip. Gzip is still supported, but the deployment performance gains from using zstd are remarkable. I do have to caveat this: zstd IS slower than gzip at image capture, but it's much faster at image expansion on the target computer. Because you typically capture once but deploy many times, deployment is where you see the most impact, so overall zstd IS faster.
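The capture-once, deploy-many argument is easy to quantify. In this sketch the timings are hypothetical (not measurements of gzip or zstd), and `break_even` is our name: it finds how many deployments it takes for a slower capture to pay for itself through faster deployments.

```python
import math


def total_minutes(capture_min: float, deploy_min: float, deployments: int) -> float:
    """Total time for one capture followed by `deployments` deployments."""
    return capture_min + deploy_min * deployments


def break_even(gzip_times: tuple[float, float], zstd_times: tuple[float, float]) -> int:
    """Fewest deployments at which zstd's total time is no worse than gzip's.

    Each tuple is (capture minutes, deploy minutes).
    """
    extra_capture = zstd_times[0] - gzip_times[0]
    saved_per_deploy = gzip_times[1] - zstd_times[1]
    if saved_per_deploy <= 0:
        raise ValueError("zstd must deploy faster for a break-even point to exist")
    return max(0, math.ceil(extra_capture / saved_per_deploy))


# Hypothetical: zstd captures in 25 min vs 10 min, but deploys in 4 min vs 6 min.
n = break_even((10, 6), (25, 4))
print(n, total_minutes(10, 6, n), total_minutes(25, 4, n))
```

With these placeholder timings the slower capture pays for itself after 8 deployments (58 vs 57 minutes), and every deployment after that widens the gap.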

What we are seeing as a bottleneck on larger campuses is FOG server performance when servicing computers with the FOG Client installed. At around 100 FOG clients (this is only a guesstimate) communicating with the FOG server, we see SQL server utilization shoot up. This slows down the responsiveness of the FOG Web UI. We've traced this down to the default settings of the MySQL database. When FOG is installed it uses the MyISAM db engine. Once a FOG deployment hits 100 clients, we recommend that the FOG admin switch the MySQL data engine over to InnoDB. Basically, the issue with MyISAM is that on a record update the whole table is locked against all other updates until the current write completes, whereas InnoDB uses row-level locking. Because of MyISAM's table-level locking, there is a lot of resource contention in the database when many clients hit the FOG server at the same time; InnoDB relieves this contention. When there is db resource contention and we run `top`, we typically see higher than normal mysql CPU usage and many (many) php-fpm worker tasks. To properly stress test the FOG server with many FOG clients, you will need to simulate the check-in communication the FOG client does with the FOG server. For really large campuses it may even be beneficial to set up a dedicated, tuned SQL server.
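If you follow the MyISAM-to-InnoDB recommendation, the conversion itself is one `ALTER TABLE ... ENGINE=InnoDB` statement per table. The sketch below only builds the SQL text: the helper name is ours, the table names in the example are the two mentioned in this thread, and the schema name you pass to the lookup query must be your FOG database's name (not confirmed here). Back up the database before running the generated statements.

```python
# Lists the MyISAM tables in one schema; pass the schema name as a query parameter
# (the %s placeholder style used by DB-API drivers such as PyMySQL).
FIND_MYISAM_TABLES = (
    "SELECT TABLE_NAME FROM information_schema.TABLES "
    "WHERE TABLE_SCHEMA = %s AND ENGINE = 'MyISAM'"
)


def innodb_statements(tables: list[str]) -> list[str]:
    """Build one ALTER TABLE ... ENGINE=InnoDB statement per table name."""
    statements = []
    for table in tables:
        quoted = "`" + table.replace("`", "``") + "`"  # escape backticks in identifiers
        statements.append(f"ALTER TABLE {quoted} ENGINE=InnoDB;")
    return statements


for stmt in innodb_statements(["tasks", "imagingLog"]):
    print(stmt)
```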

Testing TFTP server performance is a bit of a waste of time. The biggest file transferred over TFTP is about 1 MB (ipxe.efi). Once the FOG iPXE menu is displayed, everything else is done over HTTP. Now, I can see value in tuning the Apache server for distributing (streaming) large files; AFAIK no one has looked into performance tuning Apache. Since you mentioned block size, I do have to say that if you are having issues transferring the iPXE code over TFTP, check the network's MTU. If the MTU is less than the default TFTP block size, you will have PXE startup issues.
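The MTU check can be made concrete. A TFTP DATA packet carries the block plus a 4-byte TFTP header (opcode and block number), an 8-byte UDP header and, assuming IPv4 without options, a 20-byte IP header. The sketch below (helper name ours) computes the largest block size that fits in one unfragmented packet.

```python
IPV4_HEADER = 20      # bytes, assuming no IP options
UDP_HEADER = 8        # bytes
TFTP_DATA_HEADER = 4  # 2-byte opcode + 2-byte block number


def max_unfragmented_blksize(mtu: int) -> int:
    """Largest TFTP block size whose DATA packet fits in a single IPv4 packet of `mtu` bytes."""
    return mtu - IPV4_HEADER - UDP_HEADER - TFTP_DATA_HEADER


for mtu in (1500, 1492):
    print(mtu, max_unfragmented_blksize(mtu))
```

With the common 1500-byte Ethernet MTU this gives 1468; any larger block size means each DATA packet has to be fragmented at the IP layer.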

Testing with VirtualBox may not be the best choice, since VirtualBox is a type 2 hypervisor (i.e. the underlying host OS also affects the performance of the VMs). You might consider using ESXi or a dedicated Hyper-V server to get clean and repeatable performance numbers. While VirtualBox works OK in a development environment, I think its own internal overhead needs to be taken into consideration.

About 4 years ago I did some basic benchmark testing of the 3 subsystems on the FOG server involved in imaging. The results are here: https://forums.fogproject.org/topic/10459/can-you-make-fog-imaging-go-fast


Hi @george1421

Thanks for the informative reply. It helps clear up my doubts that:

- VirtualBox and TFTP clients are not suitable for benchmarking
- Mass communication from FOG clients might cause a FOG server performance bottleneck
- ESXi or a dedicated Hyper-V server is recommended for benchmarking (I am exploring these options)

But I still have some questions based on your reply.

For points 2 and 5 (the target computer does the heavy lifting; image compression), I would summarize:

- The heavy lifting is done by the target computer, not the FOG server
- During imaging (capture/deployment), the FOG server only moves images from disk to the network
- FOG uses zstd for image compression (much faster at image expansion)

Questions:

My image shows 6.14 GB in the FOG Web UI, but in the FOG server's image folder it is about 1.7 GB.

- Q1: Should I understand that the image is compressed by zstd from 6.14 GB to 1.7 GB on the storage node?
- Q2: For image deployment, which of the following processes is carried out? (Please let me know if there is an option C.)
  - A: move 1.7 GB over the network > download to the client (1.7 GB) > decompress 1.7 GB to 6.14 GB
  - B: decompress 1.7 GB to 6.14 GB > move 6.14 GB over the network > download to the client (6.14 GB)
- Q3: If Q2 is (A), can we assume that the zstd application is downloaded to the client machine to perform the compression/decompression?

For point 4. as you mention

- speed on the partclone screen is a composite speed

- partclone speed (GB/min) is the throughput of the entire process not just network throughput

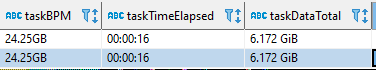

Question: Below image are getting from fog DB > tasks table

- Q1: Do you mean by 24.25 GB/min is the composite speed from partclone?

- Q2: What exactly is taskTimeElapsed when the duration start to capture?

- Q3: What is the different on taskTimeElapsed compare to the start and end time at “imagingLog” table? (we just guessing start and end time is the total duration of the task and taskTimeElapsed is part of it.)

For point 6 (FOG client bottleneck), you mentioned:

- “seeing as a bottleneck on the larger campus is FOG server performance servicing computers with the FOG Client installed”

Question:

We plan to install the FOG Client on our Linux machines so that, after deployment, each host is renamed to the static name configured in the FOG Web UI.

- Q1: Is there a way to rename the host after image deployment without the FOG Client installed?

Thank You

WT

@wt_101 When I say the heavy lifting is done by the client computer, I mean that the work, and the actual performance of FOG imaging, is directly governed by the target computer's capabilities and components. I understand this is technically impossible, but if you had 2 computers that were exactly matched except that one has DDR3-1600 and the other DDR4-2133 RAM, the second computer, with the faster RAM, would deploy the image faster, because the transferred image is decompressed in RAM on the target computer (more on that in Q2).

Q1 To be honest, I never paid attention to what the web UI says for size vs what is on the disk. Off the top of my head, a 3:1 compression ratio seems a bit high. Is it possible? Yes. The metric that really matters is the size of the actual data on the target computer vs the size of the image files. It's possible that the web UI is recording something other than raw source disk vs compressed image file. There is a compression slider in the image definition. It tells the compressor what compression level to use: the higher the number, the harder it works to compress the data. I think the slider defaults to 4 or 6 for gzip, which is a good balance between compression size and speed. For zstd the Goldilocks number is 11. Where the gzip compressor has a range of 0 to 9, zstd has a range of 0 to 22. I don't think anyone has done any testing to find the actual Goldilocks number in a quantitative way, though. I suspect they found a number that worked well for them and called it good.
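The slider trade-off (higher level, more CPU time, smaller output) can be seen with Python's built-in zlib module, which implements DEFLATE, the algorithm gzip uses. This is only an illustration of the level/effort trade-off on synthetic data; it does not use zstd and says nothing about FOG's actual settings or the best level for your images.

```python
import time
import zlib


def level_report(data: bytes, levels=(0, 1, 6, 9)) -> dict[int, tuple[int, float]]:
    """Compress `data` at each zlib level; return {level: (compressed size, seconds)}."""
    report = {}
    for level in levels:
        start = time.perf_counter()
        size = len(zlib.compress(data, level))
        report[level] = (size, time.perf_counter() - start)
    return report


# Synthetic, fairly compressible sample: newline-separated integers.
sample = "\n".join(str(i) for i in range(200_000)).encode()
for level, (size, secs) in level_report(sample).items():
    print(f"level {level}: {len(sample) / size:.2f}:1 in {secs * 1000:.1f} ms")
```

Level 0 only stores the data, so its "ratio" is slightly below 1:1; timings depend on the machine, which is exactly why the best level is worth measuring on your own hardware.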

Q2 Option A is correct. The image is compressed/decompressed on the client, so only a compressed image is ever transferred between the server and the client. This saves image storage space on the storage node as well as transfer bandwidth. From a metric standpoint, I know that a 25 GB target image can be transferred in about 4 minutes. The only way that's possible on a 1 GbE network is to transfer a compressed image.
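Option A also bears on the earlier question about exceeding 1000 Mbps. A quick check with this thread's own numbers (6.14 GB shown in the Web UI, about 1.7 GB on the storage node, 19 s taskTimeElapsed for one client), assuming that the 1.7 GB of compressed files is what actually crosses the wire and that taskTimeElapsed covers the transfer (neither is confirmed in this thread):

```python
def rates(raw_mb: float, compressed_mb: float, seconds: float) -> dict[str, float]:
    """Apparent (uncompressed) vs on-the-wire rate for one deployment, in megabits per second."""
    return {
        "apparent_mbps": raw_mb * 8 / seconds,
        "wire_mbps": compressed_mb * 8 / seconds,
        "compression_ratio": raw_mb / compressed_mb,
    }


for name, value in rates(raw_mb=6140, compressed_mb=1700, seconds=19).items():
    print(f"{name}: {value:.2f}")
```

This prints roughly 2585.26 Mbps apparent, 715.79 Mbps on the wire and a 3.61:1 ratio: the wire rate fits within a 1 GbE link, while the larger figure reflects the uncompressed data produced at the target.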

Q3 See, that is where the magic of FOG is. The developers created a custom version of Linux called FOS (FOG Operating System). That OS has all of the tools built in that FOG uses to image a target computer; yes, FOS has the zstd and gzip compressors built in. When you PXE boot a computer for imaging, the iPXE boot loader is transferred to the target computer first. iPXE is responsible for the FOG iPXE menu. Once you make a menu selection (like registration), you will see two files transferred to the target computer if you have a fast eye: bzImage (the kernel) and init.xz (the virtual hard drive). That IS FOS Linux being sent over. The OS is very small and very fast.

For point 4, that is more of a question for the developers. I don't look under the hood at the statistics settings; I just know what the speed number on the Partclone screen means. I don't know whether FOG has a way to record that speed. As for taskTimeElapsed, I think it means something else. As I mentioned above, on a 1 GbE network a 25 GB image should take about 4 minutes of transfer time, so 16 seconds seems a bit quick.

For point 6, the FOG client is used for more than just renaming the client and joining the target computer to AD. It's also used for application deployment and some rudimentary system management. You do not need to run the FOG Client if you don't want to manage the target computer after image deployment.

Q1 Yes, there is a way. On my campus, which is mostly MS Windows based, I don't use the FOG client at all, yet I still have a touchless deployment. I leverage a FOG feature called a post install script to make changes to the MS Windows unattend.xml file just after the image is pushed to the target computer. For a Linux client it is just as easy: most of what configures Linux lives in text files, and FOS Linux is… wait for it… Linux, so the possibilities are endless. The concept of a post install script is that you create a bash script on the FOG server that is executed by FOS Linux. That bash script would mount the target computer's hard drive (post image deployment) and make the necessary adjustments to the hostname and any other deployment-specific settings. The post install script can access the FOG host definition variables, so you can leverage some of the extra fields in the host definition for specific uses (like the other1 and other2 fields).
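The hostname part of such a post install script boils down to two file edits on the mounted root partition. For consistency with the other sketches this is written in Python, but it only illustrates the logic: a real FOG post install script is a bash script run by FOS, where you would make the same edits with shell tools. The mount point, the `set_hostname` name and the source of the new name (whatever variable your script receives from the FOG host definition) are all assumptions, and the `127.0.1.1` line is a Debian/Ubuntu convention that other distributions may not use.

```python
from pathlib import Path


def set_hostname(root: Path, new_name: str) -> None:
    """Set the hostname of a Linux install whose root filesystem is mounted at `root`.

    Writes <root>/etc/hostname and replaces any 127.0.1.1 entry in <root>/etc/hosts.
    """
    etc = root / "etc"
    (etc / "hostname").write_text(new_name + "\n")

    hosts = etc / "hosts"
    lines = hosts.read_text().splitlines() if hosts.exists() else []
    # Drop the old self-entry (Debian/Ubuntu map the machine's own name to 127.0.1.1).
    kept = [line for line in lines if not line.startswith("127.0.1.1")]
    kept.append(f"127.0.1.1\t{new_name}")
    hosts.write_text("\n".join(kept) + "\n")
```

For example, with the deployed root partition mounted at a hypothetical /mnt/target, `set_hostname(Path("/mnt/target"), "lab-pc-01")` would rewrite both files before the machine's first boot.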