Feature Request for FOG 1.6.X - Add image integrity verification check

-

Hi all,

One feature I would like to see added: when an image is captured, FOG would take a hash of the image files and store it. The GUI would have a view where you can see those hashes and, on request, check the files against the captured hashes to verify they have not changed. The idea is that if an image is altered outside of the image capture process (as a hacker might do), it would be flagged as changed and would need a hard override before it could be deployed.

Perhaps also a periodic image integrity check, with results that can be seen in the GUI.
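To make the request concrete, here is a minimal sketch of the capture-time hash and the later check. It is plain Python rather than FOG code; the function names and the idea of treating an image as a directory of files are assumptions for illustration only.

```python
import hashlib
from pathlib import Path


def hash_file(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Hash a file in chunks so a large image file never sits fully in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_hashes(image_dir, algorithm="sha256"):
    """Return {relative file name: hex digest} for every file under image_dir."""
    root = Path(image_dir)
    return {
        str(p.relative_to(root)): hash_file(p, algorithm)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_changes(image_dir, recorded, algorithm="sha256"):
    """List files that were modified, added, or removed since the hashes were recorded."""
    current = record_hashes(image_dir, algorithm)
    names = set(recorded) | set(current)
    return sorted(n for n in names if recorded.get(n) != current.get(n))
```

An empty list from `find_changes` means the image still matches what was captured; anything else is a candidate for the "changed outside of capture" flag.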

-

@jonhwood360 What I could suggest, then, would be to build a Plugin.

My thoughts of how this plugin would operate:

- To give the admin full configuration capabilities, have the plugin create a database containing a “primary” table that stores the definition of each item: the name, description, and configuration items (such as the hash type: sha256, md5, or whatever may be preferred).

- An association table, so you can choose which images are managed by a given definition.

- Create a service file that runs at a regular interval. (The systemd or init file that starts the service on subsequent reboots would have to be created manually, so the documentation would need to be on point.)
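The definition and association tables described above could look roughly like this. It is only a sketch: it uses an in-memory SQLite database so it runs anywhere, whereas FOG itself uses MySQL/MariaDB, and every table and column name here is hypothetical.

```python
import sqlite3

# Hypothetical table and column names, for illustration only.
SCHEMA = """
CREATE TABLE integrity_definition (
    id          INTEGER PRIMARY KEY,
    name        TEXT NOT NULL UNIQUE,
    description TEXT,
    algorithm   TEXT NOT NULL DEFAULT 'sha256'
);
CREATE TABLE integrity_association (
    id            INTEGER PRIMARY KEY,
    definition_id INTEGER NOT NULL REFERENCES integrity_definition(id),
    image_id      INTEGER NOT NULL,
    UNIQUE (definition_id, image_id)
);
"""


def images_for_definition(conn, name):
    """Return the image IDs managed by the named definition."""
    rows = conn.execute(
        "SELECT a.image_id FROM integrity_association a "
        "JOIN integrity_definition d ON d.id = a.definition_id "
        "WHERE d.name = ? ORDER BY a.image_id",
        (name,),
    )
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute(
    "INSERT INTO integrity_definition (id, name, description) "
    "VALUES (1, 'default', 'Nightly check')"
)
conn.executemany(
    "INSERT INTO integrity_association (definition_id, image_id) VALUES (?, ?)",
    [(1, 7), (1, 3)],
)
```

Keeping the algorithm on the definition row means one definition can cover many images, and the association table is the only thing that changes when an image is added or removed.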

I would need to figure out how to integrate plugin service files. Not a huge issue as we currently inject classes, hooks, and events. I just need to add code to allow injecting services which hasn’t been done yet.

Why do I say a plugin? Because at one point there was a plugin being worked on, but then other things got in the way. If you’re keen to assist it would certainly help us out and you’d get to learn a few cool things along the way.

-

@tom-elliott There was another request along the same lines, where the OP wanted to create an md5 hash of each captured image file so he could track whether the files had been corrupted.

I was able to find the thread here: https://forums.fogproject.org/topic/15133/md5-after-image-capture

-

@tom-elliott I will assist as I am able, as per our private conversation. I agree a plugin would be good. Here are my thoughts:

- Plugin should probably allow the hash algorithm to be defined per image. That way a range of security requirements could be met. A drop down of available algorithms maybe?

- I agree with the database, but would this be a totally new database, separate from the main FOG one? If so, I would wonder about query complexity and about displaying that information on the image page in the GUI.

- Does FOG have a built-in scheduler for timed tasks? If so, the plugin could maybe piggyback off of that to address the periodic update requirement.

@george1421 Exactly, although I’d like the hash algorithm to be user defined. I know some people may want sha512, whereas for some md5 is sufficient for this purpose. A default of sha256 would probably be good, as it’s more than the minimum (md5) but not as intense as sha512.
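A user-defined algorithm with a sha256 default might be resolved along these lines. This is a sketch only: the allow-list and the function name are assumptions, and the list would be whatever ends up in the drop-down.

```python
import hashlib

DEFAULT_ALGORITHM = "sha256"
# Hypothetical allow-list backing the drop-down; adjust as requirements dictate.
ALLOWED_ALGORITHMS = ("md5", "sha1", "sha256", "sha384", "sha512")


def resolve_algorithm(choice=None):
    """Normalise the user's choice, falling back to the default when none is set."""
    if not choice:
        return DEFAULT_ALGORITHM
    name = choice.strip().lower().replace("-", "")
    if name not in ALLOWED_ALGORITHMS or name not in hashlib.algorithms_available:
        raise ValueError(f"unsupported hash algorithm: {choice!r}")
    return name
```

Rejecting unknown names up front keeps a typo in the setting from silently downgrading or breaking the check later.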

Edited because I typed host instead of image. Sorry for any confusion.

-

@jonhwood360

I’ll try to document better:-

- Why would we use this per host? Unless you mean per server containing images?

- Not a totally new database. It would integrate with the existing FOG database, in its own tables for definition and association.

- Services have a web-based portion under /var/www/fog/lib/service (plugins would use /var/www/fog/lib/plugin/{pluginname}/service/), which controls the event loops, but systemd is what starts and maintains the service, operationally speaking (so a reboot of the server would start the relevant service).

-

@tom-elliott said in Feature Request for FOG 1.6.X - Add image integrity verification check:

@jonhwood360

I’ll try to document better:-

- Why would we use this per host? Unless you mean per server containing images?

Sorry, I meant image, not host. However, since FOG can have a distributed architecture, maybe a hash per image per image repository?
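One way to picture "a hash per image per image repository" is a lookup keyed by image and repository, checked against the hash recorded at capture. The function and the sample names below are hypothetical, purely to illustrate the shape of the data.

```python
def mismatched_copies(recorded, observed):
    """Return the (image, repository) pairs whose hash differs from the recorded one.

    recorded: {image name: hex digest taken at capture time}
    observed: {(image name, repository name): hex digest found on that repository}
    """
    return sorted(
        key for key, digest in observed.items() if recorded.get(key[0]) != digest
    )


recorded = {"win10": "aa11"}
observed = {
    ("win10", "repo-main"): "aa11",
    ("win10", "repo-branch"): "ff00",  # this copy no longer matches
}
print(mismatched_copies(recorded, observed))
```

This keeps a single authoritative hash per image while still showing which repository holds the copy that changed.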

-

@jonhwood360 said in Feature Request for FOG 1.6.X - Add image integrity verification check:

Exactly, although I’d like the hash algorithm to be user defined. I know some people may want sha512, whereas for some md5 is sufficient for this purpose. A default of sha256 would probably be good, as it’s more than the minimum (md5) but not as intense as sha512.

I think the cryptographic method is a bit irrelevant with regard to its “bit” complexity. What is important is that we can create a hash (fingerprint) of each file fast enough to keep up with the imaging process. We are not actually looking to encrypt the image file, just to get a fingerprint of it so we can tell if it has been changed. Linux already provides the external commands md5sum and shasum. Using these would be preferable to the FOG developers having to write their own hashing code. shasum has a command line switch to set the algorithm, so IF sha were selected as the fingerprint protocol, it would be possible to create a FOG setting to pick the algorithm (speaking in ignorance of the code required to do so). The Linux shasum command supports these algorithms: 1 (default), 224, 256, 384, 512. The only gotcha I can see is that FOS Linux (the OS used to capture and deploy images) needs to support the shasum command. I know it has md5sum, but I’m not sure about shasum.
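As a rough sketch of leaning on the existing commands instead of custom hashing code, the snippet below builds the md5sum or shasum command line (`-a` is the shasum algorithm switch) and parses the "digest, then file name" line both tools print. The function names are hypothetical, and `run_hash` assumes the chosen command is actually installed, which is exactly the open question for FOS.

```python
import shutil
import subprocess

# Algorithm sizes listed in the post as supported by shasum.
SHASUM_SIZES = ("1", "224", "256", "384", "512")


def build_hash_command(algorithm, path):
    """Return the argument list for md5sum or shasum for the given algorithm."""
    if algorithm == "md5":
        return ["md5sum", path]
    if algorithm.startswith("sha") and algorithm[3:] in SHASUM_SIZES:
        return ["shasum", "-a", algorithm[3:], path]
    raise ValueError(f"unsupported hash algorithm: {algorithm!r}")


def parse_hash_line(line):
    """Split one output line into (digest, file name).

    The character after the first space is a mode marker
    (a space or '*'), so it is dropped from the name.
    """
    digest, _, rest = line.rstrip("\n").partition(" ")
    return digest, rest[1:]


def run_hash(algorithm, path):
    """Run the external command and return the hex digest it reports."""
    command = build_hash_command(algorithm, path)
    if shutil.which(command[0]) is None:
        raise FileNotFoundError(f"{command[0]} is not installed")
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_hash_line(result.stdout)[0]
```

The explicit `shutil.which` check turns a missing shasum into a clear error, which is useful on a minimal OS where only md5sum may be present.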

-

By and large I agree with you that the hash algorithm is irrelevant; however, some entities have minimum acceptable hashing requirements for this kind of verification. I think a baseline choice between md5 and shasum is fine, especially while development of the feature is ongoing (md5 is still widely used in forensics). That will not always be the case, though, and building in support for additional options might be better. The time cost involved is something the end user accepts when they select the additional complexity.

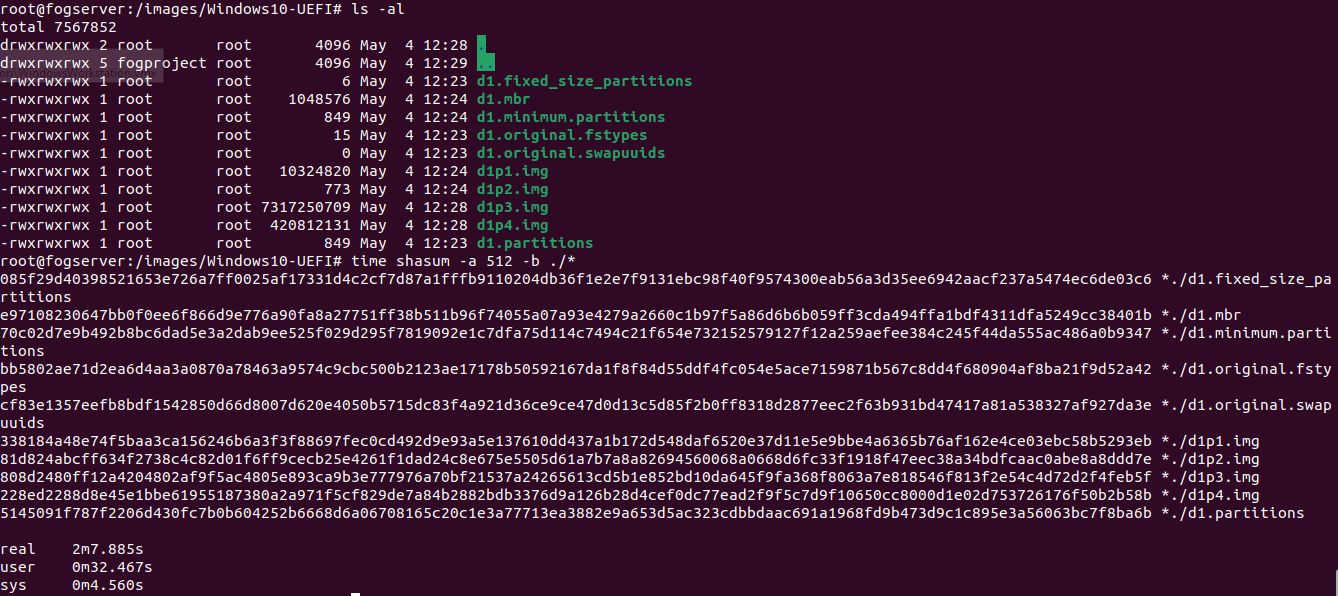

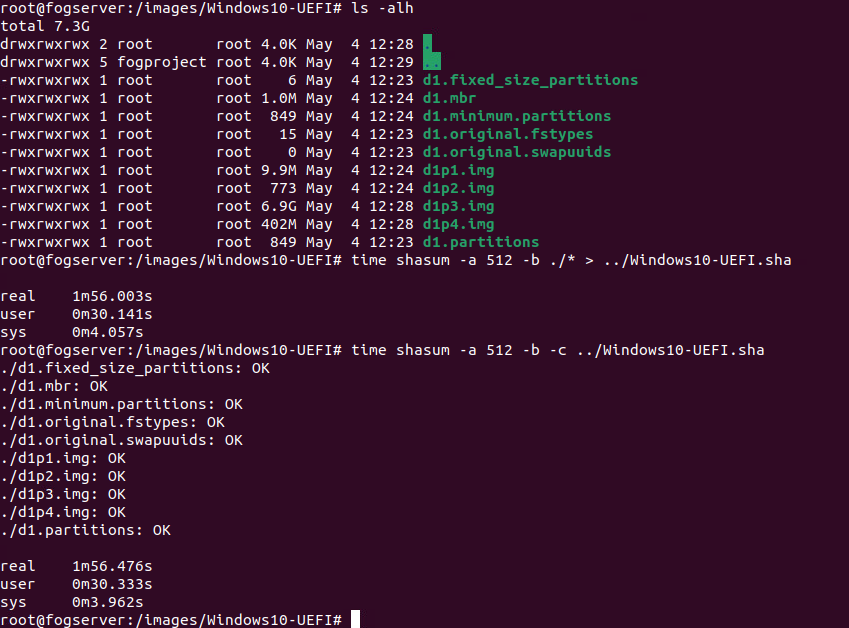

Just as a test, I timed sha512 on my Windows 10 image. Here are the results:

It took about 2m 8s for 8 GB, give or take, so the wait isn’t terrible even at higher algorithm complexity. This test was done on a VM with two virtual processors, on a server with a bunch of other VMs running.
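For a sense of scale, the timing above works out to roughly 64 MiB/s if the 8 GB is read as 8 GiB and the run as exactly 128 seconds. Both are approximations of a "give or take" figure, and the 40 GiB image in the example is purely hypothetical.

```python
def throughput_mib_per_s(size_gib, seconds):
    """Average hashing throughput in MiB/s."""
    return size_gib * 1024 / seconds


def estimate_seconds(size_gib, mib_per_s):
    """Estimated time to hash an image of the given size at that throughput."""
    return size_gib * 1024 / mib_per_s


rate = throughput_mib_per_s(8, 2 * 60 + 8)  # 8 GiB in 2m 8s
print(rate)                        # 64.0
print(estimate_seconds(40, rate))  # 640.0, i.e. under 11 minutes for 40 GiB
```

Actual numbers will depend heavily on disk speed and on how busy the server is, so this is only a back-of-the-envelope estimate.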

Interestingly enough, the shasum utility on Ubuntu can compare hashes against a text file for verification.
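A checksum text file of that kind is just one "digest, two spaces, file name" line per file, the same layout md5sum and shasum print. The sketch below writes such a file and checks against it in plain Python; the function names are hypothetical, and unlike the command line tools it resolves file names relative to the checksum file rather than the current directory.

```python
import hashlib
from pathlib import Path


def _digest(path, algorithm):
    """Chunked hex digest of one file."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def write_checksum_file(files, out_path, algorithm="sha256"):
    """Write one '<digest>  <file name>' line per file."""
    lines = [f"{_digest(f, algorithm)}  {Path(f).name}\n" for f in files]
    Path(out_path).write_text("".join(lines))


def check_checksum_file(checksum_path, algorithm="sha256"):
    """Return {file name: True if it still matches its recorded digest}."""
    base = Path(checksum_path).parent
    results = {}
    for line in Path(checksum_path).read_text().splitlines():
        if not line.strip():
            continue
        expected, _, rest = line.partition(" ")
        name = rest[1:]  # drop the mode marker after the first space
        target = base / name
        results[name] = target.is_file() and _digest(target, algorithm) == expected
    return results
```

Storing hashes in this layout would let an admin spot-check an image by hand with the standard tools as well as through the GUI.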

Also, I don’t think the hash needs to be taken inline with the imaging process. It could be done post-imaging, either on demand or automatically in the background before the image is made available for distribution.
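The "not available until hashed" idea, combined with the hard override from the original request, could be modelled as a small status check. This is only a sketch of one possible policy: the status names are hypothetical, and letting the override apply to a mismatch but not to a pending hash is a design assumption, not something settled in this thread.

```python
from enum import Enum


class IntegrityStatus(Enum):
    PENDING = "pending"    # captured, background hash not finished yet
    VERIFIED = "verified"  # current hash matches the recorded one
    MISMATCH = "mismatch"  # files changed outside the capture process


def status_after_check(recorded_digest, current_digest):
    """Compare the recorded and current digests and return the resulting status."""
    if recorded_digest == current_digest:
        return IntegrityStatus.VERIFIED
    return IntegrityStatus.MISMATCH


def can_deploy(status, override=False):
    """Allow deployment when verified, or when an admin overrides a mismatch."""
    if status is IntegrityStatus.VERIFIED:
        return True
    return override and status is IntegrityStatus.MISMATCH
```

With this policy a freshly captured image simply waits in `PENDING` until the background hash completes, so imaging itself is never slowed down by hashing.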

Thoughts?