"could not complete tasking (/bin/fog.upload)"

-

Greetings,

I have a FOG server and I’m using a QNAP NAS to store the images I capture. When I schedule a capture, the image is captured successfully, but after reaching 100% I get errors such as:

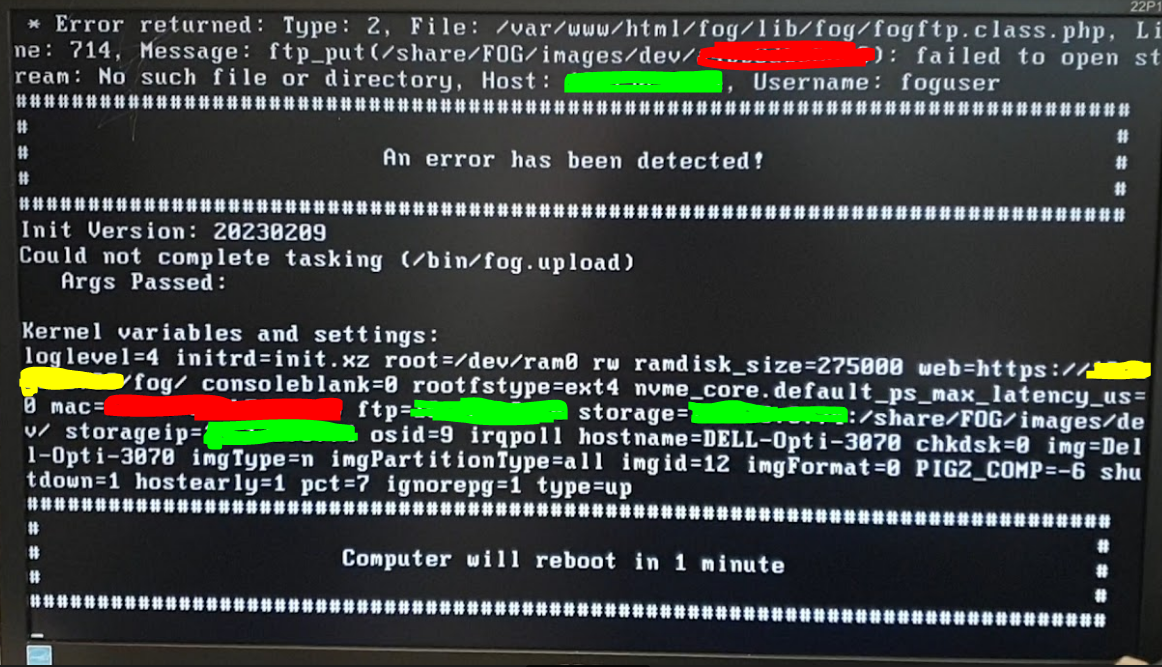

- Error returned: Type: 2, File: /var/www/html/fog/lib/fog/fogftp.class.php, Line 714, Message: ftp_put(/share/FOG/images/dev/[MAC Address]): failed to open stream: no such file or directory, Host: [My Host], Username: foguser

And finally, as the title says: Could not complete tasking (/bin/fog.upload)

I don’t quite understand how or why this is happening. My FOG server is able to communicate with and write to my NAS (my image is there), but after the capture the task gets stuck at ~68%.

Any idea how to fix this?

-

@DPR-SIO First let me say that the FOG Project devs don’t support this configuration. They only support using FOG servers or FOG Storage nodes.

With that said, you could have success using an external NAS device if the NAS can meet certain requirements. FOG uses NFS and then FTP to capture images. NFS is used to capture the image to the /images/dev/<mac_address> folder, and then FTP is used to move it from the read/write area to the read-only area in /images. The error indicates that it’s failing on the FTP login and move commands.
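As a rough illustration of that two-step flow, here is a minimal Python sketch using the standard `ftplib` module. It is not FOG’s code (FOG does this step in PHP, which is where the `fogftp.class.php` error above comes from), and the exact FTP commands FOG issues may differ. It assumes the NFS step has already written the capture to `dev/<mac>` under the storage node’s FTP path and that the folder is named after the MAC with the colons removed; `capture_paths` and `finalize_capture` are hypothetical names.

```python
import posixpath
from ftplib import FTP


def capture_paths(ftp_path: str, mac: str, image_name: str) -> tuple[str, str]:
    """Build the FTP source/destination paths for a finished capture.

    Assumed layout: the capture sits in <ftp_path>/dev/<mac> (written over
    NFS) and the finished image should end up in <ftp_path>/<image_name>.
    """
    mac_dir = mac.replace(":", "").lower()  # assumption: colons stripped
    src = posixpath.join(ftp_path, "dev", mac_dir)
    dst = posixpath.join(ftp_path, image_name)
    return src, dst


def finalize_capture(ftp: FTP, ftp_path: str, mac: str, image_name: str) -> str:
    """Move the captured image out of dev/ with a server-side rename."""
    src, dst = capture_paths(ftp_path, mac, image_name)
    # FTP.rename sends RNFR/RNTO. If src is not reachable from the FTP
    # user's root, the server rejects it and ftplib raises an exception.
    return ftp.rename(src, dst)
```

For example, with a logged-in `FTP` object, `finalize_capture(ftp, "/images", "00:11:22:33:44:55", "win10")` would try to rename `/images/dev/001122334455` to `/images/win10` (the image name here is made up). If `/images` is not the right path from the FTP user’s point of view, that rename fails.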

Looking through the wiki and tutorials, I see some articles that might help.

https://wiki.fogproject.org/wiki/index.php?title=NAS_Storage_Node

https://forums.fogproject.org/topic/10973/add-a-nas-qnap-ts-231-as-a-storage-node-fog-v1-4

and the tutorial I wrote for a Synology NAS (for you, this one is more about the concepts than the commands):

https://forums.fogproject.org/topic/9430/synology-nas-as-fog-storage-node

-

@george1421 Thanks for the reply and the guidance. I think I may have found the solution to my problem, but I’m not sure: do I need to install the FOG storage node script on the NAS and use it as a storage node for my FOG server?

The more I think about it, the more I think that’s the answer.

-

@DPR-SIO Running the FOG install script on a NAS probably will not work, since the install script assumes your “NAS” is a Linux computer.

It’s possible to set up a NAS as a storage node as long as it has NFS and FTP capabilities. The NFS share directory and the FTP user directories need to point to the same location. In the storage node definition there is an FTP path field; it needs to contain the actual path on the NAS where the files are located. The QNAP and Synology instructions do a good job of showing how things need to be configured. You need to make sure things are configured in the correct location on the NAS, and confirm that the FTP user can access both the /images/dev and /images directories to move files.
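A quick way to confirm that last point from any machine with Python is to log in as the FTP user and try to enter both directories. This is a minimal sketch using the standard `ftplib` module; `check_ftp_dirs` is a hypothetical helper, and `ftp_path` is assumed to be whatever you entered in the storage node’s FTP path field.

```python
import posixpath
from ftplib import FTP, error_perm


def check_ftp_dirs(ftp: FTP, ftp_path: str) -> dict[str, bool]:
    """Report whether the FTP user can enter <ftp_path> and <ftp_path>/dev."""
    start = ftp.pwd()  # remember the login directory so each check starts fresh
    results = {}
    for path in (ftp_path, posixpath.join(ftp_path, "dev")):
        try:
            ftp.cwd(path)
            results[path] = True
        except error_perm:  # 5xx reply, e.g. "550 No such file or directory"
            results[path] = False
        finally:
            ftp.cwd(start)
    return results
```

After `ftp = FTP("<NAS IP>")` and `ftp.login("foguser", "<password>")`, call `check_ftp_dirs(ftp, "<your FTP path>")`. Any `False` means the FTP account cannot reach that directory, so the move at the end of a capture cannot succeed either.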

-

@DPR-SIO said in “could not complete tasking (/bin/fog.upload)”:

I think I may have found the solution to my problem, but I’m not sure

Would be interesting to hear what you found.

-

@Sebastian-Roth said in “could not complete tasking (/bin/fog.upload)”:

@DPR-SIO said in “could not complete tasking (/bin/fog.upload)”:

I think I may have found the solution to my problem, but I’m not sure

Would be interesting to hear what you found.

I’ll try this as a last resort; I’ll try other things first.

@george1421 said in “could not complete tasking (/bin/fog.upload)”:

@DPR-SIO Running the FOG install script on a NAS probably will not work, since the install script assumes your “NAS” is a Linux computer.

It’s possible to set up a NAS as a storage node as long as it has NFS and FTP capabilities. The NFS share directory and the FTP user directories need to point to the same location. In the storage node definition there is an FTP path field; it needs to contain the actual path on the NAS where the files are located. The QNAP and Synology instructions do a good job of showing how things need to be configured. You need to make sure things are configured in the correct location on the NAS, and confirm that the FTP user can access both the /images/dev and /images directories to move files.

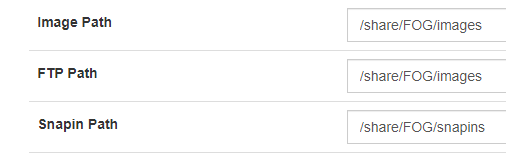

I think my storage node settings are wrong and might be the issue. Does anything catch your attention? My FOG server manages to save the image on my NAS, but I still get an error at the end: “Failing to update database”.

Please understand that I don’t like leaving IP and MAC addresses visible, so red is the MAC, green is my NAS (the storage node) and yellow is my FOG server.

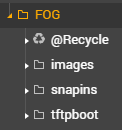

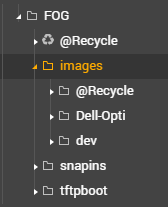

The folders look like this on my NAS: FOG/, with images, snapins and tftpboot inside it.

On my FOG server it’s /mnt/FOG (I ran “mount -t nfs <NAS IP>:/share/FOG /mnt”).

-

@DPR-SIO OK, so to debug this, I would do the following (from a Windows computer):

`ftp` to your NAS IP using the `foguser` account (and password) you created on your NAS. If you get logged in, key in `pwd` to print the working directory. See if that gives you an absolute path or just your home directory.

The error you posted is “failed to open stream”, which means the FTP account cannot reach the directory you blanked out in red. So start with `cd /share`: can you get there? Do an `ls` to see what the contents of share are. Work your way down the path. It’s possible that a permission problem is why you can’t get to the directory to move it, or that the base path is not correct.

-

@george1421 So I used CMD to connect to my NAS with `ftp <nas ip>`:

- The connection is successful with foguser

- `pwd` returns “/”

- `ls` returns the contents of my shared folder FOG (@Recycle, snapins, images, tftpboot)
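The `cd`-one-level-at-a-time check suggested earlier can also be scripted. The sketch below is a hypothetical helper (`first_unreachable` is not part of FOG) built on Python’s standard `ftplib`; it reports the first directory along a path that the FTP account cannot enter. With the results above (`pwd` returning “/” and `ls` showing images, snapins and tftpboot), a path such as `/share/FOG/images/dev` would be expected to stop at `/share`, which suggests the FTP path was given as an absolute NAS path rather than relative to the FTP root.

```python
from ftplib import FTP, error_perm
from typing import Optional


def first_unreachable(ftp: FTP, path: str) -> Optional[str]:
    """Walk an absolute path one directory at a time, like repeated `cd`.

    Returns the first directory the FTP user cannot enter, or None if the
    whole path is reachable. (Listing each level with ftp.nlst() is left out.)
    """
    current = "/"
    ftp.cwd(current)
    for part in (p for p in path.split("/") if p):
        current = current.rstrip("/") + "/" + part
        try:
            ftp.cwd(current)
        except error_perm:  # the server refused to enter this level
            return current
    return None
```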

-

@DPR-SIO OK then you have a good chance to make this work.

So, if what you are showing me is the pwd when you are logged in using FTP, it looks like for your FTP user account your images should be in /images/dev.

So if you look at your storage node configuration, you have:

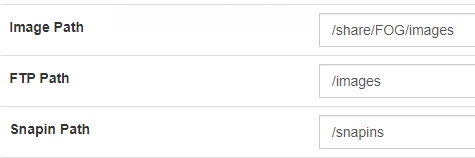

`/share/FOG/images`, which is probably the absolute path on your NAS. From the FTP account, it looks like the root directory for the user account is at the `/share/FOG` path. So... long story short: in the storage node configuration, change the FTP path from `/share/FOG/images` to `/images` and the snapins path to `/snapins`. The image path is the NFS path; don’t change that for now, but inspect that directory on the NAS. Do you see a subdirectory in that path with a name that looks like a MAC address? If yes, you have the image path set correctly. If I remember right, the capture was failing at the FTP login, not at the image capture itself.
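The arithmetic behind that change is just stripping the FTP root off the front of the NAS path. A small sketch, assuming (as the `pwd` and `ls` output suggests) that the FTP account’s “/” is `/share/FOG` on the NAS; `to_ftp_path` is a hypothetical helper, not a FOG setting or function.

```python
import posixpath


def to_ftp_path(nas_path: str, ftp_root: str) -> str:
    """Translate an absolute NAS path into the path the FTP user sees.

    ftp_root is the NAS directory that the FTP account lands in as "/".
    """
    nas_path = posixpath.normpath(nas_path)
    ftp_root = posixpath.normpath(ftp_root)
    if ftp_root == "/":
        return nas_path  # no chroot: paths are unchanged
    if nas_path == ftp_root:
        return "/"
    if not nas_path.startswith(ftp_root + "/"):
        raise ValueError(f"{nas_path} is outside the FTP root {ftp_root}")
    return nas_path[len(ftp_root):]  # keeps the leading "/"
```

With that root, `to_ftp_path("/share/FOG/images", "/share/FOG")` gives `/images` and `/share/FOG/snapins` gives `/snapins`, matching the values suggested above.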

At the end of this workaround, you can delete any directory (and its contents) in /images/dev/ whose name looks like a MAC address. That directory should only exist during an actual image capture task.
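If there are many leftovers, picking them out of a directory listing can be scripted. The sketch below only selects names; you would feed it the listing of `/images/dev` (for example from `ftplib`’s `FTP.nlst("/images/dev")`, which on some servers returns full paths, or `os.listdir` on the NFS mount) and review the result before deleting anything. It assumes the folder is named after the MAC address, either as 12 hex digits or with consistent `:`/`-` separators; `leftover_capture_dirs` is a hypothetical name.

```python
import posixpath
import re

# 12 hex digits, optionally separated by ":" or "-" used consistently,
# e.g. "001122334455" or "00:11:22:33:44:55" (assumed folder naming).
MAC_DIR = re.compile(r"^[0-9a-f]{2}([:-]?)(?:[0-9a-f]{2}\1){4}[0-9a-f]{2}$", re.IGNORECASE)


def leftover_capture_dirs(entries):
    """Return the names in a /images/dev listing that look like MAC addresses."""
    # Reduce full paths such as "/images/dev/001122334455" to the last component.
    names = (posixpath.basename(e.rstrip("/")) for e in entries)
    return sorted(n for n in names if MAC_DIR.match(n))
```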

-

@george1421 It looks like it finally managed to update the database and proceed to the final stage of the capture. I did what you suggested, changed the FTP path, and I can’t believe it worked.

As you can see, my image is there!

Many thanks for the help! I was this close to giving up and looking for another solution; I was stuck for so long!

-

Sebastian Roth has marked this topic as solved on