HP ProBook 640 G8 imaging extremely slowly

-

@sebastian-roth How does this look? https://photos.app.goo.gl/2iZT3HmDE3A1wxJH9

-

@jacob-gallant said in HP ProBook 640 G8 imaging extremely slowly:

How does this look? https://photos.app.goo.gl/2iZT3HmDE3A1wxJH9

Yes, perfect. So we know that Ubuntu, using a 5.8.x kernel (with many specific patches included), uses the same kernel driver, `e1000e`, that we also use with FOS. To me, the iperf output looks like Ubuntu has the same issue: a high number of retries when testing with iperf, same as with Arch Linux. You don't seem to notice the issue when testing with speedtest.net, but I think that test is not valid in this case, because packets from the internet usually come in smaller portions (lower path MTU than in the local subnet where you have jumbo frames) and would not cause the same slowness… Sorry, I had put some hope in this when we ran the first tests with Ubuntu. Now I think it’s just the same.

As a last resort, we might compile a one-off kernel for you using the driver provided by Intel, though I have to say that I haven’t looked into this yet and it might turn out to be a hassle. Not sure yet.

-

@sebastian-roth OK, totally understand. Just let me know! Thanks for everything Sebastian.

-

@Jacob-Gallant There is one more thing you might want to look at with the current kernel before you get into testing the patched one below. Schedule a debug deploy task, and when you get to the shell, run `ip link show | grep mtu` and see what number it states right after the keyword `mtu`.

Although it did not compile straight from the Intel code, it wasn’t too much work to fix and get it built.
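If you want the MTU of every interface at a glance instead of scanning the `grep` output, here is a small sketch (not something shipped with FOS) that parses `ip link show` output. It assumes the usual layout where the value follows the keyword `mtu`; `link_mtus` is a hypothetical helper name. Jumbo frames would show up as a value larger than 1500, commonly 9000.

```shell
#!/bin/sh
# Print "<interface> <mtu>" for each link, reading `ip link show` output
# from stdin. Assumes the iproute2/BusyBox layout, e.g.:
#   2: enp0s31f6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc ...
link_mtus() {
    awk '
        /^[0-9]+: / {
            name = $2
            sub(/:$/, "", name)     # drop trailing colon ("eth0:" -> "eth0")
            sub(/@.*/, "", name)    # drop "@parent" suffix on VLAN/veth links
            for (i = 3; i < NF; i++) {
                if ($i == "mtu") { print name, $(i + 1); break }
            }
        }
    '
}
```

Usage in the debug shell: `ip link show | link_mtus`, which prints one `<interface> <mtu>` pair per line.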

Download the patched kernel binary and put it in the `/var/www/html/fog/service/ipxe/` directory on your FOG server. Now edit the host settings of your HP ProBook 640 G8 and set Host Kernel to `bzImage-5.10.12-e1000e-3.8.4`. Schedule a deploy task and watch the screen when it PXE boots; it should say `bzImage-5.10.12-e1000e-3.8.4...ok` when loading the kernel.

It will be interesting to hear if deployment speeds are in a normal range with this kernel.
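For reference, the copy step on the FOG server could be scripted roughly like this. It's a sketch: `install_fog_kernel` is a hypothetical helper, and the default target directory is simply the path mentioned above. You may also want the file's owner to match the other kernels already in that directory.

```shell
#!/bin/sh
# Copy a custom kernel into the FOG iPXE directory and print the file name
# to enter as "Host Kernel". Default directory = path used in this thread.
install_fog_kernel() {
    src=$1
    destdir=${2:-/var/www/html/fog/service/ipxe}
    if [ ! -f "$src" ]; then
        echo "kernel file not found: $src" >&2
        return 1
    fi
    name=$(basename "$src")
    cp "$src" "$destdir/$name" || return 1
    chmod 644 "$destdir/$name"   # web server only needs read access
    echo "$name"
}
```

Usage (as root on the server): `install_fog_kernel ./bzImage-5.10.12-e1000e-3.8.4`, then set the printed name as the host's Host Kernel.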

Just for reference if we need to re-compile this again:

- When using the Intel driver code v3.8.4, there are still calls to PM QoS functions that no longer exist in 5.10.x kernels. I swapped out the function names as seen in this post on the kernel mailing list.

- Next is a function call that was completely removed.

- Then I commented out the use of `xdp_umem_page *pages` in `kcompat.c`, as this was removed in the mainline kernel and is only used for older kernel versions in `kcompat.c` anyway.

- Finally, I re-enabled `CONFIG_PM` in our kernel config to get past the last compile error. A different solution would be to move the function definition of `e1000e_pm_thaw` outside the `#ifdef CONFIG_PM` block.
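The post doesn't list the exact replacements. Assuming the Intel code uses the pre-5.7 CPU-latency PM QoS calls (`pm_qos_add_request(..., PM_QOS_CPU_DMA_LATENCY, ...)`, `pm_qos_update_request()`, `pm_qos_remove_request()`), which Linux 5.7 renamed to `cpu_latency_qos_*_request()` and whose add call lost the class argument, the swap could be scripted with `sed` along these lines. `port_pm_qos` is a hypothetical helper; review the resulting diff before building.

```shell
#!/bin/sh
# Rewrite pre-5.7 CPU latency PM QoS calls to the 5.7+ names.
# Assumption: calls look like
#   pm_qos_add_request(&req, PM_QOS_CPU_DMA_LATENCY, value);
# The 5.7+ add call takes no class argument, so that argument is dropped.
port_pm_qos() {
    sed -E \
        -e 's/pm_qos_add_request\(([^,]+),[[:space:]]*PM_QOS_CPU_DMA_LATENCY,[[:space:]]*/cpu_latency_qos_add_request(\1, /' \
        -e 's/pm_qos_update_request\(/cpu_latency_qos_update_request(/g' \
        -e 's/pm_qos_remove_request\(/cpu_latency_qos_remove_request(/g' \
        "$@"
}
```

Run from the driver's source directory, e.g. `port_pm_qos -i.orig *.c` (GNU sed in-place edit, keeping `.orig` backups).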

-

@sebastian-roth Hey Sebastian, the MTU was 1500 when I ran that command. Unfortunately, when I used the patched kernel I received a “no network interfaces found” error. Did I miss a step?

-

@jacob-gallant said in HP ProBook 640 G8 imaging extremely slowly:

Unfortunately when I used the patched kernel I received a “no network interfaces found” error, did I miss a step?

I don’t think there is a step you could have missed. Interesting that it wouldn’t find your network card using the official source code from Intel; I didn’t expect that. I’ll have a look at the code again.

Meanwhile, you could schedule a debug deploy task for this host, boot up to the shell, run the following commands, take a picture, and post it here:

```shell
dmesg | grep -e "e1000e" -e "eth[0-9]" -e "enp[0-9]"
ip a s
```

Update: I have checked the kernel config and code used, and I am sure the e1000e driver is included. We’ll see what the dmesg output can offer.
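Since the error was “no network interfaces found”, another quick check is to see which interfaces the kernel actually registered and which driver each one is bound to. This sketch reads sysfs (`/sys/class/net/<iface>/device/driver`); `list_net_drivers` is a hypothetical helper, and it assumes `readlink -f` is available in the debug shell. If only `lo` shows up, the driver never bound to the NIC.

```shell
#!/bin/sh
# List registered network interfaces and the driver bound to each one.
# SYSFS_NET can be overridden (e.g. for testing); defaults to the real sysfs.
list_net_drivers() {
    net=${SYSFS_NET:-/sys/class/net}
    for dev in "$net"/*; do
        [ -e "$dev" ] || continue             # glob matched nothing
        name=$(basename "$dev")
        if [ -e "$dev/device/driver" ]; then
            driver=$(basename "$(readlink -f "$dev/device/driver")")
        else
            driver="-"                         # virtual device such as lo
        fi
        echo "$name $driver"
    done
}
```

Usage: `list_net_drivers`; a working onboard NIC should appear with `e1000e` next to it.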

-

@sebastian-roth Here you are. https://photos.app.goo.gl/uJJKgG4PSPHY8kQJ6

-

@Jacob-Gallant Comparing the probe function source code between the 5.10.12 kernel and the Intel driver does not bring up many differences. It's very strange that you see `e1000e: probe of ... failed with -22`, and I am not sure what that means.

Wooooho, I just found that the 3.8.4 version mentioned on the Intel website as “latest” is not actually the latest one: https://sourceforge.net/projects/e1000/files/e1000e%20stable/

I’ll build another kernel using that one later on.
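For what it's worth, negative numbers in kernel probe messages are negated errno values, and on Linux 22 is `EINVAL` (“Invalid argument”). A small sketch to pull such codes out of `dmesg` and name a few common ones; `probe_error_codes` and `errno_name` are hypothetical helpers, and the table only covers a handful of values from `asm-generic/errno-base.h`.

```shell
#!/bin/sh
# Extract N from kernel lines like
#   "e1000e: probe of 0000:00:1f.6 failed with error -22"
# (also accepts the shortened "failed with -22"), reading from stdin.
probe_error_codes() {
    sed -nE 's/.*probe of .* failed with (error )?-([0-9]+).*/\2/p'
}

# Name a few common Linux errno values (from asm-generic/errno-base.h).
errno_name() {
    case $1 in
        2)  echo "ENOENT (No such file or directory)" ;;
        5)  echo "EIO (I/O error)" ;;
        12) echo "ENOMEM (Out of memory)" ;;
        16) echo "EBUSY (Device or resource busy)" ;;
        19) echo "ENODEV (No such device)" ;;
        22) echo "EINVAL (Invalid argument)" ;;
        *)  echo "errno $1 (not in this short table)" ;;
    esac
}
```

Usage: `dmesg | probe_error_codes`, then e.g. `errno_name 22`.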

-

@Jacob-Gallant That one compiled without any modification needed, haha! Wish I had found that one earlier.

https://fogproject.org/kernels/bzImage-5.10.12-e1000e-3.8.7 (note the different Intel driver version at the end of the filename)

Please give it a try using debug again and run the same `dmesg` command.

-

@sebastian-roth Thanks again Sebastian, here are the results of that command. Unfortunately, we see the same network performance issues with this kernel.

-

@Jacob-Gallant Ok, so the 3.8.7 driver version does initialize and probe the NIC correctly.

Unfortunately we see the same network performance issues with this kernel.

Oh well… we have tried! I can’t believe we are the only ones seeing this issue. Maybe this NIC is not widely used?!

If you are really keen, you could try contacting the Linux kernel or Intel driver developers, either on the mailing list or directly by email.

-

@sebastian-roth Thanks, I appreciate your efforts!

-

Hello.

I have the same problem. But when I plug in a USB stick (empty or not) at startup, the speed is OK. I don't know why.

-

@Dungody That really is strange, though interesting. Have you been able to verify this by testing with and without the USB key several times?

Are you sure you have exactly the same model, HP ProBook 640 G8?

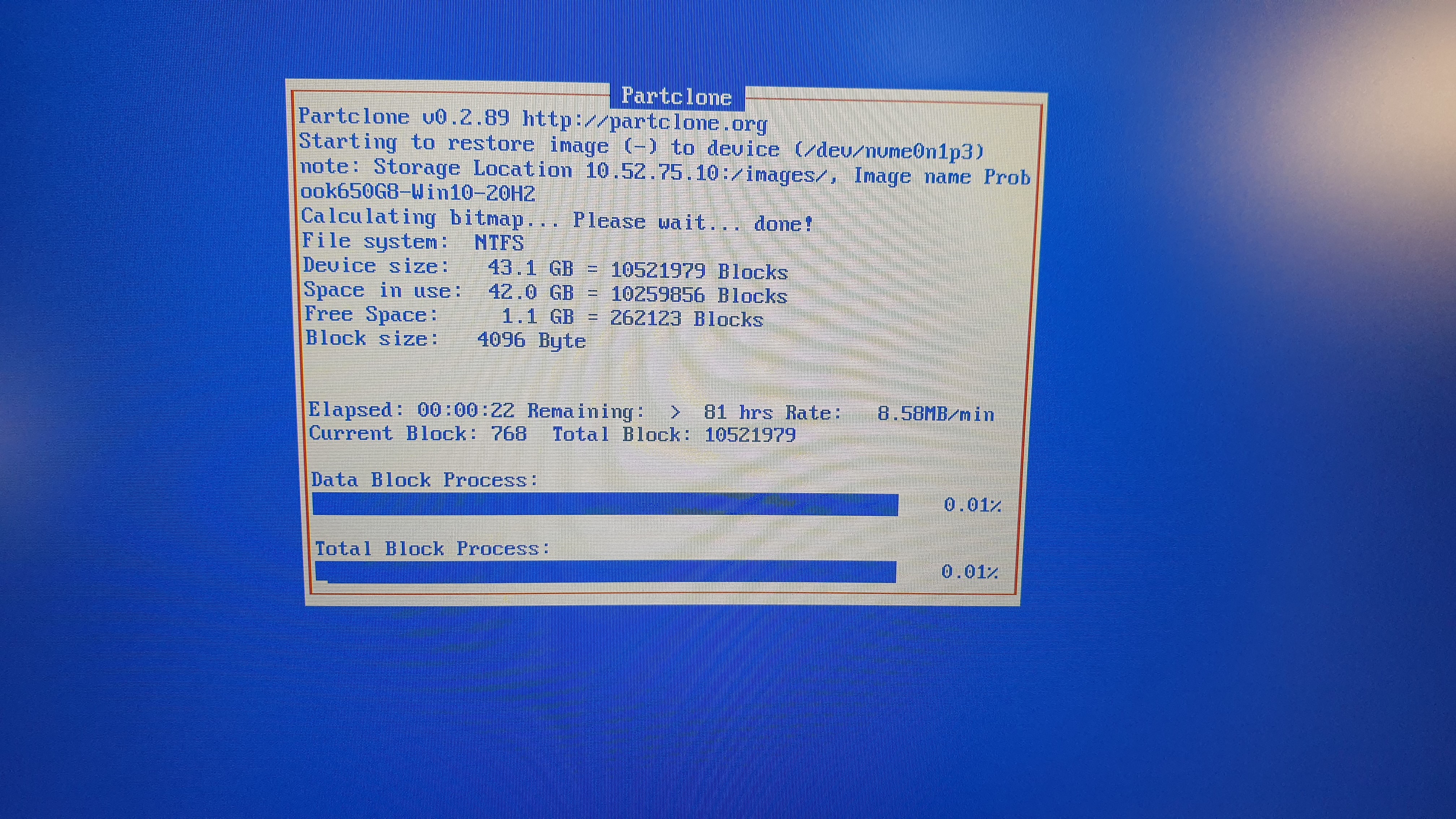

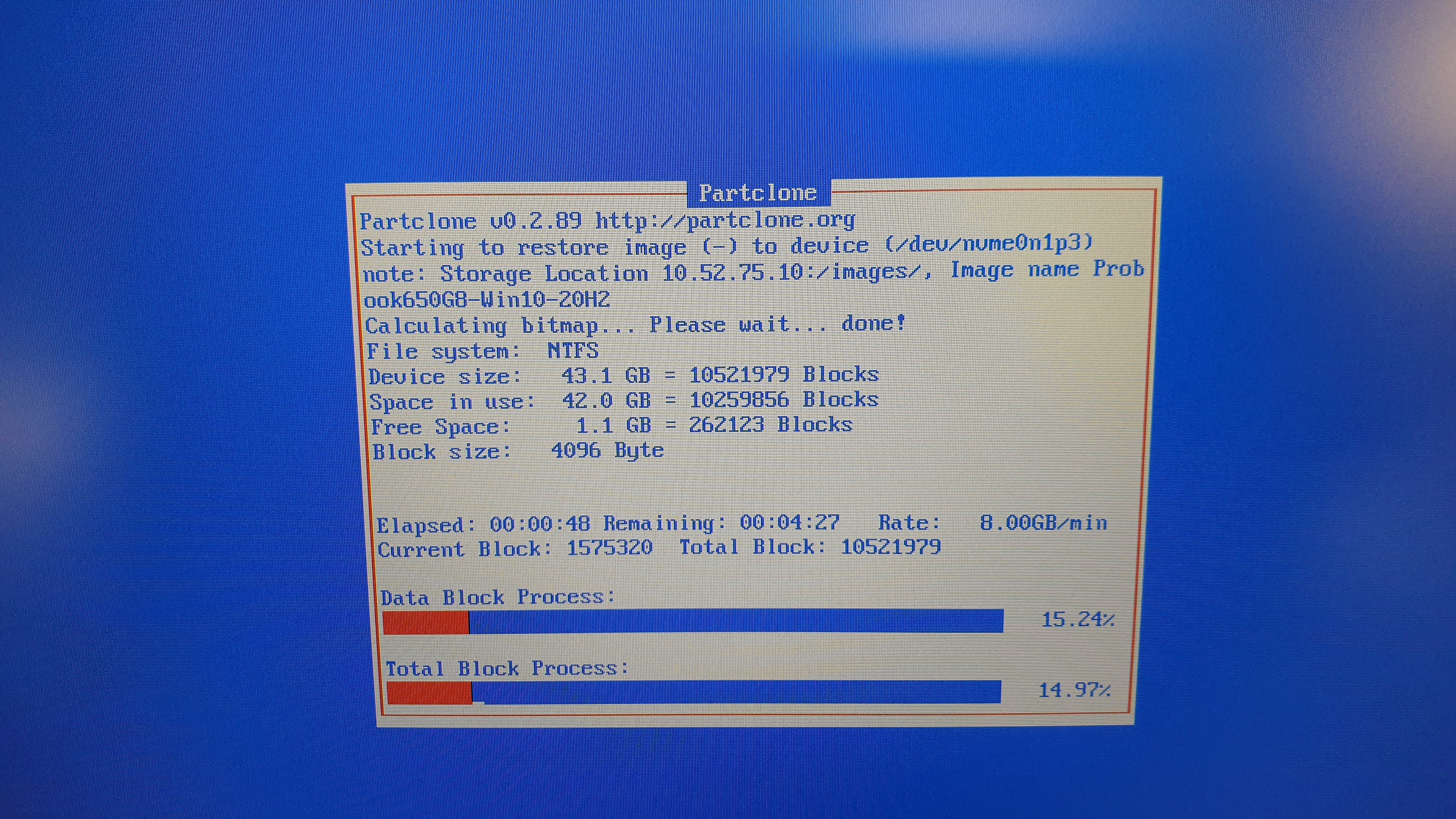

From what we saw in the network dump, I was sure this was due to network congestion. But maybe I was on the wrong track, and the network was only where we saw the slowdown, with IO slowness being the root cause. Does FOG deploy to the USB key when it’s plugged in? Which device name do you see in the blue partclone screen? `/dev/sda1`?

-

@dungody said in HP ProBook 640 G8 imaging extremely slowly:

Hello .

I have the same problem. But when i plug a usb Stick ( empty or not ) at start , the speed is ok . But i dont know why.

Depending on the firmware, I have seen inserting a USB drive change the device naming order. On one firmware the USB drive would be detected first, making it `/dev/sda`; on other firmware (hardware), the inserted USB drive takes the `/dev/sdb` name. It would be interesting to prove this out by setting up a debug deploy with the USB drive inserted and then running the `lsblk` command to see what is in the `/dev/sda` slot. Then issue the `fog` command to start imaging from the debug console. It would be strange to see it deploy to the USB drive faster than to the onboard NVMe disk.
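To spot the USB disk in the debug shell without reading the whole `lsblk` tree, something like the following could work. It assumes the util-linux `lsblk`, which supports the `TRAN` (transport) column; `usb_disks` is a hypothetical helper.

```shell
#!/bin/sh
# Print the /dev path of every whole disk whose transport is USB.
# stdin: output of `lsblk -dn -o NAME,TRAN` (no header, disks only).
usb_disks() {
    awk '$2 == "usb" { print "/dev/" $1 }'
}
```

Usage: `lsblk -dn -o NAME,TRAN | usb_disks`. If it prints `/dev/sda`, the USB stick took the first `sd` name; the NVMe disk keeps its `nvme0n1`-style name either way.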

-

@george1421 I deploy 5 or 6 650 G8 / 640 G8 machines every day, and the only way to get normal speed is to plug in a USB stick. Maybe it has something to do with caching.

-

@sebastian-roth Hello, sorry for my bad English, I'm French.

In fact, I am imaging to the NVMe disk.

I have the same problem on the 650 G8 and 640 G8. When no USB stick is plugged in, the speed is very slow, but when a USB stick is plugged in, the speed to the NVMe is normal. That’s why I thought it was a file cache problem.

-

@Dungody Thanks for the update and pictures! It definitely looks like you deploy to the NVMe drive. I still can’t see why a USB key plugged into the ProBook would make a difference, though it sounds like it does.

In the picture I notice that you have partclone version 0.2.89. In FOG 1.5.9 we use the newer partclone 0.3.13. So I am wondering which version of FOG you use and if that is also playing a role here.

@Jacob-Gallant Would you want to give that a try as well?

-

@sebastian-roth I'm using FOG 1.5.7, kernel 5.6.18.

-

@dungody said in HP ProBook 640 G8 imaging extremely slowly:

i m using FOG 1.5.7

If you don’t want to upgrade to the latest version of FOG, you could at least try the FOG 1.5.9 init.xz file to see if the updated version of partclone works better with that hardware. I’m still at a loss as to why a USB drive would settle down the system, unless the hardware has some kind of strange power-saving mode where it enters a low-power state when it thinks there is no user input.

Do other USB devices (such as an external mouse) have this kind of impact, or is it only USB storage devices that “cure” this issue?