Feature request for FOG 1.6.x - Replace NFSv3

-

@Sebastian-Roth In the case of socat the term server and client are relative to the direction of data flow. Data always flows from the client to the server (processes). Understand at this point there is no encryption in the mix to add that overhead. With socat ssh is only used to initiate the FOG Server side of the push/pull. No data is flowing across that link.

Since I have the test lab, I decided to test a scp file transfer from the target computer to the FOG Server.

```
# time scp /mnt/t2/r10gb.img root@192.168.10.1:/images/r11gb.img
The authenticity of host '192.168.10.1 (192.168.10.1)' can't be established.
ECDSA key fingerprint is SHA256:OpIsFYWVDCr/ovMlmPPSl46jpT332P3+BHnchdxzTCI.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '192.168.10.1' (ECDSA) to the list of known hosts.
root@192.168.10.1's password:
r10gb.img                 100%   10GB 110.5MB/s   01:32

real    1m40.016s
user    0m43.767s
sys     0m12.531s
```

So on a quiet FOG server and network, scp is about 4 seconds slower than NFS and about 10 seconds slower than socat, but with scp you have the benefit of the data being encrypted. You can see the increased CPU usage of the user space application (scp) compared with the nfs and socat transfers.

-

@george1421 The scp timing is interesting, but I wonder whether the time taken for accepting the key and entering the passphrase might also account for some of the time. Would you mind redoing this test using an SSH key?
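A minimal sketch of what a key-based rerun could look like, so that no prompt time ends up inside the measurement. The key path is a hypothetical choice, and it assumes `ssh-keygen` and `ssh-copy-id` are available on the target computer, which may not be true for FOS. By default the commands are only printed.

```shell
#!/bin/sh
# Sketch only: key-based scp so that no interactive prompt is part of the timing.
# KEY is a hypothetical path; HOST and the file paths are the ones used in this thread.
DRY_RUN="${DRY_RUN:-1}"          # 1 = print commands, anything else = execute them
KEY="${KEY:-/root/.ssh/fog_test_key}"
HOST="${HOST:-root@192.168.10.1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

setup_key() {
    # Create a key without a passphrase and install the public half on the server.
    # This step is interactive once (host key confirmation and root password).
    run ssh-keygen -t ed25519 -N "" -f "$KEY"
    run ssh-copy-id -i "$KEY.pub" "$HOST"
}

copy_with_key() {
    # BatchMode=yes makes ssh fail instead of falling back to a password prompt.
    run scp -i "$KEY" -o BatchMode=yes "$1" "$HOST:$2"
}

setup_key
copy_with_key /mnt/t2/r10gb.img /images/r11gb.img
```

With `DRY_RUN=0` the last line could be wrapped in `time` in the same way as the earlier tests.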

-

@Sebastian-Roth no problem I’ll hit that first thing in the AM. But really a 10 second (total) difference is pretty much a rounding error. The other thing I need to see is if the difference is linear or that 4 seconds difference (scp vs nfs) is just channel setup times. I did find an interesting fact about dd and file creation. You need to have more ram in your system than the size of the file you want to create with dd. I tried to create a 10GB file on a computer with 4GB of ram and it failed. When I went to 16GB of ram I was able to create a 10GB file. I’ll probably cat 2 10GB files to make a 20GB file to see if the difference is linear with scp.

If you look in the posted output, scp actually reported a transfer time of 01:32, which is in line with the speed I’m getting with socat. Now something that might throw a wrench in the works is if scp can’t take an input from STDIN. It would be a shame if scp can only use real files to send. socat can be pipelined.

-

@george1421 said in Feature request for FOG 1.6.x - Replace NFSv3:

I did find an interesting fact about dd and file creation. You need to have more ram in your system than the size of the file you want to create with dd. I tried to create a 10GB file on a computer with 4GB of ram and it failed. When I went to 16GB of ram I was able to create a 10GB file. I’ll probably cat 2 10GB files to make a 20GB file to see if the difference is linear with scp.

I can’t imagine that is really the case. I am sure I have created temporary files using dd way bigger than the size of RAM in my machine. What error did you get?

If you look in the posted output scp actually reported a transfer time of 01:32 which is in line with the speed I’m getting with socat.

Sounds good.

Now something that might throw a wrench in the works is if scp can’t take an input from STDIN. It would be a shame if scp can only use real files to send. socat can be pipelined.

While scp might not be able to, the SSH protocol itself, and therefore the ssh command, is able to pipe pretty much anything through the tunnel that you want.

```
time cat /mnt/t2/r10gb.img | ssh root@192.168.10.1 "cat > /images/r11gb.img"
```

Now that I think of it, we could even use it to tunnel other protocols. Can’t think of a good use of this just yet, but as a dumb example we could even use NFSv4 unencrypted and pipe it through an SSH tunnel (start ssh with port forwarding local port 2049 to FOG server IP:2049 and then NFS mount towards 127.0.0.1).

-

I am personally a fan of an SSH/SCP solution. It’s a very familiar protocol, secure and pretty straightforward. SSH ports are likely already configured in firewalls as well. Also has pretty good error handling.

Tools like socat are cool, but I think a lot of people are not very familiar with them and since you’d need SSH or the like to get it going anyway, it seems like an extra step without any clear benefit (unless I’m missing something).

The only nod towards socat I’d give is that it is likely more reliable in network transfers, but this comes at the cost of needing another port open in the firewall.

It also would be kind of ironic to move away from NFS because of insecure open ports only to then turn around and open an insecure port anyway lol.

-

@Quazz said in Feature request for FOG 1.6.x - Replace NFSv3:

Tools like socat are cool, but I think a lot of people are not very familiar with them and since you’d need SSH or the like to get it going anyway, it seems like an extra step without any clear benefit (unless I’m missing something).

In the initial testing performance between scp/socat/nfs is pretty much the same. Understand I was working with a 10GB file of all zeros so I don’t know the impact of real data on the transfer speeds.

From FOS’ perspective I kind of put nfs and ssh in one camp and socat/netcat into another. With nfs and ssh the target computer can do a push/pull of random files under the direction of the FOG code. With socat there needs to be coordination between the FOG server and the FOS Engine because socat is a throw/catch program. I think it would be easier to use ssh as it kind of parallels the action of NFS.
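To make the throw/catch point concrete, here is a rough sketch of the two halves a socat transfer needs. The port number and the one second wait are assumptions for illustration only, and a real implementation would need a proper handshake instead of a sleep. By default the commands are only printed.

```shell
#!/bin/sh
# Sketch only: socat needs a catcher on the FOG server before the target can throw.
# PORT is a hypothetical value; SERVER is the lab address used in this thread.
DRY_RUN="${DRY_RUN:-1}"          # 1 = print commands, anything else = execute them
SERVER="${SERVER:-192.168.10.1}"
PORT="${PORT:-9000}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

catch_on_server() {
    # ssh is only used to start the listener; -f sends ssh to the background
    # after authentication. The listener writes whatever arrives to the file.
    run ssh -f "root@$SERVER" "socat -u TCP-LISTEN:$PORT,reuseaddr OPEN:$1,creat,trunc"
}

throw_from_target() {
    # Unencrypted data flows directly from the target to the listener.
    run socat -u "OPEN:$1" "TCP:$SERVER:$PORT"
}

catch_on_server /images/r11gb.img
run sleep 1                      # crude wait for the listener to come up
throw_from_target /mnt/t2/r10gb.img
```

With nfs or ssh only the target side has to act, which is the difference described above.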

It also would be kind of ironic to move away from NFS because of insecure open ports only to then turn around and open an insecure port anyway lol.

One option is to move FOS/FOG to nfsv4 and that consolidates everything down to a single well known port. With nfsv4 we can also introduce authentication so the NFS share won’t be just open to the world for writing. NFSv4 won’t address data security in transit, but it will help protect data at rest.

The downside with using port 22 ssh is there may be some policies where a certain encryption structure must be used and changing the sshd in certain circumstances will break imaging. The thought would be to then spin up a new sshd server on a different port so the sshd configuration could be tightly managed by FOG.

I’m not saying there is a right answer yet, only that this is what I see: on protocol alone, all of the methods were within a few seconds of each other with just pure data transfer.

-

@george1421 said in Feature request for FOG 1.6.x - Replace NFSv3:

The downside with using port 22 ssh is there may be some policies where a certain encryption structure must be used and changing the sshd in certain circumstances will break imaging. The thought would be to then spin up a new sshd server on a different port so the sshd configuration could be tightly managed by FOG.

Hmmm, there are pros and cons on both sides with using default SSH on port 22 and spinning up an extra one on another port. Whichever we decide, there will be setups that can’t handle it one way or the other. So I would suggest we try to make it default to port 22 but build scripts and all in such a way that it’s fairly easy for anyone to switch to a non-standard SSH port if needed. @george1421 @Quazz What do you think?

We’ll need to work out a proof of concept over the next weeks to see if it all works anyway.

-

Some reading to consider: https://www.linuxjournal.com/content/encrypting-nfsv4-stunnel-tls

Mentions SSHFS as well (even faster than clear text NFS in their tests??)

I can’t really decide, in the end. Each approach has its own set of downsides and upsides it looks like.

What is most important? Reliability (eg NFS restarting TCP transactions), Security (encrypting the data stream), Maintainability (KISS), Performance (NFS likely slower than SSH pipe)

Additionally, I wonder if we would see differences in performance when we compare transfer performance of a static file vs a data stream. Or perhaps this consideration is irrelevant since more than likely the bottleneck won’t be network transfer anyway, right?

-

@Sebastian-Roth said in Feature request for FOG 1.6.x - Replace NFSv3:

@george1421 said in Feature request for FOG 1.6.x - Replace NFSv3:

The downside with using port 22 ssh is there may be some policies where a certain encryption structure must be used and changing the sshd in certain circumstances will break imaging. The thought would be to then spin up a new sshd server on a different port so the sshd configuration could be tightly managed by FOG.

Hmmm, there are pros and cons on both sides with using default SSH on port 22 and spinning up an extra one on another port. Whichever we decide, there will be setups that can’t handle it one way or the other. So I would suggest we try to make it default to port 22 but build scripts and all in such a way that it’s fairly easy for anyone to switch to a non-standard SSH port if needed. @george1421 @Quazz What do you think?

We’ll need to work out a proof of concept over the next weeks to see if it all works anyway.

I agree with trying to stick to 22 where possible, but to make it configurable. I can imagine some environments have custom ports.
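As a rough illustration of "default to 22 but configurable", something along these lines could sit in the scripts. `FOG_SSH_PORT` is a made-up setting name, not an existing FOG option.

```shell
#!/bin/sh
# Sketch only: default to port 22 unless a (hypothetical) FOG_SSH_PORT setting says otherwise.

ssh_port() {
    port="${FOG_SSH_PORT:-22}"
    # Reject anything that is not a number of at most five digits.
    case "$port" in
        *[!0-9]*|??????*)
            echo "invalid ssh port: $port" >&2
            return 1
            ;;
    esac
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
        echo "ssh port out of range: $port" >&2
        return 1
    fi
    echo "$port"
}

fog_ssh_cmd() {
    # Print the ssh invocation for the given server address.
    port="$(ssh_port)" || return 1
    echo "ssh -p $port root@$1"
}

fog_ssh_cmd 192.168.10.1
```

Note that ssh takes the port as `-p` while scp uses `-P`, so any wrapper would have to handle both.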

-

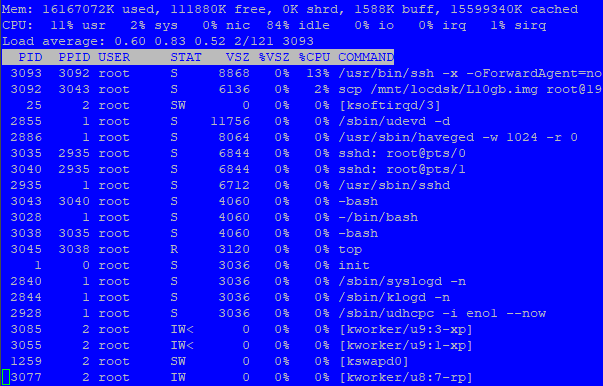

Updated benchmarks. FOG Server 1.5.9 w/kernel 4.19.145 (guess) running on a Dell o7010. Target computer is also a Dell o7010. Both server and target have SSD SATA drives. All copy tests use a 10GB file.

Make 10GB file on target computer to FOG hard drive over NFS

```
# time dd if=/dev/zero of=r10-1gb.img count=1024 bs=10485760
1024+0 records in
1024+0 records out
10737418240 bytes (11 GB, 10 GiB) copied, 93.0698 s, 115 MB/s

real    1m33.072s
user    0m0.013s
sys     0m4.699s
```

Copy file using scp to FOG server x3 (includes entering root password on FOG server)

```
# time scp /mnt/t2/r10gb.img root@192.168.10.1:/images/r11gb.img
The authenticity of host '192.168.10.1 (192.168.10.1)' can't be established.
ECDSA key fingerprint is SHA256:OpIsFYWVDCr/ovMlmPPSl46jpT332P3+BHnchdxzTCI.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '192.168.10.1' (ECDSA) to the list of known hosts.
root@192.168.10.1's password:
r10gb.img                 100%   10GB 111.1MB/s   01:32

real    1m43.380s
user    0m44.117s
sys     0m12.580s

# time scp /mnt/t2/r10gb.img root@192.168.10.1:/images/r11gb.img
root@192.168.10.1's password:
r10gb.img                 100%   10GB 111.1MB/s   01:32

real    1m35.493s
user    0m44.476s
sys     0m12.223s

# time scp /mnt/t2/r10gb.img root@192.168.10.1:/images/r11gb.img
root@192.168.10.1's password:
r10gb.img                 100%   10GB 111.1MB/s   01:32

real    1m35.447s
user    0m44.404s
sys     0m11.946s
```

Timing using piping over ssh instead of scp

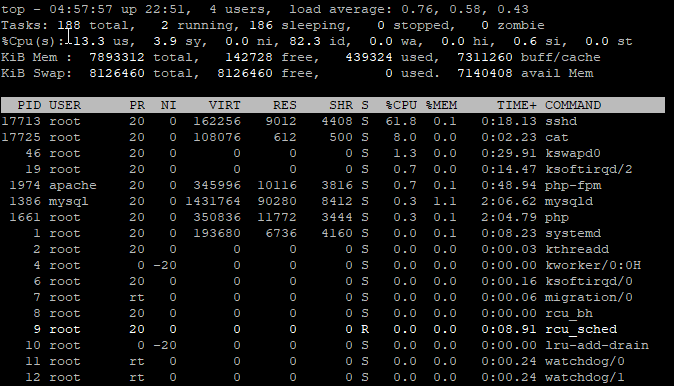

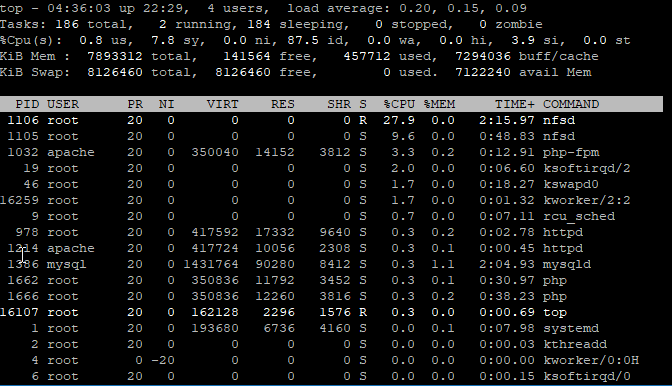

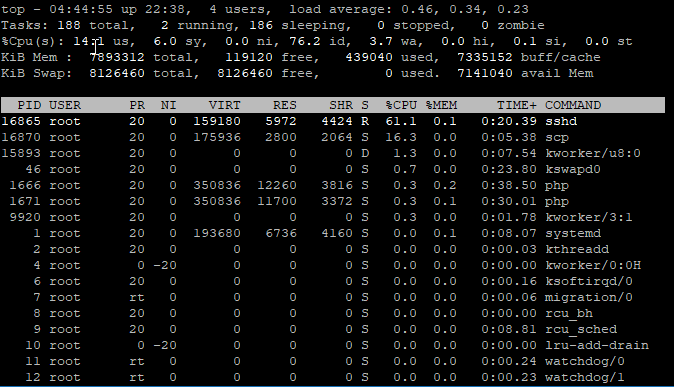

```
# time cat /mnt/t2/r10gb.img | ssh root@192.168.10.1 "cat > /images/r12gb.img"
root@192.168.10.1's password:

real    1m36.133s
user    0m43.906s
sys     0m11.090s

# time cat /mnt/t2/r10gb.img | ssh root@192.168.10.1 "cat > /images/r12gb.img"
root@192.168.10.1's password:

real    1m36.794s
user    0m43.751s
sys     0m12.099s
```

While the CPU load is heavier on both the target computer and the FOG server using ssh, the actual copy times are almost identical between nfs, scp, and ssh. Only the CPU load increased when sshd was involved.

-

@george1421 Would it be better to use SCP or RSYNC?

Can you run an example using RSYNC to establish the “SSH” connection and transfer to see what the FOG Server and Client load looks like?

I think you’ll see the same types of speeds. I think part of the issue with the cat pipe cat “load” is due mostly to the 2 processes being opened plus the addition of the SSH establishment.

If we are just looking to test ssh, scp is the best tool for the job, though rsync will probably give us more configuration options.
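For reference, a sketch of what the rsync variant of the test might look like. rsync uses ssh as its default remote shell, so no extra option is needed to get the "SSH" connection. Whether rsync is available on the target computer is an assumption. By default the command is only printed.

```shell
#!/bin/sh
# Sketch only: push a single file with rsync over ssh, mirroring the scp test.
# SERVER is the lab address used in this thread.
DRY_RUN="${DRY_RUN:-1}"          # 1 = print commands, anything else = execute them
SERVER="${SERVER:-192.168.10.1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

rsync_push() {
    # --progress prints a running transfer rate comparable to the scp output.
    run rsync --progress "$1" "root@$SERVER:$2"
}

rsync_push /mnt/t2/r10gb.img /images/r13gb.img
```

The destination name `r13gb.img` is only chosen to avoid overwriting the files from the earlier runs.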

-

Interesting, I repeated the same test with the 5.6.18 kernel and got faster transfer times.

Kernel 5.6.18

Straight file copy over NFS

```
# time cp r10gb.img /mnt/t2/

real    0m46.336s
user    0m0.052s
sys     0m7.169s

# time cp r10gb.img /mnt/t2/

real    0m48.108s
user    0m0.045s
sys     0m8.881s
```

Now scp

```
# time scp /mnt/t2/r10gb.img root@192.168.10.1:/images/r11gb.img
root@192.168.10.1's password:
r10gb.img                 100% 6875MB 111.1MB/s   01:01

real    1m5.796s
user    0m29.704s
sys     0m6.750s
```

Now piped over ssh

```
# time cat /mnt/t2/r10gb.img | ssh root@192.168.10.1 "cat > /images/r12gb.img"
root@192.168.10.1's password:

real    1m5.241s
user    0m29.134s
sys     0m6.849s

# I had to repeat it a second time just to confirm it was actually 30
# seconds improvement
#
# time cat /mnt/t2/r10gb.img | ssh root@192.168.10.1 "cat > /images/r12gb.img"
root@192.168.10.1's password:

real    1m6.662s
user    0m29.833s
sys     0m6.966s
```

So for a straight nfs copy, kernel 5.6.18 is about 45 seconds faster copying the file. For the ssh route it was about 30 seconds faster with 5.6.18 over 4.19.145.

-

@Tom-Elliott said in Feature request for FOG 1.6.x - Replace NFSv3:

Would it be better to use SCP or RSYNC?

I don’t know the answer at the moment but I can/will surely test it. I have some screen shots of CPU loading while doing these transfers with 5.6.18 kernel. I setup rsyncd on one of my servers and I’m using it to evacuate a second physical server of data. It seems pretty fast moving 3.5GB image files. Just for disclosure this is on a 10GbE network

```
3,515,218,762,752  20%  176.05MB/s    5:17:22 (xfr#70, to-chk=213/284)
```

If the ssh/encryption route is decided, I want to look into the kernel to ensure it has all of the crypto APIs enabled and, if enabled, whether they have an impact on transport times.

-

@george1421 said in Feature request for FOG 1.6.x - Replace NFSv3:

```
root@192.168.10.1's password:
r10gb.img                 100% 6875MB 111.1MB/s   01:01

real    1m5.796s
```

I assume something went wrong with the test file here. You seem to get a faster copy because the file is smaller: 6875 MB vs. 10 GB in the last tests. Transfer rate in scp was and is around 111 MB/s!
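A small guard like the following could catch a short test file before a run is timed. This is just a sketch: the function names are made up, and `wc -c` is used because it is portable, although it may read the whole file on some systems.

```shell
#!/bin/sh
# Sketch only: refuse to benchmark with a test file of the wrong size.

file_size() {
    # Print the size of a file in bytes.
    wc -c < "$1" | tr -d ' '
}

check_size() {
    # $1 = file, $2 = expected size in bytes
    actual="$(file_size "$1")"
    if [ "$actual" -ne "$2" ]; then
        echo "size mismatch for $1: expected $2 bytes, got $actual" >&2
        return 1
    fi
}
```

For the 10GB file used in these tests the call would be `check_size /mnt/t2/r10gb.img 10737418240`.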

-

@Tom-Elliott said in Feature request for FOG 1.6.x - Replace NFSv3:

Would it be better to use SCP or RSYNC?

In essence we need something that is able to pipe contents of a single file to partclone for writing to disk or the other way round. I don’t see how rsync (used for many files) or scp would help us to do this. While you can actually scp into/from stdin/out I can’t see this being much of a gain compared to using sshfs where we mount the remote filesystem directly.
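A minimal sketch of the sshfs idea, assuming sshfs and FUSE are available on the target computer (which would have to be checked for FOS). The mount point is the same `/images` path used with NFS today. By default the commands are only printed.

```shell
#!/bin/sh
# Sketch only: mount the server's /images over sshfs instead of NFS.
# SERVER is the lab address used in this thread; MOUNTPOINT mirrors the NFS setup.
DRY_RUN="${DRY_RUN:-1}"          # 1 = print commands, anything else = execute them
SERVER="${SERVER:-192.168.10.1}"
MOUNTPOINT="${MOUNTPOINT:-/images}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

mount_images() {
    run mkdir -p "$MOUNTPOINT"
    # reconnect asks sshfs to re-establish the connection if it drops.
    run sshfs "root@$SERVER:/images" "$MOUNTPOINT" -o reconnect
}

unmount_images() {
    run fusermount -u "$MOUNTPOINT"
}

mount_images
unmount_images
```

Once mounted, the image files can be read and written like local files, so the existing pipeline to and from partclone would not need to know about ssh at all.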

-


@george1421 said in Feature request for FOG 1.6.x - Replace NFSv3:

Their testing shows that ubuntu 20.04 moves data the fastest, then 18.04, CentOS 8 and finally CentOS 7 is the slowest.

What protocol are they using? Some proprietary stuff I’d imagine. That would break it down to subsystem IO being faster on newer kernels and Ubuntu leveraging some kind of optimized IO?!

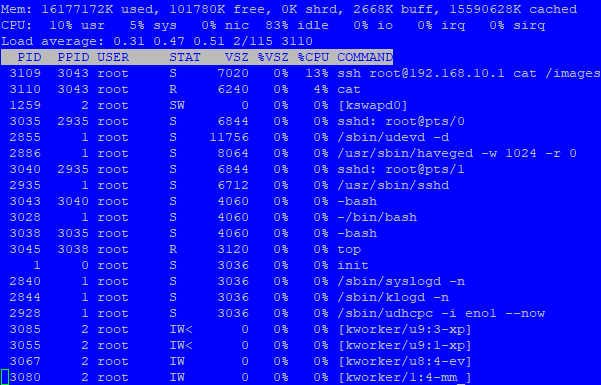

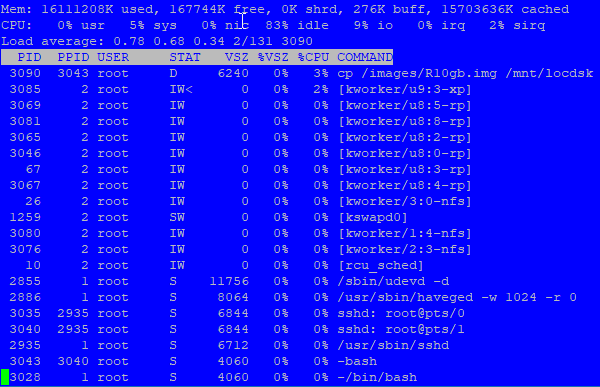

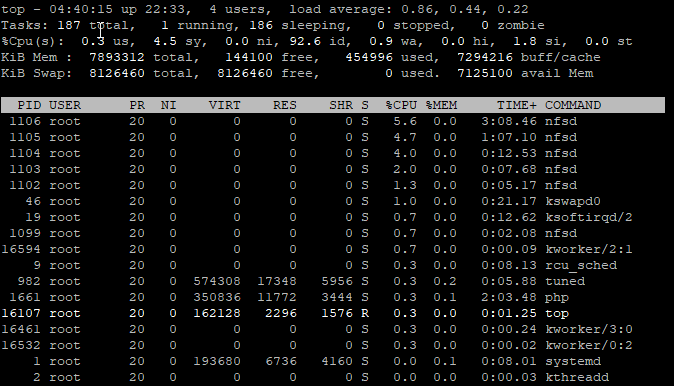

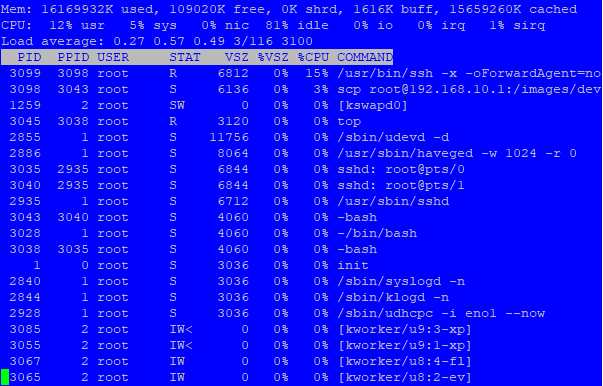

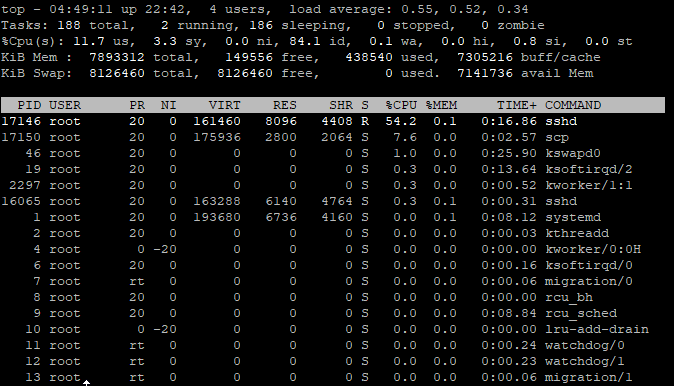

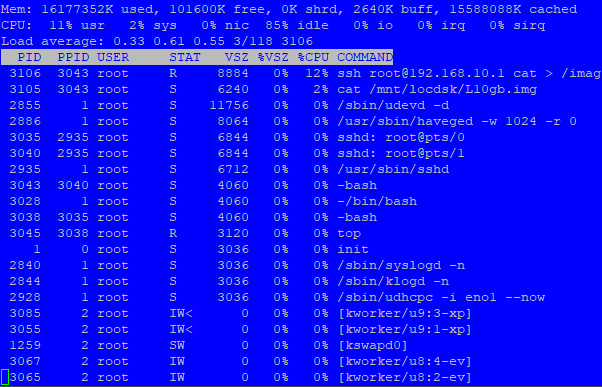

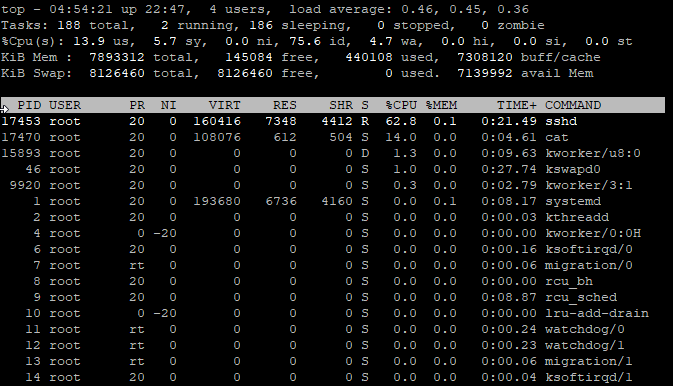

FOG Server ssh pipeline

That picture shows both a scp and an ssh command. So either one is spawned from the other (kind of likely when I look at the many command line options and PIDs) or you ran two commands in parallel. The headline “ssh pipeline” doesn’t fit, I would think.

-

@Sebastian-Roth I’m going to redo those stats this morning and delete the first ones. I think I botched getting the pictures aligned with the tests. I’ll fully document the testing protocol so it can be duplicated if we need verification.

Transfer rate in scp was and is around 111 MB/s!

Understand that both the fog server and target computer are on an isolated network with their main task being file transfer, not servicing 100s of client computers with the fog client installed. The 111MB/s tells me the scp speed is being bottlenecked by the network (1GbE ~= 125MB/s theoretical max). I’ll test local and remote write speeds on each system this morning.
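The arithmetic behind that can be sketched as follows, using the byte count and rounded run time from the NFS dd test. It uses integer math and decimal megabytes (the same units dd reports), and ignores protocol overhead, so treat the numbers as approximate.

```shell
#!/bin/sh
# Sketch only: compare a measured transfer against the raw line rate of the link.

link_max_mbs() {
    # $1 = link speed in Mbit/s; 8 bits per byte
    echo $(( $1 / 8 ))
}

throughput_mbs() {
    # $1 = bytes transferred, $2 = whole seconds taken
    echo $(( $1 / $2 / 1000000 ))
}

utilisation_pct() {
    # $1 = measured MB/s, $2 = link maximum MB/s
    echo $(( $1 * 100 / $2 ))
}

max="$(link_max_mbs 1000)"
rate="$(throughput_mbs 10737418240 93)"
echo "link max: ${max} MB/s, measured: ${rate} MB/s, utilisation: $(utilisation_pct "$rate" "$max")%"
# prints: link max: 125 MB/s, measured: 115 MB/s, utilisation: 92%
```

At that level of utilisation there is not much headroom left on a 1GbE link for any of the protocols to differentiate themselves.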

-

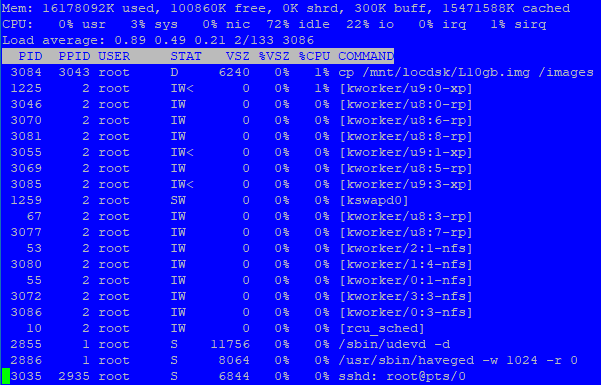

Testing systems: Dell o7010 for both the fog server and the client computer. Both systems have local SSD SATA drives. The target computer is running a customized linux kernel 5.6.18 and a customized init, but both are based on FOG 1.5.9. The customization was done to aid in debugging and benchmarking the systems.

Testing script

```
mkdir /mnt/locdsk
mount /dev/sda1 /mnt/locdsk
mkdir /images
mount -o nolock,proto=tcp,rsize=32768,wsize=32768,intr,noatime "192.168.10.1:/images/dev" /images

#Test 1 creation of local and remote file by target computer
time dd if=/dev/zero of=/mnt/locdsk/L10gb.img count=1024 bs=10485760
time dd if=/dev/zero of=/images/R10gb.img count=1024 bs=10485760

#Test 2 cp files to and from server
time cp /mnt/locdsk/L10gb.img /images
time cp /mnt/locdsk/L10gb.img /images/L10gb-1.img
time cp /images/R10gb.img /mnt/locdsk
time cp /images/R10gb.img /mnt/locdsk/R10gb-1.img

#Test 3 scp files to and from server
time scp /mnt/locdsk/L10gb.img root@192.168.10.1:/images/L10gb-2.img
time scp /mnt/locdsk/L10gb.img root@192.168.10.1:/images/L10gb-3.img
time scp root@192.168.10.1:/images/dev/R10gb.img /mnt/locdsk/R10gb-2.img
time scp root@192.168.10.1:/images/dev/R10gb.img /mnt/locdsk/R10gb-3.img

#Test 4 ssh pipeline to and from server
time cat /mnt/locdsk/L10gb.img | ssh root@192.168.10.1 "cat > /images/L10gb-4.img"
time cat /mnt/locdsk/L10gb.img | ssh root@192.168.10.1 "cat > /images/L10gb-5.img"
time ssh root@192.168.10.1 "cat /images/dev/R10gb.img" | cat > /mnt/locdsk/L10gb-6.img
time ssh root@192.168.10.1 "cat /images/dev/R10gb.img" | cat > /mnt/locdsk/L10gb-7.img
```

Testing results as captured.

```
## Building the test files both local and remote
# time dd if=/dev/zero of=/mnt/locdsk/L10gb.img count=1024 bs=10485760
10737418240 bytes (11 GB, 10 GiB) copied, 20.2216 s, 531 MB/s
**real    0m20.223s
user    0m0.001s
sys     0m6.460s

# time dd if=/dev/zero of=/images/R10gb.img count=1024 bs=10485760
10737418240 bytes (11 GB, 10 GiB) copied, 93.3867 s, 115 MB/s
**real    1m33.390s
user    0m0.003s
sys     0m5.369s

## Confirm that files exist and are properly sized
# ls -la /mnt/locdsk/
total 10485785
drwxr-xr-x    3 root     root          4096 Oct  9 08:25 .
drwxr-xr-x    3 root     root          1024 Oct  9 08:23 ..
-rw-r--r--    1 root     root   10737418240 Oct  9 08:26 L10gb.img
drwx------    2 root     root         16384 Jan 10  2013 lost+found
# ls -la /images/
total 10519109
drwxrwxrwx    3 sshd     root            63 Oct  9  2020 .
drwxr-xr-x   19 root     root          1024 Oct  9 08:23 ..
-rwxrwxrwx    1 sshd     root             0 Sep 28 13:36 .mntcheck
-rw-r--r--    1 root     root   10737418240 Oct  9  2020 R10gb.img
drwxrwxrwx    2 sshd     root            26 Sep 28 13:36 postinitscripts
```

```
### Copy Local to Remote ###
# time cp /mnt/locdsk/L10gb.img /images
**real    1m34.821s
user    0m0.083s
sys     0m7.314s

# time cp /mnt/locdsk/L10gb.img /images/L10gb-1.img
**real    1m34.759s
user    0m0.046s
sys     0m6.801s
```

```
### Copy Remote to Local ###
# time cp /images/R10gb.img /mnt/locdsk
**real    1m41.710s
user    0m0.084s
sys     0m11.327s

# time cp /images/R10gb.img /mnt/locdsk/R10gb-1.img
**real    1m41.520s
user    0m0.095s
sys     0m11.392s
```

```
### SCP Local to Remote ###
# time scp /mnt/locdsk/L10gb.img root@192.168.10.1:/images/L10gb-2.img
The authenticity of host '192.168.10.1 (192.168.10.1)' can't be established.
ECDSA key fingerprint is SHA256:OpIsFYWVDCr/ovMlmPPSl46jpT332P3+BHnchdxzTCI.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '192.168.10.1' (ECDSA) to the list of known hosts.
root@192.168.10.1's password:
L10gb.img                 100%   10GB 110.0MB/s   01:33
**real    1m40.007s
user    0m44.460s
sys     0m13.378s

# time scp /mnt/locdsk/L10gb.img root@192.168.10.1:/images/L10gb-3.img
root@192.168.10.1's password:
L10gb.img                 100%   10GB 109.5MB/s   01:33
**real    1m37.404s
user    0m44.420s
sys     0m13.068s
```

```
### SCP Remote to Local ###
# time scp root@192.168.10.1:/images/dev/R10gb.img /mnt/locdsk/R10gb-2.img
root@192.168.10.1's password:
R10gb.img                 100%   10GB 101.9MB/s   01:40
**real    1m44.166s
user    0m43.986s
sys     0m22.887s

# time scp root@192.168.10.1:/images/dev/R10gb.img /mnt/locdsk/R10gb-3.img
root@192.168.10.1's password:
R10gb.img                 100%   10GB 102.0MB/s   01:40
**real    1m44.620s
user    0m43.437s
sys     0m23.061s
```

```
### SSH Pipeline Local to Remote ###
# time cat /mnt/locdsk/L10gb.img | ssh root@192.168.10.1 "cat > /images/L10gb-4.img"
root@192.168.10.1's password:
**real    1m35.562s
user    0m42.701s
sys     0m12.975s

# time cat /mnt/locdsk/L10gb.img | ssh root@192.168.10.1 "cat > /images/L10gb-5.img"
root@192.168.10.1's password:
**real    1m35.749s
user    0m43.478s
sys     0m11.166s
```

```
### SSH Pipeline Remote to Local ###
# time ssh root@192.168.10.1 "cat /images/dev/R10gb.img" | cat > /mnt/locdsk/L10gb-6.img
root@192.168.10.1's password:
**real    1m43.745s
user    0m44.738s
sys     0m20.828s

# time ssh root@192.168.10.1 "cat /images/dev/R10gb.img" | cat > /mnt/locdsk/L10gb-7.img
root@192.168.10.1's password:
**real    1m43.564s
user    0m43.976s
sys     0m21.966s
```
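To compare the `real` values from these runs without doing the arithmetic by hand, a small helper like this could be used. It is only a sketch: the function names are made up, and it assumes the `XmY.YYYs` format printed by `time` in the captures.

```shell
#!/bin/sh
# Sketch only: turn "1m37.404s" style values into seconds and compare two runs.

to_seconds() {
    # Split on "m" and "s": field 1 is minutes, field 2 is seconds.
    echo "$1" | awk -F'[ms]' '{ printf "%.3f\n", $1 * 60 + $2 }'
}

diff_seconds() {
    # Print how many seconds run $1 took longer than run $2.
    awk -v a="$(to_seconds "$1")" -v b="$(to_seconds "$2")" \
        'BEGIN { printf "%.3f\n", a - b }'
}

# scp local to remote (second run) versus cp over NFS (second run)
diff_seconds 1m37.404s 1m34.759s
```

Keep in mind that the scp and ssh timings include the time spent typing the root password, so small differences between protocols should not be over-interpreted.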