@Tom-Elliott said in HP Z8 Fury G5 Workstation Desktop PXE boot:

@alessandro19884 Can you change your bootfile from snponly.efi to ipxe.efi and see if that works better?

This is the other thing I was going to suggest

@alessandro19884 After updating to FOG 1.6, you’ll want to re-do the download of the latest kernel and init.

The working-1.6 branch already has the latest iPXE pull.

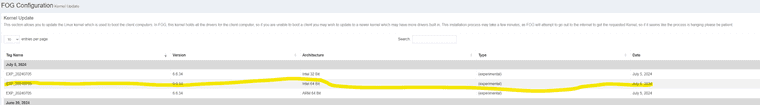

I’d suggest this kernel

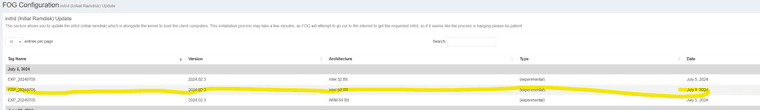

And this init

@alessandro19884 That model and setup should work on 1.5.10 as I recall. We have lots of Z2 Gx machines.

I would for sure suggest updating to the latest kernel and init as @george1421 mentioned. This sounds like a driver issue within FOS.

For 1.5.10 you should be able to download the latest experimental bzImage kernel from the fog kernel update screen under configuration.

You’ll have to update the init manually, there’s a doc on that here: https://docs.fogproject.org/en/latest/manual-kernel-upgrade

If you don’t want to do the ipxe compile yourself you could also give the working-1.6 branch a try as it has the latest pull of ipxe. It’s a new web UI too.

See https://docs.fogproject.org/en/latest/installation/server/install-fog-server/#choosing-a-fog-version for info on selecting a git branch to upgrade from.

The latest versions of the dev-branch and working-1.6 also both have a GUI for the init.xz updates.

Also as far as logs to check, there is a logviewer in the fog configuration menu that is very helpful.

Some locations of logs with potentially helpful info:

/opt/fog/log #various logs here

/var/log/php-fpm/www-error.log
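
If you’d rather look at those from a shell than from the log viewer, here is a minimal sketch. `tail_logs` is a helper name I made up for illustration, not something that ships with FOG; it just prints the last few lines of every `*.log` file in a directory.

```bash
# Print the last N lines (default 20) of each *.log file in a directory.
# tail_logs is a hypothetical helper, not part of FOG.
tail_logs() {
    dir=$1
    lines=${2:-20}
    for f in "$dir"/*.log; do
        [ -f "$f" ] || continue      # skip when the glob matched nothing
        printf '==> %s <==\n' "$f"
        tail -n "$lines" "$f"
    done
}
```

On the FOG server that would be something like `tail_logs /opt/fog/log 50`, plus a plain `tail -n 50 /var/log/php-fpm/www-error.log` for the PHP log. The PHP log path can differ between distros, so treat it as an assumption.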

@george1421 https://docs.fogproject.org/en/latest/compile_ipxe_binaries

Just throwing in the permalink to the doc on this process.

@EuroEnglish I haven’t been able to recreate the issue. I would suggest trying an update to the kernel and init. If you’re on the latest dev-branch version, there should be a July 5th dated bzImage and init available from the kernel update and init update menus under FOG Configuration.

The only other things I can think of: maybe your Windows 11 partition layout is non-standard? It should all be booted into RAM at that point though, so that doesn’t make much sense. It’s possible that the EFI partition is too small or something, but that’s just a harebrained theory. The other thing could be a custom background image; maybe it stops liking the size of it?

Hopefully trying the newer kernel just solves the problem though.

@EuroEnglish Sorry I’ve been away on vacation on and off the last week.

I will try to recreate this on my setup but I am running Fog 1.6 (you can try it out too by checking out the ‘working-1.6’ branch instead of the dev branch).

And I am using a newer kernel and init. One of the features of the newest kernel and init is proper support for MAC address pass-through. If you’re not actually registering the hosts, this won’t make much difference, but it could still give you some benefit.

We have a spare L13 or L390 laptop, so I will load Windows 11 on it, leave it unregistered, and see what happens.

@EuroEnglish I have not experienced this issue at all as I’ve upgraded machines to windows 11. I even have a few L13 yogas, and they aren’t doing this.

What version of Fog are you running?

What version of iPXE is your FOG running? (The version displays when booting to PXE, like iPXE 1.2.1 or something like that.)

Also, are you saying it’s slow in the actual FOG PXE menu, or while getting into the PXE menu? It sounds like the menu itself: you’ve booted to FOG via PXE, you see the menu, and it freezes up when you try to select an option on a registered host?

What kind of adapter are you using to get the L13 to boot to pxe? USB-C? The special lenovo proprietary adapter?

Do you have mac address pass-thru enabled in the bios?

What version of the FOS kernel/init are you using? You can find this in the FOG GUI under configuration I believe, if you have a recent enough version.

@professorb24 If they are routable, you need to make sure the DHCP options are set for both vlans/scopes.

If you can give more info on what is and isn’t working we can give you more directed help.

Like do you have it working in 1 of the 2 vlans?

@professorb24

I use fog within multiple VLANs but they are all routable to one another.

If you have isolated VLANs, you’d need at least a storage node on each of the other VLANs. You could then maybe make that work with multiple adapters: replication would take place on an adapter in the same VLAN as the master, and imaging would take place on the other VLAN with a separate adapter.

The steps to configure routing depend on your network infrastructure and can involve switch config and firewall config.

@pbriec The specs of my lenovo adapter also state WOL is supported but I can’t get it to work from off or from sleep with or without FOG being the one to send the wake packet.

But I am able to get WOL to work on a standard desktop from Fog without issue now. So do another pull of working-1.6 to get fog’s WOL task updated.

I’d suggest running it without -y to make sure you get prompted to do any database schema updates via the web-ui. It’s not often that you have to push that button but there are for sure some when upgrading from 1.5.x to 1.6.x. Unless @Tom-Elliott has added something to the installer to make -y automate the schema updates.

    cd /path/to/fog/installer
    git checkout working-1.6
    git pull
    cd bin
    sudo ./installfog.sh

@george1421 I don’t often use the s0 or s3 sleep on my laptop, I just go full on full off. I don’t have a lot of need for WOL to work on laptops in our environment so I’m not too worried about it, but I’ll test wake from sleep mode.

@pbriec The specs say it does WOL from s0/s3 which are sleep modes, not full off modes.

I was able to recreate the issue where the WOL task wouldn’t delete, which was causing computers to boot to FOG and show that error screen. @Tom-Elliott believes he has that fixed (I’m about to test that).

I did confirm that adding WOL to a normal task (like image deploy or inventory) works as expected in the newer FOG, so WOL functionality still works the same. I tested that on a standard desktop with built-in ethernet.

@pbriec I can confirm that these sql commands fix the issue with the snapin table ajax error.

I am trying to recreate your wol issues too.

I am unable to get wol with or without fog on my lenovo laptop with the lenovo branded usb-c ethernet adapter. Are you using a usb/usb-c adapter for ethernet or are you using a docking station?

@luilly23 said in Cannot find disk on system (get harddisk) - Dell Latitude 3140:

@Kureebow Dell’s website says storage can be UFS, eMMC, or SSD.

What’s on your laptop?

https://www.dell.com/en-us/search/latitude 3140

Perhaps there is still no fog or partclone support for UFS or eMMC storage.

I’ve previously been able to image with partclone on eMMC. I’ve moved away from such devices as we found them painfully slow for our needs, but if you’re having issues with that I’d be happy to help.

@Kureebow said in Cannot find disk on system (get harddisk) - Dell Latitude 3140:

@luilly23 I cant seem to find anything in the fog wiki for UFS being unsupported.

While not everything from the wiki has been migrated to it just yet, docs.fogproject.org is the new home of our docs. Also posts within this forum are another great place to look.

@Kureebow UFS is supported in the latest dev branch, you could also just download the latest kernel and init and that may do the trick.

The Surface Go 4 has UFS storage and we had to update the kernel config to support UFS drives. See https://forums.fogproject.org/topic/17112/surface-go-4-incompatible/2?_=1716208953314

and

https://github.com/FOGProject/fos/pull/78

and

https://github.com/FOGProject/fos/commit/71b1a3a46c43b61b692e31de21754dfc55606b64 and https://github.com/FOGProject/fos/blob/dc9656b08f369f9746372020456158d95cd2e0fa/configs/kernelx64.config#L3093-L3100

In that post you’ll also see that UFS, at least on the Surface Go 4, only supports native 4k blocks. That means if you are making your image on a VM (VMware for sure), you’re partitioning with 512e blocks instead of 4k blocks. 512e (e for emulated) is still the most common block size, as it allows for better backwards compatibility while still using “better” 4k block storage on your disk in the background.

This matters because you won’t be able to correctly deploy a 512 block image to a 4k block disk, the block numbers won’t align properly and it will either completely fail or it will just not resize the disk correctly at the end.
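
A quick way to spot that mismatch before deploying is to compare the `sector-size:` header in the image’s `d1.partitions` file with the logical sector size of the target disk. This is only a sketch: `dump_sector_size` and `check_sector_match` are names I made up, and it assumes the partition dump carries a `sector-size:` header like the one in my debug output (a dump without that header is reported as unknown).

```bash
# Read the "sector-size:" header from an sfdisk-style dump such as d1.partitions.
# Prints nothing if the header is missing. (Hypothetical helper.)
dump_sector_size() {
    awk -F': *' '$1 == "sector-size" { print $2; exit }' "$1"
}

# Compare the image's sector size with the target disk's logical sector size.
# $1 = path to the dump, $2 = logical sector size of the target in bytes.
check_sector_match() {
    image_ss=$(dump_sector_size "$1")
    target_ss=$2
    if [ -z "$image_ss" ]; then
        echo "unknown"
    elif [ "$image_ss" -eq "$target_ss" ]; then
        echo "match"
    else
        echo "mismatch"
    fi
}
```

In a debug session the second argument could come from `blockdev --getss /dev/sda` or `cat /sys/block/sda/queue/logical_block_size`, for example `check_sector_match /images/4KDisk-Base-Dev/d1.partitions "$(blockdev --getss /dev/sda)"`. I haven’t verified that `blockdev` is present in every FOS init, so the sysfs file is the safer bet.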

I ended up maintaining a separate 4kn image and had to get approval to buy a separate surface go 4 to maintain the image on. On the plus side, that did give me the motivation to dial in my image creation process further with lots of automation.

I imagine there is a VM hypervisor out there that allows for setting the block size, but I know for sure that VMware doesn’t. I found that bhyve within FreeBSD did have a method for this, but it required other workarounds for getting around Windows 11 security requirements, and I didn’t want to base my image off something with security workarounds.

In the end I am maintaining a separate image. I was able to get management to let us buy a separate Surface Go 4 for maintaining the 4k disk image.

I found that bhyve based VMs can be set to 4k blocks but it was cumbersome to get it to boot to fog to capture the image at the end. And when that image was deployed, it did not expand on the surface go.

Some info from the debug session

cat /images/4KDisk-Base-Dev/d1.original.fstypes

/dev/vda3 ntfs

[Fri Jan 26 root@fogclient ~]# cat /images/4KDisk-Base-Dev/d1.partitions

label: gpt

label-id: 9865AAFC-B984-4860-ACF5-4D6F2513747D

device: /dev/vda

unit: sectors

first-lba: 6

last-lba: 16777210

sector-size: 4096

/dev/vda1 : start= 256, size= 76800, type=C12A7328-F81F-11D2-BA4B-00A0C93EC93B, uuid=7C4743B5-7150-4672-B521-7B537528D7E7, name="EFI system partition", attrs="GUID:63"

/dev/vda2 : start= 77056, size= 4096, type=E3C9E316-0B5C-4DB8-817D-F92DF00215AE, uuid=F6216A84-0172-4445-B616-E36DFA20C731, name="Microsoft reserved partition", attrs="GUID:63"

/dev/vda3 : start= 81152, size= 16501760, type=EBD0A0A2-B9E5-4433-87C0-68B6B72699C7, uuid=B6DA06DC-A5D0-434C-A6FE-494A1EFB515E, name="Basic data partition"

/dev/vda4 : start= 16582912, size= 193792, type=DE94BBA4-06D1-4D40-A16A-BFD50179D6AC, uuid=7BB90563-4BB7-4281-98EA-3FF4BCF1FCA5, attrs="RequiredPartition GUID:63"

[Fri Jan 26 root@fogclient ~]# cat /images/4KDisk-Base-Dev/d1.minimum.partitions

label: gpt

label-id: 9865AAFC-B984-4860-ACF5-4D6F2513747D

device: /dev/vda

unit: sectors

first-lba: 6

last-lba: 16777210

sector-size: 4096

/dev/vda1 : start= 256, size= 76800, type=C12A7328-F81F-11D2-BA4B-00A0C93EC93B, uuid=7C4743B5-7150-4672-B521-7B537528D7E7, name="EFI system partition", attrs="GUID:63"

/dev/vda2 : start= 77056, size= 4096, type=E3C9E316-0B5C-4DB8-817D-F92DF00215AE, uuid=F6216A84-0172-4445-B616-E36DFA20C731, name="Microsoft reserved partition", attrs="GUID:63"

/dev/vda3 : start= 81152, size= 16501760, type=EBD0A0A2-B9E5-4433-87C0-68B6B72699C7, uuid=B6DA06DC-A5D0-434C-A6FE-494A1EFB515E, name="Basic data partition"

/dev/vda4 : start= 16582912, size= 193792, type=DE94BBA4-06D1-4D40-A16A-BFD50179D6AC, uuid=7BB90563-4BB7-4281-98EA-3FF4BCF1FCA5, attrs="RequiredPartition GUID:63"

[Fri Jan 26 root@fogclient ~]# cat /images/4KDisk-Base-Dev/d1.fixed_size_partitions

1:2:4

[Fri Jan 26 root@fogclient ~]# cat /images/4KDisk-Base-Dev/d1.

d1.fixed_size_partitions d1.minimum.partitions d1.original.swapuuids d1.shrunken.partitions

d1.mbr d1.original.fstypes d1.partitions

[Fri Jan 26 root@fogclient ~]# cat /images/4KDisk-Base-Dev/d1.shrunken.partitions

label: gpt

label-id: 9865AAFC-B984-4860-ACF5-4D6F2513747D

device: /dev/vda

unit: sectors

first-lba: 6

last-lba: 16777210

sector-size: 4096

/dev/vda1 : start= 256, size= 76800, type=C12A7328-F81F-11D2-BA4B-00A0C93EC93B, uuid=7C4743B5-7150-4672-B521-7B537528D7E7, name="EFI system partition", attrs="GUID:63"

/dev/vda2 : start= 77056, size= 4096, type=E3C9E316-0B5C-4DB8-817D-F92DF00215AE, uuid=F6216A84-0172-4445-B616-E36DFA20C731, name="Microsoft reserved partition", attrs="GUID:63"

/dev/vda3 : start= 81152, size= 16501760, type=EBD0A0A2-B9E5-4433-87C0-68B6B72699C7, uuid=B6DA06DC-A5D0-434C-A6FE-494A1EFB515E, name="Basic data partition"

/dev/vda4 : start= 16582912, size= 193792, type=DE94BBA4-06D1-4D40-A16A-BFD50179D6AC, uuid=7BB90563-4BB7-4281-98EA-3FF4BCF1FCA5, attrs="RequiredPartition GUID:63"

gdisk -l /dev/sda

GPT fdisk (gdisk) version 1.0.8

Partition table scan:

MBR: protective

BSD: not present

APM: not present

GPT: present

Found valid GPT with protective MBR; using GPT.

Disk /dev/sda: 31246336 sectors, 119.2 GiB

Model: KLUDG4UHGC-B0E1

Sector size (logical/physical): 4096/4096 bytes

Disk identifier (GUID): 9865AAFC-B984-4860-ACF5-4D6F2513747D

Partition table holds up to 128 entries

Main partition table begins at sector 2 and ends at sector 5

First usable sector is 6, last usable sector is 31246330

Partitions will be aligned on 256-sector boundaries

Total free space is 14469877 sectors (55.2 GiB)

Number Start (sector) End (sector) Size Code Name

1 256 77055 300.0 MiB EF00 EFI system partition

2 77056 81151 16.0 MiB 0C01 Microsoft reserved ...

3 81152 16582911 62.9 GiB 0700 Basic data partition

4 16582912 16776703 757.0 MiB 2700

It’s a 128 GB drive and the image came from a 64 GB drive. I expected it to expand to 128 GB.
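
For anyone checking the math, here is a small sketch that turns the sector counts from the gdisk output into bytes and GiB using the 4096-byte sector size. The two function names are just mine for illustration.

```bash
# Convert a sector count to bytes for a given sector size.
sectors_to_bytes() {
    echo $(( $1 * $2 ))
}

# Convert bytes to GiB with one decimal place (awk does the floating point).
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.1f\n", b / (1024 * 1024 * 1024) }'
}

sectors_to_bytes 31246336 4096   # whole disk:        127984992256
sectors_to_bytes 16501760 4096   # partition 3:       67591208960
sectors_to_bytes 14469877 4096   # unallocated space: 59268616192
bytes_to_gib 127984992256        # 119.2
bytes_to_gib 59268616192         # 55.2
```

Partition 3 comes out at 67591208960 bytes, which is exactly what ntfsresize reports as the current volume size. So both the partition and the filesystem are still at the captured size, and the roughly 55.2 GiB of free space is what the expansion should have picked up.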

ntfsresize info on parts 4 and 3

ntfsresize --info /dev/sda4

ntfsresize v2022.10.3 (libntfs-3g)

Device name : /dev/sda4

NTFS volume version: 3.1

Cluster size : 4096 bytes

Current volume size: 793772032 bytes (794 MB)

Current device size: 793772032 bytes (794 MB)

Checking filesystem consistency ...

100.00 percent completed

Accounting clusters ...

Space in use : 14 MB (1.7%)

Collecting resizing constraints ...

You might resize at 13193216 bytes or 14 MB (freeing 780 MB).

Please make a test run using both the -n and -s options before real resizing!

[Fri Jan 26 root@fogclient ~]# ntfsresize --info /dev/sda3

ntfsresize v2022.10.3 (libntfs-3g)

Device name : /dev/sda3

NTFS volume version: 3.1

Cluster size : 4096 bytes

Current volume size: 67591208960 bytes (67592 MB)

Current device size: 67591208960 bytes (67592 MB)

Checking filesystem consistency ...

100.00 percent completed

Accounting clusters ...

Space in use : 24382 MB (36.1%)

Collecting resizing constraints ...

You might resize at 24381546496 bytes or 24382 MB (freeing 43210 MB).

Please make a test run using both the -n and -s options before real resizing!

@Ceregon I’ve never messed with cloning a raid array. Anything can be done, but whether or not it’s going to work with built-in stuff is a different question.

I imagine you have vroc/vmd enabled in the bios on the machine where you’re deploying already. I’ve never got to play with Vroc but I’m familiar with it, just wasn’t able to convince management to buy me the stuff to try it a few years back.

My first guess is that /dev/md124 doesn’t exist because the RAID volume doesn’t exist yet, but it sounds like you found that in a debug session on a host you’re trying to deploy to, so that’s probably out. I do still wonder if the VROC volume needs to be created beforehand to be deployed to, but I don’t have a full understanding of when that volume is made.
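
One way to check that from a debug session is to see which md arrays the kernel has actually assembled. This is a rough sketch that reads `/proc/mdstat`; the helper names are made up, and I’m assuming the VROC volume shows up as a normal md device there, which I haven’t been able to test myself.

```bash
# List md array names from mdstat-formatted text (defaults to /proc/mdstat).
# Hypothetical helper, not part of FOS.
list_md_arrays() {
    awk '/^md[0-9_a-zA-Z]* :/ { print $1 }' "${1:-/proc/mdstat}"
}

# Succeed if the named array appears in that list.
# $1 = array name (e.g. md124), $2 = optional mdstat file.
md_array_present() {
    list_md_arrays "${2:-/proc/mdstat}" | grep -qx "$1"
}
```

Something like `md_array_present md124 && echo "md124 is assembled"` on both the capture host and the deploy host would show whether the volume only exists on one of them. `[ -b /dev/md124 ]` is a quick follow-up to confirm the block device node is there.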

My next guess would be that a RAID array is a multiple disk system, so the image needs to be captured in multiple disk mode.

Do you have different disk sizes for these RAID volumes? Would capturing with multiple disk or dd be an option?

In theory a RAID is a single volume, and you may be able to capture it correctly and it sounds like you’ve found others in the forum that have done that?

Other possibility is the need for different VROC drivers in the bzImage kernel, but I feel like if that was the case, then you wouldn’t be able to see the disk at all when capturing.

You could also capture in debug mode and mount the windows drive before starting the capture to see if you can read stuff?

This is from part of a postdownload script that will mount the windows disk to the path /ntfs

    # Load the FOS helper functions used below
    . /usr/share/fog/lib/funcs.sh

    mkdir -p /ntfs
    getHardDisk
    getPartitions $hd
    for part in $parts; do
        # Make sure nothing is left mounted from the previous iteration
        umount /ntfs >/dev/null 2>&1
        fsTypeSetting "$part"
        case $fstype in
            ntfs)
                dots "Testing partition $part"
                ntfs-3g -o force,rw $part /ntfs
                ntfsstatus="$?"
                if [[ ! $ntfsstatus -eq 0 ]]; then
                    echo "Skipped"
                    continue
                fi
                # Only keep the mount if this partition holds a Windows directory
                if [[ ! -d /ntfs/windows && ! -d /ntfs/Windows && ! -d /ntfs/WINDOWS ]]; then
                    echo "Not found"
                    umount /ntfs >/dev/null 2>&1
                    continue
                fi
                echo "Success"
                break
                ;;
            *)
                echo " * Partition $part not NTFS filesystem"
                ;;
        esac
    done
    # Stop with an error if the last NTFS mount attempt failed
    if [[ ! $ntfsstatus -eq 0 ]]; then
        echo "Failed"
        debugPause
        handleError "Failed to mount $part ($0)\n Args: $*"
    fi
    echo "Done"

Also, hot tip: once you’re in debug mode, you can run passwd to set a root password for that debug session, then run ifconfig to get the IP. After that you can SSH into the debug session with ssh root@ip and enter the password you set when prompted. From there you can copy and paste this stuff, and it’s a lot easier to copy the output or take screenshots.

Another possibility could be using pre and post download scripts to fix the RAID volume on the Linux side. I found this information https://www.intel.com/content/dam/support/us/en/documents/memory-and-storage/linux-intel-vroc-userguide-333915.pdf but I didn’t dig into that too much.

I did a debug session and ran ntfsresize with -c to check and with --info. It shows partition 3 as resizable, but it’s not being resized after imaging.
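
If you want to compare those numbers without reading them off the screen, here is a minimal sketch that pulls the two interesting values out of saved `ntfsresize --info` output. The function names are made up, and the patterns are based only on the output format shown in my debug session, so other ntfsresize versions may word those lines differently.

```bash
# Extract the current NTFS volume size in bytes from saved --info output.
current_volume_bytes() {
    sed -n 's/^Current volume size: *\([0-9][0-9]*\) bytes.*/\1/p' "$1"
}

# Extract the smallest size ntfsresize says the volume could shrink to.
min_resize_bytes() {
    sed -n 's/^You might resize at \([0-9][0-9]*\) bytes.*/\1/p' "$1"
}
```

For example, save the output with `ntfsresize --info /dev/sda3 > /tmp/sda3.info` and then run `current_volume_bytes /tmp/sda3.info`. If that value still equals the partition size from the captured partition table, the filesystem was never grown on the target.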

I’m now running a deploy of the image to see what it says after imaging and whether there are any errors.

I fear this is going to be a 4kn drive alignment/resize issue.

@JJ-Fullmer I just took another look as I’ve been just maintaining a separate 4kn image and realized that the disk isn’t expanding after imaging.

I’ll do a debug session tomorrow and report back.