@sebastian-roth

I’ve only been able to test this in one environment and only with 2 PCs. It did work in that particular test. In a couple of weeks, we are going to have an opportunity to hit a good number of PCs in another environment where it previously failed, so we’ll have more data then. So far so good though! Thank you very much!

Posts

-

RE: Multicast image is pushed, but it does not "finish/finalize" posted in FOG Problems

-

RE: Multicast image is pushed, but it does not "finish/finalize" posted in FOG Problems

@sebastian-roth

We are using 1.5.9

The kernel we use does vary between stores. 5.10.50 is a popular one, but we’ve used older ones, and I’ve tried 5.10.71 (nothing newer than that).

That’s very strange too, especially considering that the issue seemingly appeared with no changes made to FOG and across multiple locations.

-

RE: Multicast image is pushed, but it does not "finish/finalize" posted in FOG Problems

@dmcadams FYI, yes this is a Windows 11 image, but I can assure you that this has nothing to do with Windows 11. We first noticed this at 2 other locations using Windows 10. We just happen to be testing with 11 right now.

-

Multicast image is pushed, but it does not "finish/finalize" posted in FOG Problems

Hello, we first experienced this issue back in December during one of our store openings. Since it was a new install, we just dealt with it and thought this was some strange glitch. Since then, we’ve noticed the same thing at 2 other locations, where we made no changes and hadn’t previously seen this issue. We really don’t multicast often, so that’s why it’s been several months.

Issue:

Multicast image is deployed and gets to 100% on all partitions. No matter how many systems we have going, only 1 PC will actually “finish” the image and reboot. The other PCs will all sit on the partclone screen, and we have to stop the FOG task, then manually reboot the PCs. These PCs that need to be manually rebooted do not get their PC name changed (it remains the same name as the source image PC). So whatever stage/phase changes the PC name is only running on 1 PC. All others will remain stuck until manual intervention.

As mentioned, we didn’t see this issue prior to our December opening. We opened a location in November and successfully multicast to 120+ PCs in a few chunks. That same location, with no changes made to FOG, now experiences this issue.

We have since tried a few different kernel versions with no luck.

Has anyone experienced this? Ultimately, we do have a workaround, so this isn’t a huge deal. It just gets frustrating as most of what we do is remote, so this issue does cause a delay in the process while we wait for onsite assistance.

-

RE: Multicast session is not starting posted in FOG Problems

@sebastian-roth

Thanks for the great information. We will give that a try. Also, the FOG_MULTICAST_RENDEZVOUS was actually one of our last attempts. I will revert that back to the default of blank first, and remove that portion from the commands that you sent.

These client machines also have NVMe drives, so on the receiver side, instead of “/dev/sda1” should we use “/dev/nvme0”?
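On the device-name question: Linux normally names NVMe storage as a controller (/dev/nvme0), a namespace on that controller (/dev/nvme0n1), and partitions with a "p" before the number (/dev/nvme0n1p1). So the counterpart of /dev/sda1 would usually be /dev/nvme0n1p1 rather than /dev/nvme0. The Python sketch below only illustrates that naming rule. The helper name `partition_path` is made up for this example, and the actual names on a given client should be confirmed with lsblk.

```python
def partition_path(disk: str, number: int) -> str:
    """Build the partition device path for a whole-disk device path.

    Linux puts a 'p' before the partition number when the disk name itself
    ends in a digit (nvme0n1, mmcblk0) and omits it otherwise (sda).
    Hypothetical helper, for illustration only.
    """
    separator = "p" if disk[-1].isdigit() else ""
    return f"{disk}{separator}{number}"


print(partition_path("/dev/sda", 1))      # SATA/SCSI style disk
print(partition_path("/dev/nvme0n1", 1))  # first namespace of NVMe controller 0
```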

-

RE: Multicast session is not starting posted in FOG Problems

@george1421

Yes, they are all on the 192.168.48.0/24 network.

-

RE: Multicast session is not starting posted in FOG Problems

@sebastian-roth

Yes, same hardware and same configuration, at least at 2 other sites.

A couple of other sites have some differences.

Is there a better log that I can look at to see why it’s just hanging there? I was hoping for one of those debug modes but for Multicast, or that the Multicast log would show something.

-

RE: Multicast session is not starting posted in FOG Problems

@george1421

At this site, we have not had Multicast working. We do have 6 other sites using the same type of setup and networking gear. At those sites Multicast is working, and we don’t recall needing to do anything special to get it working, other than tweaking some performance settings like IGMP.

Yes, they are on the same IP subnet as the FOG server.

Here is a snip of the Multicast.log from the attempt I just made:

[09-30-21 1:44:21 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is new
[09-30-21 1:44:21 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast image file found, file: /images/TournamentImage
[09-30-21 1:44:21 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast 2 clients found
[09-30-21 1:44:21 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast sending on base port 59270
[09-30-21 1:44:21 pm] | Command: /usr/local/sbin/udp-sender --interface ens160 --min-receivers 2 --max-wait 600 --mcast-rdv-address 192.168.48.4 --portbase 59270 --full-duplex --ttl 32 --nokbd --nopointopoint --file /images/TournamentImage/d1p1.img;/usr/local/sbin/udp-sender --interface ens160 --min-receivers 2 --max-wait 10 --mcast-rdv-address 192.168.48.4 --portbase 59270 --full-duplex --ttl 32 --nokbd --nopointopoint --file /images/TournamentImage/d1p2.img;/usr/local/sbin/udp-sender --interface ens160 --min-receivers 2 --max-wait 10 --mcast-rdv-address 192.168.48.4 --portbase 59270 --full-duplex --ttl 32 --nokbd --nopointopoint --file /images/TournamentImage/d1p3.img;/usr/local/sbin/udp-sender --interface ens160 --min-receivers 2 --max-wait 10 --mcast-rdv-address 192.168.48.4 --portbase 59270 --full-duplex --ttl 32 --nokbd --nopointopoint --file /images/TournamentImage/d1p4.img;
[09-30-21 1:44:21 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast has started
[09-30-21 1:44:31 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:44:41 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:44:51 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:45:01 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:45:11 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:45:21 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:45:31 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:45:41 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:45:51 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317
[09-30-21 1:46:01 pm] | Task ID: 5 Name: Multi-Cast Task - Multicast is already running with pid: 3317

-
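The Command entry in the 09-30-21 Multicast.log excerpt chains one udp-sender call per partition image, separated by semicolons. A rough Python sketch for pulling those calls apart and listing their options is below. The function name `parse_udp_sender` is hypothetical, the command text is copied from the log (first two calls only), and the parsing rule (an option takes a value when the next token does not start with `--`) is an assumption that happens to fit this command. The rendezvous-address check only reports whether the value is in the multicast range. Whether that matters for the hang is not something the log establishes.

```python
import ipaddress
import shlex


def parse_udp_sender(command_field: str) -> list[dict]:
    """Split a ';'-separated command string into one option dict per call."""
    calls = []
    for part in command_field.split(";"):
        tokens = shlex.split(part)
        if not tokens:
            continue  # trailing ';' leaves an empty piece
        options = {"binary": tokens[0]}
        i = 1
        while i < len(tokens):
            name = tokens[i]
            # Assumption: a value follows unless the next token is another --flag.
            if i + 1 < len(tokens) and not tokens[i + 1].startswith("--"):
                options[name] = tokens[i + 1]
                i += 2
            else:
                options[name] = True
                i += 1
        calls.append(options)
    return calls


# First two udp-sender calls from the log excerpt, copied as logged.
command = (
    "/usr/local/sbin/udp-sender --interface ens160 --min-receivers 2 "
    "--max-wait 600 --mcast-rdv-address 192.168.48.4 --portbase 59270 "
    "--full-duplex --ttl 32 --nokbd --nopointopoint "
    "--file /images/TournamentImage/d1p1.img;"
    "/usr/local/sbin/udp-sender --interface ens160 --min-receivers 2 "
    "--max-wait 10 --mcast-rdv-address 192.168.48.4 --portbase 59270 "
    "--full-duplex --ttl 32 --nokbd --nopointopoint "
    "--file /images/TournamentImage/d1p2.img;"
)

calls = parse_udp_sender(command)
for call in calls:
    rdv = ipaddress.ip_address(call["--mcast-rdv-address"])
    kind = "multicast range" if rdv.is_multicast else "not in multicast range"
    print(call["--file"], "max-wait:", call["--max-wait"], "rdv:", rdv, f"({kind})")
```

Laid out this way, it is easier to see that the sender waits for 2 receivers with a 600 second limit on the first partition and 10 seconds on the later ones, and that the rendezvous address in use is an ordinary address on the 192.168.48.0/24 subnet.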

Multicast session is not starting posted in FOG Problems

Hi,

We are experiencing an issue at a new location where the Multicast session is not starting. See the attached picture. It will go all the way to the partclone screen, and just hang there. We have verified that Unicast image works just fine, using the same image, on the same machines. We can even do multiple systems with that image via Unicast. We’ve been trying with 2 systems, and also tried with 2 different systems.

We set up FOG with default settings, using Ubuntu 20.04 LTS, and then we tried again with 18.04 LTS, changing only the IP and passwords really. Any help would be appreciated!

-

RE: Multiple I/O Errors during imaging posted in FOG Problems

Another part of the truth table though, is that all of these Crucial P2 drives were actually imaged with a ~1TB image many months ago when they were initially installed.

Maybe this way using an older kernel. You can try manually downloading older kernels from our website. Put into /var/www/html/fog/service/ipxe/ and set Host Kernel to the older kernel filename on a specific host (host’s settings in the FOG web UI) for testing.

@Sebastian-Roth So I’m trying the easy things first. I have a system that just would not image to the P2 drive at all. Always throwing errors within a short time. This system works great with a 2.5" SSD, and also a different model of M.2 NVMe drive (ADATA 1TB). I installed an M.2 heatsink on the P2 and got the same errors. So…

I went down the old kernel path like you suggested and found a kernel that not only worked on a drive that previously failed over and over, but also didn’t produce any errors and maintained great speeds (under 2 hours for an 800 GB image). I had to try a few kernels before finding one that would even boot without a kernel panic, but finally landed on 4.19.6 (64-bit). Is there anything that makes sense about why this kernel would work? Maybe it isn’t reading the temperatures correctly (or it is?), so it’s not throwing the I/O errors? What are your thoughts?

-
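For a sense of scale on the "under 2 hours for an 800 GB image" figure reported with the 4.19.6 kernel, the average rate can be worked out directly. This is a small Python sketch that assumes 800 GB is the amount of data actually transferred and uses decimal units. FOG images are usually compressed, so the on-wire figure may well be lower. The function name is made up for this example.

```python
def average_throughput(size_gb: float, hours: float) -> tuple[float, float]:
    """Return (MB/s, Gbit/s) for a transfer, with 1 GB = 10**9 bytes."""
    total_bytes = size_gb * 1e9
    seconds = hours * 3600
    mb_per_s = total_bytes / seconds / 1e6
    gbit_per_s = total_bytes * 8 / seconds / 1e9
    return mb_per_s, gbit_per_s


mb_s, gbit_s = average_throughput(800, 2)
print(f"{mb_s:.0f} MB/s, {gbit_s:.2f} Gbit/s")  # prints "111 MB/s, 0.89 Gbit/s"
```

Since the run finished in under 2 hours, this is a lower bound on the average rate under the stated assumption.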

RE: Multiple I/O Errors during imaging posted in FOG Problems

@george1421

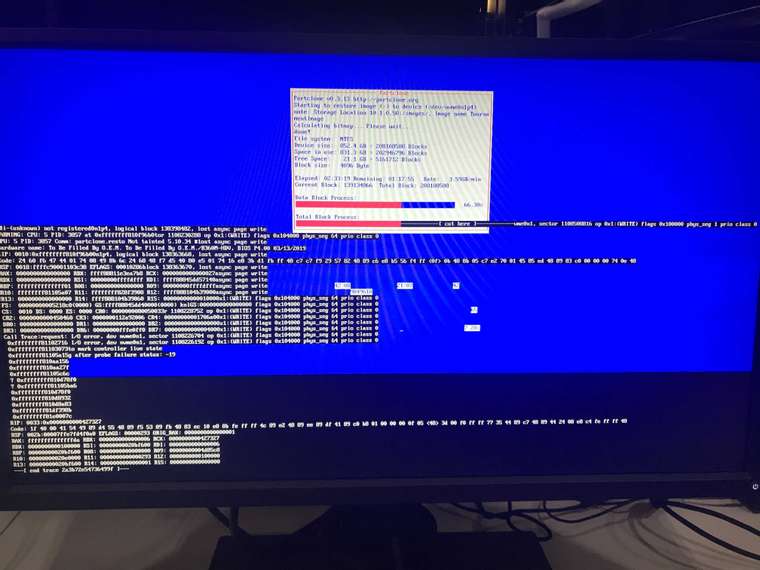

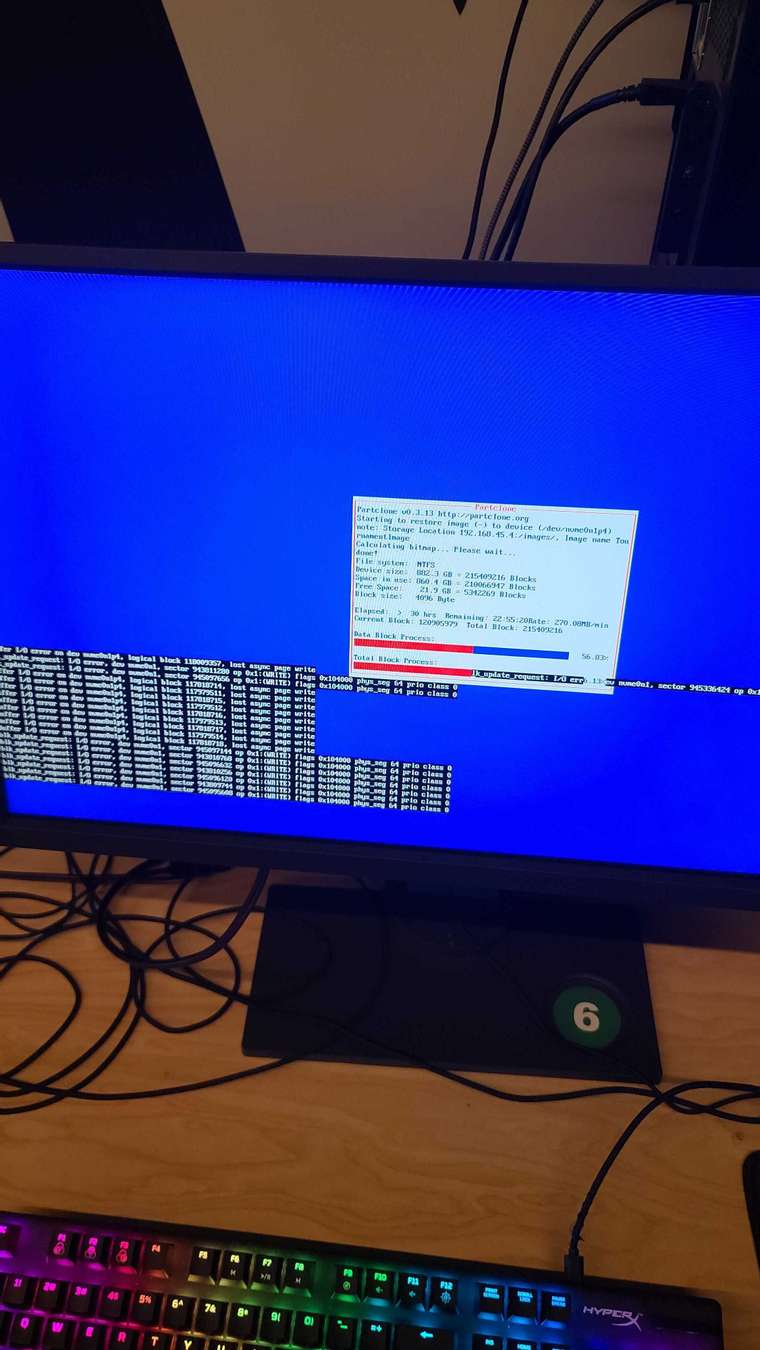

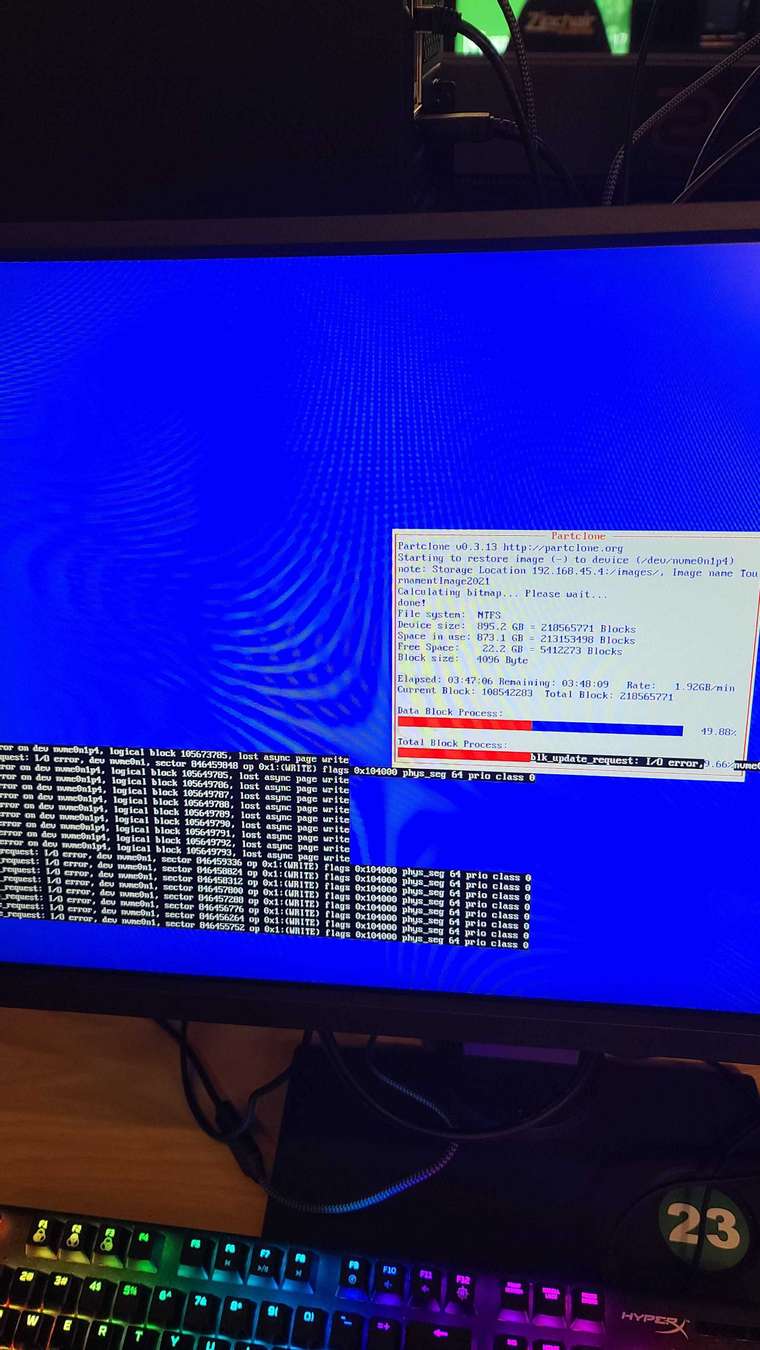

Sure enough there are massive errors. Sorry, I don’t know how to export that from my client and paste it here. I tried to capture it within pictures, and just grab the majority of different errors seen. Also note in the last one, I did the lsblk command with no results shown.

-

RE: Multiple I/O Errors during imaging posted in FOG Problems

@george1421 said in Multiple I/O Errors during imaging:

One other thing we can try is to run a deploy in debug mode (check the debug checkbox before scheduling the task). PXE boot the target computer. You will be dropped to the FOS Linux command prompt after a few pages of text. You can start imaging from the command prompt by keying in fog. Proceed with imaging step by step until you get the error. Press Ctrl-C to get back to the command prompt. From there look at the messages in /var/log (syslog or messages, I can’t remember ATM). See if there are any clues at the end of the log that might give us an idea. This command might give us a clue: grep nvme /var/log/syslog

Also after you get that error and get back to the FOS Linux command prompt key in lsblk to see if the drive really went away.

Could you help explain how to proceed with imaging step by step while in debug mode? I can get there, and keyed in fog to receive the variables, I’m just not sure what the syntax would be to begin a unicast image, or what else I’m missing here.
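The log check suggested in the quoted advice (grep nvme /var/log/syslog) can also be done on a log file copied off the client. The Python sketch below keeps lines that mention nvme or an I/O error, case-insensitively. The function names are hypothetical, the sample lines are made-up placeholders rather than real kernel output, and FOS itself may not ship Python, so this is meant for a workstation.

```python
import re

# Match the same keyword as the suggested grep, plus "I/O error".
PATTERN = re.compile(r"nvme|i/o error", re.IGNORECASE)


def storage_lines(lines):
    """Return the lines that mention nvme or an I/O error, newline stripped."""
    return [line.rstrip("\n") for line in lines if PATTERN.search(line)]


def scan_file(path):
    """Apply storage_lines to a log file, e.g. a copied /var/log/syslog."""
    with open(path, errors="replace") as handle:
        return storage_lines(handle)


# Made-up placeholder lines, not real kernel messages.
sample = [
    "placeholder line mentioning nvme0n1\n",
    "placeholder line about networking\n",
    "placeholder line with an I/O error\n",
]
for line in storage_lines(sample):
    print(line)
```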

-

RE: Multiple I/O Errors during imaging posted in FOG Problems

@george1421

Thank you for the feedback!

I’ve taken your advice and since tested with a MB BIOS flash and a firmware update on the P2 drives (there was one available), and also a few different FOG kernels just in case. Same results with all of that.

I currently have 2 systems that just won’t take this large image with the P2 drives, so I was able to use the same systems and do a multicast on 1TB SSDs instead. That passed with flying colors.

We don’t have any commercial build systems here, but we do have a different model of M.2 drive, so I’m currently testing with that.

I wasn’t able to get the debug mode to work just yet, but I’ll give that a try again after this different model of M.2 testing. I’ll provide an update to my testing.

Another part of the truth table though, is that all of these Crucial P2 drives were actually imaged with a ~1TB image many months ago when they were initially installed. Most locations were brand new systems with these drives, but we also have one location that has older model systems (B360M chipset) where we just upgraded the M.2 drive to these Crucials. To the best of my knowledge, the first round of imaging did not produce any of these issues, which is about 250+ systems. It wasn’t until the 2nd, and some 3rd round that these issues started to occur.

-

Multiple I/O Errors during imaging posted in FOG Problems

We have been experiencing a wealth of I/O errors and nvme errors during imaging over the past few weeks. I’ll include several pictures. I’m not sure where else to look in terms of logs, as I never see any of these within the logs themselves.

We have 7 locations, each with their own FOG server. These errors have been seen at all locations. There are currently 2 locations that I am really focusing on to try and figure this out right now. Multiple systems at this point, probably in the range of 15 or more.

Images that we are attempting:

- A small typical Windows image, about 35 GB in size. Not sysprepped.

- A very large image with all of our data on it, 700-800 GB in size.

The errors typically occur on only the larger image as it takes much longer to apply. The smaller image can be applied in a matter of minutes, so it is less likely to occur here, although, we have seen it multiple times.

Methods of deployment:

Both unicast and multicast. The error has even occurred with just 1 system at a time on both unicast and multicast.

FOG Version: 1.5.9

All of the FOG servers were upgraded last month. I believe they were all on 1.5.8 before. I was not a part of imaging systems on the old version, but I’ve been told that none of these errors occurred prior to the upgrade. Our issue may be resolved by installing the older version, but that is something that I’d like to ask for help with. I can’t seem to find how to do that.

Ubuntu OS tried and currently in use:

18.04 LTS desktop, 20.04 LTS desktop, and 20.04 LTS server

Known Kernels tried:

4.19.145

5.10.34

5.10.50

The systems are somewhat of a mix. Some have an MSI B460M-Pro motherboard, while others have an ASRock B360M-VDH.

All of them have the same model of drive to the best of my knowledge:

Crucial P2 2TB NVMe PCIe M.2 SSD (CT2000P2SSD8)

Sometimes if this error occurs, it will only “timeout” a handful of times, and the image will continue until the end.

Sometimes, it will bring the network speed to a crawl, and will be transferring the image somewhere in the Kb/s range. At that point, we are really forced to shut it down and try again.

Sometimes when we try again, it will succeed. Sometimes, it just won’t.

Currently, if I can image a single system with our larger image, it is a miracle. We have 250+ systems that we are to maintain, and we’d like to keep using FOG. Any help is appreciated!