@nadachowski said in FOG and different types of hardware:

FOG goes into a loop.

Please be as specific as possible. Can you provide screenshots or pictures?

@george1421 said in FOS fails to capture image from md RAID host:

Since you referenced my tutorial, I assumed you are using the Intel RAID.

I referenced your Intel RAID tutorial because I found another forum post on mdadm where you linked to it and stated that it was similar in principle. I am actually working on capturing an image from a Linux host with md RAID, and deploying the same image to other hosts with identical hardware.

By way of update, I removed the large sda device (a hardware RAID) and the USB stick, which left this disk layout (as seen from the running host OS):

    # lsblk
    NAME      MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
    sda         8:0    0 139.8G  0 disk
    ├─sda1      8:1    0   300M  0 part
    ├─sda2      8:2    0    30G  0 part
    │ └─md0     9:0    0    30G  0 raid1 /
    └─sda3      8:3    0     2G  0 part
      └─md127   9:127  0     2G  0 raid1
    sdb         8:16   0 139.8G  0 disk
    ├─sdb1      8:17   0   300M  0 part  /boot/efi
    ├─sdb2      8:18   0    30G  0 part
    │ └─md0     9:0    0    30G  0 raid1 /
    └─sdb3      8:19   0     2G  0 part
      └─md127   9:127  0     2G  0 raid1

I then tried the following FOG settings, with the outcomes noted:

Host Primary Disk: /dev/md126 (equivalent to md0 on the live system above); Image Type: Multiple Partition Image - All Disks (3); Host Kernel Arguments: mdraid=true. Outcome: fails to find disks.

Host Primary Disk: /dev/md126 (equivalent to md0 on the live system above); Image Type: Multiple Partition Image - Single Disk (2); Host Kernel Arguments: mdraid=true. Outcome: fails to read partition table.

Host Primary Disk: /dev/sdb; Image Type: Multiple Partition Image - Single Disk (2); Host Kernel Arguments: not recorded (I forget). Outcome: fails to read partition table.

Host Primary Disk: /dev/sda; Image Type: Multiple Partition Image - All Disks (3); Host Kernel Arguments: none. Outcome: capture succeeds, and deployment to a new system succeeds. The system boots and the partition table on sda looks correct, but md127 is lost and the partition table on sdb is not correct:

    # lsblk
    NAME      MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
    sda         8:0    0 139.8G  0 disk
    ├─sda1      8:1    0   300M  0 part
    ├─sda2      8:2    0    30G  0 part
    │ └─md0     9:0    0    30G  0 raid1 /
    └─sda3      8:3    0     2G  0 part
    sdb         8:16   0 139.8G  0 disk
    ├─sdb1      8:17   0     1G  0 part
    └─sdb2      8:18   0 138.8G  0 part

I’m not sure whether the deployment touched sdb at all; I suspect not. I will try again and watch more closely, or record it. I suppose I can rebuild my RAIDs from here, but I would of course prefer to have FOG automate that process as far as possible.
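If it does come to rebuilding by hand, the helper below is a rough sketch of the steps for the post-deploy layout above. It only prints the commands, so they can be reviewed before anything is run. Assumptions: GPT disks, /dev/sda is the deployed disk, /dev/sdb is the second disk, partition 2 feeds the degraded root mirror md0, and partition 3 should become md127 again. `print_rebuild_plan` is a hypothetical name and is not part of FOG.

```shell
# Hypothetical helper: PRINT (never run) commands that would re-mirror a
# deployed two-disk md layout. Arguments (all assumptions about the layout):
#   $1 deployed disk (e.g. /dev/sda)   $2 second disk (e.g. /dev/sdb)
#   $3 degraded root array (/dev/md0)  $4 array to recreate (/dev/md127)
# Partition names are built as <disk><n>, which suits sdX disks only;
# NVMe disks would need a "p" before the partition number.
print_rebuild_plan() {
  local src="$1" dst="$2" root_md="$3" second_md="$4"
  # Copy the deployed disk's GPT onto the second disk...
  echo "sgdisk --replicate=${dst} ${src}"
  # ...then give the copy its own disk and partition GUIDs.
  echo "sgdisk --randomize-guids ${dst}"
  # Add the second disk's partition 2 back into the root mirror (mdadm resyncs it).
  echo "mdadm --manage ${root_md} --add ${dst}2"
  # Recreate the second mirror from partition 3 on both disks (destroys its contents).
  echo "mdadm --create ${second_md} --level=1 --raid-devices=2 ${src}3 ${dst}3"
}
```

For example, `print_rebuild_plan /dev/sda /dev/sdb /dev/md0 /dev/md127`. Replicating the table would overwrite what is on sdb now (the 1G/138.8G partitions shown above). The sketch leaves out syncing the EFI partition and recreating whatever filesystem or swap md127 held, along with any mdadm.conf/initramfs update the OS may need.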

Edit: added FOG settings detail.

If I use whole devices for md-RAID and then partition the md device, the OS can still see the underlying physical devices: it will see sdb, sdc and md126. I’m not familiar with Intel RAID on Linux, but from your description the result sounds similar to what I’m seeing. In any case, I don’t know of any way to present the md-RAID device to Linux while hiding its member physical devices.

At this point I have removed sda and disabled USB until I can get somebody to physically remove the USB drive from the system. The unfortunate side effect is that with USB disabled I have no keyboard input in FOS (over IPMI, and presumably on the physical console as well), so I will retry the capture job in non-debug mode and see what happens.

db

I can see how mirroring two disks and then partitioning the RAID device would be simpler, but we have ruled it out as an option because we want to overprovision the SSDs.

We can remove the USB drive and probably the large unpartitioned drive (sda) as well, leaving only sdb, sdc, md126 and md127, but it sounds like that may still not work. I will try it anyway, just to be thorough.


Ubuntu 18.04.6 LTS

Web Server 10.13.6.7

I’m trying to capture an image from a host that boots UEFI from two hard disks in RAID 1. The way this works is that both disks have an EFI partition that is kept in sync by the OS. The computer boots from either of these disks and then mdadm assembles the root partition from the second partition on these two disks.

I followed the handy guide here and determined that FOS sees my root partition as /dev/md126. On FOG’s host management screen I set the Host Kernel Arguments to mdraid=true and Host Primary Disk to /dev/md126. I have tried setting the image type to each of the first three values.
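To double-check from a FOS debug shell which partitions back md126 (and md127), you can read the kernel's sysfs tree directly. Each md array lists its members under /sys/block/mdN/slaves. This is a minimal sketch that assumes FOS exposes a standard sysfs. `md_members` is a made-up helper name, and its optional argument exists only so it can be pointed at a fake tree for testing.

```shell
# List each md array and the block devices backing it, read from sysfs.
# Optional $1 overrides the sysfs block directory (default /sys/block).
md_members() {
  local root="${1:-/sys/block}" md member line
  for md in "$root"/md*; do
    # Skip anything that is not an md array with a slaves directory
    # (this also covers the unexpanded glob when no md device exists).
    [ -d "$md/slaves" ] || continue
    line="$(basename "$md"):"
    for member in "$md"/slaves/*; do
      [ -e "$member" ] || continue
      line="$line $(basename "$member")"
    done
    echo "$line"
  done
}
```

On the host shown below, this should list sdb2 and sdc2 for md126, matching the `mdadm -D` output. It says nothing about why FOS cannot read the partition table. It only confirms which physical partitions the array sits on.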

In every case, the capture attempt failed with an error reading the disk or the partition table. I hope the output from one such attempt helps identify the source of my trouble and a possible solution:

    [Tue Jun 14 root@fogclient ~]# lsblk
    NAME        MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
    sda           8:0    0 116.4T  0 disk
    sdb           8:16   0 139.8G  0 disk
    |-sdb1        8:17   0   300M  0 part
    |-sdb2        8:18   0    30G  0 part
    | `-md126     9:126  0    30G  0 raid1
    `-sdb3        8:19   0     2G  0 part
      `-md127     9:127  0     2G  0 raid1
    sdc           8:32   0 139.8G  0 disk
    |-sdc1        8:33   0   300M  0 part
    |-sdc2        8:34   0    30G  0 part
    | `-md126     9:126  0    30G  0 raid1
    `-sdc3        8:35   0     2G  0 part
      `-md127     9:127  0     2G  0 raid1
    sdd           8:48   1  14.3G  0 disk
    `-sdd1        8:49   1  14.3G  0 part

    [Tue Jun 14 root@fogclient ~]# mdadm -D /dev/md126
    /dev/md126:
               Version : 1.2
         Creation Time : Wed May 25 20:32:17 2022
            Raid Level : raid1
            Array Size : 31439872 (29.98 GiB 32.19 GB)
         Used Dev Size : 31439872 (29.98 GiB 32.19 GB)
          Raid Devices : 2
         Total Devices : 2
           Persistence : Superblock is persistent
           Update Time : Tue Jun 14 15:26:24 2022
                 State : clean
        Active Devices : 2
       Working Devices : 2
        Failed Devices : 0
         Spare Devices : 0
    Consistency Policy : resync
                  Name : ubuntu-server:0
                  UUID : 7f76bb36:09c97d07:2528dfc0:ade215db
                Events : 222
        Number   Major   Minor   RaidDevice State
           0       8       34        0      active sync   /dev/sdc2
           1       8       18        1      active sync   /dev/sdb2


    Version: 1.5.9
    Init Version: 20200906
    * Press [Enter] key to continue
    * Verifying network interface configuration.........Done
    * Press [Enter] key to continue
    * Checking Operating System.........................Linux
    * Checking CPU Cores................................40
    * Send method.......................................NFS
    * Attempting to check in............................Done
    * Press [Enter] key to continue
    * Mounting File System..............................Done
    * Press [Enter] key to continue
    * Checking Mounted File System......................Done
    * Press [Enter] key to continue
    * Checking img variable is set......................Done
    * Press [Enter] key to continue
    * Preparing to send image file to server
    * Preparing backup location.........................Done
    * Press [Enter] key to continue
    * Setting permission on /images/0cc47abc024c........Done
    * Press [Enter] key to continue
    * Removing any pre-existing files...................Done
    * Press [Enter] key to continue
    * Using Image: veeam_barracuda_2022-06-14
    * Looking for Hard Disks............................Failed
    * Press [Enter] key to continue
    ##############################################################################
    #                                                                            #
    #                         An error has been detected!                        #
    #                                                                            #
    ##############################################################################
    Init Version: 20200906
    Could not find any disks (/bin/fog.upload)
    Args Passed:
    Kernel variables and settings:
    bzImage loglevel=4 initrd=init.xz root=/dev/ram0 rw ramdisk_size=275000 web=http://10.13.6.7/fog/ consoleblank=0 rootfstype=ext4 mdraid=true nvme_core.default_ps_max_latency_us=0 mac=0c:c4:7a:bc:02:4c ftp=10.13.6.7 storage=10.13.6.7:/images/dev/ storageip=10.13.6.7 osid=50 irqpoll hostname=vcc-ldc-vs-11 chkdsk=0 img=veeam_barracuda_2022-06-14 imgType=mpa imgPartitionType=all imgid=2 imgFormat=5 PIGZ_COMP=-6 fdrive=/dev/md126 hostearly=1 pct=5 ignorepg=1 isdebug=yes type=up mdraid=true
    * Press [Enter] key to continue

Note also that /dev/sda holds an empty filesystem and doesn’t need to be imaged, and /dev/sdd is a USB stick that is also unnecessary for the image. I don’t know whether there’s a way to tell FOG to skip these devices.

I didn’t find a solution, but I settled on a workaround: designate a host primary disk, then create a debug deploy task. Once the host had booted into FOS, I checked the NVMe enumeration order. If it was correct, I ran ‘fog’ to deploy; if not, I rebooted the host and tried again. It was time-consuming, but the outcome was correct.

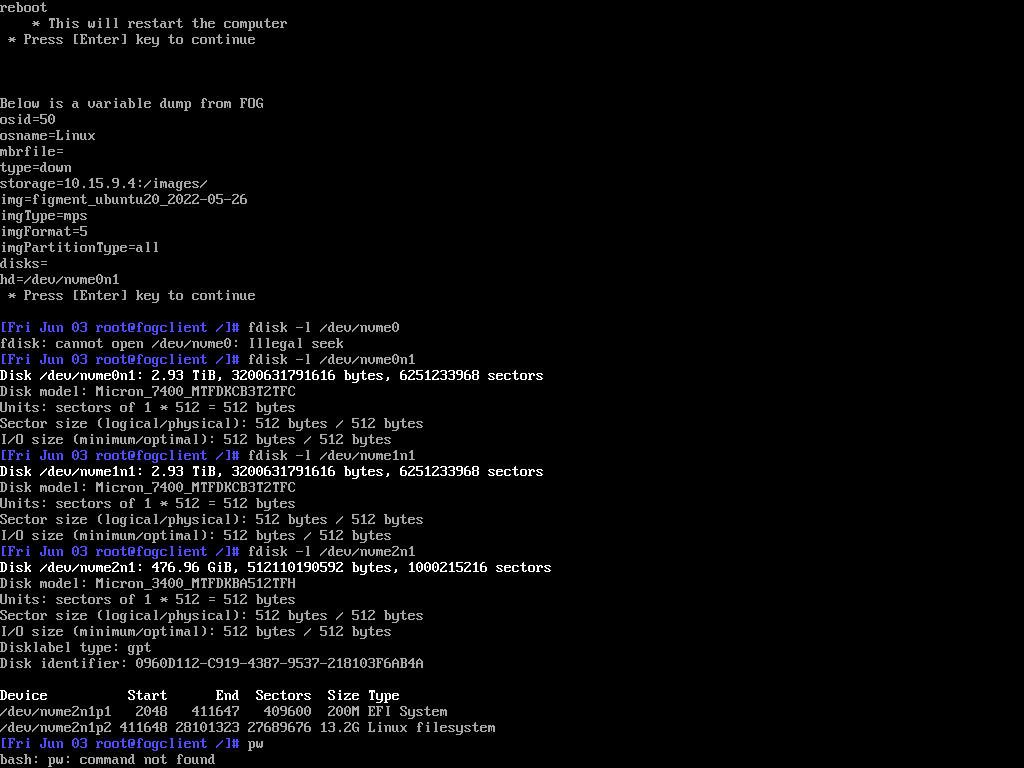

FOG Web Server 10.15.9.4

Ubuntu 18.04.6 LTS

I’m deploying to a small number of hosts with identical hardware. These hosts have three NVMe drives, detected as nvme0n1, nvme1n1 and nvme2n1. One is ~500 GB and the other two are ~3 TB:

    Disk /dev/nvme0n1: 2.93 TiB, 3200631791616 bytes, 6251233968 sectors
    Disk /dev/nvme1n1: 2.93 TiB, 3200631791616 bytes, 6251233968 sectors
    Disk /dev/nvme2n1: 476.96 GiB, 512110190592 bytes, 1000215216 sectors

My objective is to deploy my image to the small nvme drive. The challenge is that each time I reboot, these drives are detected in random order, so designating the group or host primary disk does not deploy the image to the correct drive in all cases.

Is it possible to change the ‘hd’ variable on the fly, such as during a debug deploy task? Or is there a better approach? I could ask remote hands to disconnect the larger drives during deploy, but a software-based intervention is preferred.
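One software-only idea is to identify the small drive by size rather than by name, because the size does not change between boots even when the enumeration order does. The sketch below reads each NVMe namespace's size from sysfs and prints the smallest one. The size is reported in 512-byte sectors, so it is 1000215216 for the ~500 GB drive above. `smallest_nvme` is a hypothetical helper. It could be run in a debug deploy task before `fog` to see which node the small drive received on this boot, but how to feed the result into FOG's own disk selection is not covered here.

```shell
# Print /dev/<name> of the smallest NVMe namespace, judged by the sysfs
# "size" file (always in 512-byte sectors). Optional $1 overrides /sys/block.
# Returns non-zero and prints nothing if no NVMe namespace is found.
smallest_nvme() {
  local root="${1:-/sys/block}" dev size best="" best_size=""
  for dev in "$root"/nvme*n*; do
    [ -r "$dev/size" ] || continue
    size=$(cat "$dev/size")
    # Keep the first device seen, then any strictly smaller one.
    if [ -z "$best_size" ] || [ "$size" -lt "$best_size" ]; then
      best=$(basename "$dev")
      best_size=$size
    fi
  done
  [ -n "$best" ] && echo "/dev/$best"
}
```

Matching on size only works here because exactly one drive is ~500 GB. If the hardware ever had two equally small drives, this would simply pick the first one the glob returns.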

@sebastian-roth said in Image capture: reading partition tables failed:

@david-burgess You might want to point to a specific Primary Disk within the host settings, e.g. /dev/sda

Thank you. This was the fix.

I have read a few tutorials about the post-download script, and maybe I’m just too tired to get it, but I am unclear on which paths and binaries are available to the script.

To put my problem in context, I need to wipe the nvme drive in the client after the image is deployed. The OS (Ubuntu) will be installed on a SATA drive, so we’re just wiping the secondary drive and the only nvme device in the host.

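So I’ve added these lines to fog.postdownload:

    # Full path of the first NVMe namespace reported by nvme-cli, e.g. /dev/nvme0n1
    drive=$(nvme list | awk '/^\/dev\/nvme/ {print $1; exit}')
    # Formatting erases the namespace, so only run it if a device was found
    if [ -n "$drive" ]; then nvme format "$drive"; fi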

What’s not clear to me is the environment this will run in, and thus where nvme-cli needs to be installed. The host’s OS? FOG?
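Because it isn't settled here which environment runs the script, a defensive variant can check for nvme-cli itself and report clearly instead of failing silently. This sketch finds NVMe namespaces through sysfs, so discovery does not depend on nvme-cli. It calls `nvme format` only when exactly one namespace exists and the `nvme` binary is on PATH. `list_nvme_namespaces` and `wipe_single_nvme` are hypothetical names, and the optional argument exists only for testing against a fake sysfs tree.

```shell
# Print /dev/<name> for every NVMe namespace under the sysfs block dir.
# Optional $1 overrides /sys/block.
list_nvme_namespaces() {
  local root="${1:-/sys/block}" dev
  for dev in "$root"/nvme*n*; do
    if [ -e "$dev" ]; then echo "/dev/${dev##*/}"; fi
  done
}

# Format the single NVMe namespace, if there is exactly one and nvme-cli
# is installed in whatever environment is running this script.
wipe_single_nvme() {
  local devs
  devs=$(list_nvme_namespaces "$1")
  if [ -z "$devs" ]; then
    echo "no NVMe namespace found; skipping wipe" >&2
    return 0
  fi
  if [ "$(printf '%s\n' "$devs" | wc -l)" -ne 1 ]; then
    echo "more than one NVMe namespace found; refusing to guess" >&2
    return 1
  fi
  if ! command -v nvme >/dev/null 2>&1; then
    echo "nvme-cli not found in this environment; cannot format $devs" >&2
    return 1
  fi
  # Destructive: erases all data on the namespace.
  nvme format "$devs"
}
```

In the hook itself this would be a bare `wipe_single_nvme` call. If that call reports that nvme-cli is missing, the message itself answers where the tool needs to be installed.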