Side note: it appears that the Kea DHCP server in pfSense 2.7.2 does not properly support serving the correct boot file to BIOS vs. EFI systems. Everything works fine with Windows DHCP. This had nothing to do with my original issue.

Posts

-

RE: Error at beginning of capture--Could not fix partition layout (runFixparts) posted in FOG Problems

@Tom-Elliott Sure, which versions do you need? I tried to find which build of Fog 1.5.9 I’m using in my working installation, but I’m not sure how. It wasn’t a part of the tarball filename.
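If it helps with the build question, here is a sketch that reads the version string straight from the installed web files. It assumes the web root is /var/www/html/fog (the path used by the wget command further down this page) and that the version is stored as a `FOG_VERSION` define in `lib/fog/system.class.php`. Both the file name and the constant are assumptions to verify on your own server, and `fog_version` is a hypothetical helper.

```bash
# fog_version [FILE]
# Print the FOG version string defined in the web UI's system class file.
# ASSUMPTION: the default path and the FOG_VERSION define are not confirmed
# by the posts here; adjust the path if your web root differs.
fog_version() {
  local file="${1:-/var/www/html/fog/lib/fog/system.class.php}"
  # Matches a line such as: define('FOG_VERSION', '1.5.9');
  sed -nE "s/.*FOG_VERSION['\"] *, *['\"]([^'\"]+)['\"].*/\1/p" "$file" | head -n 1
}

# Usage: fog_version
```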

I’ve got good internet now, and I’d be happy to spin up another VM to retrace my steps from earlier that resulted in a non-working install, if that would be helpful. I feel like either something is broken in the code, or I’m missing something obvious, because I’ve set up many Fog servers in the past, and I’ve never run into this issue.

-

RE: Error at beginning of capture--Could not fix partition layout (runFixparts) posted in FOG Problems

I found a combination that worked. I installed Ubuntu Server 20.04.6 LTS, did apt updates, and installed Fog 1.5.9. Now my clients go all the way through the capture process, and everything is working as I remember.

The only thing that seems to have made a difference is using an older version of Fog, in my case 1.5.9. Hopefully this gets fixed in an upcoming release, or maybe the init and bzImage files can be fixed if that’s the problem.

-

RE: Error at beginning of capture--Could not fix partition layout (runFixparts) posted in FOG Problems

After several more dropped connections, I finally got all the files updated. I tried capturing with a Win7 client and a Win10 client, and both still give the same error. I suspect that if I set the image to RAW it might work, but I don’t have the storage space in my test environment to test it properly, and a RAW image isn’t what I want anyway.

-

RE: Error at beginning of capture--Could not fix partition layout (runFixparts) posted in FOG Problems

I just got this error while updating init_32.xz. Now I’m wondering if my crappy internet is causing the download to time out.

root@ubuntu:/home/ben# wget https://fogproject.org/inits/init_32.xz -O /var/www/html/fog/service/ipxe/init_32.xz
--2024-09-14 21:45:37-- https://fogproject.org/inits/init_32.xz
Resolving fogproject.org (fogproject.org)... 162.213.199.177
Connecting to fogproject.org (fogproject.org)|162.213.199.177|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://github.com/FOGProject/fos/releases/latest/download/init_32.xz [following]
--2024-09-14 21:45:38-- https://github.com/FOGProject/fos/releases/latest/download/init_32.xz
Resolving github.com (github.com)... 140.82.114.4
Connecting to github.com (github.com)|140.82.114.4|:443... connected.
HTTP request sent, awaiting response... 302 Found
Location: https://github.com/FOGProject/fos/releases/download/20240807/init_32.xz [following]
--2024-09-14 21:45:39-- https://github.com/FOGProject/fos/releases/download/20240807/init_32.xz
Reusing existing connection to github.com:443.
HTTP request sent, awaiting response... 302 Found
Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/59623077/b104c0f7-dce0-426a-badd-6695188b4366?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20240915%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20240915T024540Z&X-Amz-Expires=300&X-Amz-Signature=4c6919c4de514d2eb5e9c93475af52989c33fc489abc385aef7acd0acba63aba&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=59623077&response-content-disposition=attachment%3B%20filename%3Dinit_32.xz&response-content-type=application%2Foctet-stream [following]
--2024-09-14 21:45:40-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/59623077/b104c0f7-dce0-426a-badd-6695188b4366?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20240915%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20240915T024540Z&X-Amz-Expires=300&X-Amz-Signature=4c6919c4de514d2eb5e9c93475af52989c33fc489abc385aef7acd0acba63aba&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=59623077&response-content-disposition=attachment%3B%20filename%3Dinit_32.xz&response-content-type=application%2Foctet-stream
Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.108.133, 185.199.110.133, 185.199.111.133, ...
Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.108.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 25500004 (24M) [application/octet-stream]
Saving to: ‘/var/www/html/fog/service/ipxe/init_32.xz’

/var/www/html/fog/service/ipxe/init_32. 65%[=================================================> ] 16.05M 8.62KB/s in 29m 57s

2024-09-14 22:30:39 (9.15 KB/s) - Read error at byte 16826286/25500004 (Success). Retrying.

--2024-09-14 22:30:40-- (try: 2) https://objects.githubusercontent.com/github-production-release-asset-2e65be/59623077/b104c0f7-dce0-426a-badd-6695188b4366?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20240915%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20240915T024540Z&X-Amz-Expires=300&X-Amz-Signature=4c6919c4de514d2eb5e9c93475af52989c33fc489abc385aef7acd0acba63aba&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=59623077&response-content-disposition=attachment%3B%20filename%3Dinit_32.xz&response-content-type=application%2Foctet-stream
Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.108.133|:443... connected.
HTTP request sent, awaiting response... 401 Unauthorized
Username/Password Authentication Failed.

How would this be handled when the Fog installer is updating the kernel? Is there a default local set of files already in place that would be used as a fallback? If the download failed, would network booting work at all? I’m going to retry the download and see if it goes through.
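Reading the log, the final redirect is a pre-signed download link carrying X-Amz-Date=20240915T024540Z and X-Amz-Expires=300, which suggests it was only valid for five minutes. wget's automatic retry reused that same link, so the 401 Unauthorized is plausibly an expired signature rather than a real authentication problem. That is an interpretation of the log, not something confirmed here. A sketch that computes the expiry time from such a URL (`signed_url_expiry` is a hypothetical helper; it needs GNU date):

```bash
# signed_url_expiry URL
# Print when a pre-signed S3-style URL stops being valid, in UTC.
# Reads X-Amz-Date (YYYYMMDDTHHMMSSZ) and X-Amz-Expires (seconds) from the
# query string. Requires GNU date for the -d option.
signed_url_expiry() {
  local url="$1" stamp expires start
  stamp=$(printf '%s\n' "$url" | sed -n 's/.*[?&]X-Amz-Date=\([0-9]\{8\}T[0-9]\{6\}Z\).*/\1/p')
  expires=$(printf '%s\n' "$url" | sed -n 's/.*[?&]X-Amz-Expires=\([0-9]*\).*/\1/p')
  [ -n "$stamp" ] && [ -n "$expires" ] || return 1
  # 20240915T024540Z -> "2024-09-15 02:45:40", parsed as UTC because of -u
  start=$(date -u -d "${stamp:0:4}-${stamp:4:2}-${stamp:6:2} ${stamp:9:2}:${stamp:11:2}:${stamp:13:2}" +%s) || return 1
  date -u -d "@$((start + expires))" '+%Y-%m-%d %H:%M:%S UTC'
}
```

Given the objects.githubusercontent.com URL from the log, it prints 2024-09-15 02:50:40 UTC. The log's local timestamps appear to run five hours behind UTC (21:45:40 local vs. 02:45:40Z), so the 22:30:40 retry came about 40 minutes after the link expired. Starting a new wget run from the original fogproject.org URL, with -c (--continue) to resume the partial file, should pick up a freshly signed link.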

-

Error at beginning of capture--Could not fix partition layout (runFixparts) posted in FOG Problems

I’ve got a small form factor Dell PC with Windows 10 acting as the hypervisor:

- Intel Core i7-4790 @ 3.6 GHz

- 16 GB DDR3

- 256 GB Kingston SATA SSD

I’ve got a VM with Ubuntu server 24.04.1 LTS, fresh install, with all apt updates, and a fresh Fog 1.5.10.1593 install on top of that with minimal customization and configuration.

I’ve set up many Fog servers in the past, and I know that this should have been sufficient to make a working Fog system.

During my troubleshooting, I’ve set up VMs using Ubuntu 16, 18, 20, 22, and 24 to rule out any funny package version stuff. I’m seeing the exact same error/issue on all versions of Ubuntu.

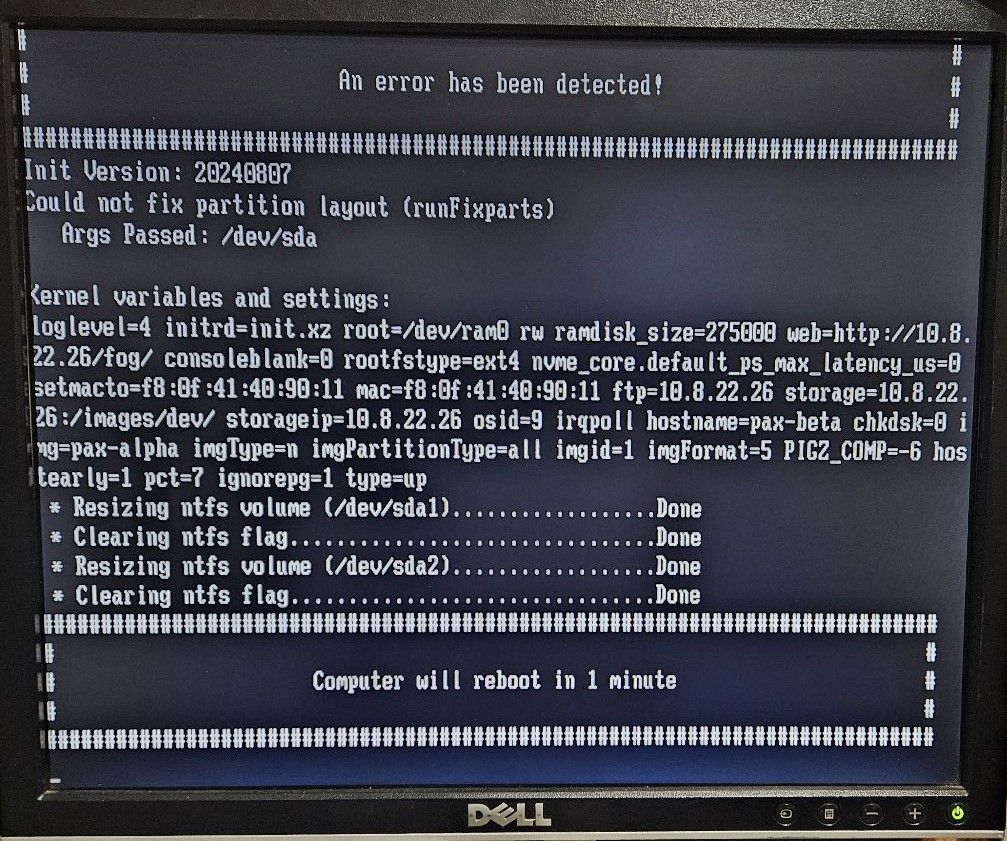

After the client boots from the network and starts the script with all the partition management and prep stuff, it throws the error from the title: Could not fix partition layout (runFixparts).

I’ve tried running a debug capture, and when I type fixparts at the prompt (after hitting enter twice), it says command not found.

I’ve tried updating the kernel using the web GUI (http://myfogserver/fog/management/index.php?node=about&sub=kernelUpdate), but there was no change.
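In the debug shell, one way to confirm which partitioning tools the boot image actually contains is to test each name with `command -v`. This is a minimal sketch; `check_tools` is a hypothetical name and the tool list in the example is only illustrative. For reference, fixparts is part of GPT fdisk, the same package that provides gdisk and sgdisk.

```bash
# check_tools NAME...
# Report which of the named commands exist on the PATH.
# Returns non-zero if any of them is missing.
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      printf 'found:   %s (%s)\n' "$tool" "$(command -v "$tool")"
    else
      printf 'MISSING: %s\n' "$tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_tools fixparts sgdisk gdisk partclone.ntfs
```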

I’m currently trying the manual kernel update using the script at https://wiki.fogproject.org/wiki/index.php?title=Kernel_Update, but it’s going to take a few hours because my cellular internet connection sucks.
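On a slow link it may be safer not to write straight over the working files: wget -O writes into the live path as it downloads, so an interrupted transfer can leave a partial init or kernel there. The sketch below downloads into a .part file, resumes with wget -c, starts every attempt from the original URL so the redirects are followed again, and only moves the file into place after wget reports success. The helper names `fetch` and `safe_fetch` and the RETRY_DELAY variable are hypothetical.

```bash
# fetch OUTFILE URL
# The only network call, isolated so it can be swapped out.
# -c resumes from whatever is already in OUTFILE.
fetch() {
  wget -c -O "$1" "$2"
}

# safe_fetch URL DEST [ATTEMPTS]
# Download URL into DEST.part and rename it to DEST only after a successful
# run, so a failed download never replaces the existing DEST.
safe_fetch() {
  local url="$1" dest="$2" attempts="${3:-5}" tmp="$2.part" i
  for ((i = 1; i <= attempts; i++)); do
    # Each attempt starts again from the original URL, so redirects are
    # re-resolved (presumably yielding a fresh link if the old one expired).
    if fetch "$tmp" "$url"; then
      mv -f "$tmp" "$dest"
      return 0
    fi
    echo "attempt $i/$attempts failed: $url" >&2
    sleep "${RETRY_DELAY:-10}"
  done
  # DEST is untouched; DEST.part keeps the partial data for the next run.
  return 1
}
```

For example, with the URL and path used in this thread: safe_fetch https://fogproject.org/inits/init_32.xz /var/www/html/fog/service/ipxe/init_32.xz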

Maybe this is a bug where a package didn’t get included somewhere? I’ve read a little bit about fixparts not being included in some releases, but I think I also read that this was fixed. It’s not clear to me. I’m not sure how to manually slipstream a package into init.xz, and I don’t think that’s what the user is expected to do here anyway.

I think it’s possible that updating the kernel and inits manually at the command line might fix the problem, and I’ll report back here with my results.

-

Details of image_export.csv posted in FOG Problems

- Dell PowerEdge R730

- Dual Xeon CPUs

- 32 GB RAM

- 8 x 2TB SAS drives in RAID5

- Fog 1.5.9 is running fine under Windows Server 2019 Hypervisor

Hello all, I’m trying to copy a Fog image from Fog server A to Fog server B. I’ve done this before by copying the image files from A to B using FTP and using the import/export function in the Fog web GUI. I have the image files from server A via FTP, but the image isn’t listed in image_export.csv from server A because at some point it was deleted from the GUI on server A.

I need to get this image somehow imported to the new Fog server. I’m also curious about what all the different columns mean in image_export.csv. I can clearly see things like image name, description, date, username, etc., but there are several more fields with either single digit values or several strings separated by colons. I was thinking about taking some time to reverse engineer it, but I was hoping someone out there would already know what all those columns mean.
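As a starting point for reverse engineering, a small helper can number the fields of one row so they can be compared against what the web GUI shows for the same image. This sketch assumes plain comma separation with no quoted commas inside fields; `csv_columns` is a hypothetical name.

```bash
# csv_columns FILE [ROW]
# Print each comma-separated field of ROW (default 1) on its own line,
# prefixed with its 1-based column number. Windows line endings are stripped.
# ASSUMPTION: fields contain no embedded or quoted commas.
csv_columns() {
  local file="$1" row="${2:-1}"
  sed -n "${row}p" "$file" | tr -d '\r' | tr ',' '\n' | awk '{ printf "%d\t%s\n", NR, $0 }'
}

# Example: csv_columns image_export.csv 2
```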

Thanks,

Ben

-

RE: Problem capturing image - "no space left on device"? posted in FOG Problems

I’d never run into this specific problem until today. Generally, issues with resizing can be fixed by increasing CAPTURERESIZEPCT in Fog Settings > General Settings. I agree with what @Quazz said about certain kinds of fragmentation causing issues with the theoretical minimum partition size. I’ve found that the more used (fragmented?) a drive is, the more likely it is to fail during resize. I was able to get past the "no space left on device" and "numerical result out of range" errors by increasing CAPTURERESIZEPCT to 15%.

-

RE: capture a image over the fog boot menu posted in General

This would be a great feature to have, even if it were limited to only capturing to the image already assigned to the already registered host. It would be a good idea to have an extra confirmation message and invert the color scheme to make sure the user realizes what they’re about to do. However, deploying incorrectly can cause just as much damage as capturing incorrectly. FOG is a very sharp, double-edged tool.

Sometimes it’s a chore to go find another PC with network access so I can log in and set up the capture task; otherwise, I have to set up the capture task from the same PC before rebooting it. I can’t always carry my laptop with me, and I don’t enjoy navigating web interfaces on my phone.

-

RE: Add setting for amount of free space on resizable images posted in FOG Problems

Cool! I didn’t know that option was there. Thanks.

-

Add setting for amount of free space on resizable images posted in FOG Problems

Would it be possible to implement a setting in the Fog web interface to control how much the partitions are shrunk when capturing an image? I’m running into this problem more often: my resizable images don’t resize successfully before capture. I’m guessing that’s because the script running parted is being a little too aggressive and not leaving enough free space. Parted usually throws an error like

No free mft record for $MFT: No space left on device
Could not allocate new MFT record: No space left on device

I know from experience that if I could set it to leave just a percent or two more free space, it would probably resize successfully. It would be really nice to be able to set a target percent of free space on resizable images.
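For a sense of scale, here is the arithmetic for padding a partition's minimum size by a percentage, which is what the CAPTURERESIZEPCT setting (Fog Settings > General Settings) appears to control. That reading of the setting is an assumption based on its name, not something confirmed in these posts, and `resize_target` is a hypothetical helper rather than part of FOG.

```bash
# resize_target MIN_BYTES PCT
# Size after adding PCT percent of headroom to MIN_BYTES
# (integer arithmetic, rounded down).
# ASSUMPTION: this mirrors how a percentage-based resize padding works.
resize_target() {
  local min="$1" pct="$2"
  echo $(( min + min * pct / 100 ))
}

# Example: a partition that can shrink to 100 GiB, padded by 15%
# resize_target $((100 * 1024**3)) 15
```

With a 100 GiB minimum, 5% adds about 5 GiB of headroom and 15% about 15 GiB, so at that size each extra percent is roughly another gigabyte of free space.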

-

RE: Mounting and extracting files from an image posted in Feature Request

Ran this from the Ubuntu 18 box:

root@ubuntu18:~# zstdmt -dc </images/_Windows10Prox641909/d1p4.img | partclone.info -s -
Partclone v0.3.11 http://partclone.org
Showing info of image (-)
File system: NTFS
Device size: 9.9 GB = 2412369 Blocks
Space in use: 9.6 GB = 2351625 Blocks
Free Space: 248.8 MB = 60744 Blocks
Block size: 4096 Byte
image format: 0002
created on a: 64 bits platform
with partclone: v0.3.13
bitmap mode: BIT
checksum algo: NONE
checksum size: n/a
blocks/checksum: n/a
reseed checksum: n/a

-

RE: Mounting and extracting files from an image posted in Feature Request

I bet my issue is partclone being an older version. I spun up a new Ubuntu 18 server during lunch, installed partclone and zstd, and ran

zstdmt -dc </images/_Windows10Prox641909/d1p4.img | partclone.restore -C -O /d1p4.extracted.img -Nf 1 --restore_raw_file

and it extracted the image successfully. I suppose it’s time for me to get away from Ubuntu 16.
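Since the goal is to script this, the command that finally worked can be wrapped in a function. In this sketch `extract_partition` is a hypothetical name, the partclone flags are exactly the ones from the working command, and the read-only loop mount in the comments assumes the partition is NTFS and that the system can mount NTFS (for example via ntfs-3g).

```bash
# extract_partition IMAGE OUTPUT
# Decompress a zstd-compressed FOG partition image and let partclone write
# it out as a raw filesystem image (flags copied from the working command).
extract_partition() {
  local img="$1" out="$2"
  zstdmt -dc < "$img" | partclone.restore -C -O "$out" -Nf 1 --restore_raw_file
  # Fail if either side of the pipeline failed, not just partclone.
  local status=("${PIPESTATUS[@]}")
  [ "${status[0]}" -eq 0 ] && [ "${status[1]}" -eq 0 ]
}

# Example (the mount needs root; the mount point is arbitrary):
#   extract_partition /images/_Windows10Prox641909/d1p4.img /d1p4.extracted.img
#   mkdir -p /mnt/d1p4 && mount -o loop,ro /d1p4.extracted.img /mnt/d1p4
```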

I’m a little hesitant to jump into learning how to compile programs from source. The last time I compiled anything was in QBasic in the late 90s.

@Quazz I really appreciate your help.

-

RE: Mounting and extracting files from an image posted in Feature Request

I’m finding that partclone error messages aren’t very helpful. Perhaps I should be using some debug or verbose option.

root@fog:~# zstdmt -dc </images/_Windows10Prox641909/d1p4.img | partclone.info -s -
Partclone v0.2.86 http://partclone.org
Display image information
main, 153, not enough memory
Partclone fail, please check /var/log/partclone.log !

-

RE: Mounting and extracting files from an image posted in Feature Request

I ran apt update and apt upgrade, but my partclone is still at 0.2.86. I also ran

zstdmt -dc </images/_Windows10Prox641909/d1p4.img | partclone.restore -C -O /d1p4.extracted.img -Nf 1 --restore_raw_file

and this time it brought up a text-based interface for partclone, and it gave me the same error saying I needed 820488013636592786 bytes of memory.

I did some digging on Google and now I’m wondering if I need to upgrade to Ubuntu 18 to be able to get the new partclone.

EDIT: My zstd is at version 1.3.1. I’m able to decompress the image file (3 GB) to another image file that is roughly the size of the data that should be on that partition (8 GB), so I’m thinking zstd is ok.

-

Mounting and extracting files from an image posted in Feature Request

Re: Mount and Extract files from images

TL;DR: +1 for this feature

Since I started using Fog around 2017 there have been several occasions where it would have been really handy to be able to decompress and mount a Fog image so I can grab a few files or folders from it. I tend to use Fog for backing up old machines just as much as deploying new ones. Right now I’ve got a dummy VM on a lab server set to boot from the network and I’ll deploy an image to it when I need to recover something. This works OK, and I usually end up just mounting the .vhdx to another test VM as a secondary drive so I can browse and copy what I need. It just takes a while when I have an image that is several hundred GB and I only need one file from it. I know even if this were done on the Fog server it would still have to decompress and extract the entire image, but it would be nice if this could be automated. It would eliminate a lot of image juggling and deploying and potential human error.

I’ve spent the last two days trying to figure out how to mount a Fog image in Ubuntu 16 Server. I can decompress it but partclone always gives some kind of error or tells me I need almost an exabyte of memory. Here is what I’ve tried:

sudo -i
cd /images/_Windows10Prox641909
touch d1p4.extracted.img
cat d1p4.img | zstd -dcf | partclone.restore -C -s - -O /d1p4.extracted.img --restore_raw_file

and here is what I get:

Partclone v0.2.86 http://partclone.org
Starting to restore image (-) to device (d1p4.extracted.img)
There is not enough free memory, partclone suggests you should have 820488013636592786 bytes memory
Partclone fail, please check /var/log/partclone.log !

I’ve come across several examples of this being done, as well as different ways to do the same thing, but none of them have worked for me. If I could figure out what partclone needs or figure out the correct syntax, I could script the process and make it a bit less painful. I’ve also tried partclone.ntfs instead of partclone.restore, but it gives the same results. This Ubuntu box has 2.17 TB of free space, so there should be plenty of room to extract the entire image to a raw file. d1p4.img is a 127 GB NTFS partition in this case.

Thank you for your time and consideration.

-

RE: Image Size on Client Incorrect posted in Bug Reports

I’ve seen the OP’s issue for several years on ~20 fog servers on various hardware platforms (virtual and bare metal), using both resized and non-resized images. I can confirm that this was still an issue on 1.5.8 but today I upgraded to 1.5.9 and it seems to be resolved. Old images still show the incorrect size, but recapturing them updates the image size on client to the correct value, which is approximately the minimum required hard drive capacity on the client when deploying the image.

-

RE: Adding needed repository... Failed! posted in FOG Problems

I just ran into this issue:

* Adding needed repository....................................Failed!

Fresh install of Ubuntu Server 18.04 LTS using the network installer on an old IBM X3650 server. The only software I selected to install during Ubuntu setup was the SSH server. I downloaded and extracted the tarball from https://github.com/FOGProject/fogproject/archive/1.5.5.tar.gz. I could not get past the error until I ran this:

sudo apt-get install software-properties-common

It looks like the FOG install script expected that package to already be installed. Hope this helps someone.
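For anyone scripting fresh installs, a small pre-flight check can install the missing package before the FOG installer runs. In this sketch, add-apt-repository is the command that software-properties-common provides, `ensure_cmd` is a hypothetical helper, and the installer steps in the comments assume the 1.5.5 tarball linked above, which GitHub extracts into a fogproject-1.5.5 directory.

```bash
# ensure_cmd COMMAND PACKAGE
# Install PACKAGE with apt-get only if COMMAND is not already on the PATH.
ensure_cmd() {
  local cmd="$1" pkg="$2"
  command -v "$cmd" >/dev/null 2>&1 && return 0
  sudo apt-get install -y "$pkg"
}

# Example pre-flight before running the FOG installer:
#   ensure_cmd add-apt-repository software-properties-common
#   wget https://github.com/FOGProject/fogproject/archive/1.5.5.tar.gz
#   tar -xzf 1.5.5.tar.gz && cd fogproject-1.5.5/bin && sudo ./installfog.sh
```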

Ben

-

RE: Seems like you are trying to restore to an empty disk. Be aware this will most probably cause trouble. posted in FOG Problems

@Quazz Nice explanation of the issues regarding the order of the partitions and the starting positions. I think that’s exactly what was going on. Since the actual usage of the original 1TB drive was only ~150GB, I went with a 240GB SSD which has always worked for me before. It gave me that “Seems like you are trying to restore to an empty disk…” error every time I tried to deploy that image to anything smaller than 2TB. Now that the two little partitions at the end of the original drive are gone, I recaptured it and it deployed just fine to a 240GB SSD.

-

RE: Seems like you are trying to restore to an empty disk. Be aware this will most probably cause trouble. posted in FOG Problems

Success! The issue all along appears to have been the fact that the original 1TB hard drive had two small partitions at the end of the drive. I know one of them was a 20GB recovery partition; the other was 2GB, and I don’t know what it was for. The last things I did were to delete those partitions using gparted, make sure the drive still booted Windows, then manually resize the main partition and start a capture. Now that the two partitions at the end are gone, I believe it will work just fine the way I’m used to. I normally look at the size of the data on the drive and choose an SSD based on that, even if the original hard drive was 1TB, 2TB, or more.

In short, if you run into this issue, make sure there are no partitions hanging around at the end of the drive after the main partition. It would be helpful if Fog / PartClone could see that and give a slightly more detailed error. I appreciate everyone’s help on this.
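To spot this before capturing, the partition table can be dumped with sfdisk -d and checked for partitions that start after the main one. In this sketch `trailing_partitions` is a hypothetical helper, and it treats the largest partition as the main Windows partition, an assumption that fits the layout described here but not every disk.

```bash
# trailing_partitions
# Read `sfdisk -d` output on stdin and print every partition that starts
# after the largest partition.
# ASSUMPTION: the largest partition is the main OS partition.
trailing_partitions() {
  awk '
    /^\/dev\// {
      n++
      name[n] = $1
      s = $0; sub(/.*start= */, "", s); sub(/,.*/, "", s); start[n] = s + 0
      z = $0; sub(/.*size= */, "", z);  sub(/,.*/, "", z); size[n] = z + 0
      if (n == 1 || size[n] > size[big]) big = n
    }
    END {
      for (i = 1; i <= n; i++)
        if (start[i] > start[big]) print name[i]
    }'
}

# Usage (read-only dump; sfdisk needs root): sudo sfdisk -d /dev/sda | trailing_partitions
```

Any partition it prints sits after the main partition, which is the situation that caused the "restore to an empty disk" error in this thread.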