Replication oddity after moving Master node

-

@Kiweegie You might draw a picture of your setup to make this clearer. At first sight I have no idea where the image “appearing out of the blue” might come from.

-

@sebastian-roth You’re quite right, I’ve just re-read that myself and it’s confusing… I was using made-up names for reasons of privacy.

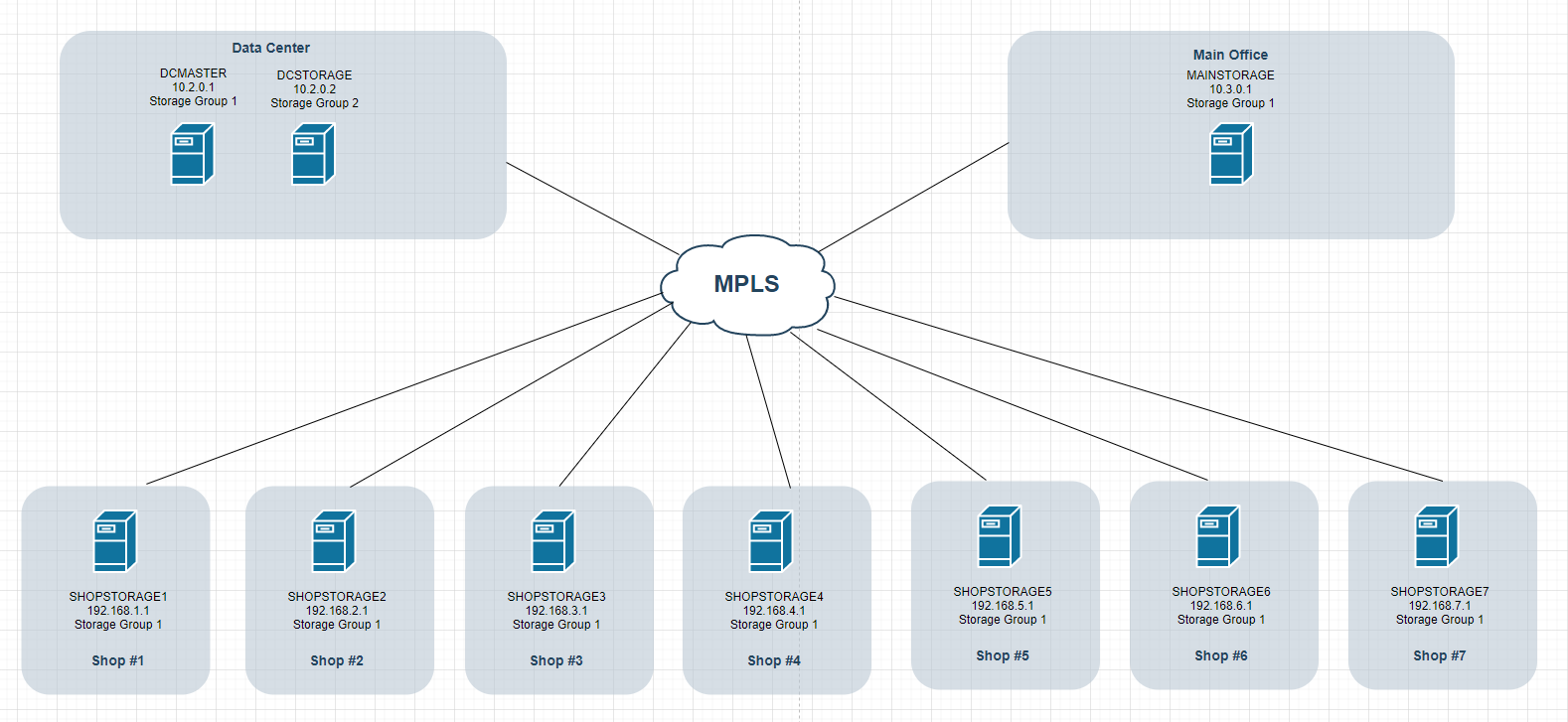

This image should hopefully help show the layout a little more clearly.

DCMASTER is set as Master node for Storage Group 1

DCSTORAGE is set as Master node for Storage Group 2

MAINSTORAGE is a storage node on Storage Group 1

All the SHOPSTORAGE nodes are storage nodes on Storage Group 2

Image capture to DCMASTER is working fine - I can see the MAC address of the image machine hitting /mnt/images/dev as the image itself is uploading, that part seems fine.

Images in question have been assigned to both Storage Group 1 and Storage Group 2 with Storage Group 2 set as primary. The goal is to have DCMASTER host all images and sync all of them to all storage nodes via membership to both Storage groups.

2 issues being faced:

MAINSTORAGE is showing the PreSysprep and PostSysprep image folder structure before it’s fully uploaded and showing on DCMASTER

None of the SHOPSTORAGE nodes are getting the images replicated. I can try rsyncing the images over to DCSTORAGE manually so they sync in turn to the other storage nodes but was looking for this to happen automatically.

Am I correct in stating that if MAINSTORAGE is replicating from DCMASTER then the folder names and sizes should be identical? Should the structure show up on MAINSTORAGE before it appears on DCMASTER?
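For what it’s worth, here is a rough sketch of how the two trees could be compared. It assumes both image directories are reachable as local paths (for example /mnt/images on DCMASTER and a mount or copy of the MAINSTORAGE one), and it only compares file names and sizes, not contents. The function names image_manifest and compare_image_dirs are made up for this example.

```shell
# Print "relative/path<TAB>size-in-bytes" for every regular file under a
# directory, sorted by path so two listings can be compared directly.
image_manifest() {
    (
        cd "$1" || exit 1
        find . -type f -exec sh -c '
            for f in "$@"; do
                printf "%s\t%s\n" "$f" "$(wc -c < "$f" | tr -d "[:space:]")"
            done' sh {} + | LC_ALL=C sort
    )
}

# Compare two image trees by file name and size only (contents are not
# checksummed). Prints "identical" or "different"; returns 2 if either
# directory cannot be read.
compare_image_dirs() {
    a=$(image_manifest "$1") || return 2
    b=$(image_manifest "$2") || return 2
    if [ "$a" = "$b" ]; then
        echo "identical"
    else
        echo "different"
        return 1
    fi
}

# Usage (the second path is hypothetical):
#   compare_image_dirs /mnt/images /path/to/MAINSTORAGE/images
```

While a capture or replication is still running the two trees would be expected to differ, so this is only meaningful once things have settled.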

We’re using the location plugin in case that has any impact (plus LDAP, WOLBroadcast plugins).

If you need more information than above let me know

regards Tom

-

After rsyncing one of the images from DCMASTER to DCSTORAGE both are now showing up on the storage node… and in turn seem to be in the process of replicating to the other Storage nodes.

I’ll need to double check all of them once the replication process has finished to see if they have the same file sizes etc.

regards Tom

-

So I just added 2 more Storage nodes to the equation. On both, replication didn’t kick in until I’d initiated a manual rsync from Master to storage node. After doing that (again for one image only) the other 3 images all synced OK.

Wondering if there needs to be some syncing of SSH keys or similar to permit the servers to talk to each other and that’s not being handled automatically? Just a theory…

regards Tom

-

@kiweegie said in Replication oddity after moving Master node:

Wondering if there needs to be some syncing of SSH keys or similar to permit the servers to talk to each other and that’s not being handled automatically? Just a theory…

Not that I know of! Sounds strange that you need to manually sync one before it does the other ones for you.

@Tom-Elliott Would you have an idea?

-

@sebastian-roth I’m not aware of anything special with this. This doesn’t make much sense to me. We don’t use SSH at all for replicating data. LFTP is used. So maybe SELinux was causing issues that rsync corrected for? I don’t know otherwise.
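If SELinux is a suspect, a quick check along these lines might help rule it in or out on each node. This is only a sketch: selinux_mode is a made-up helper name, and it assumes the standard getenforce tool is what is installed on the node (it falls back to a placeholder value if not).

```shell
# Report the current SELinux mode, or "unavailable" when the getenforce
# tool is not installed (for example on a distro without SELinux).
selinux_mode() {
    if command -v getenforce >/dev/null 2>&1; then
        getenforce    # prints Enforcing, Permissive or Disabled
    else
        echo "unavailable"
    fi
}

# Usage:
#   selinux_mode
```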

-

Morning gents, just revisiting this as I have some more findings. The replication issue seems to be limited to the Master node not replicating images listed for Storage Group 2, for which DCSTORAGE is the master.

Using the previously sent image of the setup, images capture to DCMASTER and replicate fine to storage nodes in the same storage group (Storage Group 1).

They are not being captured on or replicated to DCSTORAGE, however, which is the master storage node for the second storage group.

I’ve checked the wiki re replication and it states there that:

Images always capture to the primary group’s master storage node - so the image which is set as Storage Group 2 should capture to the master node for that storage group, DCSTORAGE, but is actually capturing to the Master Node, DCMASTER.

So it would seem that that image is not seeing that DCSTORAGE is its master storage node even though the image(s) are defined as such.

I’ve confirmed also that DCSTORAGE is set as the Master node for Storage Group 2

Anything else I could be missing here?

regards Tom

-

Additional note - when an image is captured (to the Master node) its ownership is set to the local unix user:root, not fogproject. Not sure if this has a bearing on things?
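A small sketch for spotting which image folders have the unexpected owner, in case it helps narrow this down. The helper name not_owned_by is made up, and the paths and user name in the usage line are simply the ones mentioned in this thread; whether ownership actually matters for replication is not established here.

```shell
# List the entries directly under a directory whose owner is NOT the given
# user. The user may be given as a name or a numeric uid.
not_owned_by() {
    user=$1
    dir=$2
    find "$dir" -mindepth 1 -maxdepth 1 ! -user "$user"
}

# Usage:
#   not_owned_by fogproject /mnt/images
```

An empty result means everything at the top level is owned by that user.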

-

@kiweegie So the way replication works for Storage groups:

- An image can be defined to multiple storage groups.

- An image can be told which storage group is the image’s Primary Group

What this does:

The image’s Primary Storage group will then replicate to the master nodes of the other storage groups that are defined for that image.

If I’m to draw a picture of your environment:

So this image is configured to have Storage Group 1 and Storage Group 2, but what it sounds like, to me, is this image has Storage Group 1 set as the Primary group. Can you go to the image and validate that Storage Group 2 is defined as this image’s Primary Storage group?
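The primary flag could probably also be read straight from the database. The sketch below is hedged: it assumes the imageGroupAssoc table carries an igaPrimary column (worth confirming with DESCRIBE imageGroupAssoc; first), that the database is named fog, and primary_group_sql is just a made-up helper that prints the query.

```shell
# Print a query listing each storage group assigned to an image together
# with its primary flag.
# ASSUMPTION: imageGroupAssoc has an igaPrimary column; verify before use.
# The image name is inserted as-is, so it must not contain a single quote.
primary_group_sql() {
    name=$1
    cat <<EOF
SELECT imageName, ngName, igaPrimary
FROM imageGroupAssoc
LEFT OUTER JOIN images ON igaImageID = imageID
LEFT OUTER JOIN nfsGroups ON igaStorageGroupID = ngID
WHERE imageName = '$name';
EOF
}

# Usage (database name and credentials are assumptions):
#   primary_group_sql 'nameofimage' | mysql -D fog
```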

Can you also get the output from mariadb/mysql of:

    SELECT imageID,imageName,ngID,ngName
    FROM imageGroupAssoc
    LEFT OUTER JOIN images ON igaImageID = imageID
    LEFT OUTER JOIN nfsGroups ON igaStorageGroupID = ngID
    WHERE imageName = 'nameofimage';

-

@tom-elliott said in Replication oddity after moving Master node:

SELECT imageID,imageName,ngID,ngName

FROM imageGroupAssoc

LEFT OUTER JOIN images ON igaImageID = imageID

LEFT OUTER JOIN nfsGroups ON igaStorageGroupID = ngID

WHERE imageName = 'nameofimage';

Thanks for getting in touch Tom.

The idea that both storage groups “can” apply to an image was throwing me, I confess. I actually added a brand new storage group, “Storage Group 3”, for the sake of the earlier example and deleted Storage Group 2.

I’ve set only Storage Group 3 on the image(s) I want to sync from the DCSTORAGE node and made sure it’s set as master

Output of SQL above is as follows:

    Database changed
    MariaDB [fog]> SELECT imageID,imageName,ngID,ngName
        -> FROM imageGroupAssoc
        -> LEFT OUTER JOIN images ON igaImageID = imageID
        -> LEFT OUTER JOIN nfsGroups ON igaStorageGroupID = ngID
        -> WHERE imageName = 'My_image';
    +---------+------------+------+-----------------+
    | imageID | imageName  | ngID | ngName          |
    +---------+------------+------+-----------------+
    |       9 | My_image   |    4 | Storage Group 3 |
    +---------+------------+------+-----------------+
    1 row in set (0.01 sec)

On the assumption this is correct (now), do I need to restart any services or servers themselves to have them pick up on this change and start syncing?

regards Tom

-

@kiweegie You shouldn’t need to, but it wouldn’t hurt.

I haven’t played in the replicator code in quite some time though.

You can restart the replication services via:

    for i in FOG{Image,Snapin}Replicator.service; do systemctl restart "$i"; done

-

@kiweegie Based on what I see in the SQL, “My_image” is only set for Storage Group 3. So if My_image is not on DCSTORAGE, it won’t get replicated.

So what you should see in the output there would be something like:

    +---------+-----------+------+-----------------+
    | imageID | imageName | ngID | ngName          |
    +---------+-----------+------+-----------------+
    |       9 | My_image  |    4 | Storage Group 3 |
    |       9 | My_image  |    3 | DCSTORAGE       |
    +---------+-----------+------+-----------------+

-

@tom-elliott So to clarify that last point Tom, the SQL should reflect under ngName both the Storage group name AND the Storage node name? DCSTORAGE in this case is the name of the Storage node master server for the Storage Group 3 storage group.

I’ve compared to the other image(s) which are only hosted on (and are being replicated from) the Master node and they only reflect the main storage group under ngName.

I’ve made note of the replicator service restart command, cheers. I went a bit neanderthal and just rebooted all my servers just now to see if that kicked things into life.

Checking the Image Replicator logs under FOG Configuration > Log Viewer it only references DCMASTER and the 2 nodes in its storage group. Does replication from the Storage master of the other storage group show in these logs? Or do we need to check local log files on the server itself?
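For the local-log route, something like the sketch below could be run on DCSTORAGE itself. It assumes the image replicator writes to /opt/fog/log/fogreplicator.log, which I have not confirmed for this install, so the path can be overridden; replicator_lines_for is a made-up helper name.

```shell
# Show the lines of a replicator log that mention a given node name.
# ASSUMPTION: the default log path below may differ on your install; pass
# the real path as the second argument if so.
replicator_lines_for() {
    node=$1
    log=${2:-/opt/fog/log/fogreplicator.log}
    grep -F -- "$node" "$log"
}

# Usage:
#   replicator_lines_for SHOPSTORAGE
#   replicator_lines_for DCSTORAGE /path/to/other.log
```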

cheers Tom