Improve MD RAID imaging speed?

-

Does anyone have tips for improving speed & efficiency when imaging MD RAID systems?

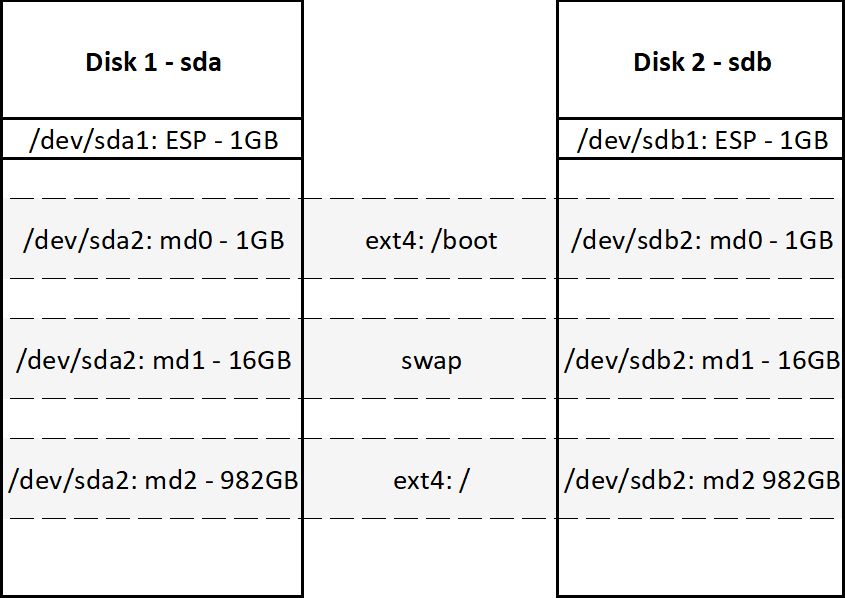

I’m working with UEFI boot machines with dual 1TB drives. UEFI throws us a curve ball in that you can’t make the EFI System Partition part of a RAID array; instead you need duplicated normal ESPs on both drives (a shortcoming of the EFI specification, apparently). The remainder of the drives consist of RAID 1 arrays, e.g.:

As far as I can tell, FOG’s only imaging option here is multi-partition, non-resizable. That puts Partclone into dd mode, which, as you can imagine, is slow on 1TB (SATA) drives. Capturing the image yesterday took 5 or 6 hours. Restoring the image, which I’m doing today, looks like it will take circa four hours (for both drives).
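For a sense of scale, here is a back-of-the-envelope calculation of the average throughput those capture times imply. It is a sketch that assumes 1TB = 10^12 bytes and that raw mode copies every byte of the drive, whether or not it holds data.

```python
# Average throughput implied by a raw (dd-mode) capture.
# Assumptions: 1 TB = 10**12 bytes, and raw mode reads every byte of the drive.

ONE_TB = 10**12

def throughput_mb_per_s(bytes_copied: int, hours: float) -> float:
    """Average rate in MB/s (10**6 bytes per second) over the whole job."""
    return bytes_copied / (hours * 3600) / 10**6

for hours in (5, 6):
    rate = throughput_mb_per_s(ONE_TB, hours)
    print(f"1 TB in {hours} h -> {rate:.1f} MB/s")  # 55.6 MB/s, then 46.3 MB/s
```

Because raw mode copies empty space too, that 46 to 56 MB/s is spent on the whole 1TB, not just the portion that holds data.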

I’ve scoured the wiki (and the internet) for ideas to speed up this process, but I’m drawing a blank. It seems highly inefficient for Partclone to write nearly 1TB worth of zeroes.
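The sketch below shows why skipping empty space matters. It copies a stream block by block and seeks over blocks that are entirely zero instead of writing them. This is plain zero-block detection. It is a different technique from Partclone’s filesystem-aware modes, which copy only the blocks the filesystem reports as in use. The 4 KiB block size is an assumption.

```python
import io

BLOCK_SIZE = 4096  # assumed comparison granularity (4 KiB)

def sparse_copy(src, dst, block_size: int = BLOCK_SIZE) -> tuple[int, int]:
    """Copy src to dst, seeking over all-zero blocks instead of writing them.

    Returns (blocks_written, blocks_skipped). Skipping is only safe if the
    destination already reads back as zeroes in those regions, e.g. a new
    regular file (holes read as zero) or a freshly zeroed/discarded disk.
    """
    zero_block = bytes(block_size)
    written = skipped = 0
    while True:
        chunk = src.read(block_size)
        if not chunk:
            break
        if chunk == zero_block[: len(chunk)]:
            # Leave a hole: advance the write position without writing.
            dst.seek(len(chunk), io.SEEK_CUR)
            skipped += 1
        else:
            dst.write(chunk)
            written += 1
    # If the data ended with skipped zeroes, a regular file would be too short;
    # writing a single zero byte at the final offset gives it the full length.
    end = dst.tell()
    if dst.seek(0, io.SEEK_END) < end:
        dst.seek(end - 1)
        dst.write(b"\0")
    return written, skipped

source = io.BytesIO(b"A" * 4096 + bytes(8192) + b"B" * 100 + bytes(4096))
target = io.BytesIO()
print(sparse_copy(source, target))              # (2, 3)
print(target.getvalue() == source.getvalue())   # True
```

Only 2 of the 5 blocks are actually written. On a mostly empty 1TB partition, the same idea would avoid most of the write traffic.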

Side note: in case anyone wonders why the machines are set up this way, they will be imaged centrally but then deployed to remote locations with no on-site technical resource. RAID 1 is one of our strategies for reducing downtime on drive failure.

-

@robpomeroy When you image, are you using the md virtual device? Typically it’s /dev/md126 or /dev/md127 if you are using the Intel “fake” RAID controller. If I get time this morning I’ll grab a Dell 7040 to see if I can duplicate the issue. As long as you are not using LVM, single disk resizable should work with md disks.

-

@george1421 OK, I didn’t understand the problem until I tried to set it up. For UEFI, the EFI partition needs to be a standard partition. The second part is that in UEFI mode the Intel RAID adapter isn’t seen, and in BIOS mode the Ubuntu 20.04 installer doesn’t see the Intel RAID. If you create a software md-based RAID in BIOS mode instead, the boot partition needs to be a standard partition. Hmmmm

-

Hey George, I’m using Linux MD RAID, not Intel RST. FWIW, the devices under Linux are at /dev/md0, etc., as you would expect. The ESP partitions are always formatted as VFAT. I imagine the MD RAID physical partitions and their child partitions will be visible provided you load the mdraid kernel driver. Partclone’s documentation mentions nothing about handling MD RAID (that I can find).

-

@robpomeroy As far as I can see, RAID 1 is part of the issue here.

I realize you’re likely aware of these things already, but just so others might better understand:

The RAID, in and of itself, has nothing to do with the imaging speed. This is because of how FOG handles imaging. You should be able to capture an image regardless of how it’s set up, as long as it meets FOG’s requirements. That said, Linux RAID as presented to the system is not a typical scenario FOG is designed for. What do I mean?

FOG handles particular filesystems for resizing: NTFS and EXT (2, 3, 4). Because /dev/sda2 is partitioned as Linux RAID (type FD), there is no way to capture the second partition as resizable. As far as FOG is concerned, it’s dealing with two partitions, both of which present as raw.
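The rule described above can be modelled in a few lines. This is a hypothetical simplification of the post’s description, not FOG’s actual code. The disk layout is assumed to be an ESP on sda1 and an md member on sda2, and the filesystem type strings are those `blkid` typically reports.

```python
# Hypothetical model of the rule described above; NOT FOG's actual code.
# Only filesystems FOG can resize get a filesystem-aware capture; anything
# else (including an md RAID member) falls back to raw.

RESIZABLE_FILESYSTEMS = {"ntfs", "ext2", "ext3", "ext4"}

def capture_mode(fs_type: str) -> str:
    """Return "resizable" for a supported filesystem, otherwise "raw".

    fs_type is what a probe such as `blkid -o value -s TYPE <dev>` reports;
    md RAID member partitions report "linux_raid_member".
    """
    return "resizable" if fs_type.lower() in RESIZABLE_FILESYSTEMS else "raw"

# Assumed layout for one of the drives discussed in this thread.
layout = {"/dev/sda1": "vfat", "/dev/sda2": "linux_raid_member"}
for device, fs_type in layout.items():
    print(device, fs_type, capture_mode(fs_type))
```

Both partitions come out as raw, which matches the post. The ext4 filesystem is hidden inside the md member, so the resizable path never sees it.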

So a 1 TB capture in 5-6 hours seems pretty good. (Long, but it makes sense, as it’s likely capturing raw anyway.)

Now, on the deploy, you are not just writing to one disk. Typically a deploy goes MUCH faster than a capture (for obvious reasons, I think). In your particular case, however, you have RAID 1, so when the image is written to the disk it’s also written to the redundant disk. This is the basic premise of RAID 1. So, quite literally, you’re writing to disk twice for every file (or block, in this case).
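The model below puts numbers on this by counting the bytes physically written when each block lands on both mirrors. The 100 MB/s per-disk write rate is an arbitrary assumption, not a figure from this thread, and the model ignores network and compression overhead. It also separates two cases: the two copies written at the same time (as md RAID 1 does within one array), or one disk after the other.

```python
# Simple model of the extra writing RAID 1 causes on deploy.
# Assumptions: every mirror receives a full copy of the data, and every disk
# sustains the same sequential write rate (disk_mb_s, in 10**6 bytes/s).

def raid1_deploy(logical_bytes: int, mirrors: int, disk_mb_s: float,
                 concurrent: bool) -> tuple[int, float]:
    """Return (total bytes physically written, estimated hours)."""
    physical_bytes = logical_bytes * mirrors
    hours_per_disk = logical_bytes / (disk_mb_s * 10**6) / 3600
    # Concurrent mirrors finish together; sequential ones add up.
    hours = hours_per_disk if concurrent else hours_per_disk * mirrors
    return physical_bytes, hours

for concurrent in (True, False):
    total, hours = raid1_deploy(10**12, 2, 100, concurrent)
    print(f"concurrent={concurrent}: {total / 10**12:.0f} TB written, ~{hours:.1f} h")
# concurrent=True: 2 TB written, ~2.8 h
# concurrent=False: 2 TB written, ~5.6 h
```

The data written always doubles. Whether the wall-clock time doubles depends on how the two copies are scheduled.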

If you had a proper RAID system (hardware or software based) that presents as a single disk in RAID 1, you would probably have no issues capturing the image in resizable mode. Of course, with RAID 1, you’d still likely see somewhat longer deploy times, as it would again be performing two writes for every block.

-

@tom-elliott Yes, exactly. I believe the slowness in deploying an image could also be down to the lack of insight Partclone has into the contents of the MD RAID partitions? I imagine it is faithfully writing long sequences of zeroes, because we’re in dd/raw mode.

I’m hoping that, soon if not yet, Partclone will be able to “lift the veil” on MD RAID 1 partitions and clone in a more efficient manner. Since we have all the data in that partition, we don’t need to worry about faithfully recreating striping/checksums, etc. I’d be perfectly happy with a solution that imaged only one drive, and left the other drive to be populated by a RAID re-sync, if that improved speed to boot.
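The "image one drive, let a RAID re-sync populate the other" idea could look roughly like the command plan below. It would run after a raw restore to the first disk only. This is a sketch under several assumptions: identical disks at /dev/sda (restored) and /dev/sdb (blank), partition 1 is the ESP, each md array’s member uses the same partition number on both disks, and sgdisk and mdadm are available. The Python only builds and prints the commands. It does not run them.

```python
import shlex

def resync_plan(src: str, dst: str, esp_part: int,
                arrays: dict[str, int]) -> list[list[str]]:
    """Build commands that rebuild the second disk from the first.

    arrays maps an md device (e.g. "/dev/md0") to the partition number of its
    member on each disk. Device names and numbering are assumptions.
    """
    plan = [
        # Copy src's GPT layout onto dst, then give dst unique GUIDs.
        ["sgdisk", f"--replicate={dst}", src],
        ["sgdisk", "--randomize-guids", dst],
        # The ESP is not mirrored by md, so copy it byte for byte.
        ["dd", f"if={src}{esp_part}", f"of={dst}{esp_part}", "bs=1M"],
    ]
    for md_dev, part in arrays.items():
        # Start the array degraded from the restored member only...
        plan.append(["mdadm", "--assemble", "--run", md_dev, f"{src}{part}"])
        # ...then add the blank member; md rebuilds it in the background.
        plan.append(["mdadm", "--manage", md_dev, "--add", f"{dst}{part}"])
    return plan

for cmd in resync_plan("/dev/sda", "/dev/sdb", 1, {"/dev/md0": 2}):
    print(shlex.join(cmd))
```

The rebuild still writes the full partition to the second disk. However, that happens locally after FOG has finished, so FOG only transfers one disk’s worth of data. If the array is already auto-assembled at boot, the assemble step may be unnecessary.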

Are there any other tools FOG might have at its disposal (he asks, plaintively)?

-

@robpomeroy First let me say that partclone can handle md raid just fine. The issue is related to disk structure and EFI requirements.
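The sketch below illustrates that point by pointing a filesystem-specific Partclone tool at the assembled md device rather than at the raw member partitions, so only used blocks would be copied. The device names, the ext4 filesystem on /dev/md0 and the image paths are assumptions. `-c`, `-s` and `-o` are Partclone’s clone, source and output options. The code only builds the argument lists.

```python
import shlex

# Partclone ships one binary per filesystem; this table covers the types
# relevant to this thread (assumed, not exhaustive).
PARTCLONE_TOOLS = {
    "ext2": "partclone.ext2",
    "ext3": "partclone.ext3",
    "ext4": "partclone.ext4",
    "vfat": "partclone.vfat",
    "ntfs": "partclone.ntfs",
}

def partclone_capture(device: str, fs_type: str, image_path: str) -> list[str]:
    """Build a Partclone clone command (-c) from device (-s) to image (-o)."""
    try:
        tool = PARTCLONE_TOOLS[fs_type]
    except KeyError:
        raise ValueError(f"no filesystem-aware Partclone tool listed for {fs_type!r}") from None
    return [tool, "-c", "-s", device, "-o", image_path]

# Image the filesystem inside the array, not the RAID member partitions.
print(shlex.join(partclone_capture("/dev/md0", "ext4", "/images/md0.img")))
print(shlex.join(partclone_capture("/dev/sda1", "vfat", "/images/esp.img")))
```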

If the entire disk were one md RAID volume, it would be easier, since FOG’s logic of looping through the partitions would work as intended. So if you were to set up md0 and then partition that space for boot, root, and swap, that would address the first part. The second part is a bit trickier, in that you need a valid EFI partition on each disk. That way, if disk 0 failed, the system could still boot from disk 1.

This is just me thinking out loud at the moment: a postinit script might help us here. Postinit scripts run before anything really starts on the target computer. You could use a postinit script (on a deploy) to wipe the disks, create the EFI partition on each disk, and then set up md0 on the remaining disk space. Then have FOG image a single partition (/dev/sda2). Since all of the machines are alike, you wouldn’t need to worry about resizing the root partition to different disk sizes.
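A postinit script along those lines might issue commands like the ones generated below. This is a sketch, not a tested FOG postinit script. It assumes two identical disks at /dev/sda and /dev/sdb, a 512 MiB ESP, one Linux RAID partition filling the rest of each disk, and that sgdisk, mkfs.vfat and mdadm are available in FOS. In a real postinit script these would be plain shell lines; Python is used here only to lay them out.

```python
import shlex

def postinit_layout(disks: list[str], esp_size: str = "+512M",
                    md_dev: str = "/dev/md0") -> list[list[str]]:
    """Build commands that wipe each disk, create an ESP plus a RAID partition,
    format each ESP as FAT32, and mirror the RAID partitions into md_dev."""
    plan = []
    for disk in disks:
        plan += [
            ["sgdisk", "--zap-all", disk],  # destroy existing GPT and MBR structures
            # Partition 1: EFI System Partition (GPT type code ef00).
            ["sgdisk", f"--new=1:0:{esp_size}", "--typecode=1:ef00", disk],
            # Partition 2: rest of the disk, Linux RAID (type code fd00).
            ["sgdisk", "--new=2:0:0", "--typecode=2:fd00", disk],
            ["mkfs.vfat", "-F", "32", f"{disk}1"],
        ]
    members = [f"{disk}2" for disk in disks]
    # --run suppresses mdadm's confirmation prompt if old metadata is found.
    plan.append(["mdadm", "--create", md_dev, "--run", "--level=1",
                 f"--raid-devices={len(members)}", *members])
    return plan

for cmd in postinit_layout(["/dev/sda", "/dev/sdb"]):
    print(shlex.join(cmd))
```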

If you were using Intel RST, you would use a postinit script to set up the md RAID so FOG could image it.
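Whichever way the array gets set up, it seems worth confirming it is assembled and not degraded before imaging starts. Below is a minimal sketch that parses /proc/mdstat text. It assumes the usual layout, where an array line such as `md0 : active raid1 sdb2[1] sda2[0]` is followed by a status line ending in `[2/2] [UU]`. The readiness rule is an assumption, not something FOG itself checks, and the sample text is illustrative.

```python
import re

ARRAY_LINE = re.compile(r"^(md\d+)\s*:\s*(.*)$")
STATUS = re.compile(r"\[\d+/\d+\]\s+\[([U_]+)\]")
MEMBER = re.compile(r"(\w+)\[\d+\]")

def parse_mdstat(text: str) -> dict[str, dict]:
    """Map each md device to its state, RAID level, members and health."""
    arrays: dict[str, dict] = {}
    current = None
    for line in text.splitlines():
        match = ARRAY_LINE.match(line)
        if match:
            name, rest = match.groups()
            tokens = rest.split()
            current = {
                "state": tokens[0] if tokens else "",
                "level": next((t for t in tokens if t.startswith("raid")), None),
                "members": MEMBER.findall(rest),
                "health": None,
            }
            arrays[name] = current
        elif current is not None:
            status = STATUS.search(line)
            if status:
                current["health"] = status.group(1)  # e.g. "UU", or "U_" if degraded
    return arrays

def ready_to_image(arrays: dict[str, dict], name: str) -> bool:
    """Assumed rule: the array exists, is active, and every member is up."""
    info = arrays.get(name)
    return bool(info and info["state"] == "active"
                and info["health"] and "_" not in info["health"])

SAMPLE = """Personalities : [raid1]
md0 : active raid1 sdb2[1] sda2[0]
      976630464 blocks super 1.2 [2/2] [UU]

unused devices: <none>
"""
arrays = parse_mdstat(SAMPLE)
print(arrays["md0"]["members"], ready_to_image(arrays, "md0"))  # ['sdb2', 'sda2'] True
```

On the target machine, the text would come from reading /proc/mdstat instead of the sample string.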

ref: https://forums.fogproject.org/topic/9463/fog-postinit-scripts-before-the-magic-begins

ref: https://forums.fogproject.org/topic/7882/capture-deploy-to-target-computers-using-intel-rapid-storage-onboard-raid

It looks like it might be possible to create the ESP partition with FOS Linux in a postinit script if you pluck the details out of here: https://wiki.archlinux.org/index.php/EFI_system_partition

So, how to debug this: if you schedule a debug deploy and then PXE boot the computer, that will drop you at (almost) the point where the postinit script would run. Do what you need to do to set up the md RAID, and then run the fog script to start the deployment.

-

RST gives me the heebie jeebies.

-

@robpomeroy said in Improve MD RAID imaging speed?:

RST gives me the heebie jeebies.

I’m not suggesting you use RST RAID; I only gave it as an example of how to set up md RAID in a postinit script.

I also edited my last post with a few more details.

-

@george1421 - Embarrassingly I’m a bit out of my depth to take this any further. I’ve been furiously reading up on specifics of MD and EFI, but I don’t have the breadth of OS installation experience and deep filesystem knowledge to pull it all together. (I tend to spend most of my time higher up the application stack.)

What might work for me, is to turn my attention to a backup/recovery solution - still with FOG’s assistance. As I’ve posted elsewhere, ReaR is looking like a strong contender for that. I need to run a PoC to confirm, but that might give me the higher speeds I’m looking for, as well as being more forgiving of my knowledge deficit.

-

@robpomeroy Without detracting from FOG’s capabilities, if you are looking at third-party applications there is a better solution out there that you should keep in the back of your mind: Veeam backup agent (free). You can set a NAS device as a target. The free Veeam agent will do bare metal restores, daily incremental backups, and file-level restores. The only risk when dealing with a commercial solution (free or not) is that vendors can, and sometimes do, change their free-forever stance. I’m not saying anything bad or specific about Veeam; it’s just something you need to consider. I use Veeam B&R in the office and the free Veeam agent at home and in my home lab.

-

@george1421 Right, thanks George. Interesting to see a personal vote for Veeam. It’s on my PoC list, alongside ReaR and SystemImager.

-

@robpomeroy FWIW, I have instructions for booting the Veeam bare metal recovery image with FOG. This one is for Windows, but the Linux instructions are in my home lab at the moment. It’s a very similar concept to netbooting Linux.

https://forums.fogproject.org/post/134569

-

@george1421 Yep, thanks, that’s exactly the approach I have in mind for my centralised systems.