HP ProBook 640 G8 imaging extremely slowly

-

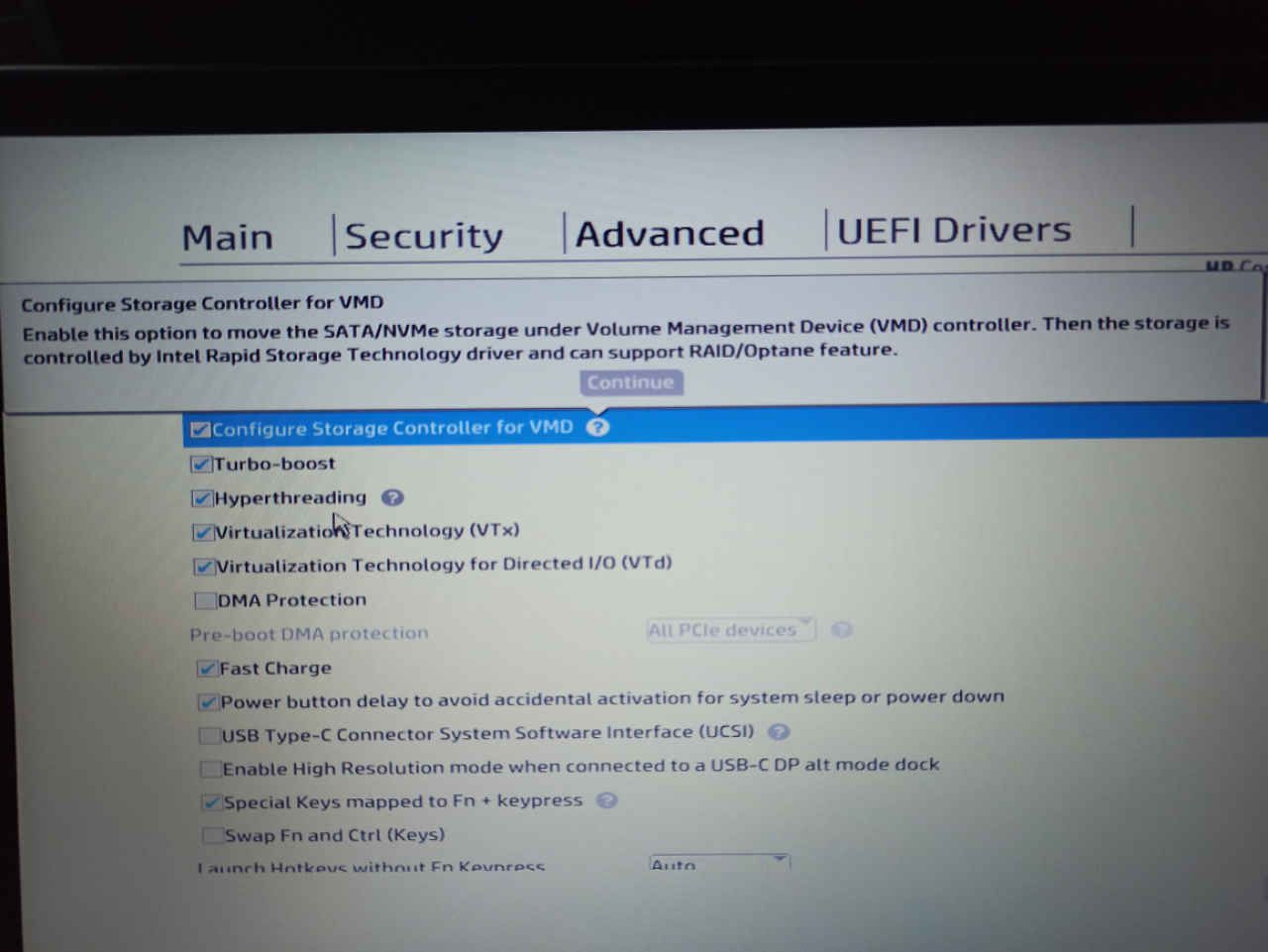

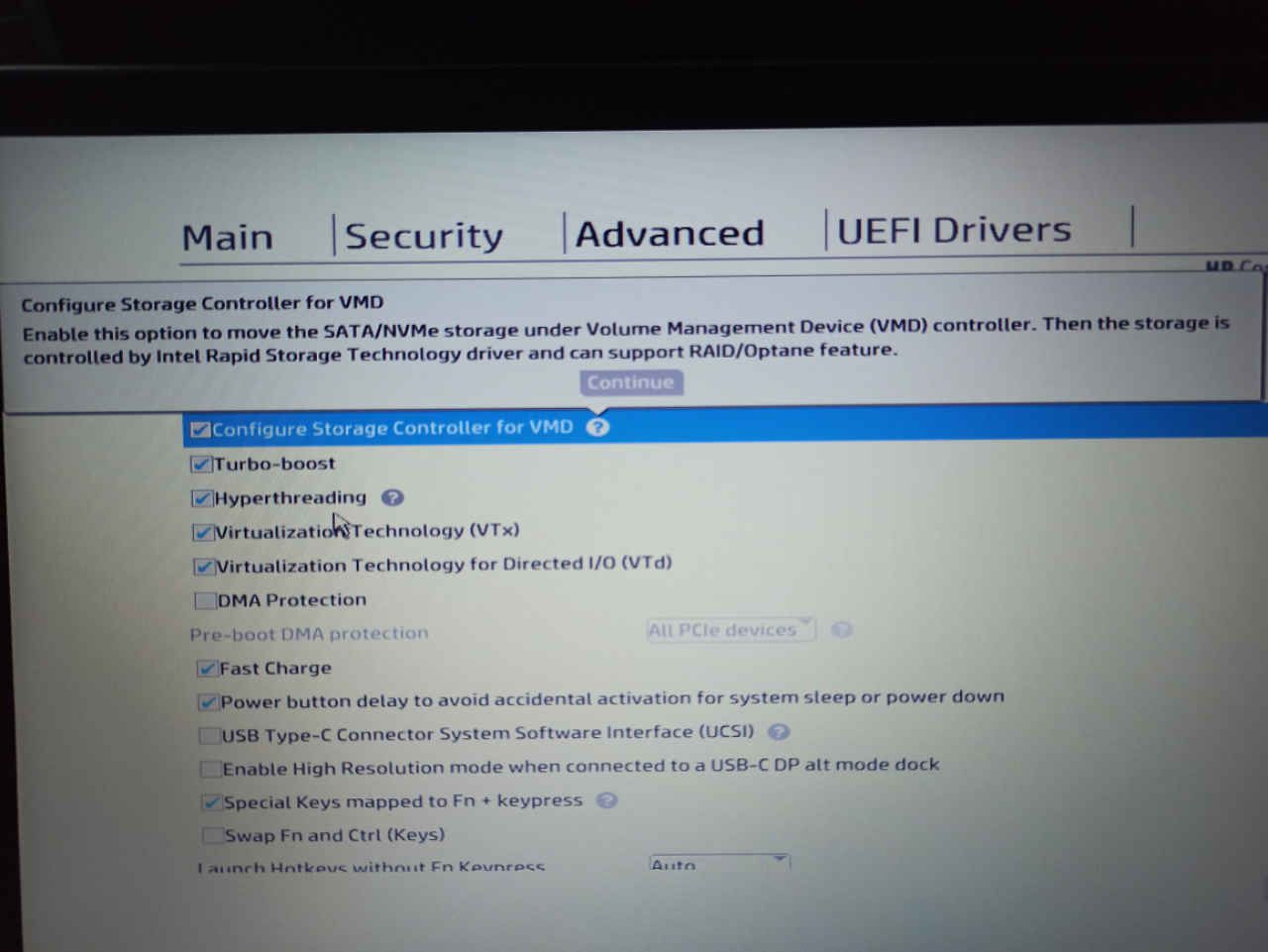

Same problem here, but solved (I think). Same model, the same extremely slow cloning performance with the 5.10.12-e1000e-3.8.7 kernel, and the same workaround with a USB device connected (a pendrive works, but not other devices such as a mouse). The solution for me was enabling “Storage Controller for VMD” under the “System Settings” menu.

I still have to test other laptops (I’ve only got one so far), but I tried twice and it looks to be working as expected (5-6 GB/s while enabled, 45 MB/s while disabled).

Congrats, and thank you very, very, very much for FOG.

-

@diegotiranya Would you help us test something on this hardware?

- Ensure you know the state of this checkbox in the firmware. We need to capture the hardware IDs with this setting on and off.

- Set up a debug capture or deploy task (it doesn’t matter which). Tick the debug checkbox before scheduling the task.

- PXE boot the target computer.

- After a few screens of text that you need to clear with the Enter key, you will be dropped to the FOS Linux command prompt. At that command prompt, key in:

`lspci -k -nn > vmdon.txt`

This writes the hardware configuration to the vmdon.txt file. To get the file off the PXE-booted computer, follow the process below.

- Power off the computer, power it back on, and change the VMD setting to OFF.

- Continue to PXE boot the computer; it should boot you right back into FOS Linux. Run the same command as above, but redirect the output to vmdoff.txt.

- Post both files in this thread.
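The capture steps above can be wrapped in a small shell function so the output file name always matches the firmware setting you just checked. This is only a sketch, not something FOG or FOS ships: `capture_pci` is a hypothetical name, and the VMD state is passed in by hand because it is a firmware checkbox the script cannot read.

```shell
#!/bin/sh
# Hypothetical helper (not part of FOG/FOS): save `lspci -k -nn` output to
# vmdon.txt or vmdoff.txt. The VMD state is typed by hand, because it is a
# firmware setting the script cannot read by itself.
capture_pci() {
  state="$1"
  case "$state" in
    on|off) ;;
    *) echo "usage: capture_pci on|off" >&2; return 1 ;;
  esac
  out="vmd${state}.txt"
  # -k shows the kernel driver in use, -nn adds numeric [vendor:device] IDs
  lspci -k -nn > "$out" || return 1
  echo "wrote $out"
}
```

Usage at the FOS prompt: `capture_pci on` on the boot with VMD enabled, then `capture_pci off` after switching the setting off.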

Now, to get the files out of FOS Linux, do the following:

1. Run `ip a s` to get the IP address of the target computer in FOS Linux.

2. Change root’s password by keying in `passwd`, and set it to something simple like hello.

3. Now you can use scp or WinSCP to move the files from the FOS Linux computer to another system so you can post them here. Log in as root, with the password you assigned in step 2, to the IP address you collected in step 1.
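For the first step, the address you need is on the `inet` line of the network card in the `ip a s` output. A minimal sketch, assuming the usual `ip a s` layout where IPv4 addresses appear as `inet ADDRESS/PREFIX`; `fos_ipv4` is a hypothetical helper that prints just those addresses and skips loopback.

```shell
# Hypothetical helper: read `ip a s` output on stdin and print each IPv4
# address without its /prefix length, skipping the 127.x loopback address.
fos_ipv4() {
  awk '$1 == "inet" { split($2, a, "/"); if (a[1] !~ /^127\./) print a[1] }'
}
```

On the FOS computer: `ip a s | fos_ipv4`. From a Linux or macOS machine you could then pull a file with something like `scp root@<fos-ip>:vmdon.txt .`, where `<fos-ip>` is a placeholder for the printed address; this assumes the file was written to root’s home directory, otherwise give its full path. As far as I know FOS runs from RAM, so copy each file off before powering the computer down.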

-

Glad to help. Output files attached. Let me know if I can do anything else.

-

@sebastian-roth said in HP ProBook 640 G8 imaging extremely slowly:

@Dungody Thanks for the update and pictures! It definitely looks like you deploy to the NVMe drive. I still can’t see why a USB key plugged into the ProBook would make a difference, though it sounds like it does.

In the picture I notice that you have partclone version 0.2.89. In FOG 1.5.9 we use the newer partclone 0.3.13. So I am wondering which version of FOG you use and if that is also playing a role here.

@Jacob-Gallant Would you want to give that a try as well?

I’d be happy to test this (and @diegotiranya’s storage controller setting) when I can, but we’ve been locked down due to COVID where I am, so I can’t get back into the office in the near term. Looks like you have a few other testers now, though! Glad I’m not the only one, haha.

-

@diegotiranya Just to confirm: with VMD on, imaging runs at normal speed without needing a USB flash drive plugged in?

VMD ON:

    0000:00:1d.0 System peripheral [0880]: Intel Corporation Device [8086:09ab]
    10000:e0:1d.0 PCI bridge [0604]: Intel Corporation Device [8086:a0b0] (rev 20)
            Kernel driver in use: pcieport

VMD OFF:

    00:1d.0 PCI bridge [0604]: Intel Corporation Device [8086:a0b0] (rev 20)
            Kernel driver in use: pcieport

It looks like the base addressing also changes between VMD on and off.
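Comparing the two captures by eye is error-prone because the bus addresses shift (`00:1d.0` becomes `10000:e0:1d.0` with VMD on). A minimal POSIX sh sketch that compares only the numeric `[vendor:device]` IDs instead; `pci_id_diff` is a hypothetical name, and it assumes both files were produced with `lspci -k -nn` as above.

```shell
# Hypothetical helper: list [vendor:device] IDs that appear in only one of two
# `lspci -k -nn` captures. Bus addresses are ignored on purpose, because with
# VMD on, devices such as the 1d.0 bridge move into a separate PCI domain.
pci_id_diff() {
  on_file="$1"; off_file="$2"
  tmp=$(mktemp -d) || return 1
  # Keep only tokens like [8086:a0b0]; class codes like [0604] have no colon.
  grep -o '\[[0-9a-f]\{4\}:[0-9a-f]\{4\}\]' "$on_file" | sort -u > "$tmp/on"
  grep -o '\[[0-9a-f]\{4\}:[0-9a-f]\{4\}\]' "$off_file" | sort -u > "$tmp/off"
  echo "only with VMD on:"
  comm -23 "$tmp/on" "$tmp/off"
  echo "only with VMD off:"
  comm -13 "$tmp/on" "$tmp/off"
  rm -r "$tmp"
}
```

Usage: `pci_id_diff vmdon.txt vmdoff.txt`. With the excerpts above it lists `[8086:09ab]` as present only with VMD on, while the `[8086:a0b0]` bridge appears in both captures, just at different addresses.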

-

@george1421 That’s right. In my case, with VMD enabled, there is no need to plug in any USB storage device in order to get normal throughput.

-

@diegotiranya said in HP ProBook 640 G8 imaging extremely slowly:

In my case, with VMD enabled, there is no need to plug in any USB storage device in order to get normal throughput.

But with VMD disabled, you can still get faster speeds if you plug in the USB key?

@Dungody Can you confirm enabling VMD in the UEFI settings will get you faster speeds without plugging in a USB key?

It seems VMD support has been in the Linux kernel for quite some time now, though I still wonder why we see it being so terribly slow when VMD is disabled.

-

@sebastian-roth Yes, the USB key workaround works for me too with VMD disabled (but I had to use a USB 3 key; an old USB 2 one didn’t work).

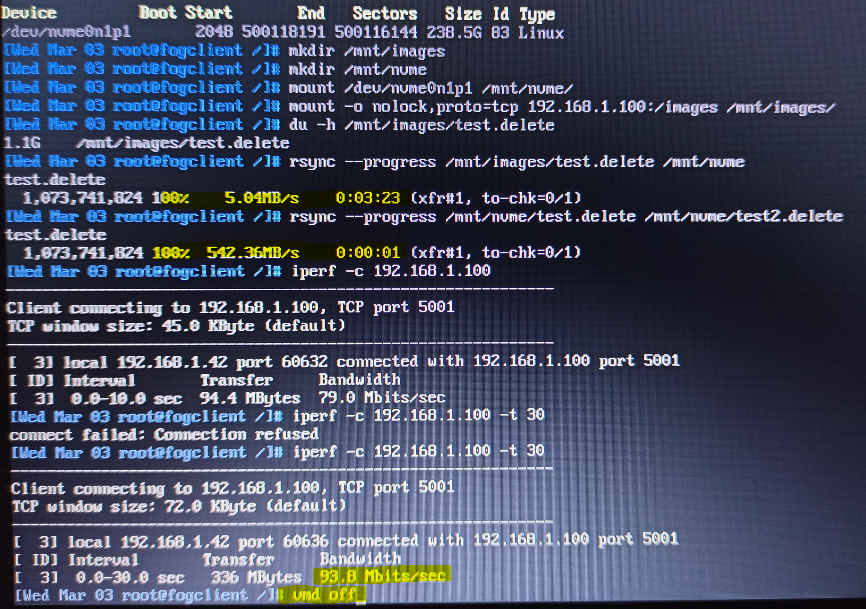

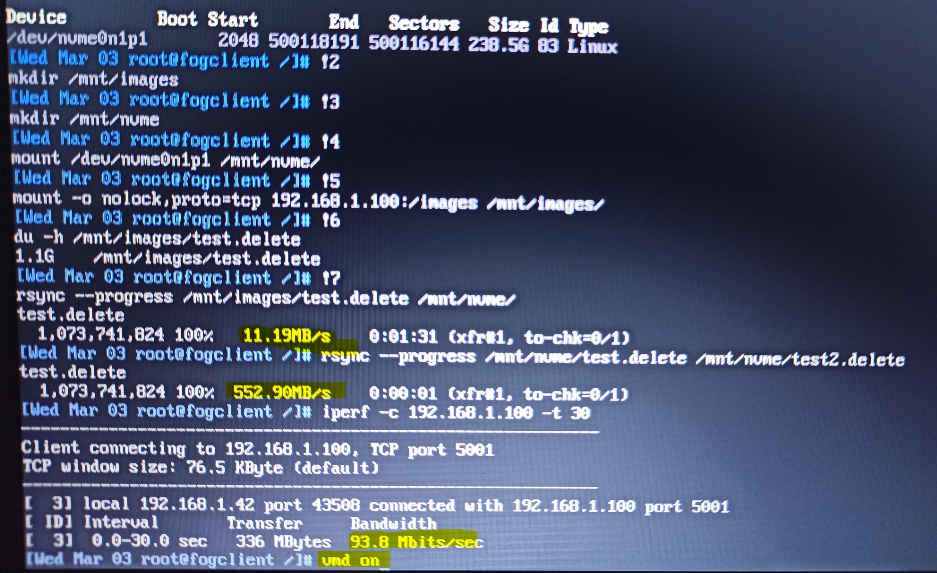

Anyway, I’ve been doing some write testing with dd and rsync from a debug task, and I’m getting the same speeds in both VMD modes.
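The post does not say exactly how the dd write test was run, so this is an assumed version: write zeros to a scratch file with `conv=fsync`, so dd reports its rate only after the data has been flushed to disk rather than just to the page cache. `write_test` is a hypothetical name. Point it at a file on a mounted filesystem, never at the raw disk device, which would overwrite the disk.

```shell
# Hypothetical helper: write COUNT MiB of zeros to a scratch FILE and let dd
# print its own throughput summary on stderr. conv=fsync flushes the data to
# disk before dd finishes, so cache speed is not mistaken for disk speed.
write_test() {
  file="$1"
  count="${2:-1024}"   # MiB to write; 1024 by default
  dd if=/dev/zero of="$file" bs=1M count="$count" conv=fsync || return 1
  rm -f "$file"        # remove the scratch file afterwards
}
```

For example `write_test /mnt/ddtest.bin 1024`, where /mnt is a placeholder for wherever a partition of the NVMe disk is mounted. Running it once per VMD setting gives directly comparable numbers.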

Sorry, I didn’t notice before that you suggested upgrading because of the partclone version. I’m using 0.2.89 too (FOG 1.4.4), so I’m going to get the latest version and try again.

Thank you

-

I upgraded to 1.5.9 and see the same behavior: fast with VMD on and slow with VMD off.

I don’t know if this helps, but after some testing, the write speed looks good and the slowness seems to occur only when copying from the server, even though the network bandwidth also looks good when tested.

One thing the pictures don’t show is that, with VMD off, rsync freezes for a while (sometimes a few minutes) before starting or at the beginning of the copy, but not with VMD on. I tried copying with scp and it hangs too. Locally, rsync, cp, and dd from /dev/zero all work well.
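To put numbers on the freeze, the local and the server-side copies can be timed the same way. A minimal sketch: `timed` is a hypothetical helper with one-second resolution (from `date +%s`), which is coarse but enough to show a stall of several minutes.

```shell
# Hypothetical helper: run a command, then report on stderr how many whole
# seconds it took, while preserving the command's own exit status.
timed() {
  start=$(date +%s)
  "$@"
  rc=$?
  end=$(date +%s)
  echo "elapsed: $((end - start))s (exit $rc): $*" >&2
  return "$rc"
}
```

For example, compare `timed dd if=/dev/zero of=/mnt/zero.bin bs=1M count=1024` with `timed rsync -a user@fog-server:/path/to/file /mnt/`, where the host name and paths are placeholders. A large gap only on the server-side run, in one VMD mode, would match the behavior described above.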

-

I can report that both using the USB3 key method and enabling VMD worked for us as well. Great finds!

-

@diegotiranya said in HP ProBook 640 G8 imaging extremely slowly:

Same problem here, but solved (I think). Same model, the same extremely slow cloning performance with the 5.10.12-e1000e-3.8.7 kernel, and the same workaround with a USB device connected (a pendrive works, but not other devices such as a mouse). The solution for me was enabling “Storage Controller for VMD” under the “System Settings” menu.

I still have to test other laptops (I’ve only got one so far), but I tried twice and it looks to be working as expected (5-6 GB/s while enabled, 45 MB/s while disabled).

Congrats, and thank you very, very, very much for FOG.

Hi!

Just for information: I had the same problem on the same model (very, very slow speed) and fixed it with this tip!

Many, MANY thanks for that.

-

@jonathan-cool Fun fact: if I check this option, the deploy speed is “normal”, BUT after deployment the result is a beautiful BSOD: “INACCESSIBLE BOOT DEVICE”.

If I go back to the BIOS and uncheck this feature, Sysprep works…

So… slow speed or BSOD? The choice is hard!
