Read ERROR: No Such File or Directory

-

FOG: 1.5.10

OS: Debian 10 Buster

Kernel version: 5.15.93

Hey all,

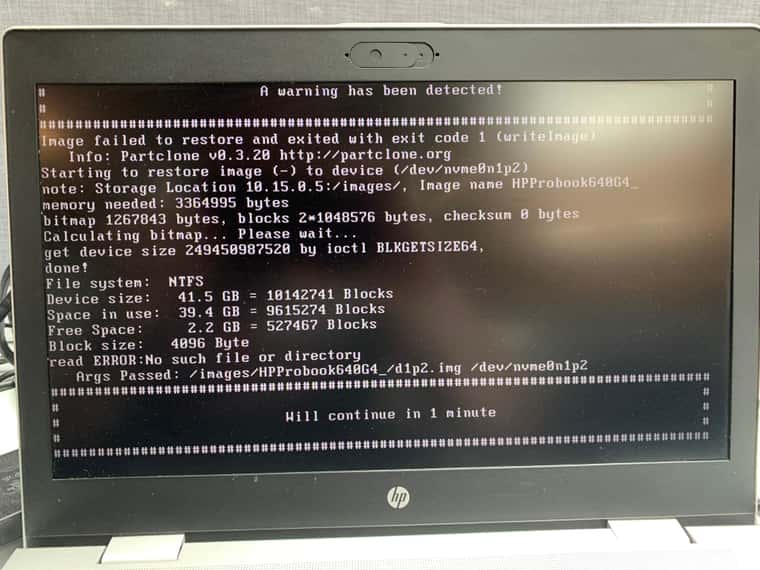

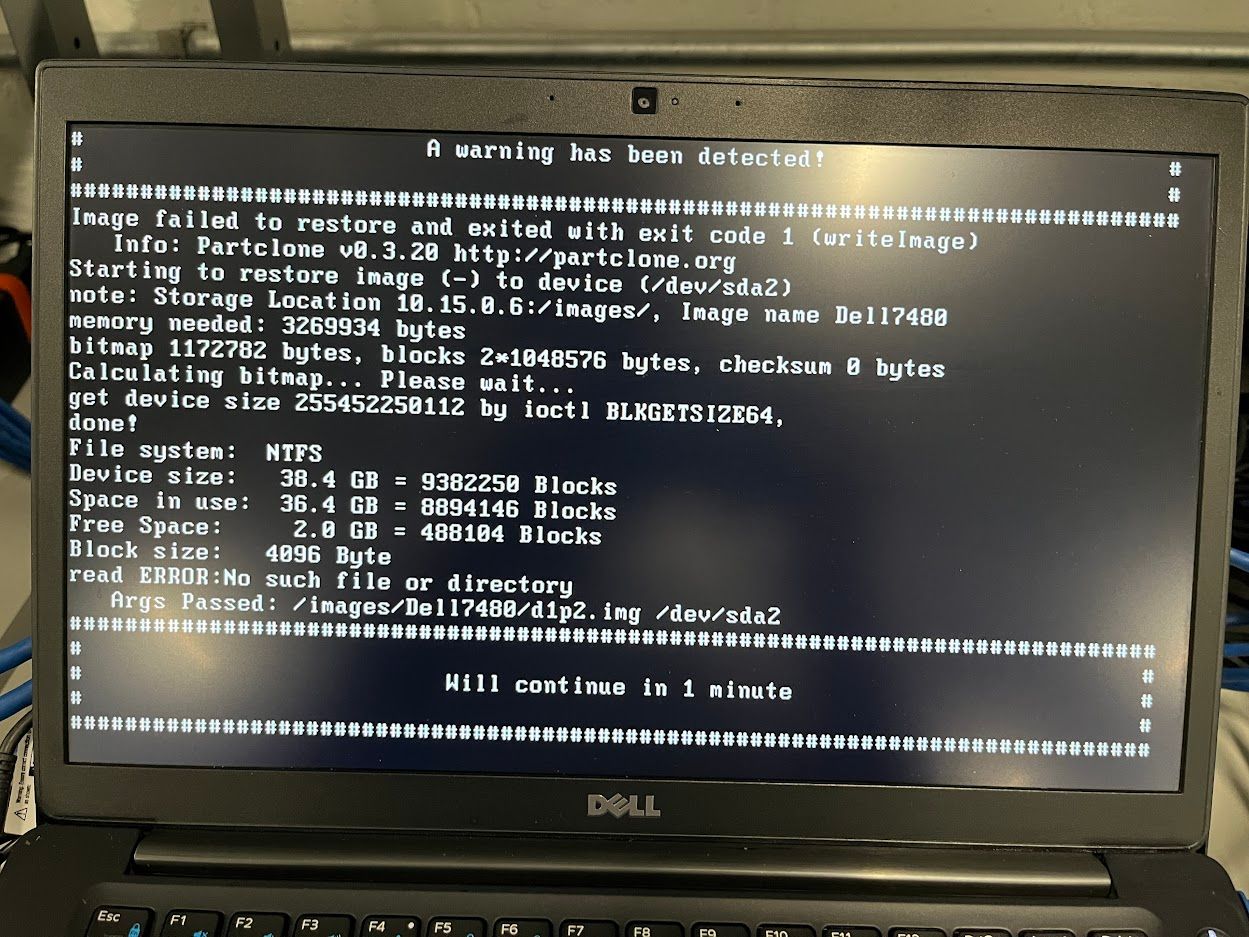

I’ve been trying to resolve this issue for the past week or so and I’m kinda hitting a wall here. On one of my additional storage nodes, I consistently (but not always) get the following error screen when deploying:

I have resolved this issue in the past by ensuring that FOG OS is up to date and that all of the kernels are the same version, but in this case the errors still persist occasionally. On my most recent deployment failure, I also ran an `md5sum` checksum on `d1p1.img`, cross-referencing the image file on the Master node against the one on the problematic node, and confirmed that the checksum outputs match.

I have pleaded with it, bargained with it, offered to buy it coffee, and now I’m looking for suggestions as to what else to try!

Thanks in advance,

-

@danieln This appears to be a warning and not really a true “problem” issue.

Is the machine unable to boot? We typically see this due to the zipped nature of the image files being decompressed and the zip decoding ending before the file has been properly closed. Not sure we’ve been able to fix this particular issue, but we have seen it, and it seems the image itself is fine after the 1 minute “hold”.
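One way to probe the truncated-stream theory is to test whether the compressed image decodes cleanly on the node itself. The sketch below is only an illustration: `check_stream` is a hypothetical helper name, and it assumes the image was captured with gzip or zstd compression (it picks the tool from the file's magic bytes).

```shell
#!/bin/bash
# Hypothetical helper (not part of FOG): test whether a compressed image
# file decodes cleanly from start to end.
# Assumption: the image is a plain gzip or zstd stream.
check_stream() {
    local img=$1 magic
    # Read the first four bytes as hex to identify the compression format.
    magic=$(head -c 4 "$img" | od -An -tx1 | tr -d ' \n')
    case "$magic" in
        1f8b*)    gzip -t "$img" 2>/dev/null ;;     # gzip magic: 1f 8b
        28b52ffd) zstd -t -q "$img" 2>/dev/null ;;  # zstd magic: 28 b5 2f fd
        *)        echo "unknown format: $img" >&2; return 2 ;;
    esac
}
```

Run on the storage node as, for example, `check_stream /images/Dell7480/d1p2.img && echo OK`. A non-zero exit would suggest the copy on that node does not decode to the end, which points at the file rather than at the client.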

-

@Tom-Elliott Thanks for the reply. This is helpful info!

Both machines (storage node and target host) are able to boot, and the target machine usually starts imaging just fine, but after a few minutes of running it’ll throw this warning and reboot after one minute. I have a master node + 4 additional storage nodes running our imaging operation, so whenever it reboots and we tell it to deploy again it usually just connects to another node to finish imaging, but this one particular storage node seems to not want to image computers for some reason.

Should I maybe change the compression algorithm for the image? It’s odd that the other 3 nodes and the master are able to deploy it. Would an erase and reinstall of FOG on the node be something worth trying?

-

Just bumping this with an update to see if anyone else has any ideas:

I decided to cut my losses and completely erase the storage node, reinstall Debian + FOG, and set it up as a brand new additional storage node. I’m still getting the same issue.

I haven’t been able to run tests on any other image than the one pictured on this screen (Dell7480), but every time this machine has booted into the FOG network and gotten this additional storage node assigned to it to deploy, I get this screen and it reboots. I have even tried rebooting the same machine until I get this additional storage node and it still fails.

I have also tried running a checksum on the `d1p1.img` file on the master node and the storage node and they appear to be identical:

Image on Master Node:

```
root@2919-fog-master:~# md5sum /images/Dell7480/d1p1.img
b4daa9d9f1282416511939f801a41e2c /images/Dell7480/d1p1.img
```

Image on Storage Node:

```
root@fog-node-4:~# md5sum /images/Dell7480/d1p1.img
b4daa9d9f1282416511939f801a41e2c /images/Dell7480/d1p1.img
```

What’s also interesting about this to me is that the error screen seems to suggest that it’s `d1p2.img` that is missing/corrupted and not `d1p1.img`. I’m not sure how these file names play into the full picture of deployment, but could it actually be `d1p2` that’s the issue here? If so, what is that?

Additionally, according to the FOG Replication log, they all seem to be replicating to the nodes as well without any issue:

```
[07-06-23 10:33:13 am] * Found Image to transfer to 4 nodes
[07-06-23 10:33:13 am] | Image Name: ! Dell 7480
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1.fixed_size_partitions (Node 1)
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1.mbr (Node 1)
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1.minimum.partitions (Node 1)
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1.original.fstypes (Node 1)
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1.original.swapuuids (Node 1)
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1.partitions (Node 1)
[07-06-23 10:33:14 am] # ! Dell 7480: No need to sync d1p1.img (Node 1)
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1p2.img (Node 1)
[07-06-23 10:33:15 am] * All files synced for this item.
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1.fixed_size_partitions (Node 2)
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1.mbr (Node 2)
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1.minimum.partitions (Node 2)
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1.original.fstypes (Node 2)
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1.original.swapuuids (Node 2)
[07-06-23 10:33:15 am] # ! Dell 7480: No need to sync d1.partitions (Node 2)
[07-06-23 10:33:16 am] # ! Dell 7480: No need to sync d1p1.img (Node 2)
[07-06-23 10:33:16 am] # ! Dell 7480: No need to sync d1p2.img (Node 2)
[07-06-23 10:33:16 am] * All files synced for this item.
[07-06-23 10:33:17 am] # ! Dell 7480: No need to sync d1.fixed_size_partitions (Node 3)
[07-06-23 10:33:17 am] # ! Dell 7480: No need to sync d1.mbr (Node 3)
[07-06-23 10:33:17 am] # ! Dell 7480: No need to sync d1.minimum.partitions (Node 3)
[07-06-23 10:33:17 am] # ! Dell 7480: No need to sync d1.original.fstypes (Node 3)
[07-06-23 10:33:17 am] # ! Dell 7480: No need to sync d1.original.swapuuids (Node 3)
[07-06-23 10:33:17 am] # ! Dell 7480: No need to sync d1.partitions (Node 3)
[07-06-23 10:33:18 am] # ! Dell 7480: No need to sync d1p1.img (Node 3)
[07-06-23 10:33:18 am] # ! Dell 7480: No need to sync d1p2.img (Node 3)
[07-06-23 10:33:18 am] * All files synced for this item.
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1.fixed_size_partitions (Node 4)
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1.mbr (Node 4)
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1.minimum.partitions (Node 4)
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1.original.fstypes (Node 4)
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1.original.swapuuids (Node 4)
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1.partitions (Node 4)
[07-06-23 10:33:19 am] # ! Dell 7480: No need to sync d1p1.img (Node 4)
[07-06-23 10:33:20 am] # ! Dell 7480: No need to sync d1p2.img (Node 4)
[07-06-23 10:33:20 am] * All files synced for this item.
```

Again, this image deploys off of the other three storage nodes just fine with no warnings or errors. We purchased this additional storage node (fog-node-4) for imaging overflow, so it isn’t absolutely necessary to have it functional and we’re not in dire straits here. But we’d really like to get it running and figure this issue out if we can.
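Since the error screen names `d1p2.img` while only `d1p1.img` was checksummed above, it may be worth comparing every file in the image directory rather than a single one. A minimal sketch, assuming `md5sum` and `diff` are available; the function names `manifest` and `compare_dirs` are made up for illustration:

```shell
#!/bin/bash
# Hypothetical helpers (names are illustrative, not part of FOG).

# Print one md5sum line for every regular file directly inside a directory.
manifest() {
    (
        cd "$1" || exit 1
        for f in *; do
            if [ -f "$f" ]; then md5sum "$f"; fi
        done
    )
}

# Compare the manifests of two directories.
# Prints the differing lines and returns non-zero if anything differs.
compare_dirs() {
    local a b rc
    a=$(mktemp) || return 2
    b=$(mktemp) || return 2
    manifest "$1" > "$a"
    manifest "$2" > "$b"
    diff "$a" "$b"
    rc=$?
    rm -f "$a" "$b"
    return "$rc"
}
```

`compare_dirs` works on two local paths, so for a remote node one option is to save the master's `manifest /images/Dell7480` output to a file, produce the same listing on the node over ssh, and `diff` the two (sorting both by file name first in case the glob order differs between machines).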

Please let me know if all of this makes sense and if you have any questions for me that would help our team figure this out.

Thanks very much for your time and insight in advance!

-

Hi all,

Bumping this with some new findings, some custom code I’ve written to try to mitigate and prevent this issue from happening, and another request from the community for some support on this issue:

I have built a custom checksum script that walks every directory in `/images` on the Master node, checksums each `*.img` file, and compares it with the same file on each of my nodes. Any files whose checksums do not match are printed as a list so I can manually remove them and let the Master node replicate them again.

For reference (and the curious), here’s the script:

```bash
#!/bin/bash

# List of nodes to check
NODES=("10.15.0.3" "10.15.0.4" "10.15.0.5" "10.15.0.6")

# Create temporary files for missing and mismatched files
temp_missing=$(mktemp)
temp_mismatched=$(mktemp)

# Function to handle the comparison for a single node
check_node() {
    local node=$1
    local img=$2
    local master_checksum=$3
    local node_checksum

    echo "Checking $img on node $node..."
    node_checksum=$(ssh -oStrictHostKeyChecking=no -l root "$node" "xxhsum '$img'" 2>/dev/null | awk '{print $1}')

    # An empty result means the file is missing (or the ssh call itself failed)
    if [ -z "$node_checksum" ]; then
        echo "File $img does not exist on node $node!"
        echo "$img on node $node" >> "$temp_missing"
    elif [ "$master_checksum" != "$node_checksum" ]; then
        echo "Checksums do not match for $img on node $node!"
        echo "Master: $master_checksum"
        echo "Node: $node_checksum"
        echo "$img on node $node" >> "$temp_mismatched"
    else
        echo "Checksums match for $img on node $node."
    fi
}

# Get a list of directories in /images
for dir in /images/*/ ; do
    # Skip certain directories
    if [[ "$dir" == "/images/dev/" ]] || [[ "$dir" == "/images/postdownloadscripts/" ]]; then
        continue
    fi
    echo "Processing directory: $dir"

    # Get a list of image files in the directory
    for img in "$dir"*.img; do
        # Skip the literal pattern when a directory has no .img files
        [ -e "$img" ] || continue
        echo "Processing image file: $img"

        # Calculate the checksum on the master
        master_checksum=$(xxhsum "$img" | awk '{print $1}')
        echo "Checksum for $img on master: $master_checksum"

        # Check the file on each node in parallel, then wait for all of them
        for node in "${NODES[@]}"; do
            check_node "$node" "$img" "$master_checksum" &
        done
        wait
    done
done

# Read results from temporary files
mapfile -t missing_files < "$temp_missing"
mapfile -t mismatched_files < "$temp_mismatched"

# Print results
if [ ${#missing_files[@]} -ne 0 ]; then
    echo "Missing files:"
    for file in "${missing_files[@]}"; do
        echo "$file"
    done
else
    echo "No missing files found."
fi

if [ ${#mismatched_files[@]} -ne 0 ]; then
    echo "Mismatched files:"
    for file in "${mismatched_files[@]}"; do
        echo "$file"
    done
else
    echo "No mismatched files found."
fi

# Clean up temporary files
rm "$temp_missing" "$temp_mismatched"
```

So far, the biggest offender here is the node 10.15.0.6 (as seen in the original screenshot post). The master (only sometimes) does not seem to replicate to this node correctly. After I run this checksum script, I’ll ssh into the node, delete the mismatched img files, and wait for replication, which completes with no issues according to the FOG replicator log. Afterwards, though, the checksum from the Master still never matches the image file on this node, and imaging fails when trying to deploy from this node.

Could this be a FOG version conflict? They’re all on 1.5.10, so I’m not sure what’s going on here. There seems to be something, maybe with the compression algorithm, on this one node that causes it to sometimes not copy the .img files over correctly. Also, it seems like it’s almost always `d1p2.img` (which is usually the main data partition).

Any ideas here why one particular node wouldn’t (sometimes) copy the img files over correctly?
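A whole-file checksum only says that two copies differ, not where. To narrow that down, something like the following sketch could checksum the file in fixed-size chunks on both machines so the outputs can be diffed. `chunk_sums` is a hypothetical helper, and it assumes GNU `stat`, `dd`, and `md5sum` as found on Debian:

```shell
#!/bin/bash
# Hypothetical helper: print "<chunk index> <md5>" for each chunk of a file.
# Arguments: file path, chunk size in MiB (default 256).
chunk_sums() {
    local file=$1 chunk_mb=${2:-256} size chunk i sum
    size=$(stat -c %s "$file") || return 1
    chunk=$((chunk_mb * 1024 * 1024))
    i=0
    while [ $((i * chunk)) -lt "$size" ]; do
        # Read chunk number i in 1 MiB blocks and checksum just that slice.
        sum=$(dd if="$file" bs=1M skip=$((i * chunk_mb)) count="$chunk_mb" 2>/dev/null \
              | md5sum | awk '{print $1}')
        echo "$i $sum"
        i=$((i + 1))
    done
}
```

Running this on the master and on the node against the same image and diffing the two listings would show which chunks differ. Whether the damage lands in the same place after each re-replication or moves around could be a useful clue.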

Thanks again in advance!

-

@danieln The checksums are at the file level.

This would seem more likely to indicate that the node that is consistently having issues might have a failing HD?
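A rough way to test that suspicion before swapping hardware is a write and read-back check on the node's image disk. This is only a sketch under assumptions: `readback_test` is a made-up helper name, and the target directory must have enough free space for the test file.

```shell
#!/bin/bash
# Hypothetical helper: write random data into a directory, read it back,
# and compare checksums. Arguments: directory, size in MiB (default 64).
readback_test() {
    local dir=$1 size_mb=${2:-64} f expected actual
    f=$(mktemp "$dir/readback.XXXXXX") || return 2
    # Checksum the data while it is being written, so "expected" is computed
    # from the stream itself and not from what landed on disk.
    expected=$(head -c "$((size_mb * 1024 * 1024))" /dev/urandom \
               | tee "$f" | md5sum | awk '{print $1}')
    sync
    # Caveat: this read may be served from the page cache instead of the
    # disk. For a stricter test, use a size larger than RAM or drop caches
    # as root first (echo 3 > /proc/sys/vm/drop_caches).
    actual=$(md5sum "$f" | awk '{print $1}')
    rm -f "$f"
    [ "$expected" = "$actual" ]
}
```

For example, `readback_test /images 4096 && echo OK` on the suspect node. A mismatch would back up the bad-disk theory, though a pass does not rule it out. If smartmontools is installed, `smartctl -H /dev/sda` (device name assumed) also reports the drive's own health assessment.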

-

@Tom-Elliott Yeah, that’s about the only other thing I can think of too. It’s a brand new Dell PowerEdge Server with a new HD though, but maybe the HD is just a lemon. I’ll swap out the HD and reinstall FOG and see if that works.

Thanks again for all your input!

-

Sebastian Roth has marked this topic as solved.