Selective mysql export/import

-

Now that I have two FOG servers, I set up replication from the primary to the secondary. My secondary is not set up for DHCP, but I use it for imaging across networks with the USB boot method, loading ipxe with a local file instead of via PXE boot. This requires a slightly different config from the primary. which has DHCP enabled and serves pxe on an offline network. I believe when I exported the sql fog.db from the primary to secondary it took all the settings with it, and I had to go back to the secondary to reconfigure it for my custom set up again.

So my question is: How can I only export Hosts and Images?

(For the actual image files in /images, I have rsync set up.)

-

@brakcounty from the web ui you should be able to export and import from a csv file. Is that what you are asking or do you need a continuous sync?

-

@george1421 A continuous sync. I want changes made to the Hosts, Images, and groups db (if possible) replicated to the secondary FOG server.

-

@brakcounty

I believe I found it:- Export image and host list on primary server:

sudo mysqldump fog images > fog_images.sqlsudo mysqldump fog hosts > fog_hosts.sql- Import image and host list on secondary server:

sudo mysql -D fog < /mnt/fog_images.sqlsudo mysql -D fog < /mnt/fog_hosts.sqlKeep in mind that the paths shown above are unique to my set up and method for transfer of the sql files. Perhaps there’s a way to dump two tables in one command. But I just figured this out seconds ago lol.

-

@brakcounty While I’m not sure this is the right approach (dumping and then importing the tables) there is a few things to make this a bit more compact with this command

mysqldump -h<hostname> -u<username> -p <databasename> <table1> <table2> <table3> --single-transaction --no-create-info > dumpfile.sqlNow you could take this a bit more with creating a user account that is configured for remote access to mysql much like how a storage node accesses a master node over the network. Then you could run the mysqldump command on the remote FOG server and reach back to the master FOG server to dump its tables. With a this command in <pseudo > form.

mysqldump -h masterfog.domain.com -u fogsql -p password fog hosts images --single-transaction --no-create-info > /tmp/fogexport.sqlThen turn around on the same remote fog server and run the import command:

mysql -u fogsql -p passwprd fog < /tmp/fogexport.sql -

@george1421 Ah I see. Run the dump from the secondary FOG server without having to dump on the primary to a share, mount the share on the secondary, then import. I currently don’t have creds set on mysql on either server. I’ll look into setting creds then try to run mysql -h<hostname> to test.

-

@brakcounty On your master FOG server, look in FOG Configuration -> FOG Settings I think there is a FOG Storage node user account there. The value should exist. I’m not 100% sure at the moment, but try to use those credentials from the remote FOG server to connect back to the master fog server.

If that works then you can automate the process by setting up a cron job at the remote fog server to automate the export and import on a timed basis.

-

@george1421 Those creds worked, but I was prompted for the password. Is there a way to put the password in-line with the command so it runs w/o interaction?

Nevermind found it here

-

After setting up a script and cronjob to pull the tables from the primary fog db and import into the secondary, the import commands are throwing these errors:

ERROR 1062 (23000) at line 23: Duplicate entry '22' for key 'PRIMARY' ERROR 1062 (23000) at line 23: Duplicate entry '395' for key 'PRIMARY'This is my import command:

mysql -D fog images < /root/fog_images.sql mysql -D fog hosts < /root/fog_hosts.sql -

@brakcounty Yeah that was a concern I had. You will probably need to go back and edit the sql dump command and remove the flag on it

-no-create-infoWith that command mysql dump only exports the data not the table definition. The issue you are running into its trying to import just the data and the key field is getting in the way. I was trying to avoid having to drop the table and recreate it, but it looks like that is what you will have to do.The issue is on the export and not your import so to speak with the cron job.

-

@george1421 Ok what about the --single-transaction? Should I leave that?

UPDATE: I left --single-transaction and removed --no-create-info, and no errors were thrown. But I do not see the “test_host” host that I created on the primary, on the secondary. I also created a “testsync” image (not a captured image, just created in the web ui), and that did get imported into the secondary.

Now that I’m thinking about it, I wonder if it matters if I just create a host in Web UI vs actually registering a host.

-

@brakcounty said in Selective mysql export/import:

But I do not see the “test_host” host that I created on the primary, on the secondary.

As I said I don’t think is the best approach here (I don’t know what the right approach is other than using the API because I’ve never needed to do this). But after you upload the new database files, restart apache and then php-fpm on the remote fog server to flush out the caching of the database that both apache and php-fpm does. Its possible you are not seeing it because apache doesn’t know the underlying records have changed in the database.

As for the single transaction you can drop that if you are dumping one table at a time. That flag has to do with how the tables are being dumped and timing.

-

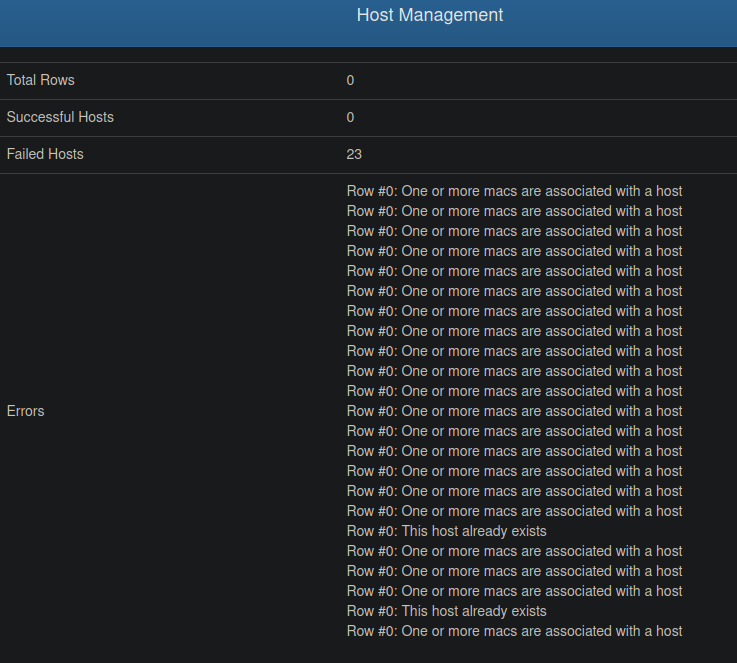

@george1421 I tried restarting apache2 and php, didnt work. I then tried the API export/import method and got this error on the secondary FOG server web UI:

-

@brakcounty I don’t have a good answer for you on either the api or table copy. I was doing some benchmarking with the mysql database and I think there is a way to see what tables FOG is using when I manually insert a host into the database. I have a feeling its the host table and <something> that needs to be exported and imported. Because there is hosts with certain data and another table to hold things like ad connection settings. I’m saying all of this from a crappy memory. i’m not in front of a fog server or my dev environment at the moment.

-

@brakcounty I have a few clues for you now. I was able to setup sql tracing on my FOG dev box. I turned on sql tracing and then from the web ui added a new host definition. This allowed me to see exactly what FOG was doing when it adds a new host.

SELECT COUNT(`hosts`.`hostName`) AS `total` FROM `hosts` WHERE `hosts`.`hostName`='billybob' AND `hosts`.`hostName` <> '0'; SELECT `hostMAC`.* FROM `hostMAC` WHERE `hmMAC`='00:00:01:00:00:01'; SELECT `hmHostID` FROM `hostMAC` WHERE `hostMAC`.`hmPending` IN ('0','') AND `hostMAC`.`hmMAC`='00:00:01:00:00:01' ORDER BY `hostMAC`.`hmID` ASC; SELECT COUNT(`hostMAC`.`hmID`) AS `total` FROM `hostMAC` WHERE `hmMAC` IN ('00:00:01:00:00:01') AND `hmPending` IN ('0','') LIMIT 1; SELECT `hostMAC`.* FROM `hostMAC` WHERE `hmMAC`='00:00:01:00:00:01'; INSERT INTO `hosts` (`hostName`,`hostDesc`,`hostIP`,`hostImage`,`hostBuilding`,`hostCreateDate`,`hostLastDeploy`,`hostCreateBy`,`hostUseAD`,`hostADDomain`,`hostADOU`,`hostADUser`,`hostADPass`,`hostADPassLegacy`,`hostProductKey`,`hostPrinterLevel`,`hostKernelArgs`,`hostKernel`,`hostDevice`,`hostInit`,`hostPending`,`hostPubKey`,`hostSecToken`,`hostSecTime`,`hostPingCode`,`hostExitBios`,`hostExitEfi`,`hostEnforce`) VALUES ('billybob','Some cool host that is invisible','','1','','2021-12-30 18:16:29','','fog','1','domain.com','','','','','11218-','','debug=Y','bzImage2','','','','','','','','sanboot','refind_efi','1') ON DUPLICATE KEY UPDATE `hostName`=VALUES(`hostName`),`hostDesc`=VALUES(`hostDesc`),`hostIP`=VALUES(`hostIP`),`hostImage`=VALUES(`hostImage`),`hostBuilding`=VALUES(`hostBuilding`),`hostCreateDate`=VALUES(`hostCreateDate`),`hostLastDeploy`=VALUES(`hostLastDeploy`),`hostCreateBy`=VALUES(`hostCreateBy`),`hostUseAD`=VALUES(`hostUseAD`),`hostADDomain`=VALUES(`hostADDomain`),`hostADOU`=VALUES(`hostADOU`),`hostADUser`=VALUES(`hostADUser`),`hostADPass`=VALUES(`hostADPass`),`hostADPassLegacy`=VALUES(`hostADPassLegacy`),`hostProductKey`=VALUES(`hostProductKey`),`hostPrinterLevel`=VALUES(`hostPrinterLevel`),`hostKernelArgs`=VALUES(`hostKernelArgs`),`hostKernel`=VALUES(`hostKernel`),`hostDevice`=VALUES(`hostDevice`),`hostInit`=VALUES(`hostInit`),`hostPending`=VALUES(`hostPending`),`hostPubKey`=VALUES(`hostPubKey`),`hostSecToken`=VALUES(`hostSecToken`),`hostSecTime`=VALUES(`hostSecTime`),`hostPingCode`=VALUES(`hostPingCode`),`hostExitBios`=VALUES(`hostExitBios`),`hostExitEfi`=VALUES(`hostExitEfi`),`hostEnforce`=VALUES(`hostEnforce`); INSERT INTO `history` (`hText`,`hUser`,`hTime`,`hIP`) VALUES ('[2021-12-30 18:16:29] Host ID: 10 NAME: billybob has been successfully updated.','fog','2021-12-30 18:16:29','192.168.112.187') ON DUPLICATE KEY UPDATE `hText`=VALUES(`hText`),`hUser`=VALUES(`hUser`),`hTime`=VALUES(`hTime`),`hIP`=VALUES(`hIP`); SELECT `hmMAC` FROM `hostMAC` WHERE `hostMAC`.`hmHostID`='10' AND `hostMAC`.`hmPrimary`='1' ORDER BY `hostMAC`.`hmID` ASC; SELECT `hmHostID` FROM `hostMAC` WHERE `hostMAC`.`hmMAC`='00:00:01:00:00:01' ORDER BY `hostMAC`.`hmID` ASC; SELECT `hmMAC` FROM `hostMAC` WHERE `hostMAC`.`hmHostID`='10' AND `hostMAC`.`hmPrimary`='1' ORDER BY `hostMAC`.`hmID` ASC; SELECT `hmID` FROM `hostMAC` WHERE `hostMAC`.`hmMAC` IN ('00:00:01:00:00:01') ORDER BY `hostMAC`.`hmID` ASC; SELECT `hmMAC` FROM `hostMAC` WHERE `hostMAC`.`hmHostID`='10' AND `hostMAC`.`hmPrimary`='1' ORDER BY `hostMAC`.`hmID` ASC; INSERT INTO `hostMAC` (`hmHostID`,`hmMAC`,`hmDesc`,`hmPending`,`hmPrimary`,`hmIgnoreClient`,`hmIgnoreImaging`) VALUES ('10','00:00:01:00:00:01','','','1','','') ON DUPLICATE KEY UPDATE `hmHostID`=VALUES(`hmHostID`),`hmMAC`=VALUES(`hmMAC`),`hmDesc`=VALUES(`hmDesc`),`hmPending`=VALUES(`hmPending`),`hmPrimary`=VALUES(`hmPrimary`),`hmIgnoreClient`=VALUES(`hmIgnoreClient`),`hmIgnoreImaging`=VALUES(`hmIgnoreImaging`); INSERT INTO `history` (`hText`,`hUser`,`hTime`,`hIP`) VALUES ('[2021-12-30 18:16:29] MACAddressAssociation ID: 10 has been successfully updated.','fog','2021-12-30 18:16:29','192.168.112.187') ON DUPLICATE KEY UPDATE `hText`=VALUES(`hText`),`hUser`=VALUES(`hUser`),`hTime`=VALUES(`hTime`),`hIP`=VALUES(`hIP`); SELECT `msModuleID` FROM `moduleStatusByHost` WHERE `msHostID` = '10' ORDER BY `msID` ASC; INSERT INTO `moduleStatusByHost` (`msHostID`,`msModuleID`,`msState`) VALUES ('10','4','1'),('10','7','1'),('10','1','1'),('10','3','1'),('10','5','1'),('10','8','1'),('10','9','1'),('10','13','1'),('10','10','1'),('10','6','1'),('10','11','1'),('10','2','1'),('10','12','1') ON DUPLICATE KEY UPDATE `msHostID`=VALUES(`msHostID`),`msModuleID`=VALUES(`msModuleID`),`msState`=VALUES(`msState`); SELECT `hosts`.*,`hostMAC`.*,`images`.*,`os`.*,`imagePartitionTypes`.*,`imageTypes`.*,`hostScreenSettings`.*,`hostAutoLogOut`.*,`inventory`.* FROM `hosts` LEFT OUTER JOIN `hostMAC` ON `hostMAC`.`hmHostID`=`hosts`.`hostID` LEFT OUTER JOIN `images` ON `images`.`imageID`=`hosts`.`hostImage` LEFT OUTER JOIN `os` ON `os`.`osID`=`images`.`imageOSID` LEFT OUTER JOIN `imagePartitionTypes` ON `imagePartitionTypes`.`imagePartitionTypeID`=`images`.`imagePartitionTypeID` LEFT OUTER JOIN `imageTypes` ON `imageTypes`.`imageTypeID`=`images`.`imageTypeID` LEFT OUTER JOIN `hostScreenSettings` ON `hostScreenSettings`.`hssHostID`=`hosts`.`hostID` LEFT OUTER JOIN `hostAutoLogOut` ON `hostAutoLogOut`.`haloHostID`=`hosts`.`hostID` LEFT OUTER JOIN `inventory` ON `inventory`.`iHostID`=`hosts`.`hostID` WHERE `hostID`='10' AND `hostMAC`.`hmPrimary` = '1';It looks like you need to pay attention to the hosts, hostMAC, moduleStatusByHost tables. All three of these tables have inserts when a new host is added to the web ui.

-

@george1421 That worked! I added the two additional tables to the export command and the “test” host was imported.