Multi drive image reversing disks.

-

@sebastian-roth The “golden” image was built in VMware with a C and D drive. These are labeled disk0 and disk1 respectively.

The two hosts I’ve deployed images to have been:

Dell XPS Workstation:

- T-force Z330 2tb m.2 nvme

- Crucial MX500 500gb sata ssd

HP Z4 G2 Workstation

- Team Group MP34 M.2 nvme

- Crucial MX500 500gb sata ssd

On both systems, the C drive is being deployed to the sata SSD and the D drive is going to the m.2 drive. This is the opposite of the intended order. It seems that FOG is determining that the sata drive is drive0 no matter which ports the sata drive and m.2 drive are connected to.

If there is a way to capture the image so that it deploys disk0 to the m.2 drive, that would be the most ideal. If not, am I able to edit the backup files on the FOG server to reverse the order?

-

@samdoan said in Multi drive image reversing disks.:

On both systems, the C drive is being deployed to the sata SSD and the D drive is going to the m.2 drive. This is the opposite of the intended order. It seems that FOG is determining that the sata drive is drive0 no matter which ports the sata drive and m.2 drive are connected to.

There is a simple answer and a more complete one. I want to take you through the long way to get your answer so you understand why and how to fix it in the future.

What I want you to do is schedule a debug deployment. No worries since we are not really going to deploy anything, just get to the FOS Linux console.

For one of these computers where the drives are crossed, schedule a deployment task, but before you hit the schedule task button, tick the debug check box. Now PXE boot the target computer. After several screens of text, which you clear with the Enter key, you will be dropped to the FOS Linux command prompt. At this command prompt key in

lsblk

Note the Linux name of the disk you want to be the primary or OS disk. You probably want the one with nvme in the name. Take that disk name, make sure it's prefixed with /dev/ (so /dev/<disk name>), and go back to the FOG UI. Locate the host definition for that troubled target computer. Locate the field called… I think it's primary disk. It will be <something> disk. Save the disk name there.

Now in the web UI clear the deployment task, schedule a new (non-debug) deployment task, then reboot the target computer. It should now image correctly.

So what do you do if you have 50 of these computers? Well use a FOG settings group. Place all of the computers of this model in a group. Then set the primary disk for all of these computers to what you just discovered.
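As a rough sketch of the "find the nvme disk name and prefix it with /dev/" step, the helper below picks the first NVMe whole disk out of `NAME TYPE` pairs. The function name `pick_nvme_disk` is made up for illustration, and the sample input is modelled on the two disks reported later in this thread; on a real FOS console the input would presumably come from `lsblk -dno NAME,TYPE` (util-linux options; whether your FOS build accepts them is an assumption).

```shell
#!/bin/sh
# Hypothetical helper: print the first NVMe whole disk as a /dev/ path.
# Reads "NAME TYPE" pairs on stdin, e.g. from: lsblk -dno NAME,TYPE
pick_nvme_disk() {
    awk '$2 == "disk" && $1 ~ /^nvme/ { print "/dev/" $1; exit }'
}

# Sample input modelled on the disks in this thread (not live lsblk output).
printf '%s\n' 'sda disk' 'nvme0n1 disk' | pick_nvme_disk
# prints: /dev/nvme0n1
```

The printed path is the kind of value that would go into the host's primary disk field.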

-

@samdoan said in Multi drive image reversing disks.:

Team Group MP34 M.2 nvme

This one may take a bit more debugging. How is this team group created? More appropriately, how is this team group presented to Linux? What I would do here is the same debug deploy as before. In this case go ahead with the

lsblk

I would like to see the results of this.

In this case I’ll tell you a secret debugging tip.

- At the FOS Linux command prompt key in

ip a s

to get the target computer’s IP address.

- Give the root user a password with

passwd

Make it something simple like hello. No worries, this setting will not stay; all changes will be lost on a reboot.

- Now, using the IP address, remote to the target computer using putty from a Windows computer or ssh from a Linux computer. Log in as root with the password you just set.

- Now run the

lsblk

command. The putty or ssh console will give you a chance to copy and paste the answer into this forum.
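If the `ip a s` output is hard to read on the console, a sketch like the following pulls out just the address to type into putty or ssh. `first_ipv4` is a hypothetical helper, the sample lines are made up, and it assumes the one-line layout produced by `ip -o -4 addr show` (which your FOS build may or may not support).

```shell
#!/bin/sh
# Hypothetical helper: print the first non-loopback IPv4 address.
# Expects one line per address, e.g. from: ip -o -4 addr show
first_ipv4() {
    awk '$2 != "lo" { split($4, a, "/"); print a[1]; exit }'
}

# Made-up sample lines in the `ip -o -4 addr show` layout.
printf '%s\n' \
    '1: lo    inet 127.0.0.1/8 scope host lo' \
    '2: eth0    inet 192.168.1.50/24 brd 192.168.1.255 scope global eth0' \
    | first_ipv4
# prints: 192.168.1.50
```

On the target you would run `ip -o -4 addr show | first_ipv4`, then connect from your workstation as root using the address it prints.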

-

@george1421 Thanks for the suggestion. I’m going to give this a shot.

-

@george1421 I followed your suggestion by finding the drive name for the nvme drive and setting that as the primary disk on the host. The drive name is /dev/nvme0n1.

However with the Host Primary Disk set to /dev/nvme0n1 or /dev/sda, I receive an error stating “Could not find any disks”

-

-

@samdoan said in Multi drive image reversing disks.:

On both systems, the C drive is being deployed to the sata SSD and the D drive is going to the m.2 drive. This is the opposite of the intended order. It seems that FOG is determining that the sata drive is drive0 no matter which ports the sata drive and m.2 drive are connected to.

From the output of the picture you posted we see that FOS enumerates your drives as

disks=/dev/nvme0n1 /dev/sda

(so NVMe first and SATA drive second). This is in line with what we see in the code, as FOS uses the simple Linux sort command, resulting in “…nvme…” before “…sd…” (code ref).

If there is a way to capture the image so that it deploys disk0 to the m.2 drive, that would be the most ideal. If not, am I able to edit the backup files on the FOG server to reverse the order?

I am not sure I understand what you mean. If you capture the image from a machine that has NVMe and SATA drive it will capture the drives in the same order as it would deploy them - see my comment above on the order.
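The ordering described above is easy to reproduce outside FOS. This is only an illustration of how plain `sort` treats the two device names, not the FOS code itself; `sorted_disks` is a made-up wrapper name.

```shell
#!/bin/sh
# Illustration only: plain `sort` puts the NVMe name ahead of the SATA name.
sorted_disks() {
    # LC_ALL=C forces byte-wise ordering, where "n" sorts before "s".
    LC_ALL=C sort
}

printf '%s\n' /dev/sda /dev/nvme0n1 | sorted_disks
# prints:
# /dev/nvme0n1
# /dev/sda
```

So regardless of which port each drive is on, a name-sorted list will place /dev/nvme0n1 first.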

If you want to mess with the order within the image you should be able to just rename all the files.

d1.* -> d2.* and vice versa.

-

@sebastian-roth said in Multi drive image reversing disks.:

I am not sure I understand what you mean. If you capture the image from a machine that has NVMe and SATA drive it will capture the drives in the same order as it would deploy them - see my comment above on the order.

If you want to mess with the order within the image you should be able to just rename all the files.

d1.* -> d2.* and vice versa.

The image was captured on a virtual machine which has the correct drive order. When deploying the image, the primary drive is defaulting to the SSD instead of the NVMe. It’s obvious now that this is the correct order based on how Linux enumerates disks; however, I do need these two disks switched around.

When using your first suggestion by defining the “Host Primary Disk,” FOG throws the error:

Could not find any disks (/bin/fog.download)

This occurs whether I define the “Host Primary Disk” as /dev/nvme0n1 or /dev/sda, which seems odd, so perhaps I have the incorrect syntax?

Lastly, I also did attempt renaming all of the files. I renamed all the d1 files to d2 and all the d2 files to d1. After this change, I receive an error when deploying that states:

Target partition size(17 MB) is smaller than source(214730 MB). Use option -C to disable size checking(Dangerous).

-

@george1421 I followed all of your steps and was able to define the “Host Primary Disk” to be the /dev/nvme0n1 disk, however it fails after deploying an image due to “Could not find any disks”

The host that I am deploying to has two disks: /dev/sda and /dev/nvme0n1. I also tried setting “Host Primary Disk” to /dev/sda but I received the same error “Could not find any disks.” This seems odd because when I leave the primary disk field blank, the image deploys to /dev/sda as the primary disk by default.

-

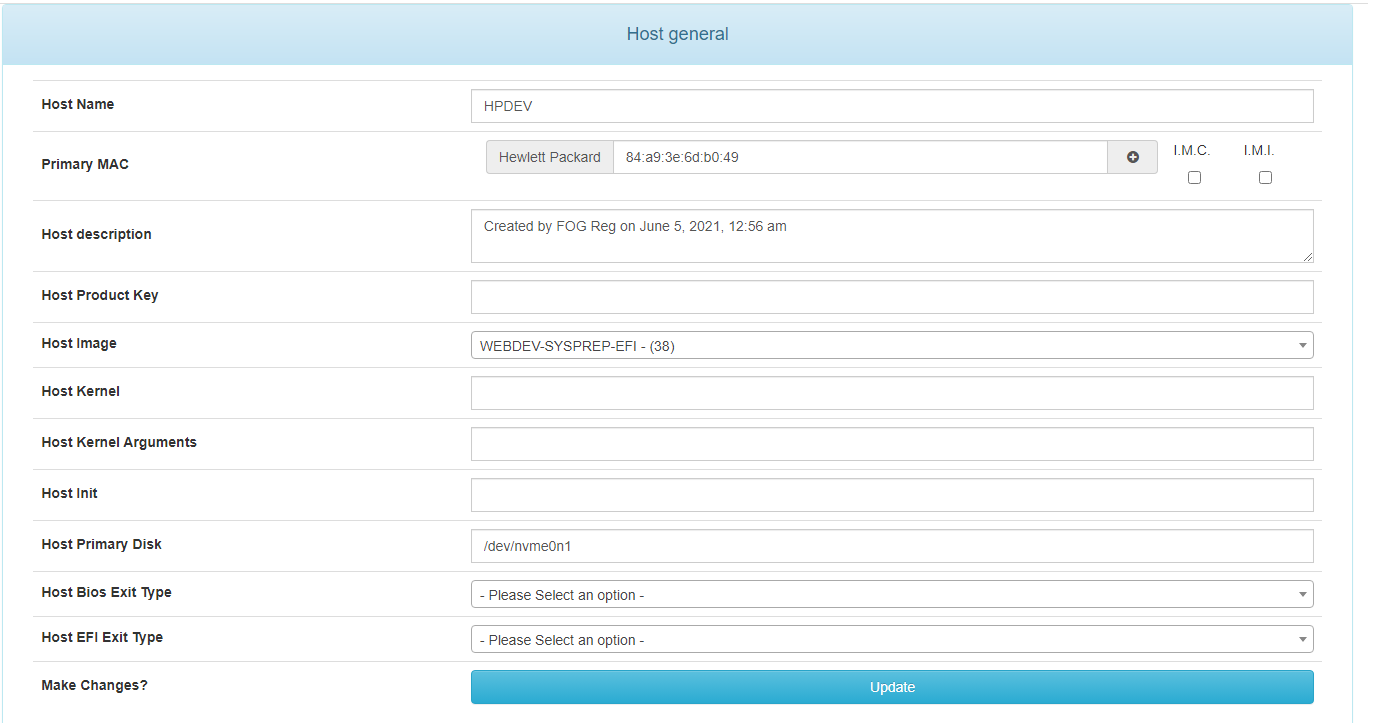

@samdoan Will you post a screen shot of the settings you have for this target host?

If they look right, then let’s set up a debug deploy to this computer again with the primary disk set. You will be dropped to a FOS Linux command prompt. I want you to key in these commands in order and take a clear, easy-to-read screenshot of the output.

lsblk

cat /proc/cmdline

I think maybe something isn’t entered right or the hardware is reacting strangely. If everything checks out you can run imaging in single-step mode by keying in

fog

and then pressing Enter at each breakpoint in the imaging program. I have a feeling there is an error just prior to the screenshot you just provided that will give us a bit better understanding of what is going on with this hardware.

-

@samdoan said in Multi drive image reversing disks.:

Lastly, I also did attempt renaming all of the files. I renamed all the d1 files to d2 and all the d2 files to d1.

Sorry, my explanation might have confused you when I told you to rename d1.*. Also rename the dXpY.img files, e.g. d1p3.img -> d2p3.img

-

@sebastian-roth No apology needed, I thought that was implied and that was exactly what I did. Every reference to d1 was renamed to d2 and I still encountered that error.

-

@george1421 said in Multi drive image reversing disks.:

@samdoan Will you post a screen shot of the settings you have for this target host?

If they look right, then let’s set up a debug deploy to this computer again with the primary disk set. You will be dropped to a FOS Linux command prompt. I want you to key in these commands in order and take a clear, easy-to-read screenshot of the output.

lsblk

cat /proc/cmdline

I think maybe something isn’t entered right or the hardware is reacting strangely. If everything checks out you can run imaging in single-step mode by keying in

fog

and then pressing Enter at each breakpoint in the imaging program. I have a feeling there is an error just prior to the screenshot you just provided that will give us a bit better understanding of what is going on with this hardware.

Here is a screenshot of the host settings:

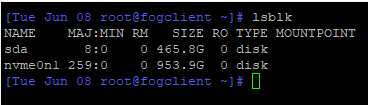

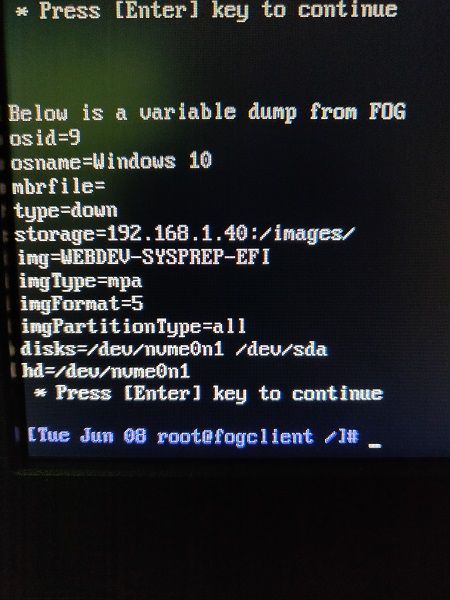

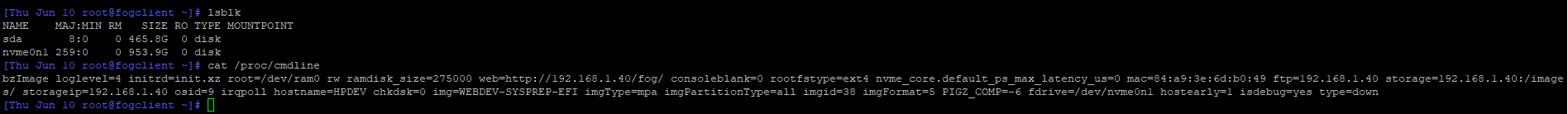

Here is the other requested screenshot:

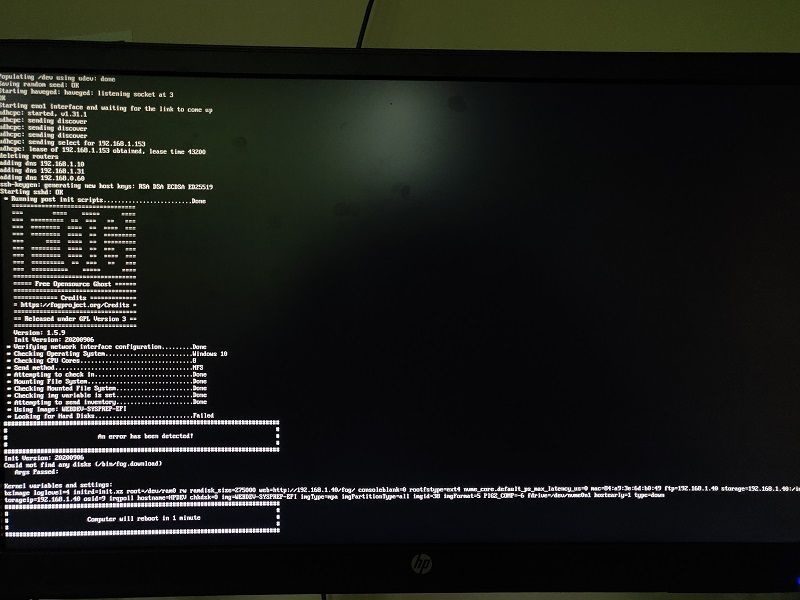

    [Thu Jun 10 root@fogclient ~]# lsblk
    NAME    MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    sda       8:0    0 465.8G  0 disk
    nvme0n1 259:0    0 953.9G  0 disk
    [Thu Jun 10 root@fogclient ~]# cat /proc/cmdline
    bzImage loglevel=4 initrd=init.xz root=/dev/ram0 rw ramdisk_size=275000 web=http://192.168.1.40/fog/ consoleblank=0 rootfstype=ext4 nvme_core.default_ps_max_latency_us=0 mac=84:a9:3e:6d:b0:49 ftp=192.168.1.40 storage=192.168.1.40:/images/ storageip=192.168.1.40 osid=9 irqpoll hostname=HPDEV chkdsk=0 img=WEBDEV-SYSPREP-EFI imgType=mpa imgPartitionType=all imgid=38 imgFormat=5 PIGZ_COMP=-6 fdrive=/dev/nvme0n1 hostearly=1 isdebug=yes type=down

It appears that it’s failing on the step “Looking for Hard Disks”

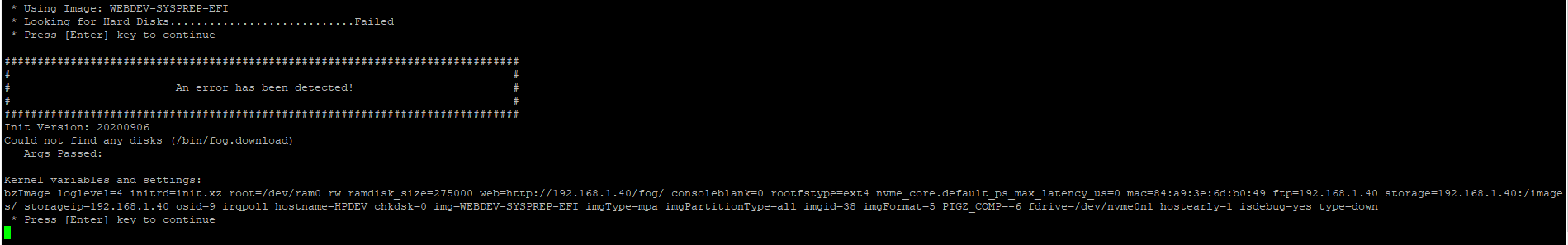

    * Using Image: WEBDEV-SYSPREP-EFI
    * Looking for Hard Disks............................Failed
    * Press [Enter] key to continue
    ##############################################################################
    #                                                                            #
    #                         An error has been detected!                        #
    #                                                                            #
    ##############################################################################
    Init Version: 20200906
    Could not find any disks (/bin/fog.download)
    Args Passed:
    Kernel variables and settings:
    bzImage loglevel=4 initrd=init.xz root=/dev/ram0 rw ramdisk_size=275000 web=http://192.168.1.40/fog/ consoleblank=0 rootfstype=ext4 nvme_core.default_ps_max_latency_us=0 mac=84:a9:3e:6d:b0:49 ftp=192.168.1.40 storage=192.168.1.40:/images/ storageip=192.168.1.40 osid=9 irqpoll hostname=HPDEV chkdsk=0 img=WEBDEV-SYSPREP-EFI imgType=mpa imgPartitionType=all imgid=38 imgFormat=5 PIGZ_COMP=-6 fdrive=/dev/nvme0n1 hostearly=1 isdebug=yes type=down
    * Press [Enter] key to continue

-

@samdoan said in Multi drive image reversing disks.:

Looking for Hard Disks

It’s probably failing in this section of the code: https://github.com/FOGProject/fos/blob/a46d5e9534715dad09fcfbc631ff681f4b42d208/Buildroot/board/FOG/FOS/rootfs_overlay/usr/share/fog/lib/funcs.sh#L1376

Everything connects correctly so this is not an OP misconfiguration (IMO)
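For anyone repeating this check, here is a small sketch for pulling a single setting out of the kernel command line instead of reading the whole string by eye. `cmdline_value` is a hypothetical helper, and the sample is a shortened copy of the command line posted above; reading `fdrive` as the value passed from Host Primary Disk is my interpretation of that output.

```shell
#!/bin/sh
# Hypothetical helper: print the value of one key=value kernel argument.
# Reads a kernel command line on stdin, e.g. from /proc/cmdline.
cmdline_value() {
    key=$1
    tr ' ' '\n' | sed -n "s/^${key}=//p"
}

# Shortened sample of the /proc/cmdline posted in this thread.
sample='bzImage loglevel=4 imgType=mpa fdrive=/dev/nvme0n1 isdebug=yes type=down'
printf '%s\n' "$sample" | cmdline_value fdrive    # prints: /dev/nvme0n1
printf '%s\n' "$sample" | cmdline_value imgType   # prints: mpa
```

On the FOS console the equivalent would be `cmdline_value fdrive < /proc/cmdline`.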

-

@samdoan said in Multi drive image reversing disks.:

Target partition size(17 MB) is smaller than source(214730 MB).

I would imagine this error can only happen if partition layout files (dX.*) and image files (dXpY.img) are being mixed up somehow.

About the error when setting “Host Primary Disk”: seems like there is an issue in the code when using Multiple Disk image type.

-

@sebastian-roth said in Multi drive image reversing disks.:

@samdoan said in Multi drive image reversing disks.:

Target partition size(17 MB) is smaller than source(214730 MB).

I would imagine this error can only happen if partition layout files (dX.*) and image files (dXpY.img) are being mixed up somehow.

About the error when setting “Host Primary Disk”: seems like there is an issue in the code when using Multiple Disk image type.

I’ve gone back and double and triple checked my process for re-naming the image files. I am renaming them accurately and still receive the error Target partition size(17 MB) is smaller than source(214730 MB) in part clone when attempting to reverse the two disks by renaming.

-

@sebastian-roth So I found that in addition to renaming dXpY.*, you also need to edit the dX.size files, as they also reference the disk number:

1:161061273600
2:214748364800

After making this change partclone successfully runs and is correctly deploying disk1 to /dev/sda. However, after deploying disk1, the process completes without deploying disk2 to /dev/nvme0n1. Is there something else I need to edit in order to tell the fog server that there’s another disk? How does fog server know that there are X number of disks in the image?
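Reading the size string quoted above as two `disk:bytes` entries (an interpretation; the thread does not spell out the file format), a quick sketch shows what the numbers mean. `describe_sizes` is a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: describe "disk:bytes" lines as whole GiB.
describe_sizes() {
    awk -F: '{ printf "disk %s: %d GiB\n", $1, $2 / 1073741824 }'
}

printf '%s\n' '1:161061273600' '2:214748364800' | describe_sizes
# prints:
# disk 1: 150 GiB
# disk 2: 200 GiB
```

Under that reading, the 200 GiB entry lines up with the roughly 214730 MB source partition named in the partclone error.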

-

@samdoan said in Multi drive image reversing disks.:

So I found in addition to renaming dXpY.*, you also need to edit the dX.size files as they also reference the disk number

Ahhh, good call. This part was added later because of the random enumeration when you have two NVMe drives. I didn’t have that in mind when I told you to simply rename the files. FOS is trying to use the size information to match disk size. So even if you rename the img and partition files, it still tries to match against the information found in the dX.size files.

Either edit or delete those dX.size files. If you delete the files FOS will skip the size matching and just do them in alphabetical order as mentioned before - nvme0n1 before sda.
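Pulling the renaming advice from this thread together, a minimal sketch of the swap could look like the following. Everything here is an assumption layered on the thread: the function name `swap_image_disks` is made up, the file patterns (`d1.*`, `d1pN.*`, `dX.size` holding `disk:bytes`) are inferred from the posts, and it should only ever be run against a copy of the image directory.

```shell
#!/bin/sh
# Hypothetical helper: swap disk 1 and disk 2 inside a COPY of an image directory.
# Assumes files are named d1.*, d1pN.*, d2.*, d2pN.* as described in this thread.
swap_image_disks() {
    dir=$1
    # Pass 1: move every d1 file aside under a temporary prefix.
    for f in "$dir"/d1.* "$dir"/d1p*; do
        [ -e "$f" ] || continue
        base=${f##*/}
        mv "$f" "$dir/tmp_${base#d1}"
    done
    # Pass 2: rename d2 files to d1.
    for f in "$dir"/d2.* "$dir"/d2p*; do
        [ -e "$f" ] || continue
        base=${f##*/}
        mv "$f" "$dir/d1${base#d2}"
    done
    # Pass 3: rename the parked files to d2.
    for f in "$dir"/tmp_*; do
        [ -e "$f" ] || continue
        base=${f##*/}
        mv "$f" "$dir/d2${base#tmp_}"
    done
    # Rewrite the leading disk number inside each size file to match its new name.
    for n in 1 2; do
        s="$dir/d$n.size"
        [ -f "$s" ] || continue
        sed "s/^[0-9]*:/$n:/" "$s" > "$s.new" && mv "$s.new" "$s"
    done
}
```

A call would look like `swap_image_disks /path/to/copy-of-image`. Deleting the dX.size files instead of rewriting them is the other option mentioned above; this sketch only covers the edit route.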

After making this change partclone successfully runs and is correctly deploying disk1 to /dev/sda. However, after deploying disk1, the process completes without deploying disk2 to /dev/nvme0n1. Is there something else I need to edit in order to tell the fog server that there’s another disk? How does fog server know that there are X number of disks in the image?

FOG enumerates drives/disks using the lsblk command when it boots up and should process both drives. Make sure Host Primary Disk is not set for this host.

-

@sebastian-roth said in Multi drive image reversing disks.:

After making this change partclone successfully runs and is correctly deploying disk1 to /dev/sda. However, after deploying disk1, the process completes without deploying disk2 to /dev/nvme0n1. Is there something else I need to edit in order to tell the fog server that there’s another disk? How does fog server know that there are X number of disks in the image?

FOG enumerates drives/disks using the lsblk command when it boots up and should process both drives. Make sure Host Primary Disk is not set for this host.

Renaming the disks does work. I know this because I captured a new, simple image and was able to reverse the order in which the disks are deployed.

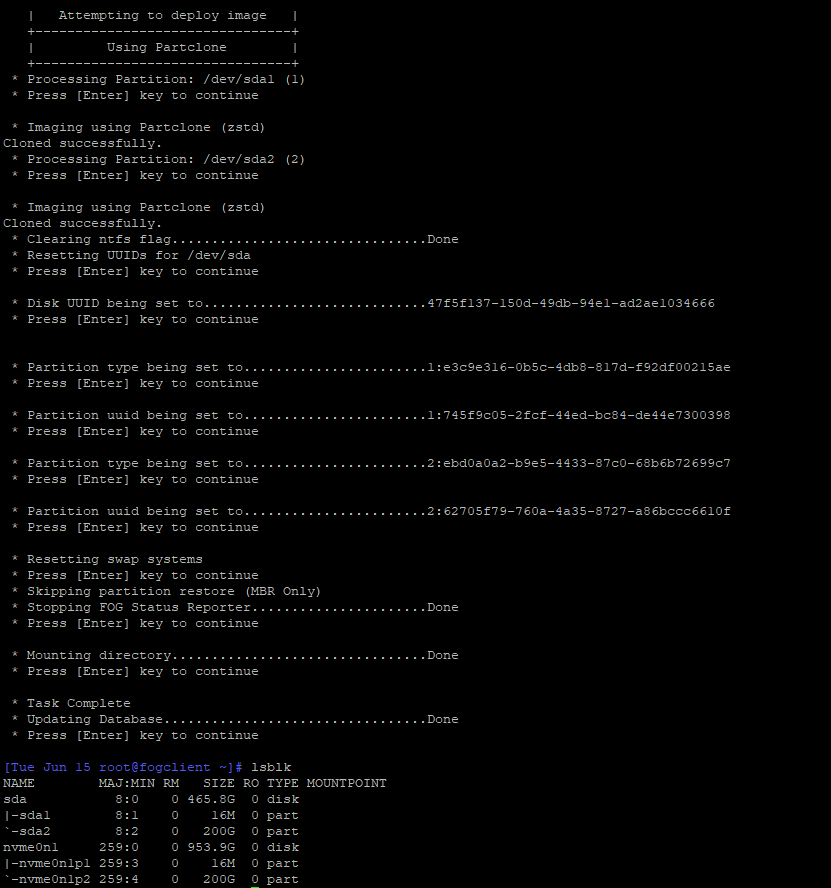

But when I try this on my real image, it’s deploying the same image to both physical disks which is weird. At the bottom you can see that /dev/sda and /dev/nvme0n1 have the same partition layout. This tells me that it’s deploying Disk1 to /dev/sda AND /dev/nvme0n1.

Debug deployment: you can see it process /dev/sda1 and /dev/sda2 but not /dev/nvme0n1p1 through /dev/nvme0n1p4.

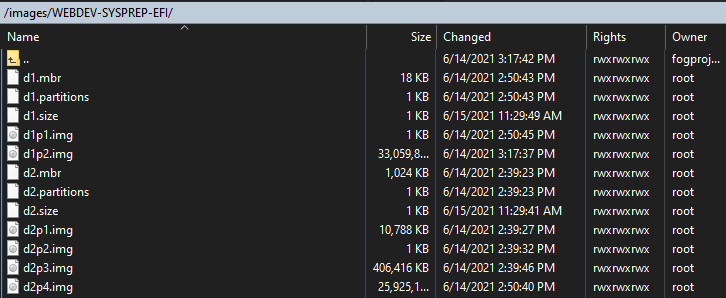

Here are the raw files in the capture:

-

@samdoan said in Multi drive image reversing disks.:

This tells me that it’s deploying Disk1 to /dev/sda AND /dev/nvme0n1

I don’t think this is the case! It’s just the partition layout that was deployed to /dev/nvme0n1 on an earlier run and it’s still like that because for some reason it only deploys one disk right now.

Please check your image and host settings again. Is the image set to All Disks? Also make sure Host Primary Disk is not set for the host you deploy to.