@george1421

> Now, with a browser, go to http://<fog_server_ip>/fog/service/ipxe/boot.php?mac=00:00:00:00:00:00 to display the text behind the iPXE menu. On that page, search for default. That is the section that is called when the timeout happens, and it boots from the local hard drive. By globally changing the exit modes for both BIOS and UEFI to exit, it should put the exit command in the iPXE menu script.

And it is indeed putting the exit command in the iPXE menu script:

choose --default fog.local --timeout 5000 target && goto ${target}

:fog.local

exit || goto MENU

I’ve experimented with the embedded script itself and found that if I replace

:netboot

chain tftp://${next-server}/default.ipxe ||

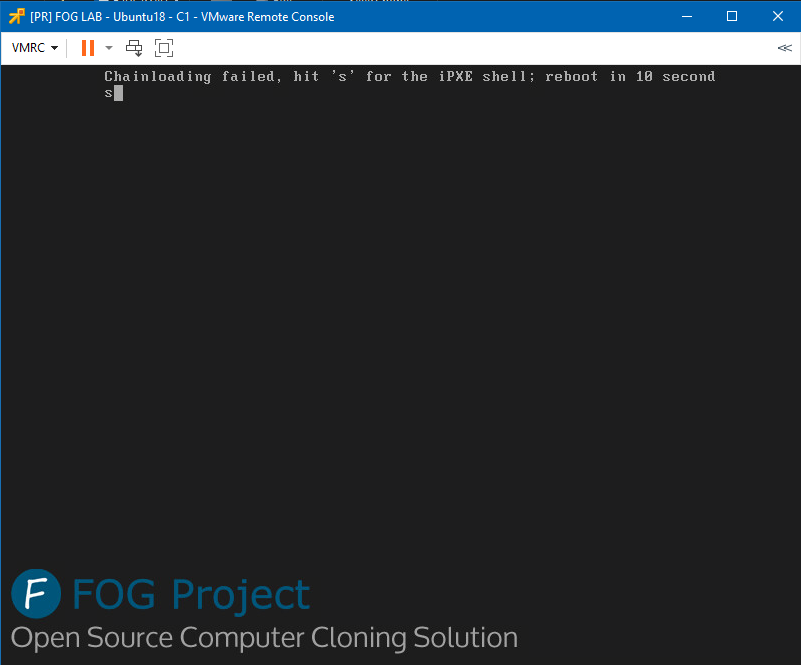

prompt --key s --timeout 10000 Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

with this

chain tftp://${next-server}/default.ipxe || echo Chainloading failed

and then try “Boot from Hard Drive” with the “EXIT” method, it WORKS! “Chainloading failed” is not echoed back to me. But if I write it like this

chain tftp://${next-server}/default.ipxe ||

echo Chainloading failed

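“Chainloading failed” is echoed back to me. So the issue is not with the chain command but with the syntax: || only connects commands on the same line. A line that ends in || merely ignores a failure of the command before it, and whenever chain returns, iPXE simply continues with the next line, whether chain succeeded or failed.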

Apparently

command || command

is not the same as

command ||

command
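The difference can be reproduced with a small Python model of iPXE’s command chaining. It is a simplified sketch, not iPXE code: it assumes that ||, && and ; only link commands on the same line, that an empty command after a trailing || succeeds, and that a line ending with a non-zero status aborts the script. run_line and run_script are hypothetical names, and the status dictionary stands in for real command results.

```python
# Simplified model (an assumption, not iPXE source code) of line-by-line script
# execution: "||", "&&" and ";" only connect commands on the SAME line.
TERMINATORS = {"||", "&&", ";"}


def run_line(line: str, status: dict[str, int], trace: list[str]) -> int:
    """Execute one line, append executed command names to trace, return its status."""
    rc, process = 0, True
    tokens = line.split()
    while True:
        # Take the tokens up to the next terminator (or the end of the line).
        end = next((i for i, t in enumerate(tokens) if t in TERMINATORS), len(tokens))
        command, rest = tokens[:end], tokens[end:]
        if process:
            # An empty command (e.g. after a trailing "||") is assumed to succeed.
            rc = status.get(command[0], 0) if command else 0
            if command:
                trace.append(command[0])
        if not rest:
            return rc
        process = {"||": rc != 0, "&&": rc == 0, ";": True}[rest[0]]
        tokens = rest[1:]


def run_script(lines: list[str], status: dict[str, int]) -> list[str]:
    """Run lines top to bottom; a line ending with a non-zero status stops the script."""
    trace: list[str] = []
    for line in lines:
        if run_line(line, status, trace) != 0:
            break
    return trace


SAME_LINE = ["chain tftp://${next-server}/default.ipxe || echo Chainloading failed"]
NEXT_LINE = ["chain tftp://${next-server}/default.ipxe ||", "echo Chainloading failed"]

# chain returns success (the menu's "exit" was used):
print(run_script(SAME_LINE, {"chain": 0}))  # ['chain']
print(run_script(NEXT_LINE, {"chain": 0}))  # ['chain', 'echo']
```

With {"chain": 1} both variants print ['chain', 'echo'], so the two forms only differ when chain returns successfully, which is exactly the “Boot from Hard Drive” case.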

So I kept the “prompt” command but restructured it like this:

:chainloadfailed

prompt --key s --timeout 10000 Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

:netboot

chain tftp://${next-server}/default.ipxe || goto chainloadfailed

And now it works! When I use EXIT by clicking “Boot from Hard Drive”, iPXE exits correctly. And if I rename default.ipxe on my server to something else (to simulate a failed chainload), the “Chainloading failed, hit ‘s’ (…)” message appears. So I guess the core of this issue is the syntax of the iPXE script, and the solution is to write it the way I did.

I should mention right away that if I write it on one line, like this:

:netboot

chain tftp://${next-server}/default.ipxe || prompt --key s --timeout 10000 Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

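the script does not work, because it drops me straight into the iPXE shell. iPXE evaluates || and && left to right, and each one only looks at the exit status of the last command that actually ran: when chain succeeds, prompt is skipped, but && still sees chain’s success, so shell runs. So the better solution is to write it like this: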

:chainloadfailed

prompt --key s --timeout 10000 Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds && shell || reboot

:netboot

chain tftp://${next-server}/default.ipxe || goto chainloadfailed
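Why the one-line version drops into the shell can be traced with a small Python model of iPXE’s left-to-right evaluation. This is a sketch under stated assumptions, not iPXE source: each || or && decides whether the next command runs based on the exit status of the last command that actually executed, only a standalone ; token counts as a separator, and any command not listed in status is assumed to succeed. run_line is a hypothetical name.

```python
# Simplified model (an assumption, not iPXE source) of how one command line is
# evaluated: "||", "&&" and ";" split it into commands, and each terminator
# decides whether the NEXT command runs from the status of the last one that ran.
TERMINATORS = {"||", "&&", ";"}


def run_line(line: str, status: dict[str, int]) -> list[str]:
    """Return the names of the commands that actually execute on this line.

    status maps a command name to its exit code (0 = success); commands not
    listed are assumed to succeed.
    """
    commands: list[list[str]] = [[]]
    terminators: list[str] = []
    for token in line.split():
        # A token like "shell;" is not a terminator here; only a standalone ";" is.
        if token in TERMINATORS:
            terminators.append(token)
            commands.append([])
        else:
            commands[-1].append(token)

    executed: list[str] = []
    rc, process = 0, True
    for i, command in enumerate(commands):
        if process:
            # An empty command (e.g. after a trailing "||") succeeds silently.
            rc = status.get(command[0], 0) if command else 0
            if command:
                executed.append(command[0])
        if i < len(terminators):
            process = {"||": rc != 0, "&&": rc == 0, ";": True}[terminators[i]]
    return executed


ONE_LINE = ("chain tftp://${next-server}/default.ipxe || prompt --key s --timeout 10000 "
            "Chainloading failed, hit 's' for the iPXE shell; reboot in 10 seconds "
            "&& shell || reboot")
WITH_GOTO = "chain tftp://${next-server}/default.ipxe || goto chainloadfailed"

# chain returns success (the menu's "exit" was used): prompt is skipped, but "&&"
# still sees chain's status 0, so shell runs.
print(run_line(ONE_LINE, {"chain": 0}))   # ['chain', 'shell']
print(run_line(WITH_GOTO, {"chain": 0}))  # ['chain']
```

Passing {"chain": 1, "prompt": 1} (chainloading fails and the prompt times out) gives ['chain', 'prompt', 'reboot'], which is what the one-liner was meant to do; the goto version gets there without mixing && and || after chain on the same line.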

So I guess we’ve solved this mystery. By the way, the next-server variable is working: I echoed it and it printed the IP address of my FOG server.